Continual learning (CL), the ability of a system to adapt over time without losing previously acquired knowledge, remains a significant challenge. Although neural networks are adept at processing large amounts of data, they often suffer from catastrophic forgetting, where learning new information overwrites what was learned before. The problem is especially acute when the capacity to retain past data is limited or the sequence of tasks is long.

Traditionally, strategies to combat catastrophic forgetting have relied on rehearsal and multitask learning: bounded memory buffers store and replay past examples, or representations are shared across tasks. These methods help, but they are prone to overfitting and often fail to generalize across diverse tasks. They struggle most in low-buffer regimes, where the few stored samples cannot adequately represent everything learned from past tasks.
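
Rehearsal methods commonly fill their bounded buffer with reservoir sampling, which keeps a uniform sample of everything seen so far without knowing the stream length in advance. The sketch below is a minimal illustration under that assumption; the class name `ReservoirBuffer` and its methods are hypothetical and not taken from the IMEX-Reg code. The 200-slot capacity simply mirrors one of the buffer sizes reported for IMEX-Reg.

```python
import random
from typing import Generic, List, Optional, TypeVar

T = TypeVar("T")


class ReservoirBuffer(Generic[T]):
    """Fixed-capacity replay memory filled by reservoir sampling (hypothetical name).

    After n stream examples, each one is in the buffer with probability
    capacity / n, regardless of which task it came from.
    """

    def __init__(self, capacity: int, seed: Optional[int] = None) -> None:
        self.capacity = capacity
        self.items: List[T] = []
        self.num_seen = 0  # stream examples offered so far
        self._rng = random.Random(seed)

    def add(self, item: T) -> None:
        self.num_seen += 1
        if len(self.items) < self.capacity:
            # Buffer not full yet: always store the example.
            self.items.append(item)
            return
        # Buffer full: keep the new example with probability capacity / num_seen
        # by overwriting a uniformly chosen slot.
        j = self._rng.randrange(self.num_seen)
        if j < self.capacity:
            self.items[j] = item

    def sample(self, batch_size: int) -> List[T]:
        # Replay mini-batch drawn without replacement from the stored examples.
        k = min(batch_size, len(self.items))
        return self._rng.sample(self.items, k)


# Usage: five toy "tasks" of 1,000 examples each streamed through 200 slots.
buffer = ReservoirBuffer(capacity=200, seed=0)
for task_id in range(5):
    for i in range(1000):
        buffer.add((task_id, i))
replay = buffer.sample(32)
print(len(buffer.items), buffer.num_seen, len(replay))  # 200 5000 32
```

Because every stream example has the same chance of surviving, the buffer ends up holding a mix of all tasks, but with only 200 slots each task is represented by a small sample, which is exactly the low-buffer setting where overfitting becomes a concern.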

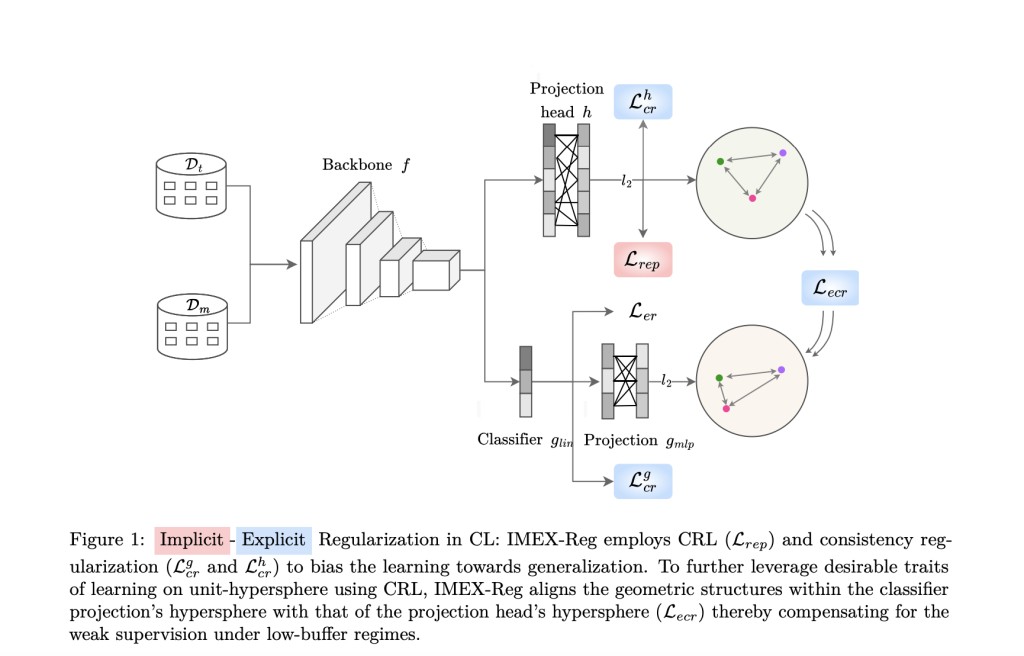

Researchers from Eindhoven University of Technology and Wayve introduced a novel framework called IMEX-Reg, short for Implicit-Explicit Regularization. The approach combines contrastive representation learning (CRL) with consistency regularization to foster more robust generalization. Rather than relying only on preserving past data, the method is designed so that the learning process itself discourages forgetting, by improving the model's ability to generalize across tasks and conditions.

IMEX-Reg operates on two levels. First, it uses CRL to encourage the model to learn useful features that stay consistent across different views of the same data, pulling positive pairs together and pushing negative pairs apart to refine its representations. Second, consistency regularization aligns the classifier's outputs more closely with the underlying data distribution, helping to maintain accuracy even when training data is limited. This dual approach significantly enhances the model's stability and its ability to adapt without forgetting crucial information.
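
The two ingredients can be sketched in plain Python. The contrastive term below is a generic one-directional InfoNCE loss, in which two augmented views of the same input form a positive pair and the other samples in the batch serve as negatives. The consistency term is assumed to be the mean squared distance between class-probability vectors predicted for two views of the same inputs. Both are textbook forms rather than IMEX-Reg's exact objectives, and the function names, temperature, and loss weights are hypothetical.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def l2_normalize(v: Vector) -> List[float]:
    norm = math.sqrt(sum(x * x for x in v)) or 1.0  # guard against zero vectors
    return [x / norm for x in v]


def info_nce(view1: Sequence[Vector], view2: Sequence[Vector], temperature: float = 0.1) -> float:
    """One-directional InfoNCE loss.

    view1[i] and view2[i] embed two augmentations of the same input (a positive
    pair); every view2[j] with j != i acts as a negative for view1[i].
    """
    v2 = [l2_normalize(z) for z in view2]
    losses = []
    for i, anchor in enumerate(view1):
        a = l2_normalize(anchor)
        # Cosine similarities scaled by the temperature.
        logits = [sum(x * y for x, y in zip(a, z)) / temperature for z in v2]
        # Cross-entropy with the positive at index i, via a stable log-sum-exp.
        m = max(logits)
        log_sum_exp = m + math.log(sum(math.exp(s - m) for s in logits))
        losses.append(log_sum_exp - logits[i])
    return sum(losses) / len(losses)


def softmax(logits: Vector) -> List[float]:
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def consistency_loss(logits_a: Sequence[Vector], logits_b: Sequence[Vector]) -> float:
    """Mean squared distance between the class-probability vectors predicted
    for two views of the same inputs; zero when the predictions agree."""
    total = 0.0
    for la, lb in zip(logits_a, logits_b):
        pa, pb = softmax(la), softmax(lb)
        total += sum((x - y) ** 2 for x, y in zip(pa, pb))
    return total / len(logits_a)


def combined_loss(ce: float, crl: float, cr: float,
                  lambda_crl: float = 1.0, lambda_cr: float = 1.0) -> float:
    # Supervised cross-entropy plus weighted contrastive and consistency terms;
    # the weights are placeholder hyperparameters, not values from the paper.
    return ce + lambda_crl * crl + lambda_cr * cr


# Toy 2-D embeddings: the two views of each sample point in similar directions.
z1 = [[1.0, 0.0], [0.0, 1.0]]
z2 = [[0.9, 0.1], [0.1, 0.9]]
aligned = info_nce(z1, z2)                     # positives match: small loss
mismatched = info_nce(z1, list(reversed(z2)))  # positives swapped: large loss
print(aligned < mismatched)  # True
```

In a real training loop these terms would be computed on batched tensors with automatic differentiation; the list-based version only makes the arithmetic of each term explicit.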

Empirical results underscore the efficacy of IMEX-Reg, which outperforms existing methods on several benchmarks. In low-buffer regimes in particular, it reduces forgetting and substantially improves task accuracy compared with traditional rehearsal-based methods. With a buffer of just 200 samples, IMEX-Reg improves top-1 accuracy by 9.6% on Seq-CIFAR100 and by 37.22% on Seq-TinyImageNet, both challenging benchmarks. These gains highlight the framework's capacity to make effective use of limited data while maintaining strong task-specific performance.
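
Forgetting is commonly quantified in the CL literature with an average-forgetting measure: for each earlier task, the drop from its best accuracy to its accuracy after training on the last task. The sketch assumes a task-by-task accuracy matrix; whether the IMEX-Reg evaluation uses exactly this definition is an assumption, and the numbers are toy values, not results from the paper.

```python
from typing import Sequence


def average_accuracy(acc: Sequence[Sequence[float]]) -> float:
    # Mean accuracy over all tasks after training on the final task.
    final = acc[-1]
    return sum(final) / len(final)


def average_forgetting(acc: Sequence[Sequence[float]]) -> float:
    """acc[i][j] is test accuracy (%) on task j after training through task i.

    Forgetting on task j = best accuracy reached on j before the final task
    minus its accuracy at the end. The last task is excluded because it has
    not been followed by any further training.
    """
    num_tasks = len(acc)
    final = acc[-1]
    drops = []
    for j in range(num_tasks - 1):
        best_before = max(acc[i][j] for i in range(j, num_tasks - 1))
        drops.append(best_before - final[j])
    return sum(drops) / len(drops)


# Made-up accuracy matrix for three tasks (illustrative only, not paper results).
acc = [
    [90.0, 0.0, 0.0],
    [70.0, 88.0, 0.0],
    [60.0, 75.0, 85.0],
]
print(average_forgetting(acc))          # 21.5
print(round(average_accuracy(acc), 2))  # 73.33
```

Here task 0 falls from 90 to 60 and task 1 from 88 to 75, so the average drop is (30 + 13) / 2 = 21.5 points; a method that reduces forgetting shrinks these drops.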

IMEX-Reg is also more resilient to natural corruptions and adversarial perturbations, which matters in dynamic, real-world environments where data corruption or malicious attacks can occur. It further shows less task-recency bias, the tendency of a continual learner to favor recently learned tasks over older ones. Together, these properties let IMEX-Reg retain past knowledge while learning more evenly across all tasks.
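
One simple way to make task-recency bias concrete is to count how a model's predictions on a class-balanced test set are distributed over tasks: a model with little bias spreads them roughly evenly, while a recency-biased one piles them onto the newest task. The function name and the contiguous class-to-task layout below are hypothetical, and this is an illustration rather than the measurement used in the IMEX-Reg paper.

```python
from collections import Counter
from typing import Dict, Sequence


def task_prediction_share(predicted_classes: Sequence[int],
                          classes_per_task: int,
                          num_tasks: int) -> Dict[int, float]:
    """Fraction of predictions falling into each task's label range.

    Assumes task t owns the contiguous classes
    [t * classes_per_task, (t + 1) * classes_per_task).
    """
    task_of = Counter(c // classes_per_task for c in predicted_classes)
    n = len(predicted_classes)
    return {t: task_of[t] / n for t in range(num_tasks)}


# Toy predictions on a class-balanced test set: 3 tasks x 2 classes.
# A recency-biased model assigns most inputs to the last task's classes (4, 5).
preds = [4, 5, 4, 5, 4, 0, 5, 2, 4, 5]
print(task_prediction_share(preds, classes_per_task=2, num_tasks=3))
# {0: 0.1, 1: 0.1, 2: 0.8}
```

On a balanced test set, a share far above 1 / num_tasks for the most recent task signals recency bias; a less biased learner keeps the shares closer to uniform.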

In conclusion, the IMEX-Reg framework significantly advances continual learning by integrating strong inductive biases with innovative regularization techniques. Its success across various metrics and conditions attests to its potential to create more adaptable, stable, and robust learning systems. As such, it sets a new standard for future developments in the field, promising enhanced performance in continual learning applications and paving the way for more intelligent, durable neural networks.

Check out the Paper and Project. All credit for this research goes to the researchers of this project.

The post Enhancing Continual Learning with IMEX-Reg: A Robust Approach to Mitigate Catastrophic Forgetting appeared first on MarkTechPost.
