Software vulnerability detection is a critical field focused on safeguarding system security and user privacy by identifying security flaws in software systems. As cyber threats grow more sophisticated, keeping software secure against potential attacks becomes increasingly important. Advanced AI technologies, particularly large language models (LLMs) and deep learning, are now widely applied to improve the detection of software vulnerabilities.

The core challenge in software vulnerability detection lies in accurately identifying vulnerabilities in increasingly complex software systems before they lead to breaches. Traditional detection methods, such as static analysis tools and machine learning-based models, often produce high false positive rates and cannot keep up with continuously evolving threats. Existing tools rely on predefined patterns or fixed datasets, which leads to inaccurate results and missed vulnerabilities.

Current research in software vulnerability detection includes frameworks like GRACE and ChatGPT-driven models that leverage deep learning and LLMs for better detection accuracy. These approaches integrate prompt engineering with machine learning-based models and use chain-of-thought guidance to improve detection capabilities. However, existing frameworks often struggle with high false positive rates and limited adaptability, highlighting the need for more sophisticated solutions.

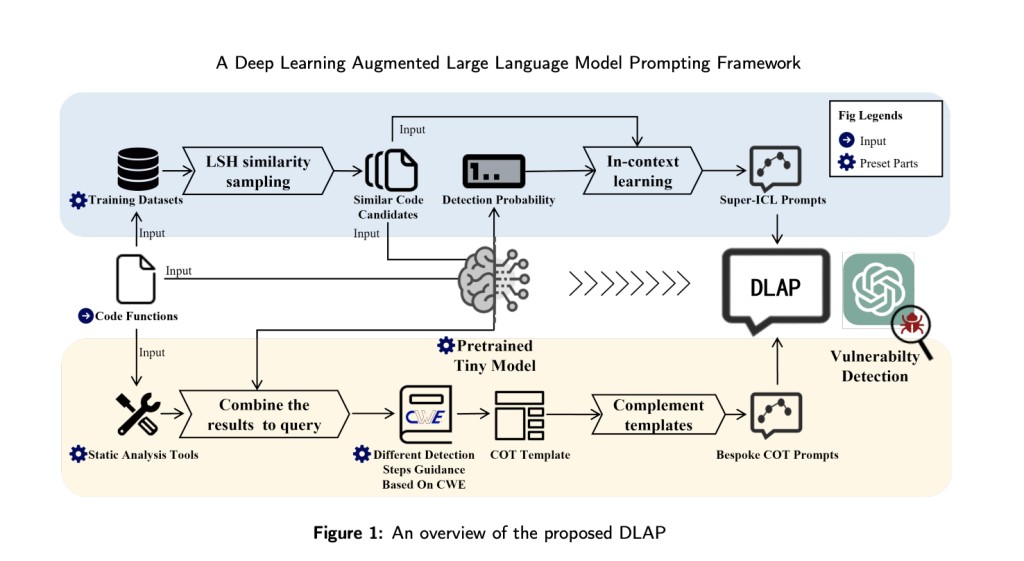

Researchers from Nanjing University, China, and Southern Cross University, Australia, have introduced DLAP, a framework that combines LLMs, deep learning, and prompt engineering. DLAP refines vulnerability detection through a hierarchical taxonomy and chain-of-thought (COT) guidance, which steer the LLM's reasoning toward the relevant vulnerability category. It uses custom prompts tailored to specific categories to help the models understand and detect complex vulnerabilities, addressing the limitations of traditional tools.

The DLAP framework uses static analysis tools and deep learning models to create prompts that enhance LLMs. It integrates static analysis results with LLMs for in-depth semantic and logical analysis, and it employs COT guidance to improve prompt accuracy. This combination of methods allows DLAP to detect code vulnerabilities precisely while minimizing false positives. The framework was evaluated on a dataset of over 40,000 examples from four major software projects.
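To make the idea of combining static analysis output and a deep learning signal into a chain-of-thought prompt more concrete, here is a minimal sketch. It is not DLAP's actual prompt format or code: the `StaticFinding` type, the `build_cot_prompt` function, the step wording, and the tool name in the usage note are all hypothetical and exist only for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class StaticFinding:
    """One warning from a static analysis tool (hypothetical structure)."""
    tool: str
    line: int
    message: str


def build_cot_prompt(code: str, findings: List[StaticFinding], dl_score: float) -> str:
    """Assemble a chain-of-thought style prompt from auxiliary signals.

    Assumption: dl_score is a probability in [0, 1] produced by some
    deep learning detector; the article does not specify its format.
    """
    # Summarize static analysis warnings, or say explicitly that there are none.
    finding_lines = [
        f"- {f.tool} (line {f.line}): {f.message}" for f in findings
    ] or ["- none reported"]

    # Illustrative reasoning steps; the real framework's steps may differ.
    steps = [
        "Step 1: Summarize what the function does.",
        "Step 2: Check each static analysis warning against the code.",
        "Step 3: Weigh the deep learning score as supporting evidence.",
        "Step 4: Answer 'vulnerable' or 'not vulnerable' with a short reason.",
    ]

    return "\n".join(
        [
            "You are reviewing a function for security vulnerabilities.",
            "",
            "Code:",
            code,
            "",
            "Static analysis findings:",
            *finding_lines,
            "",
            f"Deep learning model vulnerability probability: {dl_score:.2f}",
            "",
            "Reason step by step:",
            *steps,
        ]
    )
```

A call such as `build_cot_prompt(source, [StaticFinding("hypothetical-linter", 3, "possible buffer overflow")], 0.82)` returns a single string that could be sent to an LLM. The design point is that the model receives evidence from other detectors plus an explicit reasoning order, rather than the raw code alone.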

DLAP was tested on four datasets, Chrome, Android, Linux, and Qemu, each comprising thousands of functions and vulnerabilities. Compared to other methods, DLAP achieved up to 10% higher F1 scores and 20% higher Matthews Correlation Coefficient (MCC). On Chrome, DLAP attained 40.4% precision and 73.3% recall. Its F1 scores were 52.1% for Chrome, 49.3% for Android, 65.4% for Linux, and 66.7% for Qemu, indicating consistent performance across diverse datasets.
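For readers less familiar with these metrics, the sketch below shows the standard definitions of F1 and MCC. The function names are illustrative, and only the Chrome precision and recall figures come from the article; the confusion-matrix counts needed for MCC are not reported here, so `mcc` is shown without article-derived inputs.

```python
import math


def f1_score(precision: float, recall: float) -> float:
    """F1 is the harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def mcc(tp: int, tn: int, fp: int, fn: int) -> float:
    """Matthews Correlation Coefficient from confusion-matrix counts.

    Ranges from -1 (total disagreement) to +1 (perfect prediction).
    Returns 0.0 when the denominator is zero, a common convention.
    """
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    if denom == 0:
        return 0.0
    return (tp * tn - fp * fn) / denom


if __name__ == "__main__":
    # Chrome figures quoted in the article: 40.4% precision, 73.3% recall.
    print(f"Chrome F1: {f1_score(0.404, 0.733):.3f}")  # prints "Chrome F1: 0.521"
```

Plugging in the Chrome precision and recall reproduces the reported 52.1% F1. MCC is often reported alongside F1 for vulnerability datasets because it also accounts for true negatives, which matters when vulnerable functions are a small minority of the samples.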

To conclude, the research introduced the DLAP framework, combining deep learning and LLMs for effective software vulnerability detection. By using specialized prompts and chain-of-thought guidance, DLAP enhances detection precision and recall while reducing false positives. Its performance across four large datasets demonstrated superior accuracy compared to existing methods, highlighting its significant potential in improving cybersecurity practices. The research underscores the importance of innovative approaches for tackling evolving software vulnerabilities, offering a reliable tool for software security.

All credit for this research goes to the researchers of this project.

The post DLAP: A Deep Learning Augmented LLMs Prompting Framework for Software Vulnerability Detection appeared first on MarkTechPost.
