Research on large language models (LLMs) increasingly emphasizes aligning these models with human preferences so that they produce helpful, unbiased, and safe responses. Researchers have made significant strides in training LLMs to understand and interact with human-generated text, improving communication between humans and machines.

A primary challenge in NLP is teaching LLMs to give responses that align with human preferences: avoiding bias and producing answers that are useful and safe. Supervised fine-tuning offers a foundational approach to refining model behavior, but achieving true alignment with human preferences requires more intricate methods. Complex pipelines, especially reinforcement learning from human feedback (RLHF), are often needed to refine these models, but their technical complexity and heavy resource demands can hinder broader adoption.

While tools like HuggingFace TRL and DeepSpeedChat offer valuable resources for model alignment, they lack the scalability and performance needed to manage today's largest models. The size and complexity of modern LLMs call for specialized, optimized solutions that handle their training requirements efficiently, letting researchers focus on fine-tuning model behavior without being held back by technical constraints.

Researchers at NVIDIA introduced NeMo-Aligner, a novel tool designed to streamline the training process for large-scale LLMs using reinforcement learning. This tool leverages NVIDIA’s NeMo framework to optimize the entire RLHF pipeline, from supervised fine-tuning to reward model training and proximal policy optimization (PPO). The team’s focus on optimizing parallelism and distributed computing techniques has resulted in a tool capable of efficiently managing the complexities inherent in training large models. It enables the distribution of compute workloads across different clusters, making the most of available hardware.
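For readers unfamiliar with the final stage, the sketch below shows the textbook clipped surrogate objective that PPO optimizes. It is a minimal illustration in plain Python, not NeMo-Aligner's code: the function name `ppo_clipped_loss`, the list-based inputs, and the default `clip_eps=0.2` are assumptions, and the KL penalty against a reference model that RLHF setups usually add is omitted.

```python
import math

# Hypothetical helper: illustrates the standard PPO-clip objective only,
# not NeMo-Aligner's implementation.
def ppo_clipped_loss(logp_new, logp_old, advantages, clip_eps=0.2):
    """Return the mean negated PPO clipped surrogate objective (lower is better)."""
    assert len(logp_new) == len(logp_old) == len(advantages) > 0
    total = 0.0
    for new, old, adv in zip(logp_new, logp_old, advantages):
        ratio = math.exp(new - old)  # pi_new(a|s) / pi_old(a|s)
        clipped = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
        # Take the pessimistic (smaller) of the unclipped and clipped terms
        total += min(ratio * adv, clipped * adv)
    # Negate so that minimizing the loss maximizes the surrogate objective
    return -total / len(advantages)
```

Clipping caps how much a single update can gain by pushing the probability ratio outside `[1 - clip_eps, 1 + clip_eps]`, which keeps each policy update conservative.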

The architecture of NeMo-Aligner is designed to make model alignment more accessible and efficient. The tool incorporates optimizations for each stage of the RLHF pipeline, which it organizes into three phases:

1. Supervised fine-tuning
2. Reward model training
3. PPO
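The reward model stage is commonly trained on pairs of responses to the same prompt, one preferred by annotators and one rejected, using a pairwise (Bradley-Terry) ranking loss. The sketch below assumes that formulation; the function names and list-of-floats inputs are hypothetical, and it does not reproduce NeMo-Aligner's implementation.

```python
import math

def softplus(x):
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))

# Hypothetical helper: the common pairwise ranking loss for reward models.
def pairwise_reward_loss(chosen_rewards, rejected_rewards):
    """Mean of -log(sigmoid(r_chosen - r_rejected)) over preference pairs."""
    assert len(chosen_rewards) == len(rejected_rewards) > 0
    total = 0.0
    for r_c, r_r in zip(chosen_rewards, rejected_rewards):
        margin = r_c - r_r
        # -log(sigmoid(m)) == softplus(-m); softplus avoids overflow
        total += softplus(-margin)
    return total / len(chosen_rewards)
```

The loss approaches zero as the model scores the preferred response well above the rejected one and grows roughly linearly when it ranks them the wrong way round.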

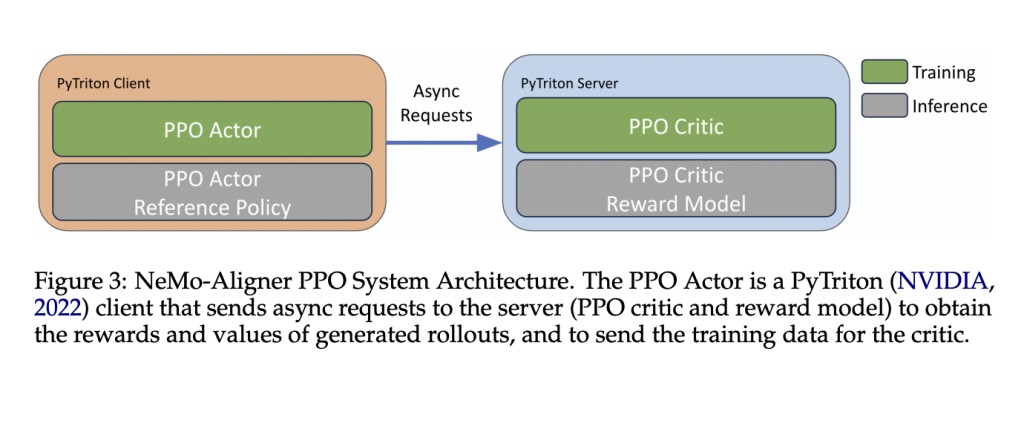

During PPO, NeMo-Aligner dynamically balances workloads among data-parallel workers, which significantly improves training efficiency. By integrating advanced distributed computing strategies, it handles large-scale models effectively, using a PyTriton server to communicate between the models involved in PPO.
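Why dynamic balancing helps can be illustrated with a toy simulation. The sketch assumes that generating a response takes a variable amount of time per prompt (for example, because response lengths differ) and compares a static split, where each worker receives a fixed shard up front, with a shared queue from which idle workers pull the next prompt. The function names are hypothetical, and this is not NeMo-Aligner's actual scheduler.

```python
import heapq

# Hypothetical helpers for a toy model of generation time across workers.
def static_makespan(durations, num_workers):
    """Each worker gets a fixed, equal-size contiguous shard up front."""
    assert durations and num_workers > 0
    shard = -(-len(durations) // num_workers)  # ceiling division
    return max(sum(durations[i:i + shard]) for i in range(0, len(durations), shard))

def dynamic_makespan(durations, num_workers):
    """Idle workers pull the next prompt from a shared queue."""
    assert durations and num_workers > 0
    free_at = [0.0] * num_workers  # min-heap of times each worker becomes free
    heapq.heapify(free_at)
    for d in durations:
        t = heapq.heappop(free_at)  # earliest-available worker takes the prompt
        heapq.heappush(free_at, t + d)
    return max(free_at)

# Toy workload: two long generations followed by six short ones
durations = [8, 8, 1, 1, 1, 1, 1, 1]
print(static_makespan(durations, 2))   # 18
print(dynamic_makespan(durations, 2))  # 11.0
```

With the static split, one worker is stuck with both long generations while the other sits idle; pulling work on demand lets the short prompts fill the gaps, so the step finishes when the total work (22 units over 2 workers) is evenly shared.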

NeMo-Aligner's performance results show significant efficiency gains, especially in the PPO stage. Integrating TensorRT-LLM reduces training times by up to 7x compared to traditional methods. The framework is also designed for extensibility, so users can quickly adapt it to new algorithms. It supports training models with as many as 70 billion parameters, letting researchers work at this scale with better efficiency and shorter training times.
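To see why a 70-billion-parameter model calls for distributed training, a back-of-the-envelope estimate helps. The sketch assumes bf16 weights (2 bytes per parameter), the commonly cited 16 bytes per parameter for mixed-precision Adam training (weights, gradients, and fp32 optimizer state), and a hypothetical 80 GB accelerator. It ignores activations and, for PPO, the extra critic, reward, and reference models, all of which push requirements higher.

```python
import math

GPU_MEMORY_GB = 80  # assumption: a hypothetical 80 GB accelerator

def model_state_gb(num_params, bytes_per_param):
    """Memory for parameter-proportional state, in decimal gigabytes."""
    return num_params * bytes_per_param / 1e9

params = 70e9
weights_only = model_state_gb(params, 2)   # bf16 weights alone
adam_mixed = model_state_gb(params, 16)    # weights + grads + fp32 Adam state
min_gpus = math.ceil(adam_mixed / GPU_MEMORY_GB)

print(weights_only)  # 140.0
print(adam_mixed)    # 1120.0
print(min_gpus)      # 14
```

Even the weights alone exceed a single 80 GB device, and the full training state needs at least 14 such devices before counting activations, which is why the model must be sharded across many GPUs.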

The researchers demonstrated NeMo-Aligner's extensibility by integrating additional alignment algorithms such as supervised fine-tuning, Direct Preference Optimization (DPO), and Self-Play Fine-Tuning (SPIN). This adaptability lets the tool support different optimization strategies, such as using attribute prediction models to align models with human preferences along semantic attributes like correctness and toxicity. As a result, model responses can be improved in a targeted, data-driven way.
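DPO skips the separate reward model and PPO loop by optimizing the policy directly on preference pairs. The sketch below implements the standard DPO loss from sequence log-probabilities under the policy and a frozen reference model; it is an illustration, not NeMo-Aligner's code, and the function names, list inputs, and default `beta=0.1` are assumptions.

```python
import math

def softplus(x):
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))

# Hypothetical helper: standard DPO loss over a batch of preference pairs.
def dpo_loss(policy_chosen, policy_rejected, ref_chosen, ref_rejected, beta=0.1):
    """Each argument is a list of sequence log-probabilities log pi(y|x)."""
    n = len(policy_chosen)
    assert n > 0 and n == len(policy_rejected) == len(ref_chosen) == len(ref_rejected)
    total = 0.0
    for pc, pr, rc, rr in zip(policy_chosen, policy_rejected, ref_chosen, ref_rejected):
        # Implicit reward margin: beta * (chosen log-ratio - rejected log-ratio)
        logits = beta * ((pc - rc) - (pr - rr))
        total += softplus(-logits)  # equals -log(sigmoid(logits))
    return total / n
```

When the policy matches the reference the loss is log 2; it falls as the policy raises the chosen response's probability relative to the rejected one, with `beta` controlling how far the policy may drift from the reference.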

In conclusion, NeMo-Aligner provides a robust and flexible solution for aligning large language models with reinforcement learning techniques. By tackling scalability and performance directly, the researchers have built a comprehensive framework that streamlines the process of aligning LLMs with human preferences. The result is a tool that improves training efficiency and makes it practical to fine-tune models to produce helpful, safe responses that match human expectations.

Check out the Paper and GitHub Page. All credit for this research goes to the researchers of this project.



The post NVIDIA AI Open-Sources ‘NeMo-Aligner’: Transforming Large Language Model Alignment with Efficient Reinforcement Learning appeared first on MarkTechPost.
