Amazon DynamoDB is a serverless, NoSQL database service that enables you to develop modern applications at any scale. DynamoDB on-demand mode offers a truly serverless experience that can serve millions of requests per second without capacity planning and automatically scales down to zero when no requests are being issued against the table. With on-demand mode’s simple, pay-per-request pricing model, you don’t have to worry about idle capacity because you only pay for the capacity you actually use. Customers increasingly use on-demand mode to build new applications where the database workload is complex to forecast, for applications with unpredictable or variable traffic patterns, or when working with serverless stacks with pay-per-use pricing. For tables using on-demand mode, DynamoDB instantly scales customers’ workloads as they ramp up or down to meet demand, so that the application continues to respond to requests.

The default throughput rate for each on-demand table is 40,000 read requests per second and 40,000 write requests per second (can be raised using AWS Service Quotas), which uniformly applies to all tables within the account, and cannot be customized or tailored for diverse workloads and differing requirements within an account. Since on-demand mode scales rapidly to accommodate varying traffic patterns, a piece of unoptimized or hastily written code can rapidly scale up and consume resources, thereby making it challenging to keep table-level usage and costs bounded. Customers regularly ask us for more flexibility and control with on-demand mode to strike an optimal balance between costs and performance.

Today we are launching a new, optional feature that allows you to configure maximum read or write (or both) throughput for individual on-demand tables and associated global secondary indexes (GSIs), which makes it simpler to balance table-level costs and performance. On-demand requests in excess of the maximum throughput specified will be throttled, but you can modify the maximum throughput settings at any time based on your application requirements. You can set on-demand maximum throughput on new and existing single-Region and multi-Region tables and their indexes, as well as during table restore and data import from Amazon Simple Storage Service (Amazon S3) workflows.

In this post, we explore some common use cases and demonstrate how you can implement maximum throughput for on-demand tables to achieve your organizational goals. We also discuss how this feature works with other DynamoDB resources.

Common use cases

In this section, we discuss common use cases for configuring maximum throughput for on-demand tables:

Optimize on-demand throughput costs. The flexibility to set a maximum throughput for on-demand tables provides an additional layer of cost predictability and manageability, and lets customers adopt the serverless experience more broadly across their production and development environments based on their differing workload requirements and budgets.

Protect against runaway costs. By setting a predefined maximum throughput for on-demand tables, teams can prevent accidental surges in read or write consumption that may arise from unoptimized code or rogue processes. This protective measure helps keep costs under control even in the early stages of application development or in non-production environments.

Manage API usage. When customers switch from provisioned capacity to on-demand mode, setting an upper bound on on-demand throughput helps ensure that teams are not caught off guard by how much traffic they can unexpectedly drive against the table. This prevents organizations from consuming excessive resources within a certain time frame.

Safeguard downstream services. A customer application can include serverless and non-serverless technologies. The serverless piece of the architecture can scale rapidly to match demand, but downstream components with fixed capacities could be overwhelmed. Implementing maximum throughput settings for on-demand tables can prevent a large volume of events from propagating to multiple downstream components with unexpected side effects.

Getting started

When you create an on-demand table, maximum throughput settings are not enabled by default. This is an optional feature that you can configure on a per-table basis and for associated global secondary indexes, and you can set maximum throughput for reads and writes independently to fine-tune your approach based on specific requirements. The maximum throughput for an on-demand table is applied on a best-effort basis and should be thought of as a target rather than a guaranteed request ceiling. Your workload might temporarily exceed the maximum throughput specified because of burst capacity. There is no additional cost to use this feature, and you can get started by using the AWS Command Line Interface (AWS CLI), AWS Management Console, AWS SDKs, or AWS CloudFormation.

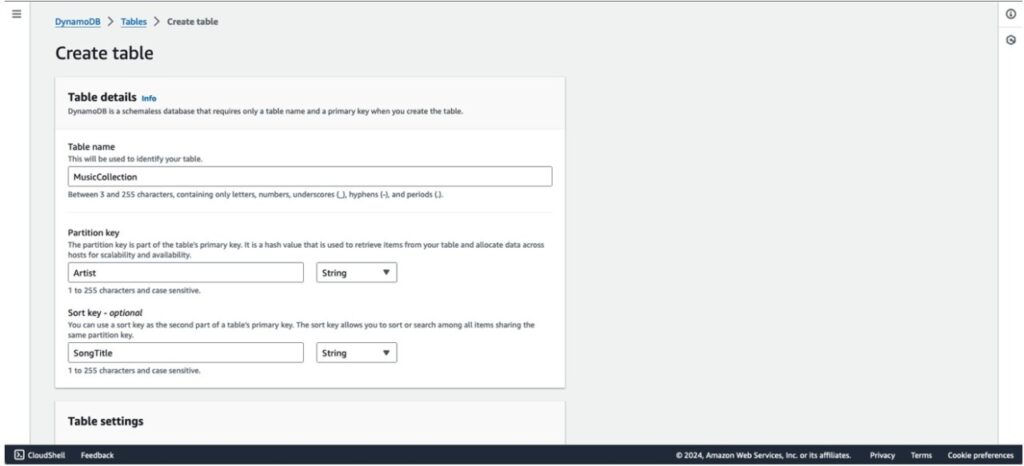

To enable on-demand maximum throughput using the DynamoDB console, complete the following steps:

On the DynamoDB console, choose Tables in the navigation pane.

Choose Create table.

Specify a name, partition key, and sort key for the table.

For Table settings, select Customize settings.

For Read/write capacity settings, select On-demand.

Under Maximum table throughput, specify the limits for read, write, or both (between 1 and 40,000 request units).

Choose Create table.

You can also set on-demand maximum throughput for a table or index via the CLI, SDK, or AWS CloudFormation using the OnDemandThroughput parameter, which includes MaxReadRequestUnits and MaxWriteRequestUnits. These parameters allow you to specify the maximum read and write units within the valid range of 1 to AccountMaxTableLevelReads or AccountMaxTableLevelWrites. Setting the value to -1 disables the limit, offering flexibility for scenarios where on-demand throughput limits are not desired.

You can configure maximum throughput settings independently for tables and indexes, and you can apply them to reads, writes, or both, based on your specific requirements. As a best practice, align the maximum throughput settings for a global secondary index with those of the associated table. This is particularly important for MaxWriteRequestUnits, so that the global secondary index can keep up with replicating data written to the table.

The following example shows setting on-demand maximum throughput for a table and index using the CLI:
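As a minimal sketch of such a request (written in Python rather than as a CLI command), the code below builds CreateTable parameters with OnDemandThroughput set on both the table and one global secondary index. The table name, index name, attribute names, and limit values are hypothetical placeholders chosen for illustration; only the OnDemandThroughput, MaxReadRequestUnits, and MaxWriteRequestUnits names come from the text above.

```python
import json


def on_demand_throughput(max_read=None, max_write=None):
    """Build an OnDemandThroughput structure; a side left as None is omitted."""
    settings = {}
    if max_read is not None:
        settings["MaxReadRequestUnits"] = max_read
    if max_write is not None:
        settings["MaxWriteRequestUnits"] = max_write
    return settings


def build_create_table_request():
    """Return CreateTable parameters for an on-demand table with one GSI.

    All names and limits here are hypothetical examples.
    """
    return {
        "TableName": "Orders",
        "AttributeDefinitions": [
            {"AttributeName": "order_id", "AttributeType": "S"},
            {"AttributeName": "customer_id", "AttributeType": "S"},
        ],
        "KeySchema": [{"AttributeName": "order_id", "KeyType": "HASH"}],
        "BillingMode": "PAY_PER_REQUEST",
        # Table-level ceiling for reads and writes.
        "OnDemandThroughput": on_demand_throughput(max_read=5000, max_write=1000),
        "GlobalSecondaryIndexes": [
            {
                "IndexName": "CustomerIndex",
                "KeySchema": [{"AttributeName": "customer_id", "KeyType": "HASH"}],
                "Projection": {"ProjectionType": "ALL"},
                # Index limits aligned with the table, per the guidance above.
                "OnDemandThroughput": on_demand_throughput(max_read=5000, max_write=1000),
            }
        ],
    }


if __name__ == "__main__":
    print(json.dumps(build_create_table_request(), indent=2))
```

Assuming a recent boto3 release and configured credentials, these parameters could be passed as `boto3.client("dynamodb").create_table(**build_create_table_request())`.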

Global tables

You can configure maximum throughput for on-demand mode to manage capacity for global tables, and it follows similar semantics to ProvisionedThroughput. When you specify the maximum read or write (or both) throughput settings on one global table replica, the same maximum throughput settings are automatically applied to all replica tables. It is important that the replica tables and secondary indexes in a global table have identical write throughput settings to ensure proper replication of data. An OnDemandThroughputOverride parameter introduced in the UpdateTable API allows you to set distinct read limits for each replica, providing flexibility and independence from other replica settings. To learn more about capacity settings for global tables, refer to the Best Practices and Requirements guide for managing global tables.

An example of adding an override for MaxReadRequestUnits for a global table replica using the CLI:
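A rough Python equivalent of such a request is sketched below. It builds UpdateTable parameters that set OnDemandThroughputOverride for a single replica Region. The table name, Region, and limit are hypothetical values, and the function name is an illustrative helper rather than part of any AWS SDK.

```python
def build_replica_read_override(table_name, region, max_read_units):
    """Return UpdateTable parameters that override the read limit of one replica."""
    return {
        "TableName": table_name,
        "ReplicaUpdates": [
            {
                "Update": {
                    "RegionName": region,
                    # Only reads can differ per replica; writes stay identical.
                    "OnDemandThroughputOverride": {
                        "MaxReadRequestUnits": max_read_units
                    },
                }
            }
        ],
    }


if __name__ == "__main__":
    # Hypothetical table and Region used purely for illustration.
    print(build_replica_read_override("Orders", "eu-west-1", 2000))
```

Assuming boto3 and credentials are available, the result could be passed as `boto3.client("dynamodb").update_table(**params)`.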

When the table update is complete, the DescribeTable call will now include the overridden values for reads:
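To illustrate where those values appear, the sketch below pulls per-replica read overrides out of a DescribeTable-shaped response. The sample response is a hand-written, trimmed illustration with hypothetical Regions and limits, not captured output; real responses contain many more fields.

```python
def replica_read_overrides(describe_table_response):
    """Map each replica Region to its overridden MaxReadRequestUnits, if any."""
    overrides = {}
    replicas = describe_table_response.get("Table", {}).get("Replicas", [])
    for replica in replicas:
        override = replica.get("OnDemandThroughputOverride")
        if override and "MaxReadRequestUnits" in override:
            overrides[replica["RegionName"]] = override["MaxReadRequestUnits"]
    return overrides


# Illustrative response fragment with hypothetical values.
SAMPLE_RESPONSE = {
    "Table": {
        "TableName": "Orders",
        "Replicas": [
            {
                "RegionName": "eu-west-1",
                "ReplicaStatus": "ACTIVE",
                "OnDemandThroughputOverride": {"MaxReadRequestUnits": 2000},
            },
            {"RegionName": "us-east-1", "ReplicaStatus": "ACTIVE"},
        ],
    }
}

if __name__ == "__main__":
    print(replica_read_overrides(SAMPLE_RESPONSE))
```

Replicas without an override are left out of the result, so an empty dictionary means every replica uses the table-wide setting.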

Restore and import from S3 workflows

DynamoDB’s backup and restore capability also supports on-demand maximum throughput. Backups retain the throughput settings in effect at the time of creation, and those settings are applied to the new table upon restoration unless manually overridden. When using ImportTable to import data from Amazon S3, you can specify on-demand maximum throughput limits, just as you can in CreateTable operations.

Specifying OnDemandThroughput settings for restores or imports does not impact the duration of these operations.
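The two workflows can be sketched as request builders in Python. This is a minimal illustration under stated assumptions: the bucket, table names, key attribute, and limits are hypothetical, the backup ARN is supplied by the caller, and the input format is assumed to be DynamoDB JSON.

```python
def build_import_table_request(bucket, table_name, max_read, max_write):
    """Return ImportTable parameters that create an on-demand table with limits."""
    return {
        "S3BucketSource": {"S3Bucket": bucket},
        "InputFormat": "DYNAMODB_JSON",  # assumed export format
        "TableCreationParameters": {
            "TableName": table_name,
            "AttributeDefinitions": [
                {"AttributeName": "pk", "AttributeType": "S"}
            ],
            "KeySchema": [{"AttributeName": "pk", "KeyType": "HASH"}],
            "BillingMode": "PAY_PER_REQUEST",
            "OnDemandThroughput": {
                "MaxReadRequestUnits": max_read,
                "MaxWriteRequestUnits": max_write,
            },
        },
    }


def build_restore_request(backup_arn, target_table, max_read, max_write):
    """Return RestoreTableFromBackup parameters that override the backed-up limits."""
    return {
        "BackupArn": backup_arn,
        "TargetTableName": target_table,
        "OnDemandThroughputOverride": {
            "MaxReadRequestUnits": max_read,
            "MaxWriteRequestUnits": max_write,
        },
    }


if __name__ == "__main__":
    print(build_import_table_request("example-bucket", "OrdersImported", 5000, 1000))
```

Assuming boto3 and credentials are available, these dictionaries would be passed to the DynamoDB client's `import_table` and `restore_table_from_backup` methods respectively.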

Monitoring, alerting, and troubleshooting

Before specifying maximum table throughput for on-demand tables, it is important to understand your workload and traffic. AWS provides many services to help you monitor and understand your applications. For example, Amazon CloudWatch collects monitoring and operational data in logs, metrics, and events, providing you with a unified view of AWS resources, applications, and services. Monitoring your applications effectively lets you establish what their normal state looks like. Once you understand your workloads, you can determine which tables are best suited to be rate limited. To simplify monitoring, CloudWatch provides metrics for OnDemandMaxReadRequestUnits and OnDemandMaxWriteRequestUnits. These metrics are emitted at 5-minute intervals, aligning with the frequency of the ProvisionedReadCapacityUnits and ProvisionedWriteCapacityUnits metrics.
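One way to use these metrics is to compare consumed throughput against the configured maximum. The sketch below assumes you pair OnDemandMaxReadRequestUnits with the Sum of ConsumedReadCapacityUnits over the same period; that pairing and the alerting idea are assumptions for illustration, not something this post prescribes. Because consumed-capacity sums cover a whole period, they are divided by the period length to get a per-second rate.

```python
def throughput_utilization(consumed_sum, period_seconds, max_units_per_second):
    """Return the fraction of the configured maximum that was used on average.

    consumed_sum: Sum of consumed units over the period (for example, from
        the ConsumedReadCapacityUnits metric).
    period_seconds: Length of the metric period in seconds.
    max_units_per_second: Configured maximum (for example, the value of
        OnDemandMaxReadRequestUnits).
    """
    if period_seconds <= 0 or max_units_per_second <= 0:
        raise ValueError("period and maximum must be positive")
    average_per_second = consumed_sum / period_seconds
    return average_per_second / max_units_per_second


if __name__ == "__main__":
    # Hypothetical numbers: 1,200,000 units in 5 minutes against a 5,000 limit.
    print(throughput_utilization(1_200_000, 300, 5000))
```

A value that stays close to 1.0 suggests the table is regularly running near its limit and that throttling is likely during spikes.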

The maximum throughput for an on-demand table is applied on a best-effort basis and should be thought of as a target rather than a guaranteed request ceiling. Your workload might temporarily exceed the maximum throughput specified because of burst capacity. In some cases, DynamoDB uses burst capacity to accommodate reads or writes in excess of your table’s throughput settings. With burst capacity, unexpected read or write requests can succeed where they otherwise would be throttled. For more information, see Using burst capacity effectively.

If your application exceeds the maximum read or write throughput you have set on your on-demand table, DynamoDB begins to throttle the excess requests and returns a ThrottlingException. When a request is throttled for surpassing the on-demand maximum throughput limit, the message returned is: ‘Throughput exceeds the maximum OnDemandThroughput configured on table or index’. This message is exclusive to this feature, so you can use it to distinguish these throttling events from other kinds of throttling. For more information on exceptions for DynamoDB, refer to Error handling with DynamoDB.
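As a minimal sketch of how an application might recognize this case, the function below inspects an error payload shaped like the `response` attribute of a botocore ClientError. Matching on a substring of the message is an assumption made for illustration; the helper name is hypothetical.

```python
# Substring taken from the exception message quoted above.
ON_DEMAND_LIMIT_MARKER = "maximum OnDemandThroughput"


def is_on_demand_max_throughput_throttle(error_response):
    """Return True if the error looks like an on-demand maximum throughput throttle."""
    error = error_response.get("Error", {})
    return (
        error.get("Code") == "ThrottlingException"
        and ON_DEMAND_LIMIT_MARKER in error.get("Message", "")
    )


if __name__ == "__main__":
    sample = {
        "Error": {
            "Code": "ThrottlingException",
            "Message": "Throughput exceeds the maximum OnDemandThroughput "
                       "configured on table or index",
        }
    }
    print(is_on_demand_max_throughput_throttle(sample))
```

With boto3, this could be called as `is_on_demand_max_throughput_throttle(e.response)` inside an `except botocore.exceptions.ClientError as e:` handler, for example to log these events separately or to decide whether raising the limit is appropriate.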

Conclusion

In this post, we showed how you can configure maximum throughput for on-demand tables to avoid runaway DynamoDB throughput costs and help limit the impact on downstream applications. There is no additional cost to use this feature, and you can get started by using the AWS Management Console, AWS CLI, AWS SDK, DynamoDB APIs, or AWS CloudFormation. To learn more, refer to the DynamoDB Developer Guide.

About the authors

Lee Hannigan is a Sr. DynamoDB Specialist Solutions Architect based in Donegal, Ireland. He brings a wealth of expertise in distributed systems, backed by a strong foundation in big data and analytics technologies. In his role as a DynamoDB Specialist Solutions Architect, Lee excels in assisting customers with the design, evaluation, and optimization of their workloads leveraging DynamoDB’s capabilities.

Mazen Ali is a Principal Product Manager on Amazon DynamoDB at AWS, and is based in New York City. With a strong background in product management and technology roles, Mazen is passionate about engaging with customers to understand their requirements, defining product strategy, and working with cross-functional teams to build delightful experiences. Outside work, Mazen enjoys traveling, reading books, skiing, and hiking.
