Natural Language Processing (NLP) is integral to artificial intelligence, enabling humans and computers to communicate in natural language. This interdisciplinary field draws on linguistics, computer science, and mathematics, and powers applications such as machine translation, text categorization, and sentiment analysis. NLP methods built on CNNs, RNNs, and LSTMs were followed by the transformer architecture and by large language models (LLMs) such as the GPT and BERT families, which have driven significant advances in the field.

However, LLMs still face challenges, including hallucination and a lack of domain-specific knowledge. Researchers from East China University of Science and Technology and Peking University have surveyed retrieval-augmented approaches to language models. Retrieval-Augmented Language Models (RALMs), including Retrieval-Augmented Generation (RAG) and Retrieval-Augmented Understanding (RAU), improve NLP tasks by retrieving external information and using it to refine the model's output. This has extended their use to translation, dialogue generation, and other knowledge-intensive tasks.

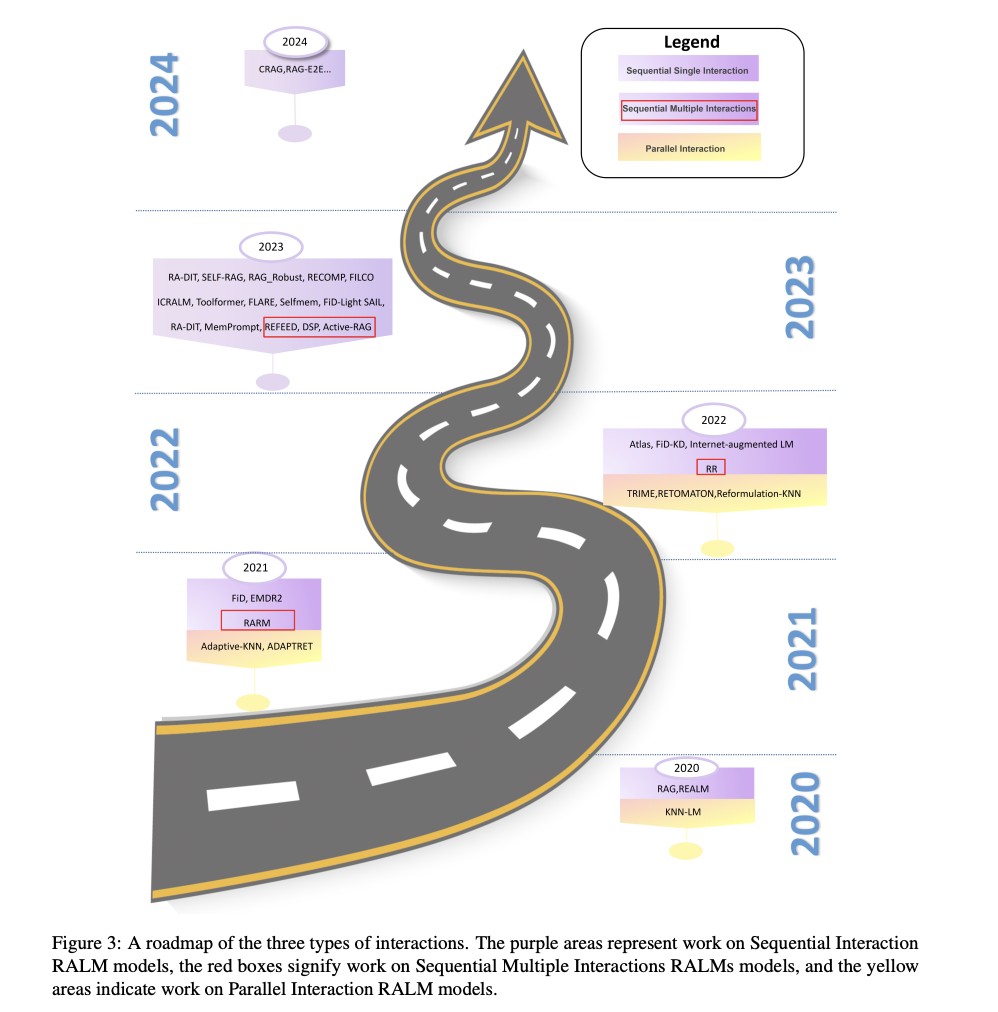

RALMs use retrieved information to refine a language model's output, and the survey groups them by how the retriever and the language model interact: sequential single interaction, sequential multiple interaction, and parallel interaction. In sequential single interaction, the retriever identifies relevant documents once, and the language model then conditions on them to predict the output. Sequential multiple interaction alternates retrieval and generation over several rounds, allowing the output to be refined iteratively. In parallel interaction, the retriever and the language model work independently, and their outputs are interpolated.
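To make the three interaction patterns concrete, here is a minimal Python sketch. The retriever and generator are hypothetical stand-ins passed in as plain functions, and the interpolation weight `lam` is an assumed hyperparameter. The parallel case mixes two independently produced next-token distributions (kNN-LM-style linear interpolation), which is one common way to interpolate and not necessarily how every surveyed method does it.

```python
from typing import Callable, Dict, List

# Hypothetical toy components: a retriever maps a query to passages,
# and a "language model" maps (query, passages) to an output string.
Retriever = Callable[[str], List[str]]
Generator = Callable[[str, List[str]], str]


def sequential_single(query: str, retrieve: Retriever, generate: Generator) -> str:
    """Retrieve once, then let the language model condition on the results."""
    docs = retrieve(query)
    return generate(query, docs)


def sequential_multiple(query: str, retrieve: Retriever, generate: Generator,
                        rounds: int = 2) -> str:
    """Alternate retrieval and generation, feeding each draft into the next query."""
    draft = ""
    for _ in range(rounds):
        docs = retrieve(f"{query} {draft}".strip())
        draft = generate(query, docs)
    return draft


def parallel_interpolate(p_lm: Dict[str, float], p_ret: Dict[str, float],
                         lam: float = 0.25) -> Dict[str, float]:
    """Mix next-token distributions computed independently by the LM and the retriever."""
    vocab = set(p_lm) | set(p_ret)
    return {tok: lam * p_ret.get(tok, 0.0) + (1 - lam) * p_lm.get(tok, 0.0)
            for tok in vocab}


if __name__ == "__main__":
    toy_retrieve = lambda q: [q.upper()]          # hypothetical retriever
    toy_generate = lambda q, docs: docs[0]        # hypothetical "LM": echoes top passage
    print(sequential_single("hi", toy_retrieve, toy_generate))
    print(sequential_multiple("hi", toy_retrieve, toy_generate, rounds=2))
    print(parallel_interpolate({"Paris": 0.6, "Lyon": 0.4}, {"Paris": 1.0}, lam=0.5))
```

Because both inputs to `parallel_interpolate` are probability distributions, the mixture also sums to one, so it can be used directly as the next-token distribution.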

Retrievers play a pivotal role in RALMs, and the survey distinguishes four kinds: sparse, dense, internet, and hybrid retrieval. Sparse retrieval relies on simpler term-matching techniques such as TF-IDF and BM25, while dense retrieval uses deep learning to encode queries and documents as embedding vectors, improving accuracy. Internet retrieval offers a plug-and-play option by querying commercial search engines, and hybrid retrieval combines several methods to maximize performance.
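As an illustration of sparse and hybrid retrieval, the sketch below scores documents with Okapi BM25 (using the non-negative IDF variant found in Lucene) and then fuses those scores with dense scores by min-max normalization plus a weighted sum. The dense scores here are hypothetical numbers standing in for embedding similarities, and linear fusion with weight `alpha` is only one assumed fusion scheme; reciprocal rank fusion is another common choice.

```python
import math
from collections import Counter
from typing import List


def bm25_scores(query: str, docs: List[str], k1: float = 1.5, b: float = 0.75) -> List[float]:
    """Okapi BM25 score of each document for the query (whitespace tokenization)."""
    tokenized = [d.lower().split() for d in docs]
    n_docs = len(tokenized)
    avgdl = sum(len(t) for t in tokenized) / n_docs
    # Document frequency: number of documents containing each term.
    df = Counter(term for toks in tokenized for term in set(toks))
    scores = []
    for toks in tokenized:
        tf = Counter(toks)
        score = 0.0
        for term in query.lower().split():
            if term not in tf:
                continue
            idf = math.log((n_docs - df[term] + 0.5) / (df[term] + 0.5) + 1)
            # Length normalization: longer-than-average documents are penalized.
            norm = tf[term] + k1 * (1 - b + b * len(toks) / avgdl)
            score += idf * tf[term] * (k1 + 1) / norm
        scores.append(score)
    return scores


def min_max(xs: List[float]) -> List[float]:
    """Rescale scores to [0, 1] so sparse and dense scores are comparable."""
    lo, hi = min(xs), max(xs)
    if hi == lo:
        return [0.0 for _ in xs]
    return [(x - lo) / (hi - lo) for x in xs]


def hybrid_scores(sparse: List[float], dense: List[float], alpha: float = 0.5) -> List[float]:
    """Linear fusion: alpha weights the sparse signal, (1 - alpha) the dense one."""
    return [alpha * s + (1 - alpha) * d for s, d in zip(min_max(sparse), min_max(dense))]


if __name__ == "__main__":
    docs = ["retrieval augmented generation", "dense passage retrieval", "cooking pasta at home"]
    sparse = bm25_scores("retrieval generation", docs)
    dense = [0.2, 0.9, 0.1]  # hypothetical embedding similarities
    print(sparse)
    print(hybrid_scores(sparse, dense))
```

In this toy run BM25 prefers the first document (it matches both query terms), but the hypothetical dense scores pull the second document ahead after fusion, which is the kind of complementary signal hybrid retrieval aims to combine.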

The language models used in RALMs fall into three categories: autoencoder, autoregressive, and encoder-decoder models. Autoencoder models such as BERT are well suited to understanding tasks, while autoregressive models such as the GPT family excel at generating natural language. Encoder-decoder models such as T5 and BART encode the input with bidirectional attention and generate the output autoregressively, which makes them versatile across NLP tasks, particularly sequence-to-sequence tasks such as translation and summarization.
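One way to see the difference between the three model families is through the attention masks they use. The pure-Python sketch below is illustrative only (real models apply these masks inside batched tensor operations); `mask[i][j] == 1` means position `i` may attend to position `j`.

```python
from typing import List

Mask = List[List[int]]  # mask[i][j] == 1 means position i may attend to position j


def bidirectional_mask(n: int) -> Mask:
    """Autoencoder (BERT-style) and encoder side of T5/BART: every token sees every token."""
    return [[1] * n for _ in range(n)]


def causal_mask(n: int) -> Mask:
    """Autoregressive (GPT-style) and decoder self-attention: token i sees only positions 0..i."""
    return [[1 if j <= i else 0 for j in range(n)] for i in range(n)]


def cross_attention_mask(n_target: int, n_source: int) -> Mask:
    """Encoder-decoder cross-attention: each decoder position sees the whole encoded input."""
    return [[1] * n_source for _ in range(n_target)]


if __name__ == "__main__":
    for row in causal_mask(3):
        print(row)
```

An encoder-decoder model combines all three: a bidirectional mask in the encoder, a causal mask for decoder self-attention, and full cross-attention from the decoder to the encoder output.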

Enhancing RALMs involves improving the retriever, the language model, and the overall architecture. Retriever enhancements focus on quality control, so that only relevant documents are retrieved, and on retrieval timing, so that documents are fetched and used at the right moment. Language model enhancements include processing retrieved content before generation and optimizing the model's structure, while whole-system enhancements include end-to-end training and intermediate modules placed between the retriever and the language model.
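Retriever quality control and retrieval timing can be sketched together. In this minimal example, assuming the model exposes per-token probabilities for a draft, retrieval is triggered only when some token falls below a confidence threshold (a heuristic some active-retrieval methods use), and retrieved passages are filtered by a relevance-score cutoff before being passed on. The names `Passage`, `should_retrieve`, `filter_passages`, and `context_for_generation`, and all thresholds, are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Passage:
    text: str
    score: float  # retriever relevance score, assumed higher-is-better


def should_retrieve(token_probs: List[float], threshold: float = 0.5) -> bool:
    """Retrieval timing: retrieve only if the model was unsure about some draft token."""
    return any(p < threshold for p in token_probs)


def filter_passages(passages: List[Passage], min_score: float, top_k: int) -> List[Passage]:
    """Quality control: drop low-scoring passages, then keep the top_k best."""
    kept = [p for p in passages if p.score >= min_score]
    return sorted(kept, key=lambda p: p.score, reverse=True)[:top_k]


def context_for_generation(query: str, draft_probs: List[float],
                           retrieve: Callable[[str], List[Passage]],
                           min_score: float = 0.3, top_k: int = 2) -> List[str]:
    """Passages to hand to the language model (empty when retrieval is skipped)."""
    if not should_retrieve(draft_probs):
        return []
    return [p.text for p in filter_passages(retrieve(query), min_score, top_k)]


if __name__ == "__main__":
    corpus = [Passage("a", 0.9), Passage("b", 0.2), Passage("c", 0.6), Passage("d", 0.4)]
    fake_retrieve = lambda q: corpus  # hypothetical retriever returning fixed passages
    print(context_for_generation("q", [0.9, 0.95], fake_retrieve))  # confident draft
    print(context_for_generation("q", [0.9, 0.3], fake_retrieve))   # uncertain draft
```

Skipping retrieval for confident drafts saves retriever calls, while the score cutoff keeps weakly related passages from distracting the language model.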

RAG and RAU are specialized RALMs for natural language generation and natural language understanding, respectively. RAG targets generation tasks such as text summarization and machine translation, while RAU is tailored to understanding tasks such as question answering and commonsense reasoning.

The versatility of RALMs has enabled their application to diverse NLP tasks, including machine translation, dialogue generation, and text summarization. Machine translation benefits from the external memory that retrieval provides, while dialogue generation uses RALMs' ability to produce contextually relevant responses across multi-turn conversations. The range of applications also extends to code summarization, question answering, and knowledge graph completion, illustrating RALMs' adaptability and efficiency.

In conclusion, RALMs, including RAG and RAU, represent a significant advancement in NLP by combining external data retrieval with large language models to enhance their performance across various tasks. Researchers have refined the retrieval-augmented paradigm, optimizing retriever-language model interactions, thus expanding RALMs’ potential in natural language generation and understanding. As NLP evolves, RALMs offer promising avenues for improving computational language understanding.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post A Survey of RAG and RAU: Advancing Natural Language Processing with Retrieval-Augmented Language Models appeared first on MarkTechPost.