Large Language Models (LLMs) often give confident answers, which raises concerns about their reliability, especially for factual questions. Although hallucination is widespread in LLM-generated content, there is no established method for assessing how trustworthy a given response is. Users lack a “trustworthiness score” that would tell them how reliable a response is without further research or verification. Ideally, LLMs would earn predominantly high trust scores, reducing the need for users to verify answers themselves.

LLM evaluation has become central to assessing model performance and resilience to input variations, both of which are crucial for real-world applications. The FLASK method evaluates LLMs’ consistency across stylistic inputs, emphasizing alignment skills for fine-grained model evaluation. Vulnerabilities in model-graded evaluations have raised doubts about their reliability. The difficulty of maintaining performance across rephrased instructions has prompted methods for improving zero-shot robustness. The PromptBench framework systematically evaluates LLMs’ resilience to adversarial prompts, stressing the need to understand how models respond to input changes. Recent studies add noise to prompts to assess LLM robustness, proposing unified frameworks and privacy-preserving prompt-learning techniques. LLMs’ vulnerability to noisy inputs, especially in high-stakes scenarios, underscores the importance of consistent predictions. Methods for measuring LLM confidence, such as black-box and reflection-based approaches, are gaining momentum. The broader NLP literature documents a persistent sensitivity to input perturbations, which keeps input-robustness studies relevant.

Researchers from VISA introduce an approach for assessing, in real time, the robustness of any black-box LLM in terms of both stability and explainability. The method measures the local deviation from harmoniticity, denoted γ, which gives a model-agnostic and unsupervised way to evaluate how robust a response is. Human annotation experiments establish a positive correlation between γ and false or misleading answers. In addition, stochastic gradient ascent along the gradient of γ efficiently uncovers adversarial prompts, demonstrating the method’s effectiveness. The work extends Harmonic Robustness, a method the authors previously developed to measure the robustness of predictive machine learning models, to LLMs.
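The article does not describe how gradient ascent is carried out over discrete text prompts. Purely as an illustration of the idea of searching for prompts that maximize γ, the sketch below swaps in a different, simpler technique: greedy stochastic hill climbing over single-character edits. The names `random_char_edit` and `hill_climb`, the edit scheme, and the toy score are all hypothetical; in a real setting `score` would compute γ for the candidate prompt, and the authors’ actual procedure may differ.

```python
import random
from typing import Callable, Tuple

ALPHABET = "abcdefghijklmnopqrstuvwxyz "

def random_char_edit(prompt: str, rng: random.Random) -> str:
    """Hypothetical neighbour move: overwrite one character with a random letter or space."""
    if not prompt:
        return prompt
    i = rng.randrange(len(prompt))
    return prompt[:i] + rng.choice(ALPHABET) + prompt[i + 1:]

def hill_climb(prompt: str, score: Callable[[str], float], steps: int = 200,
               candidates: int = 8, seed: int = 0) -> Tuple[str, float]:
    """Greedy stochastic search for a nearby prompt that maximizes `score` (e.g. gamma).

    This is hill climbing, a stand-in for gradient ascent on discrete text,
    not the authors' published algorithm.
    """
    rng = random.Random(seed)
    best, best_score = prompt, score(prompt)
    for _ in range(steps):
        # Sample a few random single-character neighbours of the current best prompt.
        proposals = [random_char_edit(best, rng) for _ in range(candidates)]
        candidate = max(proposals, key=score)
        candidate_score = score(candidate)
        # Only move uphill, so the best score never decreases.
        if candidate_score > best_score:
            best, best_score = candidate, candidate_score
    return best, best_score

if __name__ == "__main__":
    # Toy score standing in for gamma (hypothetical): counts the letter "z".
    toy_score = lambda p: float(p.count("z"))
    print(hill_climb("hello world", toy_score))
```

With a real LLM each `score` call means several model queries, so `steps` and `candidates` directly control the cost of the search.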

The researchers present an algorithm for computing γ, a robustness measure, for any LLM input. It computes the angle between the embedding of the output for the original input and the mean embedding of the outputs produced for perturbed versions of that input. Worked examples with GPT-4 show that when the answer does not change under perturbation, γ = 0. Slight grammatical variations in the answers give small, non-zero γ values that still indicate trustworthy responses. When the answers vary substantially, γ grows, signaling lower trustworthiness, although a high γ does not always mean the answer is incorrect. To clarify how γ relates to trustworthiness, the authors propose measuring it empirically across models and domains.
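To make the angle-based definition concrete, here is a minimal Python sketch. It assumes three pluggable pieces that the article does not specify: an `llm` callable, an `embed` callable, and a perturbation function. `swap_adjacent_chars` and `letter_count_embedding` are hypothetical toy stand-ins for the authors’ perturbation scheme and a real embedding model, and γ is returned in radians, which may not match the paper’s normalization.

```python
import math
import random
from typing import Callable, List, Sequence

Vector = List[float]

def mean_vector(vectors: Sequence[Vector]) -> Vector:
    """Component-wise mean of equal-length vectors."""
    n, dim = len(vectors), len(vectors[0])
    return [sum(v[i] for v in vectors) / n for i in range(dim)]

def angle_between(u: Vector, v: Vector) -> float:
    """Angle in radians between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    # Clamp so floating-point rounding cannot push acos outside its domain.
    cos_theta = max(-1.0, min(1.0, dot / (norm_u * norm_v)))
    return math.acos(cos_theta)

def swap_adjacent_chars(prompt: str, rng: random.Random) -> str:
    """Hypothetical perturbation: swap one random pair of adjacent characters."""
    if len(prompt) < 2:
        return prompt
    i = rng.randrange(len(prompt) - 1)
    return prompt[:i] + prompt[i + 1] + prompt[i] + prompt[i + 2:]

def letter_count_embedding(text: str) -> Vector:
    """Hypothetical toy embedding: counts of the letters a-z."""
    counts = [0.0] * 26
    for ch in text.lower():
        if "a" <= ch <= "z":
            counts[ord(ch) - ord("a")] += 1.0
    return counts

def gamma(prompt: str,
          llm: Callable[[str], str],
          embed: Callable[[str], Vector],
          perturb: Callable[[str, random.Random], str] = swap_adjacent_chars,
          n_samples: int = 10,
          seed: int = 0) -> float:
    """Angle between the original output's embedding and the mean embedding
    of the outputs for n_samples perturbed prompts."""
    rng = random.Random(seed)
    original = embed(llm(prompt))
    perturbed = [embed(llm(perturb(prompt, rng))) for _ in range(n_samples)]
    return angle_between(original, mean_vector(perturbed))

if __name__ == "__main__":
    q = "What is the capital of France?"
    stable_llm = lambda p: "Paris is the capital of France."
    flaky_llm = lambda p: "Paris" if p == q else "Lyon"  # answer flips under any perturbation
    print(gamma(q, stable_llm, letter_count_embedding))  # ≈ 0.0
    print(gamma(q, flaky_llm, letter_count_embedding))   # ≈ 1.5708 (π/2): the answers share no letters
```

In practice `llm` would wrap a model API and `embed` a sentence-embedding model. Averaging the perturbed embeddings before taking the angle mirrors the mean-value property of harmonic functions that the name alludes to, rather than comparing against any single perturbed answer.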

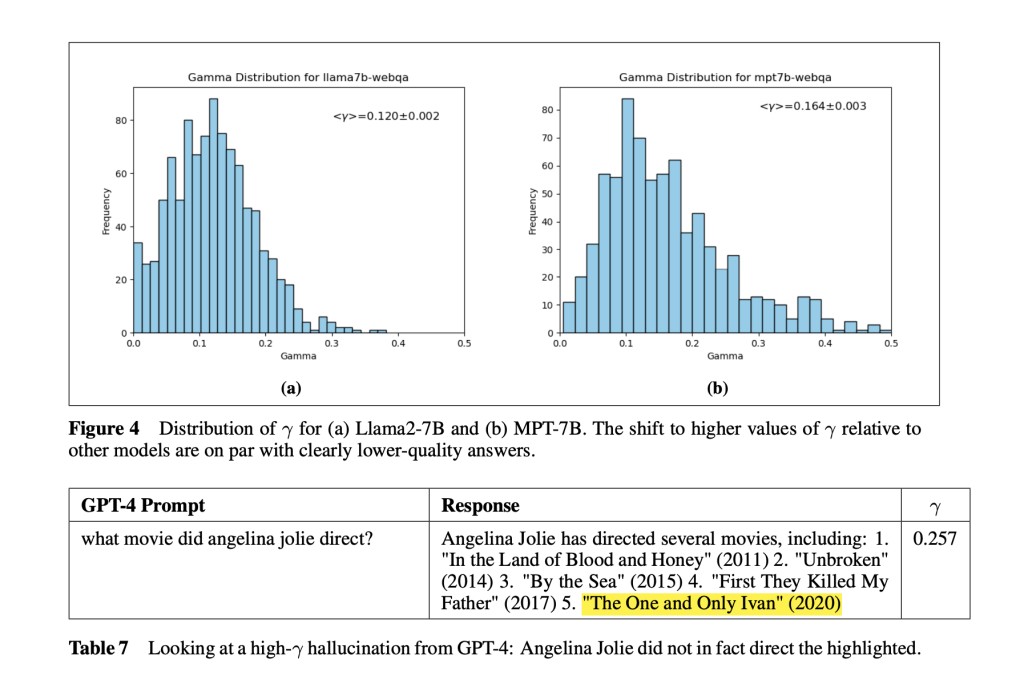

The researchers measure how γ relates to LLM robustness and trustworthiness across several LLMs and question-answering (QA) corpora. They evaluate five leading LLMs (GPT-4, ChatGPT, Claude 2.1, Mixtral-8x7B, and Smaug-72B) and two older, smaller models (Llama2-7B and MPT-7B) on three QA corpora chosen to cover different domains: Web QA, TruthfulQA, and Programming QA. Human annotators rate the truthfulness and relevance of each answer on a 5-point scale, and Fleiss’ kappa indicates consistent inter-annotator agreement. Responses with γ below 0.05 are generally trustworthy, and answer quality tends to fall as γ increases, although the exact relationship depends on the model and domain. Larger LLMs exhibit lower γ values, suggesting higher trustworthiness, with GPT-4 generally leading in both answer quality and certified trustworthiness.
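Fleiss’ kappa, the agreement statistic cited above, compares how often raters agree on each item with the agreement expected by chance. The following is a minimal sketch of the standard formula; the rating table in the usage lines is made-up data for illustration, not the study’s annotations.

```python
from typing import Sequence

def fleiss_kappa(table: Sequence[Sequence[int]]) -> float:
    """Fleiss' kappa for N items, each rated by the same number of raters.

    table[i][j] = number of raters who assigned item i to category j.
    """
    n_items = len(table)
    n_raters = sum(table[0])
    if n_raters < 2 or any(sum(row) != n_raters for row in table):
        raise ValueError("every item needs the same number (>= 2) of ratings")
    n_categories = len(table[0])
    total = n_items * n_raters
    # Share of all ratings that fall into each category.
    p = [sum(row[j] for row in table) / total for j in range(n_categories)]
    # Per-item agreement: fraction of rater pairs that agree on that item.
    per_item = [(sum(c * c for c in row) - n_raters) / (n_raters * (n_raters - 1))
                for row in table]
    p_bar = sum(per_item) / n_items
    p_e = sum(pj * pj for pj in p)  # agreement expected by chance
    if p_e == 1.0:
        raise ValueError("kappa is undefined when every rating is in one category")
    return (p_bar - p_e) / (1 - p_e)

if __name__ == "__main__":
    # Made-up example: 3 annotators, 4 answers, 5-point scale (columns = scores 1..5).
    ratings = [
        [0, 0, 0, 1, 2],
        [0, 0, 0, 0, 3],
        [0, 1, 2, 0, 0],
        [3, 0, 0, 0, 0],
    ]
    print(fleiss_kappa(ratings))
```

A value of 1 means perfect agreement, 0 means agreement no better than chance, and negative values mean systematic disagreement.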

In conclusion, the study uses γ to gauge how robust an LLM’s responses are and, by extension, how trustworthy they are. Correlating γ with human annotations turns it into a practical metric for LLM reliability across models and domains. Across all models and domains the researchers tested, human ratings confirm that γ → 0 indicates trustworthiness, and the low-γ leaders among the tested models are GPT-4, ChatGPT, and Smaug-72B.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post Evaluating LLM Trustworthiness: Insights from Harmoniticity Analysis Research from VISA Team appeared first on MarkTechPost.
