Imagine harnessing the power of advanced language models to understand and respond to your customers’ inquiries. Amazon Bedrock, a fully managed service providing access to such models, makes this possible. Fine-tuning large language models (LLMs) on domain-specific data supercharges tasks like answering product questions or generating relevant content.

In this post, we show how Amazon Bedrock and Amazon SageMaker Canvas, a no-code AI suite, allow business users without deep technical expertise to fine-tune and deploy LLMs. With just a few clicks, you can transform customer interactions by fine-tuning Amazon Bedrock and Amazon SageMaker JumpStart models on datasets such as product Q&As.

Solution overview

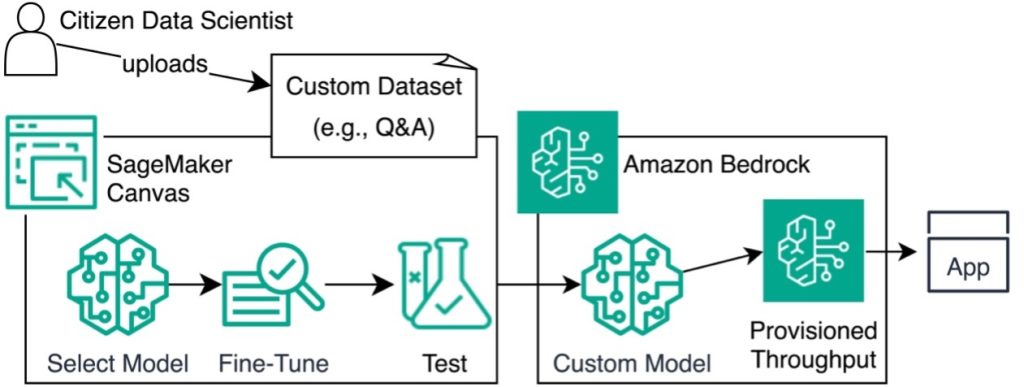

The following diagram illustrates this architecture.

In the following sections, we show you how to fine-tune a model by preparing your dataset, creating a new model, importing the dataset, and selecting a foundation model. We also demonstrate how to analyze and test the model, and then deploy the model via Amazon Bedrock.

Prerequisites

First-time users need an AWS account and an AWS Identity and Access Management (IAM) role with access to SageMaker, Amazon Bedrock, and Amazon Simple Storage Service (Amazon S3).

To follow along with this post, complete the prerequisite steps to create a domain and enable access to Amazon Bedrock models:

Create a SageMaker domain.

On the domain details page, view the user profiles.

Choose Launch by your profile, and choose Canvas.

Confirm that your SageMaker IAM role and domain roles have the necessary permissions and trust relationships.

On the Amazon Bedrock console, choose Model access in the navigation pane.

Choose Manage model access.

Select Amazon to enable the Amazon Titan model.

Prepare your dataset

Complete the following steps to prepare your dataset:

Download the following CSV dataset of question-answer pairs.

Confirm that your dataset is free from formatting issues.

Copy the data to a new sheet and delete the original.
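As a complement to the manual check above, the following is a minimal Python sketch of an automated check for common formatting issues. It assumes the CSV has a header row with `question` and `answer` columns (the column names selected later in this post). The function name `find_csv_issues` is hypothetical and not part of any AWS tooling.

```python
import csv
import io


def find_csv_issues(csv_text, required_columns=("question", "answer")):
    """Return a list of human-readable problems found in a Q&A CSV."""
    reader = csv.DictReader(io.StringIO(csv_text))
    header = reader.fieldnames or []
    missing = [c for c in required_columns if c not in header]
    if missing:
        return [f"missing column(s): {', '.join(missing)}"]

    issues = []
    # Data rows are counted from 2 because row 1 is the header.
    for row_number, row in enumerate(reader, start=2):
        for column in required_columns:
            # Short rows are padded with None, so treat None like an empty cell.
            if not (row[column] or "").strip():
                issues.append(f"row {row_number}: empty {column}")
        # DictReader stores surplus cells under the None key.
        if None in row:
            issues.append(f"row {row_number}: more cells than header columns")
    return issues
```

To check a file on disk, you could read it with `open(path, newline="", encoding="utf-8").read()` and pass the text to `find_csv_issues`. An empty list means none of these particular issues were found.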

Create a new model

SageMaker Canvas allows simultaneous fine-tuning of multiple models, enabling you to compare and choose the best one from a leaderboard after fine-tuning. However, this post focuses on the Amazon Titan Text G1-Express LLM. Complete the following steps to create your model:

In SageMaker Canvas, choose My models in the navigation pane.

Choose New model.

For Model name, enter a name (for example, MyModel).

For Problem type, select Fine-tune foundation model.

Choose Create.

The next step is to import your dataset into SageMaker Canvas:

Create a dataset named QA-Pairs.

Upload the prepared CSV file or select it from an S3 bucket.

Choose the dataset, then choose Select dataset.

Select a foundation model

After you upload your dataset, select a foundation model and fine-tune it with your dataset. Complete the following steps:

On the Fine-tune tab, on the Select base models menu, select Titan Express.

For Select input column, choose question.

For Select output column, choose answer.

Choose Fine-tune.

Wait 2–5 hours for SageMaker to finish fine-tuning your models.

Analyze the model

When the fine-tuning is complete, you can view the stats about your new model, including:

Training loss – The penalty for each mistake in next-word prediction during training. Lower values indicate better performance.

Training perplexity – A measure of the model’s surprise when encountering text during training. Lower perplexity suggests higher model confidence.

Validation loss and validation perplexity – Similar to the training metrics, but measured during the validation stage.
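Loss and perplexity are closely related. The sketch below assumes the reported loss is the mean per-token cross-entropy measured in nats (natural logarithm), in which case perplexity is the exponential of the loss. Whether SageMaker Canvas reports its metrics in exactly this form is an assumption here, so treat the function as an illustration of the relationship rather than a description of the Canvas implementation.

```python
import math


def perplexity_from_loss(mean_cross_entropy_nats):
    """Convert a mean per-token cross-entropy (in nats) to perplexity."""
    return math.exp(mean_cross_entropy_nats)


# A loss of 0 means every next token was predicted with certainty,
# which corresponds to the minimum possible perplexity of 1.
best_case = perplexity_from_loss(0.0)

# A loss of ln(8) corresponds to being as uncertain as a uniform
# choice among 8 tokens at every step.
uniform_over_8 = perplexity_from_loss(math.log(8))
```

Under this assumption, a drop in loss always produces a drop in perplexity, which is why the two metrics tend to rank models the same way.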

To get a detailed report on your custom model’s performance across various dimensions, such as toxicity and accuracy, choose Generate evaluation report. Then select Download report.

Canvas also provides a Python Jupyter notebook that details your fine-tuning job. This alleviates concerns about vendor lock-in associated with no-code tools and lets you share the details with data science teams for further validation and deployment.

If you selected multiple foundation models to create custom models from your dataset, check out the Model leaderboard to compare them on dimensions like loss and perplexity.

Test the models

You now have access to custom models that can be tested in SageMaker Canvas. Complete the following steps to test the models:

Choose Test in Ready-to-Use Models and wait 15–30 minutes for your test endpoint to be deployed.

This test endpoint will only stay up for 2 hours to avoid unintended costs.

When the deployment is complete, you’ll be redirected to the SageMaker Canvas playground, with your model pre-selected.

Choose Compare and select the foundation model used for your custom model.

Enter a question taken directly from your training dataset to confirm that the custom model at least answers such a question better than the base model.

For this example, we enter the question, “Who developed the lie-detecting algorithm Fraudoscope?”

The fine-tuned model responded correctly:

“The lie-detecting algorithm Fraudoscope was developed by Tselina Data Lab.”

Amazon Titan responded incorrectly and verbosely. However, to its credit, the model produced important ethical concerns and limitations of facial recognition technologies in general:

Let’s ask a question about an NVIDIA chip, which powers Amazon Elastic Compute Cloud (Amazon EC2) P4d instances: “How much memory in an A100?”

Again, the custom model not only gives a more accurate answer, but also answers with the brevity you would want from a question-answer bot:

“An A100 GPU provides up to 40 GB of high-speed HBM2 memory.”

The Amazon Titan answer is incorrect:

Deploy the model via Amazon Bedrock

For production use, especially if you’re considering providing access to dozens or even thousands of employees by embedding the model into an application, you can deploy the models as API endpoints. Complete the following steps to deploy your model:

On the Amazon Bedrock console, choose Foundation models in the navigation pane, then choose Custom models.

Locate the model with the prefix Canvas- with Amazon Titan as the source.

Alternatively, you can use the AWS Command Line Interface (AWS CLI): aws bedrock list-custom-models

Make note of the modelArn, which you’ll use in the next step, and the modelName, or save them directly as variables:
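As one way to capture these values in code, the following is a minimal Python sketch. It assumes you pass in a Boto3 `bedrock` client and that the `list_custom_models` response contains a `modelSummaries` list with `modelName` and `modelArn` fields. The helper name `find_canvas_models` is hypothetical, and the sketch reads only the first page of results.

```python
def find_canvas_models(bedrock_client, prefix="Canvas-"):
    """Return (modelName, modelArn) pairs for custom models created by Canvas.

    bedrock_client is expected to behave like boto3.client("bedrock").
    Only the first page of results is inspected in this sketch.
    """
    response = bedrock_client.list_custom_models()
    return [
        (summary["modelName"], summary["modelArn"])
        for summary in response.get("modelSummaries", [])
        if summary["modelName"].startswith(prefix)
    ]
```

With AWS credentials configured, you might call it as `find_canvas_models(boto3.client("bedrock"))` and keep the returned name and ARN for the provisioning step.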

To start using your model, you must provision throughput.

On the Amazon Bedrock console, choose Purchase Provisioned Throughput.

Enter a name, set 1 model unit, and select no commitment term.

Confirm the purchase.

Alternatively, you can use the AWS CLI:

Or, if you saved the values as variables in the previous step, use the following code:

After about five minutes, the model status changes from Creating to InService.

If you’re using the AWS CLI, you can see the status via aws bedrock list-provisioned-model-throughputs.
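The provisioning and status check above can also be sketched in Python. This example assumes a Boto3 `bedrock` client and uses its `create_provisioned_model_throughput` and `get_provisioned_model_throughput` operations. The helper name `provision_and_wait`, the polling interval, and the retry limit are illustrative choices, not values from this post. Omitting `commitmentDuration` is assumed to request the no-commitment option.

```python
import time


def provision_and_wait(bedrock_client, model_arn, name,
                       poll_seconds=30, max_polls=40, sleep=time.sleep):
    """Purchase 1 model unit with no commitment and wait until it is InService.

    bedrock_client is expected to behave like boto3.client("bedrock").
    Returns the ARN of the provisioned throughput.
    """
    created = bedrock_client.create_provisioned_model_throughput(
        modelUnits=1,
        provisionedModelName=name,
        modelId=model_arn,
        # commitmentDuration is left out on purpose (assumed: no commitment).
    )
    provisioned_arn = created["provisionedModelArn"]

    for _ in range(max_polls):
        status = bedrock_client.get_provisioned_model_throughput(
            provisionedModelId=provisioned_arn
        )["status"]
        if status == "InService":
            return provisioned_arn
        if status == "Failed":
            raise RuntimeError(f"Provisioning failed for {provisioned_arn}")
        sleep(poll_seconds)

    raise TimeoutError(f"{provisioned_arn} was not InService after {max_polls} polls")
```

Provisioned throughput is billed while it exists, so delete it when you finish experimenting.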

Use the model

You can access your fine-tuned LLM through the Amazon Bedrock console, API, CLI, or SDKs.

In the Chat Playground, choose the category of fine-tuned models, select your Canvas- prefixed model, and the provisioned throughput.

Enrich your existing software as a service (SaaS), software platforms, web portals, or mobile apps with your fine-tuned LLM using the API or SDKs. These let you send prompts to the Amazon Bedrock endpoint using your preferred programming language.
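As an illustration of sending a prompt from application code, here is a minimal Python sketch. It assumes a Boto3 `bedrock-runtime` client and the Amazon Titan Text request and response shape (`inputText`, `textGenerationConfig`, and `results[0].outputText`). Verify these field names against the current Amazon Bedrock documentation before relying on them. The helper names `build_titan_request` and `ask`, and the default generation settings, are hypothetical.

```python
import json


def build_titan_request(prompt, max_tokens=256, temperature=0.0):
    """Serialize a prompt into the JSON body assumed for Titan Text models."""
    return json.dumps({
        "inputText": prompt,
        "textGenerationConfig": {
            "maxTokenCount": max_tokens,
            "temperature": temperature,
        },
    })


def ask(runtime_client, provisioned_model_arn, prompt):
    """Send a prompt to the provisioned custom model and return its text answer.

    runtime_client is expected to behave like boto3.client("bedrock-runtime").
    """
    response = runtime_client.invoke_model(
        modelId=provisioned_model_arn,
        body=build_titan_request(prompt),
        contentType="application/json",
        accept="application/json",
    )
    # The response body is a stream, so read it fully before parsing the JSON.
    payload = json.loads(response["body"].read())
    return payload["results"][0]["outputText"]
```

With credentials configured, a call might look like `ask(boto3.client("bedrock-runtime"), provisioned_arn, "Who developed the lie-detecting algorithm Fraudoscope?")`, where `provisioned_arn` is the ARN of the provisioned throughput you purchased.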

The response demonstrates the model’s tailored ability to answer these types of questions:

“The lie-detecting algorithm Fraudoscope was developed by Tselina Data Lab.”

This improves on the response from Amazon Titan before fine-tuning:

“Marston Morse developed the lie-detecting algorithm Fraudoscope.”

For a full example of invoking models on Amazon Bedrock, refer to the following GitHub repository. This repository provides a ready-to-use code base that lets you experiment with various LLMs and deploy a versatile chatbot architecture within your AWS account. You now have the skills to use this with your custom model.

Another repository that may spark your imagination is Amazon Bedrock Samples, which can help you get started on a number of other use cases.

Conclusion

In this post, we showed you how to fine-tune an LLM to better fit your business needs, deploy your custom model as an Amazon Bedrock API endpoint, and use that endpoint in application code. This makes the custom language model’s capabilities available to a broader set of people within your business.

Although we used examples based on a sample dataset, this post showcased these tools’ capabilities and potential applications in real-world scenarios. The process is straightforward and applicable to various datasets, such as your organization’s FAQs, provided they are in CSV format.

Take what you learned and start brainstorming ways to use custom AI models in your organization. For further inspiration, see Overcoming common contact center challenges with generative AI and Amazon SageMaker Canvas and AWS re:Invent 2023 – New LLM capabilities in Amazon SageMaker Canvas, with Bain & Company (AIM363).

About the Authors

Yann Stoneman is a Solutions Architect at AWS focused on machine learning and serverless application development. With a background in software engineering and a blend of arts and tech education from Juilliard and Columbia, Yann brings a creative approach to AI challenges. He actively shares his expertise through his YouTube channel, blog posts, and presentations.

Davide Gallitelli is a Specialist Solutions Architect for AI/ML in the EMEA region. He is based in Brussels and works closely with customers throughout Benelux. He has been a developer since he was very young, starting to code at the age of 7. He started learning AI/ML in his later years of university, and has fallen in love with it since then.
