Graph Transformers (GTs) have achieved state-of-the-art performance on a variety of graph tasks. Unlike the local message passing of graph neural networks (GNNs), GTs can capture long-range dependencies between distant nodes. Their self-attention mechanism lets each node attend directly to every other node in the graph, so information can be collected from arbitrary nodes. This gives GTs considerable flexibility and capacity to aggregate information globally and adaptively.
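A minimal NumPy sketch illustrates the contrast on a six-node path graph. It is a toy example, not Gradformer code: the random projection matrices and the helper names (`global_self_attention`, `message_passing`, `stacked_mp`) are illustrative assumptions. One self-attention layer lets node 0 receive the signal held by node 5, whereas mean-aggregation message passing needs five layers to cover the five hops.

```python
import numpy as np

def softmax(x, axis=-1):
    # Numerically stable softmax along the given axis
    z = x - x.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def global_self_attention(X, Wq, Wk, Wv):
    """Single-head self-attention over all nodes; the adjacency matrix is not used."""
    Q, K, V = X @ Wq, X @ Wk, X @ Wv
    scores = Q @ K.T / np.sqrt(K.shape[1])  # (N, N): every node scores every node
    return softmax(scores) @ V

def message_passing(X, A):
    """One round of mean aggregation over 1-hop neighbours plus a self-loop."""
    A_hat = A + np.eye(A.shape[0])
    return (A_hat / A_hat.sum(axis=1, keepdims=True)) @ X

def stacked_mp(X, A, layers):
    # Each extra layer extends the receptive field by exactly one hop
    h = X
    for _ in range(layers):
        h = message_passing(h, A)
    return h

# Path graph 0-1-2-3-4-5: nodes 0 and 5 are five hops apart
N, d = 6, 4
A = np.zeros((N, N))
for i in range(N - 1):
    A[i, i + 1] = A[i + 1, i] = 1.0

X = np.zeros((N, d))
X[5] = 1.0  # only the far end of the path carries a signal
rng = np.random.default_rng(0)
Wq, Wk, Wv = (rng.normal(size=(d, d)) for _ in range(3))

h_gt = global_self_attention(X, Wq, Wk, Wv)
print("node 0, 1 attention layer:", h_gt[0])            # nonzero: node 5 seen directly
print("node 0, 4 MP layers:", stacked_mp(X, A, 4)[0])   # still zero: 5 hops away
print("node 0, 5 MP layers:", stacked_mp(X, A, 5)[0])   # signal finally arrives
```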

Despite its advantages across a wide variety of tasks, the self-attention mechanism in GTs does not explicitly account for graph-specific properties such as structural biases. Some existing methods model these inductive biases through positional encodings and attention biases, but they do not fully solve the problem. Self-attention also fails to exploit the intrinsic feature biases of graphs, which makes it difficult to capture essential structural information. When structural correlations are ignored, the mechanism may attend to every node roughly equally, under-weighting key information and aggregating redundant information.

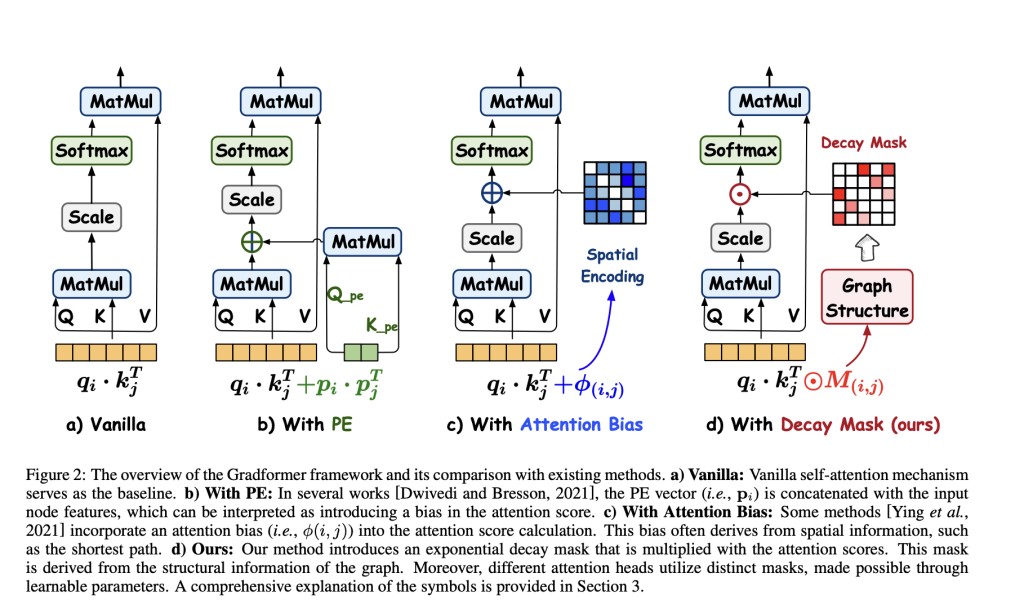

Researchers from Wuhan University (China), JD Explore Academy (China), The University of Melbourne, and Griffith University (Brisbane) proposed Gradformer, a method that integrates GTs with intrinsic inductive biases. Gradformer introduces an exponential decay mask into the GT self-attention architecture. Multiplying the mask with the attention scores controls how much attention each node pays to every other node. Because the mask decays exponentially as the structural distance between nodes grows, attention weights shrink gradually for more distant nodes, and this guides the learning process within the self-attention framework.
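The masking step can be sketched in NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: it assumes the mask entry for nodes i and j is a decay rate γ raised to their shortest-path hop distance, uses a fixed `gamma` (the paper makes the mask parameters learnable), and multiplies the mask into the softmax-normalised scores without renormalising. The helper names (`hop_distances`, `decay_mask`, `decayed_attention`) are hypothetical.

```python
import numpy as np
from collections import deque

def hop_distances(adj_list):
    """All-pairs shortest-path hop counts via BFS; unreachable pairs stay at inf."""
    n = len(adj_list)
    dist = np.full((n, n), np.inf)
    for s in range(n):
        dist[s, s] = 0.0
        queue = deque([s])
        while queue:
            u = queue.popleft()
            for v in adj_list[u]:
                if np.isinf(dist[s, v]):
                    dist[s, v] = dist[s, u] + 1.0
                    queue.append(v)
    return dist

def decay_mask(dist, gamma):
    """M[i, j] = gamma ** dist[i, j]; for 0 < gamma < 1, gamma ** inf == 0."""
    return np.power(gamma, dist)

def softmax(x):
    z = x - x.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def decayed_attention(scores, mask):
    """Softmax-normalised scores times the mask (assumption: no renormalisation)."""
    return softmax(scores) * mask

# Path graph 0-1-2-3 plus an isolated node 4
adj = [[1], [0, 2], [1, 3], [2], []]
dist = hop_distances(adj)
M = decay_mask(dist, gamma=0.5)
scores = np.zeros((5, 5))  # identical raw scores: plain softmax weights all nodes equally
attn = decayed_attention(scores, M)
print(attn[0])  # weights 0.2, 0.1, 0.05, 0.025, 0.0 for nodes 0..4
```

With identical raw scores, plain softmax gives every node a weight of 0.2, which is exactly the "equal focus" problem described earlier. The mask keeps the self-weight at 0.2, halves it with each additional hop, and zeroes the unreachable node.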

Gradformer achieves state-of-the-art results on five datasets, highlighting the effectiveness of the proposed method. On small datasets such as NCI1 and PROTEINS, it outperforms all 14 compared methods, with improvements of 2.13% and 2.28%, respectively. This suggests that Gradformer effectively incorporates inductive biases into the GT model, which matters most when training data is limited. It also performs well on larger datasets such as ZINC, indicating that it applies to datasets of different sizes.

The researchers also analyzed Gradformer's efficiency, comparing its training cost with SAN, Graphormer, and GraphGPS, focusing mainly on GPU memory usage and runtime. The comparison shows that Gradformer strikes a good balance between efficiency and accuracy, outperforming SAN and GraphGPS in both computational efficiency and accuracy. Although it has a longer runtime than Graphormer, it achieves higher accuracy, giving a favorable trade-off between resource usage and performance.

In conclusion, the researchers proposed Gradformer, which integrates GTs with intrinsic inductive biases by applying an exponential decay mask with learnable parameters to the attention matrix. Gradformer outperforms 14 GT and GNN methods, with improvements of 2.13% on NCI1 and 2.28% on PROTEINS. It can also maintain, or even exceed, the accuracy of shallow models as the network grows deeper. Future work on Gradformer includes (a) exploring whether state-of-the-art performance can be achieved without an MPNN component and (b) investigating whether the decay mask operation can improve GT efficiency.
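The summary says the decay mask has learnable parameters but not how they are parameterized. One common way to keep a decay rate inside (0, 1) while letting gradient descent update it is a sigmoid over an unconstrained parameter. The sketch below assumes that choice and also assumes one rate per attention head; both are hypothetical, not taken from the paper. It checks the analytic derivative of the mask, d·γ^d·(1 − γ), against finite differences, and assumes a connected graph so every hop distance is finite.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def head_masks(dist, theta):
    """One decay mask per head, with gamma_h = sigmoid(theta_h) so 0 < gamma_h < 1.

    dist:  (N, N) finite hop distances; theta: (H,) unconstrained parameters.
    Returns masks of shape (H, N, N) with masks[h] = gamma_h ** dist.
    """
    gamma = sigmoid(np.asarray(theta, dtype=float))
    return gamma[:, None, None] ** dist[None, :, :]

def head_masks_grad(dist, theta):
    """Analytic d(mask)/d(theta) = d * gamma**d * (1 - gamma), via the chain rule."""
    gamma = sigmoid(np.asarray(theta, dtype=float))[:, None, None]
    return dist[None, :, :] * gamma ** dist[None, :, :] * (1.0 - gamma)

dist = np.array([[0., 1., 2.], [1., 0., 1.], [2., 1., 0.]])  # path graph 0-1-2
theta = np.array([0.0, 2.0])  # head 0: gamma = 0.5; head 1: gamma ~ 0.88 (slower decay)
masks = head_masks(dist, theta)

# Central finite difference: each head's mask depends only on its own theta
eps = 1e-6
numeric = (head_masks(dist, theta + eps) - head_masks(dist, theta - eps)) / (2 * eps)
print(np.max(np.abs(numeric - head_masks_grad(dist, theta))))  # close to 0
```

In a deep-learning framework, automatic differentiation would supply this gradient; the explicit derivative here only confirms that the decay rate can be trained end to end under this parameterization.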

The post Gradformer: A Machine Learning Method that Integrates Graph Transformers (GTs) with the Intrinsic Inductive Bias by Applying an Exponential Decay Mask to the Attention Matrix appeared first on MarkTechPost.