In recent years, contrastive learning has become a powerful strategy for learning useful visual representations by aligning image and text embeddings. One of its difficulties, however, is the cost of computing pairwise similarities between every image and every text in a batch, which becomes substantial when training on large-scale datasets.
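To make that cost concrete, here is a minimal sketch of a CLIP-style symmetric contrastive loss in plain Python. It is an illustration under stated assumptions, not CLIP's actual implementation: the temperature of 0.07 and the helper names (`contrastive_loss`, `log_softmax_at`) are our own choices. The key point is the N × N similarity matrix, since every image in a batch must be scored against every caption.

```python
import math


def dot(u, v):
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def normalize(v):
    """Scale v to unit L2 norm so dot products become cosine similarities."""
    norm = math.sqrt(dot(v, v))
    return [x / norm for x in v]


def log_softmax_at(row, i):
    """Numerically stable log-softmax of row, evaluated at index i."""
    m = max(row)
    log_sum_exp = m + math.log(sum(math.exp(x - m) for x in row))
    return row[i] - log_sum_exp


def contrastive_loss(image_embs, text_embs, temperature=0.07):
    """Symmetric CLIP-style loss; pair i is (image_embs[i], text_embs[i]).

    Returns (loss, number_of_pairwise_similarities_computed).
    The temperature value is an assumption chosen for illustration.
    """
    images = [normalize(v) for v in image_embs]
    texts = [normalize(v) for v in text_embs]
    n = len(images)
    # N x N similarity matrix: every image is scored against every caption.
    logits = [[dot(img, txt) / temperature for txt in texts] for img in images]
    # Image -> text: row i should give its highest score to column i.
    loss_i2t = -sum(log_softmax_at(logits[i], i) for i in range(n)) / n
    # Text -> image: column j should give its highest score to row j.
    columns = [[logits[i][j] for i in range(n)] for j in range(n)]
    loss_t2i = -sum(log_softmax_at(columns[j], j) for j in range(n)) / n
    return (loss_i2t + loss_t2i) / 2, n * n
```

A batch of 4 pairs needs 16 similarities, but the count grows quadratically: a batch of 32,768 pairs would need over a billion, which is why large-batch contrastive training is expensive.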

In a recent paper, researchers from Apple have presented a new method for pre-training vision models on web-scale image-text data in a weakly supervised manner. Called CatLIP (Categorical Loss for Image-text Pre-training), the approach addresses the trade-off between efficiency and scalability that arises when pre-training on weakly labeled, web-scale image-text datasets.

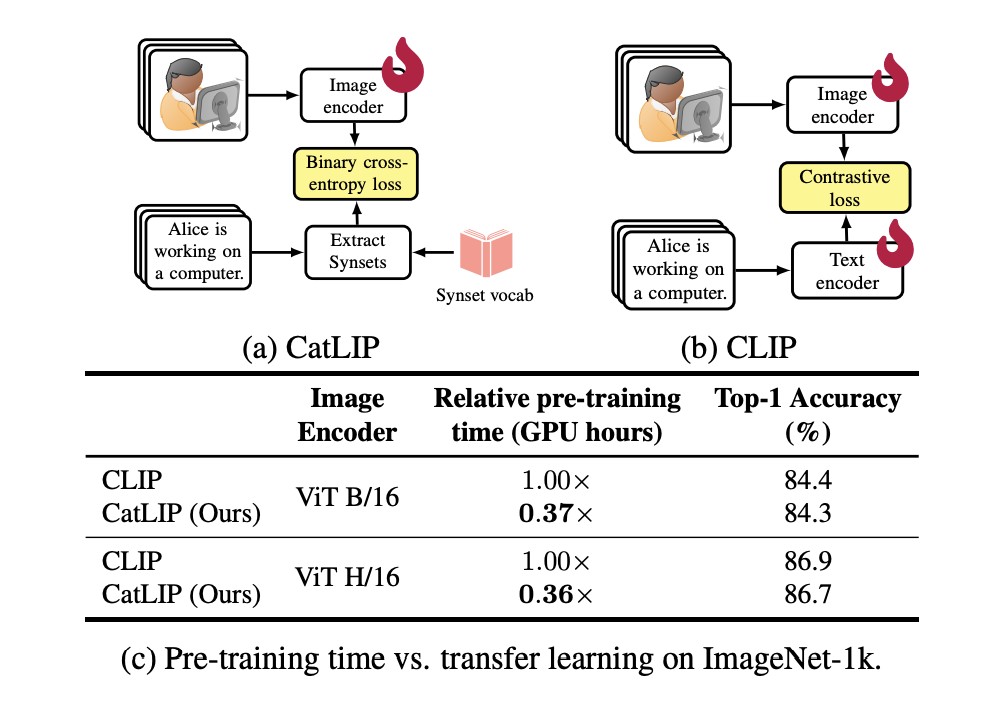

CatLIP treats image-text pre-training as a classification problem, using labels extracted from the text captions. According to the team, the method maintains performance on downstream tasks such as ImageNet-1k classification while being much more efficient to train than CLIP.
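The article does not describe how CatLIP extracts labels from captions, so the following is only a toy sketch of the general idea: match caption words against a fixed label vocabulary and produce a multi-hot target vector. The vocabulary `VOCAB`, the function `caption_to_labels`, and the naive plural stripping are all hypothetical illustrations; a real pipeline would use a much larger vocabulary and proper linguistic processing.

```python
import re

# Hypothetical label vocabulary; how CatLIP actually builds its label set
# is not described in the article.
VOCAB = ["dog", "cat", "ball", "grass", "car", "beach"]
LABEL_INDEX = {name: i for i, name in enumerate(VOCAB)}


def caption_to_labels(caption):
    """Return a multi-hot vector marking every vocabulary word found in the caption."""
    tokens = re.findall(r"[a-z]+", caption.lower())
    target = [0] * len(VOCAB)
    for tok in tokens:
        # Naive plural handling (an assumption): also try the token without a trailing "s".
        candidates = (tok, tok[:-1]) if tok.endswith("s") else (tok,)
        for cand in candidates:
            if cand in LABEL_INDEX:
                target[LABEL_INDEX[cand]] = 1
    return target
```

For example, `caption_to_labels("Two dogs chasing a ball on the grass")` returns `[1, 0, 1, 1, 0, 0]`, marking "dog", "ball", and "grass". Each image then gets a classification target rather than a caption to be contrasted against.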

The team assessed CatLIP through a broad set of experiments covering a range of vision tasks, such as object detection and image segmentation. The results show that, despite the change in training paradigm, CatLIP still learns high-quality representations that perform well across these tasks.

The team has summarized their primary contributions as follows.

By recasting pre-training on image-text data as a classification task, the study presents a novel way to speed up the pre-training of vision models on such data.
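Once each image has multi-hot targets, the training objective becomes an ordinary multi-label classification loss. The article does not name the exact loss, so this sketch assumes binary cross-entropy over logits, a common choice for multi-hot targets; the function names are illustrative. Unlike the contrastive loss, nothing here compares one sample with another sample's caption, so no batch-wide similarity matrix is needed.

```python
import math


def bce_with_logits(logit, target):
    """Binary cross-entropy for one logit, in the numerically stable form
    max(x, 0) - x * t + log(1 + exp(-|x|))."""
    return max(logit, 0.0) - logit * target + math.log1p(math.exp(-abs(logit)))


def classification_loss(batch_logits, batch_targets):
    """Mean binary cross-entropy over every (image, label) entry in the batch.

    batch_logits[k][c] is the classifier score of image k for label c, and
    batch_targets[k][c] is 1 if label c was extracted from caption k, else 0.
    Each image is scored only against the label classes, never against the
    other captions in the batch.
    """
    total, count = 0.0, 0
    for logits, targets in zip(batch_logits, batch_targets):
        for x, t in zip(logits, targets):
            total += bce_with_logits(x, t)
            count += 1
    return total / count
```

With a fixed number of label classes, doubling the batch size doubles the number of loss terms rather than quadrupling it, as happens with the N × N contrastive matrix.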

CatLIP benefits from data and model scaling, an effect that is especially noticeable in experiments with small amounts of image-text data. In these settings, training for longer yields much larger gains for CatLIP than for conventional contrastive learning methods such as CLIP.

The team has also proposed a technique for efficiently transferring knowledge from the pre-trained model to target tasks, using the embeddings associated with the target labels in the pre-trained classification layer. These embeddings can be used to initialize the classification layer of a downstream task, enabling data-efficient transfer learning.
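The initialization idea can be sketched as follows, under two assumptions the article does not confirm: downstream label names are matched to the pre-training vocabulary by exact string comparison, and unmatched labels fall back to small random weights. The function `init_head_from_pretrained` and its parameters are hypothetical.

```python
import random


def init_head_from_pretrained(pretrained_weights, pretrained_vocab, target_labels, seed=0):
    """Build initial classifier rows for a downstream task.

    pretrained_weights[i] is the pre-trained classification-layer row for
    pretrained_vocab[i]. Target labels found in the vocabulary reuse that row;
    labels not found get small random values (a hypothetical fallback).
    """
    dim = len(pretrained_weights[0])
    rng = random.Random(seed)
    index = {name: i for i, name in enumerate(pretrained_vocab)}
    head = []
    for label in target_labels:
        if label in index:
            # Copy the row so fine-tuning the new head leaves the pre-trained layer untouched.
            head.append(list(pretrained_weights[index[label]]))
        else:
            # No matching pre-training label: start from small random values.
            head.append([rng.gauss(0.0, 0.01) for _ in range(dim)])
    return head
```

For a downstream task with labels `["cat", "zebra", "dog"]`, the "cat" and "dog" rows start from what the model already learned during pre-training, so fewer labeled examples may be needed to fit the new head.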

Through extensive experiments on multiple downstream tasks, including object recognition and semantic segmentation, the team has demonstrated the effectiveness of the representations CatLIP learns. CatLIP achieves performance comparable to CLIP while pre-training 2.7× faster on the DataComp-1.3B dataset.

In conclusion, by recasting image-text pre-training as a classification problem, this research offers a new approach to pre-training vision models on large-scale image-text data. The strategy retains good representation quality across varied visual tasks while significantly reducing training time.


The post This AI Paper from Apple Introduces a Weakly-Supervised Pre-Training Method for Vision Models Using Publicly Available Web-Scale Image-Text Data appeared first on MarkTechPost.
