In the field of large language models (LLMs), developers and researchers face a significant challenge: accurately measuring and comparing the capabilities of different chatbot models. A good benchmark for evaluating these models should reflect real-world usage, distinguish between models' abilities, and be updated regularly to incorporate new data and avoid biases.

Traditionally, benchmarks for large language models, such as multiple-choice question-answering tests, have been static. They are rarely updated and fail to capture the nuances of real-world applications. They also may not clearly separate closely matched models, a distinction that is crucial for developers aiming to improve their systems.

LMSYS ORG developed 'Arena-Hard' to address these shortcomings. It is a data pipeline that builds benchmarks from live data collected on Chatbot Arena, a crowd-sourced platform where users continuously evaluate large language models. Because the benchmarks are drawn from this stream, they stay up to date and grounded in real user interactions, making Arena-Hard a more dynamic and relevant evaluation tool.

To apply this approach to real-world LLM benchmarking:

Continuously Update the Predictions and Reference Outcomes: As new data or models become available, the benchmark should update its predictions and recalibrate based on actual performance outcomes.

Incorporate a Diversity of Model Comparisons: Ensure a wide range of model pairs is considered to capture various capabilities and weaknesses.

Transparent Reporting: Regularly publish details on the benchmark’s performance, prediction accuracy, and areas for improvement.
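The first guideline, continuously updating predictions and recalibrating them against actual outcomes, can be illustrated with an online Elo-style rating update of the kind often used for pairwise model leaderboards. This is a minimal sketch under stated assumptions, not Arena-Hard's own code: the function names, the K-factor of 32, the initial rating of 1000, and the model names are all hypothetical.

```python
from collections import defaultdict


def expected_score(r_a: float, r_b: float) -> float:
    """Predicted probability that model A beats model B under the Elo model."""
    return 1.0 / (1.0 + 10 ** ((r_b - r_a) / 400))


def update_ratings(ratings, battles, k=32.0, initial=1000.0):
    """Recalibrate ratings from a batch of (model_a, model_b, outcome) battles.

    outcome is 1.0 if A wins, 0.0 if B wins, 0.5 for a tie.
    K-factor and initial rating are illustrative assumptions.
    """
    # Copy the input so the caller's dict is not mutated; unseen models
    # start at the (hypothetical) initial rating.
    ratings = defaultdict(lambda: initial, ratings)
    for a, b, outcome in battles:
        # Compare the prediction with the observed outcome and shift both
        # ratings by the error; the update is zero-sum.
        delta = k * (outcome - expected_score(ratings[a], ratings[b]))
        ratings[a] += delta
        ratings[b] -= delta
    return dict(ratings)


# Usage with hypothetical model names: one battle, then a later batch.
ratings = update_ratings({}, [("model-x", "model-y", 1.0)])
ratings = update_ratings(ratings, [("model-y", "model-x", 0.5)])
print(ratings)
```

Passing the previous ratings back in with each new batch of battles continues the calibration instead of starting over, which is the property the guideline asks for.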

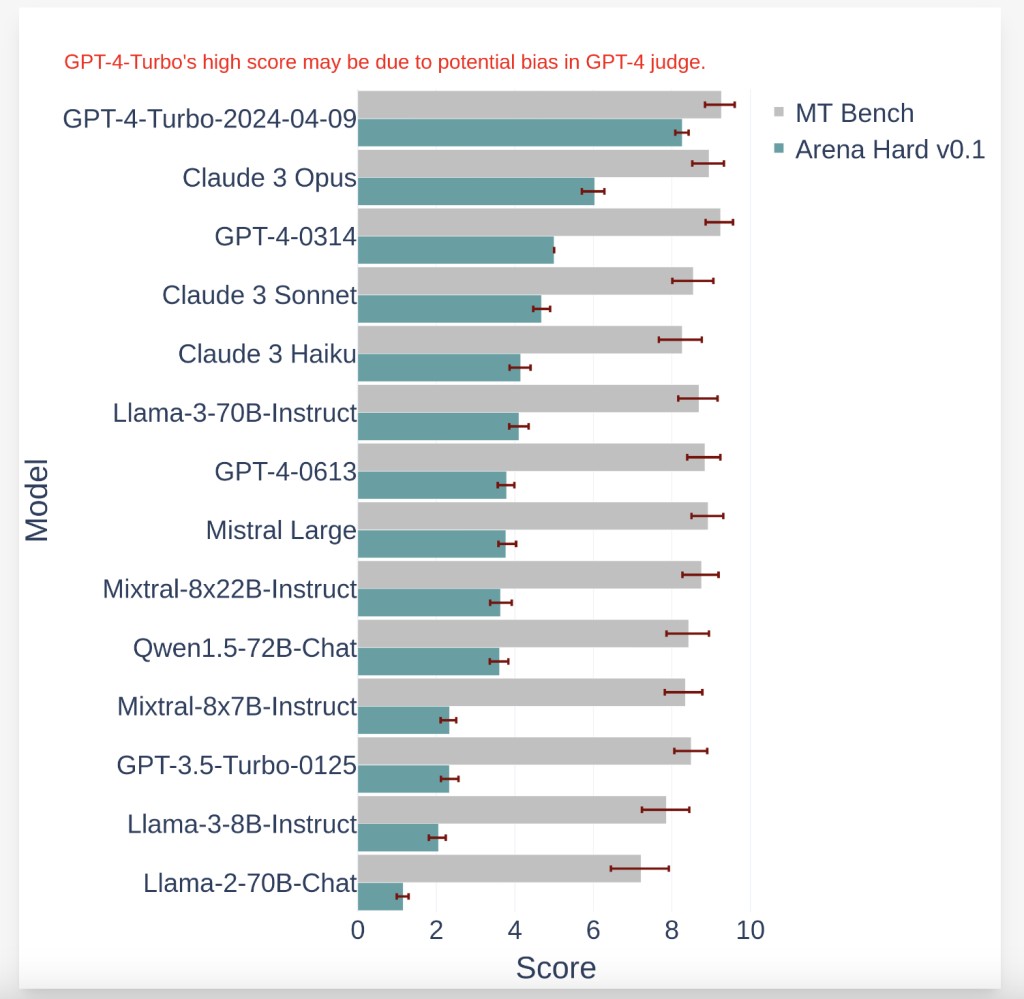

Arena-Hard's effectiveness is measured with two primary metrics: agreement with human preferences, and separability, its capacity to distinguish models by performance. Compared with existing benchmarks, Arena-Hard performed significantly better on both. It showed a high rate of agreement with human preferences, and it was better at distinguishing top-performing models: a notable share of model pairs had non-overlapping confidence intervals, meaning the benchmark could tell those models apart with confidence.
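The two metrics can be made concrete with a small sketch. Assuming each model has a list of per-prompt benchmark scores, separability can be computed as the fraction of model pairs whose bootstrapped confidence intervals do not overlap, and agreement as the fraction of model pairs that the benchmark orders the same way as a human preference ranking. This is a simplified illustration, not Arena-Hard's exact metric definitions; the function names, the 1,000 bootstrap resamples, and the 95% interval are assumptions.

```python
import random
from itertools import combinations


def bootstrap_ci(scores, n_boot=1000, alpha=0.05, seed=0):
    """Percentile bootstrap confidence interval for the mean of `scores`."""
    rng = random.Random(seed)
    n = len(scores)
    # Resample with replacement and record the mean of each resample.
    means = sorted(
        sum(rng.choice(scores) for _ in range(n)) / n for _ in range(n_boot)
    )
    lo = means[int((alpha / 2) * n_boot)]
    hi = means[int((1 - alpha / 2) * n_boot) - 1]
    return lo, hi


def separability(cis):
    """Fraction of model pairs whose (lo, hi) intervals do not overlap.

    `cis` maps model name -> (lo, hi); requires at least two models.
    """
    pairs = list(combinations(cis, 2))
    separated = sum(
        1 for a, b in pairs if cis[a][1] < cis[b][0] or cis[b][1] < cis[a][0]
    )
    return separated / len(pairs)


def agreement(bench_scores, human_scores):
    """Fraction of model pairs ranked in the same order by both score maps."""
    pairs = list(combinations(bench_scores, 2))
    agree = sum(
        1
        for a, b in pairs
        # Same sign of difference means the same pairwise ordering.
        if (bench_scores[a] - bench_scores[b]) * (human_scores[a] - human_scores[b]) > 0
    )
    return agree / len(pairs)


# Usage with hypothetical intervals and scores for three models.
cis = {"a": (0.1, 0.2), "b": (0.3, 0.4), "c": (0.35, 0.5)}
print(separability(cis))
print(agreement({"a": 1, "b": 2, "c": 3}, {"a": 1, "b": 3, "c": 2}))
```

In this toy example, models "b" and "c" have overlapping intervals, so only two of the three pairs count as separated. A benchmark with higher separability can tell more closely matched models apart.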

In conclusion, Arena-Hard represents a significant advance in benchmarking language model chatbots. By drawing on live user data and focusing on metrics that capture both agreement with human preferences and clear separation between models, it gives developers a more accurate, reliable, and relevant evaluation tool. This can drive the development of more effective and nuanced language models, ultimately improving user experience across a range of applications.

Check out the GitHub page and Blog. All credit for this research goes to the researchers of this project.



The post LMSYS ORG Introduces Arena-Hard: A Data Pipeline to Build High-Quality Benchmarks from Live Data in Chatbot Arena, which is a Crowd-Sourced Platform for LLM Evals appeared first on MarkTechPost.
