Graphs are essential for representing complex relationships in domains such as social networks, knowledge graphs, and molecular discovery. Beyond their topological structure, nodes often carry textual features that provide additional context. Graph Machine Learning (Graph ML), and Graph Neural Networks (GNNs) in particular, has emerged as an effective way to model such data, using a message-passing mechanism in which nodes iteratively aggregate information from their neighbors to capture high-order relationships. With the rise of Large Language Models (LLMs), a growing line of work integrates LLMs with GNNs to tackle diverse graph tasks and to improve generalization through self-supervised learning. The rapid evolution and immense potential of Graph ML call for a comprehensive review of its recent advances.
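
To make the message-passing idea concrete, here is a minimal sketch in Python with NumPy (the survey itself does not prescribe any code). In each round, every node replaces its features with the mean of its own and its neighbors' features; the function name `mean_message_passing` and the toy path graph are illustrative assumptions. Stacking rounds is what lets a GNN capture high-order relationships: after k rounds, a signal placed on one node has spread to every node within k hops.

```python
import numpy as np


def mean_message_passing(adj: np.ndarray, features: np.ndarray) -> np.ndarray:
    """One round of message passing: each node averages its own features
    with those of its direct neighbors (unweighted mean aggregation)."""
    adj_with_self = adj + np.eye(adj.shape[0])       # self-loops keep a node's own state
    degree = adj_with_self.sum(axis=1, keepdims=True)
    return (adj_with_self / degree) @ features       # row-normalized = neighborhood mean


# Path graph 0 - 1 - 2 - 3 with a signal placed only on node 0.
adj = np.array([[0, 1, 0, 0],
                [1, 0, 1, 0],
                [0, 1, 0, 1],
                [0, 0, 1, 0]], dtype=float)
x = np.array([[1.0], [0.0], [0.0], [0.0]])

h = x
for k in range(1, 4):
    h = mean_message_passing(adj, h)
    # After k rounds, the signal has reached exactly the nodes within k hops of node 0.
    print(k, np.round(h.ravel(), 3))
```

For example, node 3 is three hops from node 0, so its value stays at zero until the third round. Real GNN layers add learnable weights and non-linearities on top of this aggregation, but the hop-by-hop spread of information is the same.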

Early graph learning methods, such as random walks and graph embedding, laid the foundation by learning node representations that preserve graph topology. GNNs, powered by deep learning, then brought significant advances, with techniques such as Graph Convolutional Networks (GCNs), which improve node representations by aggregating neighborhood features, and Graph Attention Networks (GATs), which learn to focus on the most relevant neighbors. More recently, the advent of LLMs has sparked further innovation, with models such as GraphGPT and GLEM applying language-model techniques to understand and manipulate graph structures. In the broader AI landscape, Foundation Models (FMs) have revolutionized natural language processing (NLP) and computer vision; Graph Foundation Models (GFMs), however, are still at an early stage and require further exploration to advance Graph ML capabilities.
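
The difference between GCNs and GATs can be illustrated with a small NumPy sketch. The GCN layer below uses the common symmetric-normalized propagation rule, in which every neighbor contributes with a fixed weight determined by node degrees; the GAT-style layer instead computes an attention weight for each edge, which is how it can emphasize more relevant neighbors. Both are single-layer, single-head simplifications with random parameters, and the helper names `gcn_layer` and `gat_layer` and the toy graph are hypothetical.

```python
import numpy as np


def gcn_layer(adj: np.ndarray, h: np.ndarray, w: np.ndarray) -> np.ndarray:
    """GCN layer: ReLU(D^-1/2 (A + I) D^-1/2 H W), with fixed, degree-based edge weights."""
    a_hat = adj + np.eye(adj.shape[0])            # add self-loops
    d_inv_sqrt = np.diag(1.0 / np.sqrt(a_hat.sum(axis=1)))
    norm_adj = d_inv_sqrt @ a_hat @ d_inv_sqrt    # symmetric normalization
    return np.maximum(norm_adj @ h @ w, 0.0)      # ReLU


def gat_layer(adj: np.ndarray, h: np.ndarray, w: np.ndarray, a: np.ndarray,
              negative_slope: float = 0.2):
    """Single-head GAT-style layer: one attention weight per edge."""
    z = h @ w                                     # project features: (n, f_out)
    f_out = z.shape[1]
    # a^T [z_i || z_j] splits into a score for target i plus a score for neighbor j.
    e = (z @ a[:f_out])[:, None] + (z @ a[f_out:])[None, :]
    e = np.where(e > 0, e, negative_slope * e)    # LeakyReLU
    mask = (adj + np.eye(adj.shape[0])) > 0       # attend to neighbors and self only
    e = np.where(mask, e, -np.inf)
    e = e - e.max(axis=1, keepdims=True)          # numerically stable softmax
    alpha = np.exp(e)
    alpha = alpha / alpha.sum(axis=1, keepdims=True)
    return np.maximum(alpha @ z, 0.0), alpha      # ReLU chosen here for simplicity


rng = np.random.default_rng(0)
adj = np.array([[0, 1, 1, 0],
                [1, 0, 1, 0],
                [1, 1, 0, 1],
                [0, 0, 1, 0]], dtype=float)
x = rng.normal(size=(4, 3))   # 4 nodes, 3 input features
w = rng.normal(size=(3, 2))   # shared projection to 2 output features
a = rng.normal(size=4)        # attention vector of length 2 * f_out

h_gcn = gcn_layer(adj, x, w)
h_gat, alpha = gat_layer(adj, x, w, a)
print(h_gcn.shape, h_gat.shape)  # (4, 2) (4, 2)
print(np.round(alpha, 3))        # each row sums to 1; zero where there is no edge
```

In a trained model, `w` and `a` would be learned by gradient descent; the key contrast is that the GCN's neighbor weights depend only on degrees, while `alpha` depends on the node features themselves.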

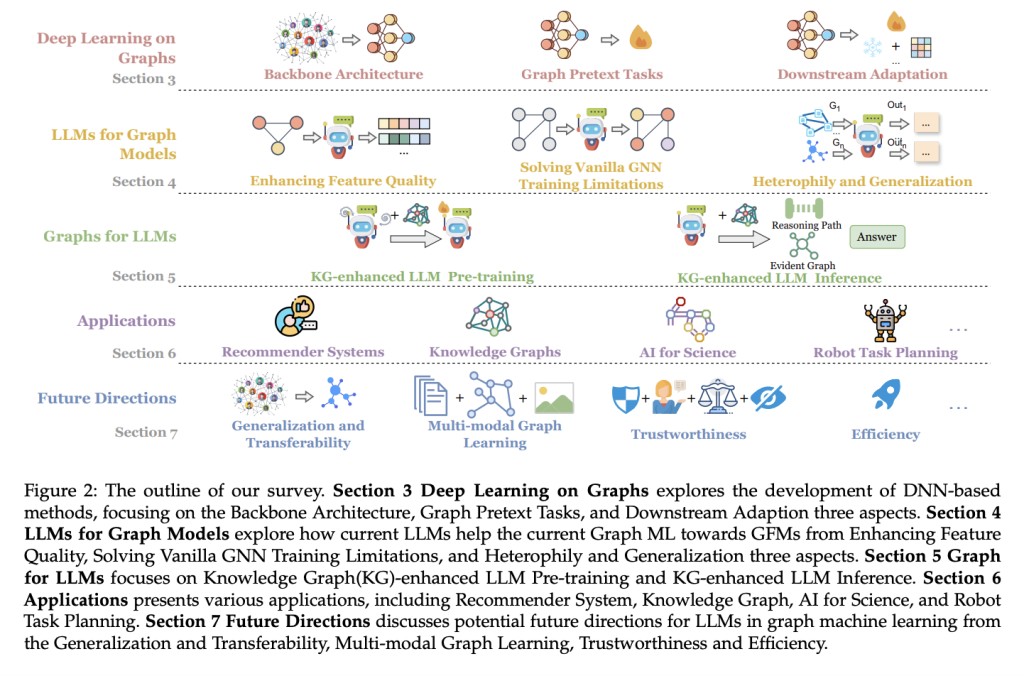

In this survey, researchers from Hong Kong Polytechnic University, Wuhan University, and North Carolina State University aim to provide a thorough review of Graph ML in the era of LLMs. The key contributions of this research are the following:

- Tracing the evolution from early graph learning methods to the latest GFMs in the era of LLMs.
- Comprehensively analyzing current LLM-enhanced Graph ML methods, highlighting their advantages and limitations and offering a systematic categorization.
- Thoroughly investigating the potential of graph structures to address the limitations of LLMs.
- Exploring the applications and promising future directions of Graph ML, covering both research and practical applications in various fields.

Graph ML based on GNNs faces inherent limitations, including its reliance on labeled data and its use of shallow text embeddings that limit how much semantic information can be extracted from node text. LLMs offer a remedy: they can process natural language directly, make zero-shot and few-shot predictions, and provide a unified feature space. The researchers examined how LLMs can enhance Graph ML by improving feature quality and aligning feature spaces, drawing on their large parameter counts and rich open-world knowledge to address these challenges. They also discussed applications of Graph ML in fields such as robot task planning and AI for science.
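
One common way to use an LLM for zero-shot prediction on a text-attributed graph is to describe a node and part of its neighborhood in natural language and ask the model for a label. The sketch below only builds such a prompt and does not call any model. The function `build_zero_shot_prompt`, the prompt wording, and the toy paper descriptions are all hypothetical and are not taken from the survey.

```python
from typing import Dict, List


def build_zero_shot_prompt(
    node: int,
    texts: Dict[int, str],
    neighbors: Dict[int, List[int]],
    labels: List[str],
    max_neighbors: int = 3,
) -> str:
    """Verbalize a node and a few of its neighbors into a prompt that asks an
    LLM to choose one label, with no task-specific training (hypothetical template)."""
    lines = [f"Target node text: {texts[node]}"]
    # Include at most `max_neighbors` neighbors so the prompt stays short.
    for nb in neighbors.get(node, [])[:max_neighbors]:
        lines.append(f"Connected node text: {texts[nb]}")
    lines.append(
        "Question: which category best describes the target node? "
        f"Answer with one of: {', '.join(labels)}."
    )
    return "\n".join(lines)


# Toy citation-style graph with made-up node descriptions.
texts = {
    0: "Paper on attention-based node classification",
    1: "Paper on convolutional layers for graphs",
    2: "Paper on predicting molecular properties",
    3: "Paper on protein folding",
}
neighbors = {0: [1, 2, 3], 1: [0], 2: [0, 3], 3: [0, 2]}
labels = ["machine learning", "biology", "chemistry"]

print(build_zero_shot_prompt(0, texts, neighbors, labels, max_neighbors=2))
```

Capping the number of neighbors keeps the prompt within a model's context window, which matters for high-degree nodes in large graphs.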

Although LLMs show great promise for building GFMs, their operational efficiency when processing large and complex graphs remains a concern. Current practices such as calling APIs like GPT-4 can incur high costs, while deploying large open-source models like LLaMA requires substantial computational resources and storage. To address these issues, recent studies propose parameter-efficient fine-tuning techniques such as LoRA and QLoRA. Model pruning is another promising direction, simplifying LLMs for graph machine learning by removing redundant parameters or structures.
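
Both efficiency ideas can be illustrated on a single weight matrix with NumPy. LoRA keeps a pretrained weight W frozen and learns only a low-rank update B·A of rank r, so the number of trainable parameters drops from d_out × d_in to r × (d_in + d_out); QLoRA additionally quantizes the frozen base model to 4 bits, which this sketch does not show. The pruning function applies simple unstructured magnitude pruning, one of several pruning strategies. The matrix sizes, rank, scaling factor, and random stand-in "pretrained" weights are assumptions chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
d_in, d_out, r, alpha = 512, 512, 8, 16  # illustrative sizes, LoRA rank, and scaling

# Stand-in for a pretrained weight matrix; it stays frozen during fine-tuning.
w_frozen = rng.normal(scale=0.02, size=(d_out, d_in))

# LoRA factors: A is randomly initialized and B starts at zero, so the
# adapted layer initially behaves exactly like the frozen one.
lora_a = rng.normal(scale=0.01, size=(r, d_in))
lora_b = np.zeros((d_out, r))


def lora_forward(x: np.ndarray) -> np.ndarray:
    """y = x W^T + (alpha / r) * x A^T B^T; only A and B would be trained."""
    return x @ w_frozen.T + (alpha / r) * (x @ lora_a.T @ lora_b.T)


def magnitude_prune(w: np.ndarray, sparsity: float) -> np.ndarray:
    """Unstructured magnitude pruning: zero out the `sparsity` fraction of
    entries with the smallest absolute values (ties may remove a few more)."""
    k = int(sparsity * w.size)
    if k == 0:
        return w.copy()
    threshold = np.partition(np.abs(w).ravel(), k - 1)[k - 1]
    return np.where(np.abs(w) > threshold, w, 0.0)


full_params = w_frozen.size
lora_params = lora_a.size + lora_b.size
print(f"full fine-tuning: {full_params} trainable params, LoRA: {lora_params}")

x = rng.normal(size=(2, d_in))
print(np.allclose(lora_forward(x), x @ w_frozen.T))  # True: B = 0 at initialization

pruned = magnitude_prune(w_frozen, sparsity=0.5)
print(f"fraction of weights removed: {np.mean(pruned == 0):.2f}")
```

With the 512 × 512 matrix and rank 8 used here, LoRA trains 8,192 parameters instead of 262,144 (about 3%).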

In conclusion, the researchers conducted a comprehensive survey that traces the evolution of graph learning methods and analyzes current LLM-enhanced Graph ML techniques. Despite these advances, challenges in operational efficiency persist. Recent studies, however, suggest techniques such as parameter-efficient fine-tuning and model pruning to overcome these obstacles, signaling continued progress in the field.

The post Integrating Large Language Models with Graph Machine Learning: A Comprehensive Review appeared first on MarkTechPost.