Snowflake AI Research has launched Arctic, an open-source large language model (LLM) designed for enterprise AI applications, with an emphasis on cost-effectiveness and accessibility. The model uses a Dense-MoE Hybrid transformer architecture to handle SQL generation, coding, and instruction following efficiently. Because it can operate under lower computing budgets, Arctic offers a high-performance option for businesses that want to integrate advanced AI without the substantial costs typically involved.

Snowflake Arctic includes two variants: Arctic Base and Arctic Instruct.

Both models are hosted on Hugging Face and are readily accessible for use. Arctic Base provides a foundation for general AI tasks and is suitable for most enterprise needs. Arctic Instruct is tuned for instruction following, offering more precise command responses and better query handling. Both versions are designed to be adaptable and scalable to meet diverse business requirements.

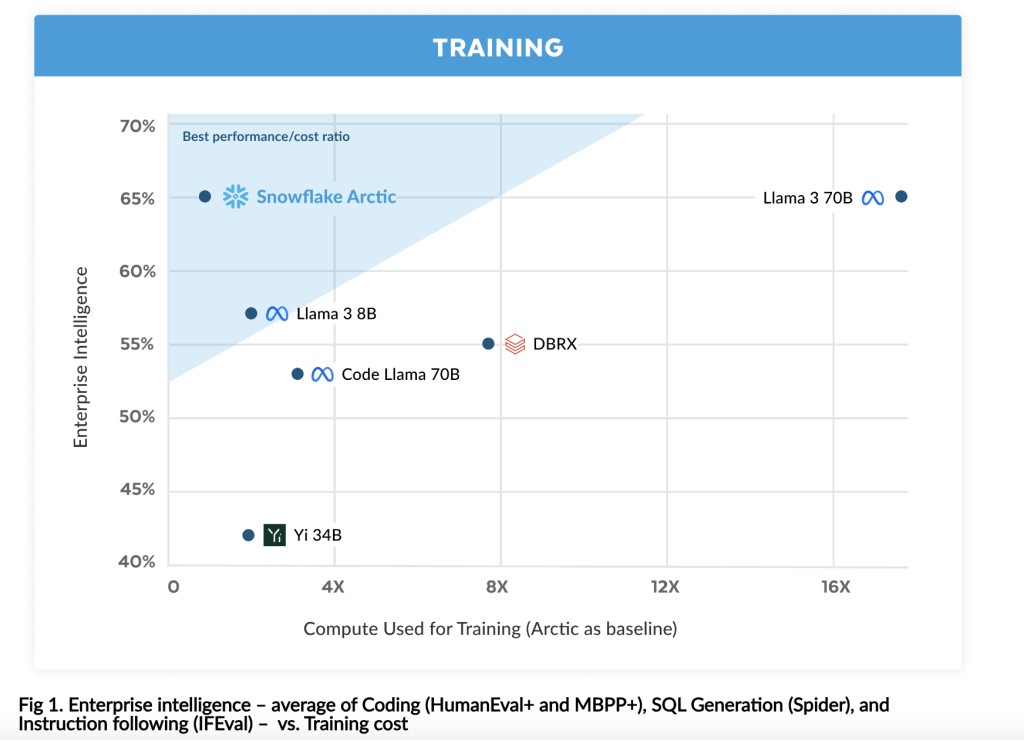

Arctic’s training process was notably efficient, using less than $2 million in compute, a fraction of what is typically required. This efficiency comes from its architecture, which combines a 10B dense transformer with a 128×3.66B MoE MLP to balance cost and performance. This approach allows Arctic to deliver strong enterprise intelligence while remaining competitive across metrics such as coding, SQL generation, and instruction following.
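
To see how the quoted figures relate to the 480B headline number, the parameter arithmetic can be sketched in a few lines of Python. The dense size, expert count, and per-expert size come from the paragraph above. The number of experts active per token is not stated in this article; the value 2 (top-2 routing) used below is an assumption taken from Snowflake's public description of the model, and the function names are purely illustrative.

```python
# Figures quoted in the article: a 10B dense transformer plus a
# mixture-of-experts MLP with 128 experts of 3.66B parameters each.
DENSE_PARAMS_B = 10.0
NUM_EXPERTS = 128
EXPERT_PARAMS_B = 3.66

# ASSUMPTION (not stated in this article): two experts are routed per token.
ASSUMED_ACTIVE_EXPERTS = 2


def total_params_b(dense: float = DENSE_PARAMS_B,
                   experts: int = NUM_EXPERTS,
                   per_expert: float = EXPERT_PARAMS_B) -> float:
    """Total parameter count in billions: dense part plus every expert."""
    return dense + experts * per_expert


def active_params_b(dense: float = DENSE_PARAMS_B,
                    active_experts: int = ASSUMED_ACTIVE_EXPERTS,
                    per_expert: float = EXPERT_PARAMS_B) -> float:
    """Parameters used per token in billions, under the routing assumption."""
    return dense + active_experts * per_expert


if __name__ == "__main__":
    print(f"total parameters:  {total_params_b():.2f}B")
    print(f"active parameters: {active_params_b():.2f}B (assumes top-2 routing)")
```

The total works out to roughly 478B, which rounds to the advertised 480B. Under the routing assumption, only a small fraction of those parameters is used for any given token, which is where the lower compute cost comes from.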

Snowflake Arctic is open-sourced under the Apache 2.0 license to improve transparency and collaboration, providing ungated access to both the model weights and the underlying code. This supports technological innovation and fosters a community around Arctic, enabling developers and researchers to build upon and extend the model’s capabilities. The open-source release includes comprehensive documentation and data recipes, which help users customize the model for their needs.

Getting started with Snowflake Arctic is possible through platforms such as Hugging Face, AWS, and the NVIDIA API Catalog, among others. Users can download the model variants directly and follow detailed tutorials for setting up Arctic for inference, from basic setups to more complex deployments. This ease of access allows Arctic to be integrated into enterprise environments quickly.
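
As a rough illustration of the download step, the sketch below fetches one of the two variants with the `huggingface_hub` library. The repository IDs are assumptions based on Snowflake's naming on the Hub and should be verified before use, and the helper names `arctic_repo_id` and `download_arctic` are hypothetical. Note that the full checkpoint is very large, so this is a starting point rather than a tested deployment recipe.

```python
# ASSUMED Hugging Face repository IDs; check them on the Hub before relying on them.
ARCTIC_REPOS = {
    "base": "Snowflake/snowflake-arctic-base",
    "instruct": "Snowflake/snowflake-arctic-instruct",
}


def arctic_repo_id(variant: str) -> str:
    """Map a variant name ('base' or 'instruct') to its assumed repo ID."""
    key = variant.strip().lower()
    if key not in ARCTIC_REPOS:
        raise ValueError(f"unknown variant {variant!r}; expected one of {sorted(ARCTIC_REPOS)}")
    return ARCTIC_REPOS[key]


def download_arctic(variant: str, local_dir: str) -> str:
    """Download the chosen variant's files and return the local path.

    The import is done lazily so the mapping helper above can be used
    without huggingface_hub installed.
    """
    from huggingface_hub import snapshot_download

    return snapshot_download(repo_id=arctic_repo_id(variant), local_dir=local_dir)


if __name__ == "__main__":
    # Only prints the repo ID; call download_arctic(...) explicitly to fetch weights.
    print(arctic_repo_id("instruct"))
```

Keeping the download behind an explicit function call avoids pulling the weights by accident when the module is imported or run.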

In conclusion, Snowflake Arctic represents a significant leap forward in enterprise AI. By dramatically reducing the cost and complexity of deploying advanced AI solutions, Arctic enables businesses of all sizes to harness the power of large language models. Its open-source nature and robust support ecosystem further enhance its value, making it an ideal choice for organizations seeking to innovate and excel in today’s competitive market.

The post Snowflake AI Research Team Unveils Arctic: An Open-Source Enterprise-Grade Large Language Model (LLM) with a Staggering 480B Parameters appeared first on MarkTechPost.
