Amazon Aurora backs up cluster volumes automatically and retains restore data for the length of the backup retention period. Aurora automated backups are continuous and incremental, so you can restore to any point within the backup retention period from 1–35 days. If you need to retain backups for more than 35 days, you can take manual snapshots or snapshot export, which never expire. Manual snapshots incur full snapshot costs as long as they are stored. The increase in costs highlights the need for a cost-effective solution to retain backups for a long time.

In this post, we discuss how to reduce the Aurora backup cost for long retention periods by using MySQL Shell integrated with Amazon Simple Storage Service (Amazon S3). MySQL Shell can help back up your Amazon Aurora MySQL-Compatible Edition database using multiple threads, compress it and then upload it to Amazon S3 in one step. These backups can then be lifecycled and archived to low-cost cold storage Amazon S3 Glacier storage classes. You can restore a temporary copy as needed to an S3 bucket from S3 Glacier storage classes for a specified duration.

Solution overview

MySQL Shell provides dump and data-loading utilities to backup your database with various options to Amazon S3 in a compressed format. For example, you can use the MySQL Shell dump utility to dump an entire instance, or specific schemas, or particular tables from a schema.

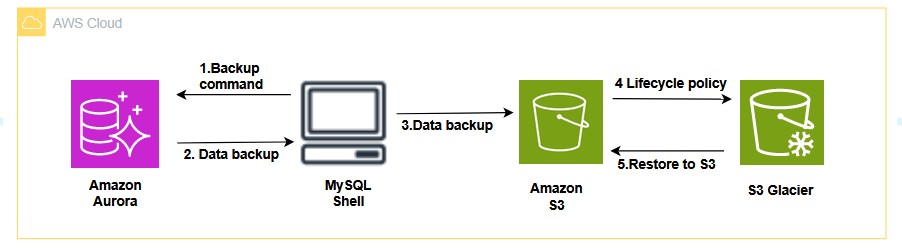

The following diagram illustrates the architecture for this solution

The workflow includes the following steps:

Connect to the Aurora database from MySQL Shell and start a database backup to Amazon S3.

MySQL Shell connects to Aurora and runs the database backup.

MySQL Shell backs up the dump files to Amazon S3.

Configure an S3 Life Cycle configuration to move the backup dump files from Amazon S3 to S3 Glacier.

Restore the backup files from S3 Glacier to Amazon S3 when needed.

Prerequisites

To complete this walk through, you must have MySQL Shell installed on an Amazon Elastic Compute Cloud (Amazon EC2) instance within the same VPC environment as Aurora. In this section, we provide setup details using Linux, Windows, and macOS.

To install MySQL Shell on Linux, complete the following steps. For more details, refer to Installing MySQL Shell on Linux

For license details, refer to the Licensing Information User Manual.

Download the MySQL Yum repository:x

Install the downloaded package:

Run the following command to update the package database:

Install MySQL Shell:

Verify MySQL Shell installation by running the following command:

Set up credentials for the AWS Command Line Interface ( AWS CLI ) before you connect to MySQL Shell.

Make sure you have connectivity between the MySQL Shell client and the Aurora database.

To set up MySQL Shell using Windows, refer to Installing MySQL Shell on Microsoft Windows.

For macOS, refer to Installing MySQL Shell on macOS.

Connect to MySQL Shell

Start MySQL Shell by issuing the following command:

This opens MySQL Shell without connecting to a server, by default in JavaScript mode. You can change modes using the sql, py, and js commands.

Connect to the Aurora MySQL database

Connect to the Aurora MySQL database with a user that has sufficient privileges to backup the database using an Aurora endpoint by issuing the following command:

For more information, refer to refer to MySQL shell commands.

Compress and upload data using Amazon S3

Now you can back up the database to Amazon S3 in compressed format in one step. You can use different options to back up the entire instance, specific schemas, specific tables from a schema, or all the views and tables from the specific schema. Backup commands run at the MySQL Shell prompt and data goes through the client to Amazon S3. You should size your client EC2 instance appropriately based on the number of threads you are going to use.

Dump the entire instance:Â

The following example illustrates how to dump a MySQL instance to a folder, called test, in an S3 bucket, called Bucket001, containing multiple threads with some compatibility options:

The output shows the compression ratio:

Dump specific schemas:

The following code dumps the world schema from the connected MySQL instance to an S3 bucket, with some compatibility modifications:

Dump specific tables from a schema

The following code dumps the table sbtest1 from the schema mylab to an S3 bucket, with multiple threads with some compatibility options:

Dump all views and tables from a specified schema

The following code dumps all views and tables from a specific schema:

Move backups to S3 Glacier storage classes

To retain backups long term or indefinitely at reduced cost, you can apply a lifecycle policy to your data in Amazon S3 storage to move it to S3 Glacier storage classes. For instance, to retain files for more than 35 days using S3 Glacier Flexible Retrieval, apply a lifecycle policy with the following code:

Restore a backup from S3 Glacier storage classes to Amazon S3

To access your backups archived in S3 Glacier storage classes, use the RestoreObject operation. This restores a temporary copy of the backup file to its S3 bucket for the specified duration. Now the restore object remains available in the S3 bucket for GET requests, until it expires. If a permanent copy of the object is needed, restore the backup and then create a copy of it in an S3 bucket.

Use the restore-object command to restore objects from S3 Glacier Flexible Retrieval. The following example restores the object dir1/example.obj in DOC-EXAMPLE-BUCKET for 25 days:

If the JSON syntax used in the example results in an error on a Windows client, replace the restore request with the following syntax:

To monitor the status of the restore-object request, use the following command:

Restore backup from Amazon S3 to an Aurora MySQL instance

To restore data from Amazon S3 to an Aurora MySQL instance, create an AWS Identity and Access Management (IAM) role to delegate permissions from Amazon Relational Database Service (Amazon RDS) to your S3 bucket. When you create an IAM role, you attach trust and permission policies. For more information, refer to Creating a role to delegate permissions to an AWS service.

You can import data from Amazon S3 to a new Aurora MySQL DB instance using the MySQL Shell util.loadDump utility. The following example shows the load of a MySQL dump from a folder, called test, in an S3 bucket, called Bucket001:

For Windows, refer to Restoring a backup into a MySQL DB instance

Cost comparison

We analyzed the AWS storage costs for size of 1 TB of an Aurora snapshot, Amazon S3, and S3 Glacier storage classes for different retention periods and found a cost savings of up to 59% for Amazon S3 standard backup storage and up to 580% for S3 Glacier Flexible Retrieval storage classes compared to an Aurora snapshot. The following table shows the costs for the US East (N. Virginia) AWS Region. Note that these number are based on our test, and the savings may change based on the type of data you have and the compression ratio of resulting dump file.

ServiceÂ

Monthly Cost

( $ Per GB)

Size (TB)

Month

1 Year

7 Years

Amazon Aurora Snapshot

$0.021

1

$21.50

$258.048

$1,806

Amazon S3 Standard-Infrequent

$0.012

1

$12.80

$153.600

$1,075

Amazon S3 Glacier Flexible Retrieval

$0.0036

1

$3.68

$44.230

$309

For more information about pricing in different Regions, refer to Amazon Aurora pricing and Amazon S3 pricing.

Clean up

To prevent incurring future expenses, after you test the solution, delete your resources with the following steps:

Delete the backup file from the S3 bucket using the AWS CLI:

Delete the backup archive file from Amazon S3 bucket using AWS CLI:

Delete the Aurora MySQL instance from the DB cluster or Aurora cluster.

Delete the EC2 instance.

Conclusion

In this post, we explored how to reduce the cost for long-retention Aurora backups using MySQL Shell, Amazon S3, and S3 Glacier storage classes. This approach provides the flexibility to take a backup of an entire instance, specific schemas, or specific tables, and also a backup of all views and tables of specific schemas, using multiple threads for faster backups and in compressed format in a single step. This process allows you to avoid the cost of data export to Amazon S3 and manual snapshots for longer retention. MySQL Shell offers a straight forward and cost-effective way to back up your Aurora MySQL databases for longer retention periods.

Test the procedure outlined in this post by deploying the sample code provided and share your feedback in the comments section.

About the Authors

Pooja Singh is a Senior Technical Account Manager within Enterprise Support at Amazon Web Services (AWS). Pooja provides strategic technical guidance for independent software vendors (ISVs) to innovate and operate their workloads efficiently on AWS.

Rekha Anupati  is a Database Specialist SA. She specializes in assisting customers migrate and modernize their databases to AWS cloud. In her spare time, she loves spending time with family and friends.

Aditya Samant is a relational database industry veteran with over 2 decades of experience working with commercial and open-source databases. He currently works at Amazon Web Services as a Pr. Database Specialist Solutions Architect. In his role, he spends his time working with customers designing scalable, secure and robust cloud native architectures. Aditya also works closely with the service teams and collaborates on designing and delivery of the new features for Amazon’s flagship relational database, Amazon Aurora.

Source: Read More