AWS recently announced Amazon Relational Database Service (Amazon RDS) for Db2 as a supported target for AWS Database Migration Service (AWS DMS). This addition allows you to move your database workloads from self-managed Db2 LUW databases to Amazon RDS for Db2 or another self-managed Db2 LUW instance. By using the migration capabilities of AWS DMS, you can now migrate your existing databases and replicate ongoing changes to Db2 LUW to make the switch fast and simple with near zero downtime.

In this post, we show how to improve the performance of full load and ongoing replication from a self-managed Db2 instance to Amazon RDS for Db2. A well-tuned task allows you to keep latency low and perform fast cutovers to the target DB instance.

Solution overview

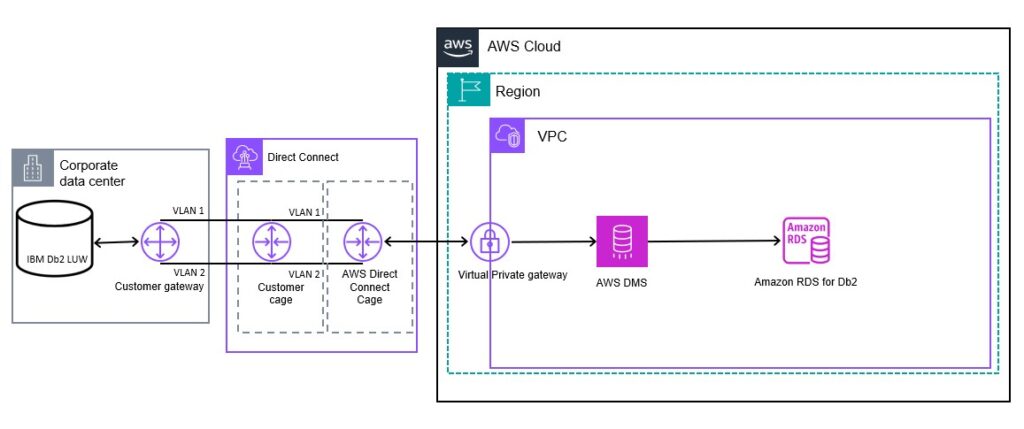

The following diagram illustrates the solution architecture.

The Db2 LUW Database from Corporate data center is connected to AWS DMS via AWS Direct Connect, alternatively you can also use a site-to-site VPN service to connect to AWS privately. AWS DMS Service is comprised of a Replication Instance and one or more replication tasks to the migrate and/or replicate data to the target RDS Db2 Instance from the Source DB Instance.

To migrate a large volume of data and replicate ongoing changes faster, AWS DMS supports segmented or parallel load and batch apply processing features. AWS DMS is configured to use the Db2 load utility on a Db2 target during the full load and ongoing replication with batch apply enabled.

Prerequisites

You should have a basic understanding of how AWS DMS works and how to set up source and target systems for migration. If you’re just getting started with AWS DMS, review the AWS DMS documentation. You should also have a supported Db2 LUW source database set up and an Amazon RDS for Db2 target to perform the migration. To get started, refer to the following resources:

Create a replication instance using the AWS DMS console

Specify source and target endpoints

Create a task and migrate data

Full load migration

To perform a full load migration, you need to grant the DATAACCESS permission on the source database to extract the data and create a user with all privileges granted on schemas that will be migrated or use the RDS primary user on the target database.

The Db2 native db2load API provides better performance, so we use it for the initial full load. We only support limited LOB mode when using a Db2 target. You can configure the value of LobMaxSize in KBs to allow the transfer of the LOB data inline with rows and the maximum recommended size as 100 MB. The LOB size exceeding LobMaxSize is truncated, and a warning is issued to the log file. This mode provides a performance boost, but at the expense of some data loss. You can find the maximum size of your LOB columns and adjust the same in LobMaxSize to mitigate data loss. If your tables don’t have any LOBs, you don’t have to worry about it.

LobMaxSize

You can find if LOBs are present, and also their maximum sizes, by running the following PL/SQL code in your source database. You will need to answer the prompts with your schema and table names.

Based on the output from the preceding query, you can identify and set the value of LobMaxSize to migrate the LOB data inline with the row data. The following is the output from the preceding query.

Dataset

For this post, we migrate a sample TPC-C dataset to Amazon RDS for Db2, which contains nine tables with a total size of about 75 GB. You can use the following query to find the size of the tables and count of the rows in a particular schema:

The following is the output from the preceding query.

Source, Target and Replication Instance configuration

For this full load operation, we use the following configuration for source, target, and replication instances. We test the performance of a full load with default and tuned settings for comparison. We also use the same hardware configuration for both the tests, only modifying various settings.

Configuration

Source

Replication Instance

Target

CPUs

16

16

8

Memory

64 GB

128 GB

64 GB

IOPS

20000

600

3000

Network Performance

Up to 12.5 Gbps

Up to 12.5 Gbps

Up to 12.5 Gbps

Migrate data using default task settings

Because this is a base task and needed only for comparison, we use default values in the task and endpoint settings to migrate 75 GB of data from source to target. With the data transfer occurring over a public network, a full load migration took 4 hours and 32 minutes at approximately 4.6 MB/second.

Full load settings

With the default settings, we load eight tables in parallel; primary keys on target tables are pre-created and the load process commits 10,000 rows per table. This limits the number of tables loaded concurrently and increases the time of the full load because we constantly keep the index updated with each batch of data load happening on the target.

Selection rules

With selection rules, you can select the data source such as schema, table, and views to migrate data. Advanced settings such as usage of filters on the data and load order of tables can be applied; however, we are not applying any filters or load order because this will only be a one-time migration anticipated for a cutover. See the following code:

Table statistics

AWS DMS provides table state, counters related to full load rows, along with load time, inserts, and updates and deletes applied on the target for monitoring performance. The following screenshot shows tables sorted by migration time – the longest of which was 4 hours, 30 minutes, and 25 seconds.

Tunable settings for a full load only task

We can use the following tunable parameters to improve the full load performance for a full load only task.

We use the following task settings:

CreatePkAfterFullLoad – To control the behavior of the primary key creation before or after full load

MaxFullLoadSubTasks – To control the number of tables or table segments to load in parallel

CommitRate – To control the maximum number of records that can be transferred together

We use the following segmented unload settings:

partitions-auto – Unload data from all partitions in parallel

partitions-list – Unload specific partitions in parallel

Range – Specify range-based data unload using boundaries specified for columns

We use the following target endpoint settings:

WriteBufferSize – Maximum size of buffer in KB to generate the CSV file

MaxFileSize – Maximum file size in KB to load data in the Db2 target

The preceding endpoint settings are also applicable for change data capture (CDC) when we enable batch apply.

Migrate data using custom settings and segmented load

We proceed with another run of the full load operation using the same hardware configuration as earlier for the source, target, and replication instance, but we modify the full load and endpoint settings and attempt a segmented data load to make use of the parallel table load.

Full load settings

In the following settings, we create a task to create the primary key after the full load to reduce the number of times database writes entries to primary key index and maximum sub-tasks to the maximum limit to support extra parallel load threads and commit a rate to transfer maximum limit of 50K records:

Endpoint settings

We increase the values of the following endpoint settings to improve performance. The values chosen are based on the memory availability and number of parallel load threads:

“WriteBufferSize”=204800;”MaxFileSize”=409600;

Selection rules to parallel load using ranges

In the following code, we define a task to use parallel load using load types partitions and ranges. The partition-auto option allows DMS to automatically create individual load thread per partition and the boundary ranges provided are based on data distribution and these boundaries provide close to equal data distribution among all segments:

AWS DMS log

The following AWS DMS log snippet shows the segments that have been initialized for performing parallel unload and load:

Table statistics

The following screenshot shows the longest time a table migrated, which was 3 hours, 46 minutes, and 30 seconds.

By using segmented unload and tuning settings, we reduced the load time by about 45 minutes. You can further increase the speed of full load migration by scaling up the instances and increasing storage IOPS during the full load activity.

Summary of performance tests

The following table summarizes our performance tests, showing the time taken to perform full load.

Test

Time Taken (seconds)

Using default settings

16,225

Use custom settings and segmented load

13,590

Replication between source Db2 to Amazon RDS for Db2

During ongoing replication, we mine changes from the source Db2 instance’s transaction logs and apply the changes on the target in transactional or batch apply mode based on the task configuration. Changes on the target are applied using a single thread. Using transactional mode provides the benefit of applying changes in the same order as commit on the source, and it helps keep the referential integrity constraints. Although batch apply mode helps improve performance, as we load changes on an intermediate net changes table and apply all the changes on the target database’s table, this will violate the referential integrity constraints. If you choose batch apply mode, you will need to disable the constraints on the target and enable before the cutover process.

Prerequisites

To replicate changes from the source Db2 to Amazon RDS for Db2, you must perform the following on the source database:

Set the database in recoverable mode by setting the database configuration parameter to LOGARCHMETH1.

Grant the database user account that mines changes from the transaction logs with DBADM authorization.

For example, GRANT DBADM ON DATABASE TO USER RDSDB;

In many cases, the start position of a CDC task is in the past and must be provided manually. To set the start position to mine changes, you need to set the endpoint setting StartFromContext. Valid values for this setting are:

A timestamp in UTC time zone to start the replication based on the timestamp.

For example, timestamp:2023-11-21T00:00:00

Along with “CurrentLSN”: “scan”, the source must be 10.5 or higher for this to work:

‘{“CurrentLSN”: “scan”, “StartFromContext”: “NOW”}’

A specific log record identifier (LRI).

For example, ‘{“StartFromContext”: “0100000000000022CC000000000004FB13”}’

AWS DMS by default enables CDC for all tables that are part of replication.

Workload on source Db2

For the ongoing replication, we use a workload generator that is configured to run for 600 seconds with 240 seconds of warmup time to scale the number of connections on the source that increases workload.

The connection rate is going to scale in the order of 1, 10, and 25 concurrent connections with a wait time of 240 seconds between each run. We’ve enabled batch apply mode for all the following tests to improve the performance of CDC tasks. As explained earlier, we disabled the referential integrity constraints to boost performance.

The scope of the replication performance testing includes:

Replication performance using default settings

Replication performance using custom settings:

Sample run

Continuous load batches

Task split and continuous load batches

Continuous load batches and task split

To summarize, we perform two major tests. In the initial test, AWS DMS runs with all default task and endpoint settings, then we modify to include custom settings to change the processing performance where we perform a sample run with the same length of time as the test case with the default task settings. For the next two cases, we leave the workload for some amount of time to see if the workload migrated when there is an increased concurrency in transactions.

We decided to scale up the previously created replication instance for full load activity to the dms.r6i.8xlarge instance class, which has 32 vCPUs and 256 GB of memory to keep up with a large volume of changes applied on the source.

Replication performance using default settings

We set up a replication task to replicate nine tables with default values for all task settings except with batch apply enabled.

The following is the AWS DMS log snippet:

AWS DMS swap file generation

We started the task at 17:19 UTC. At 17:23 UTC, reading from the source was paused due to swap file generation. After 10 minutes, we stopped the workload after the first run, which generated a load using one connection. The latency continued until it reached its peak at 1,257 seconds, and then finally dropped down to 0 seconds. When the swap files are generated, AWS DMS stops reading from the source to avoid space consumption and further increases the latency.

CDC performance

In the following figure, we plotted latency and CDC throughput rows for the source and target databases. The source metrics (CDCLatencySource and CDCThroughputRowsSource) are plotted in blue, and the target metrics (CDCLatencyTarget and CDCThroughputRowsTarget) are plotted in orange.

The left plot shows the latency in capturing the changes from the source compared to latency in applying the changes on the target. The right plot shows the rate at which the change events are captured from the source compared to applied on the target.

We captured the following key metrics from this task:

The source latency compared to target latency reached 807 seconds compared to 1,257 seconds

The CDC throughput row on the source compared to the target reached 76,642 rows/second compared to 34,140 rows/second

Tunable parameters

We use the following tunable task settings to improve the replication performance between the source Db2 and Amazon RDS for Db2.

BatchApplyEnabled – To control the behavior of applying changes in batch or transactional mode

BatchApplyTimeoutMin – To control the minimum amount of time that AWS DMS waits between batches

BatchApplyTimeoutMax – To control the maximum amount of time that AWS DMS waits between batches

BatchApplyMemoryLimit – To control that maximum amount of memory in MB to use for preprocessing in batch-optimized apply mode

BatchSplitSize – To control the maximum changes supported in a single batch

MemoryLimitTotal – The maximum size in MB that transactions can occupy in memory before being written to disk

MemoryKeepTime – The maximum time in seconds that each transaction can stay in memory before being written to a disk

The WriteBuffersize and MaxFileSize endpoint settings are applicable in the CDC phase as well.

Replication performance using custom settings with a sample run

Because we faced a large latency issue with the default task settings, we proceed to tune the change processing tuning task settings. The Batch Apply timeout settings below allows more frequent batch executions, Batch split and apply memory limit are considered after carefully finding a balance between batch size and execution time of each batch operation. The Memory limit and keep time allows to keep more amount of changes in memory for longer duration, it’s dependent on the available memory. Statement cache allows transactions to be prepared to reduce the execution time during change replay on the target. Through this, we see an improvement when compared with the preceding task run. We started the task at 10:15 UTC and stopped after 10 minutes because this is only a sample run; this time, we didn’t see any occurrences of swapping and latency was well under limits.

We used the following task settings:

In the following figure, we plotted latency and CDC throughput rows for the source and target.

We captured the following key metrics from this task:

The source latency compared to target latency reached 4 seconds compared to 10 seconds

The CDC throughput row on the source compared to the target reached 2,409 rows/second compared to 1,828 rows/second

Replication performance using custom settings with continuous load batches

Because we were able to achieve low latency on the target with a 10-minute hard stop on workload generation, we proceed to the next stage to allow replication to run for a duration of 60 minutes, which includes all three runs to generate a workload with 1, 10, and 25 concurrent connections.

We started the workload at 03:56 UTC and observed that the source capture paused after 55 minutes due to swap file generation. We let the workload run for the full 60 minutes (stopping the task at 04:56 UTC). Target latency spiked up to 11,029 seconds.

The following is the AWS DMS log snippet:

We used the following task settings:

We used the following endpoint settings:

“WriteBufferSize”=204800;”MaxFileSize”=204800;

In the following figure, we plotted the latency and CDC throughput rows on source and target.

We captured the following key metrics:

The source latency compared to target latency reached 6,185 seconds compared to 10,970 seconds

The CDC throughput row on source compared to target reached 58,216 rows/second compared to 38,085 rows/second

Replication performance using custom settings with task split and continuous load batches

To improve performance, we decided to split the tables into three sets, with each set containing three tables, and create multiple replication tasks. We started the workload at 13:34 UTC and let it run for 60 minutes (stopping at 14:34 UTC). We observed the task with the lowest target latency was 433 seconds and the task with the highest latency was 4,158 seconds.

In the following figure, we plotted latency and CDC throughput rows on source and target for the three tasks.

We captured the following metrics for task 1:

The source latency compared to target latency reached 4 seconds compared to 4,158 seconds

The CDC throughput row on source compared to target reached 11,778 rows/second compared to 29,543 rows/second

We captured the following metrics for task 2:

The source latency compared to target latency reached 4 seconds compared to 433 seconds

The CDC throughput row on source compared to target reached 4,543 rows/second compared to 9,511 rows/second

We captured the following metrics for task 3:

The source latency compared to target latency reached 141 seconds compared to 3,011 seconds

The CDC throughput row on source compared to target reached 98,912 rows/second compared to 84,326 rows/second

Summary of performance tests

The following table summarizes our performance tests, including the maximum values we observed.

Test

Source Latency (seconds)

Target Latency (seconds)

Source Throughput (rows/sec)

Target Throughput (rows/sec)

Using default settings

807

1,257

76,642

34,140

Use custom settings

4

10

2,409

1,828

Use custom settings with continuous load batches

6,185

10,970

58,216

38,085

Use custom settings with tasks split and continuous load batches (peak of both source and targets)

141

4,158

98,912

84,326

Performance Summary of Full Load and Ongoing Replication

Tuning DMS task led to following performance improvement during full load phase:

After using custom settings and segmented load, we observed a performance boost of 19.46%.

We also saw the following improvements for ongoing replication:

With a 10-minute sample workload before and after tuning the settings, we observed a performance boost of 12,470%.

With continuous batches before and after the task split, we observed a performance boost of 163.82%.

Clean up

When the migration is complete and there is no source or target latency, you can stop traffic to the source database and perform the cutover. Furthermore, you can clean up your AWS DMS resources by following these steps:

Stop the replication task using StopReplicationTask.

Delete the replication task using DeleteReplicationTask.

Delete the replication instance using DeleteReplicationInstance.

Conclusion

In this post, we shared some insights into tuning Full Load and CDC performance of an AWS DMS task migrating data from a Db2 LUW source to an Amazon RDS for Db2 target. For a better understanding of the factors that affect replication performance and load on source and target, refer to the following:

Improving the performance of an AWS DMS migration

Reducing the load on your source database

Reducing the bottlenecks on your target database

About the Author

Vinay Kumar Dumpa is a Database Engineer with the AWS DMS team. He works with our customers to provide guidance and technical assistance on database migration projects, helping them improve the value of their solutions when using AWS.

Source: Read More