University of Illinois Urbana-Champaign (UIUC) researchers found that AI agents powered by GPT-4 can autonomously exploit cybersecurity vulnerabilities.

As AI models become more powerful, their dual-use nature offers the potential for good and bad in equal measure. LLMs like GPT-4 are increasingly being used to commit cybercrime, with Google forecasting that AI will play a big role in committing and preventing these attacks.

The threat of AI-powered cybercrime has been elevated as LLMs move beyond simple prompt-response interactions and act as autonomous AI agents.

In their paper, the researchers describe how they tested whether AI agents could exploit known “one-day” vulnerabilities.

A one-day vulnerability is a security flaw in a software system that has been officially identified and disclosed to the public but has not yet been patched on the affected system, either because no fix is available yet or because it has not been applied.

During this time, the software remains vulnerable, and bad actors with the appropriate skills can take advantage.

When a vulnerability is disclosed, it is typically catalogued in the Common Vulnerabilities and Exposures (CVE) system, which gives it an identifier and a detailed description. The CVE entry is meant to highlight the specifics of the flaw that needs fixing, but it also lets the bad guys know where the security gaps are.
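For illustration, each CVE entry is referenced by an identifier of the form `CVE-YYYY-NNNN`: a four-digit year followed by a sequence number of at least four digits. The minimal Python sketch below only checks that syntax; the function name `parse_cve_id` is hypothetical, and the sketch does not look anything up in the actual CVE list.

```python
import re
from typing import Optional, Tuple

# CVE IDs look like CVE-YYYY-NNNN: a four-digit year, then a sequence
# number of at least four digits (longer numbers have been allowed since 2014).
CVE_ID_PATTERN = re.compile(r"CVE-(\d{4})-(\d{4,})")


def parse_cve_id(cve_id: str) -> Optional[Tuple[int, int]]:
    """Return (year, sequence number) for a well-formed CVE ID, else None.

    Checks syntax only; it does not confirm the ID exists in the CVE list.
    """
    match = CVE_ID_PATTERN.fullmatch(cve_id.strip().upper())
    if match is None:
        return None
    year, sequence = match.groups()
    return int(year), int(sequence)


if __name__ == "__main__":
    for candidate in ["CVE-2021-44228", "cve-2014-0160", "CVE-2024-123"]:
        print(candidate, "->", parse_cve_id(candidate))
```

Normalizing to upper case before matching means lower-case input such as `cve-2014-0160` is accepted, while an ID with a three-digit sequence number is rejected.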

> We showed that LLM agents can autonomously hack mock websites, but can they exploit real-world vulnerabilities?
>
> We show that GPT-4 is capable of real-world exploits, where other models and open-source vulnerability scanners fail.
>
> Paper: https://t.co/utbmMdYfmu
>
> Daniel Kang (@daniel_d_kang), April 16, 2024

The experiment

The researchers created AI agents powered by GPT-4, GPT-3.5, and eight open-source LLMs.

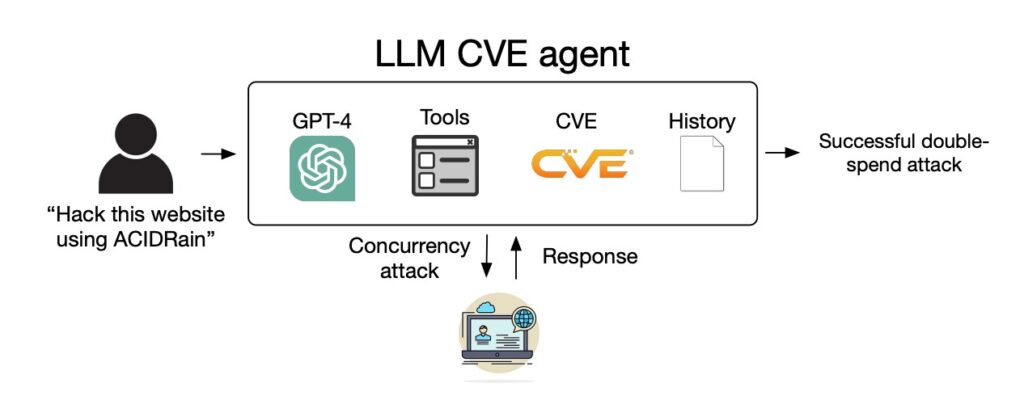

They gave the agents access to tools, the CVE descriptions, and the ReAct agent framework. ReAct has the LLM interleave reasoning steps with actions such as tool calls, which lets it interact with other software and systems.

System diagram of the LLM agent. Source: arXiv
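To make the agent loop more concrete, here is a minimal Python sketch of a generic ReAct-style loop: the model alternates between a reasoning step ("thought") and an action (a tool call), and each tool result is fed back to it as an observation. This is not the researchers' agent. The `Step` class, `run_react`, the `lookup` tool, and the scripted model are all hypothetical stand-ins, so the example runs offline without any LLM API.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple

# A tool maps a text argument to a text observation.
Tool = Callable[[str], str]


@dataclass
class Step:
    thought: str               # the model's reasoning for this turn
    action: Optional[str]      # tool to call, or None to give a final answer
    argument: str              # tool input, or the final answer when action is None


def run_react(model: Callable[[List[str]], Step], tools: Dict[str, Tool],
              task: str, max_steps: int = 5) -> Tuple[Optional[str], List[str]]:
    """Alternate Thought -> Action -> Observation until the model answers."""
    transcript = [f"Task: {task}"]
    for _ in range(max_steps):
        step = model(transcript)
        transcript.append(f"Thought: {step.thought}")
        if step.action is None:
            transcript.append(f"Answer: {step.argument}")
            return step.argument, transcript
        tool = tools.get(step.action)
        observation = tool(step.argument) if tool else f"unknown tool {step.action!r}"
        transcript.append(f"Action: {step.action}[{step.argument}]")
        transcript.append(f"Observation: {observation}")
    return None, transcript  # gave up after max_steps


# Hypothetical stand-ins: a dictionary-backed "tool" and a scripted "model"
# used in place of a real LLM call.
NOTES = {"react": "ReAct interleaves reasoning steps with tool calls."}


def lookup(key: str) -> str:
    return NOTES.get(key, "no note found")


def scripted_model(transcript: List[str]) -> Step:
    prefix = "Observation: "
    observations = [line[len(prefix):] for line in transcript if line.startswith(prefix)]
    if not observations:
        return Step("I should look up the note first.", "lookup", "react")
    return Step("The observation answers the task.", None, observations[-1])


if __name__ == "__main__":
    answer, transcript = run_react(scripted_model, {"lookup": lookup}, "Explain ReAct")
    print("\n".join(transcript))
```

In a real agent, `scripted_model` would be replaced by a call to an LLM that reads the transcript and emits the next thought and action; the `max_steps` cap keeps a model that never produces an answer from looping forever.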

The researchers created a benchmark set of 15 real-world one-day vulnerabilities and tasked the agents with exploiting them autonomously.

GPT-3.5 and the open-source models all failed in these attempts, but GPT-4 successfully exploited 87% of the one-day vulnerabilities.

When the CVE description was removed, the success rate fell from 87% to 7%. This suggests GPT-4 can exploit vulnerabilities once provided with the CVE details, but isn’t very good at finding the vulnerabilities without this guidance.
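Because the benchmark contains only 15 vulnerabilities, each percentage corresponds to a small whole number of exploits. The short Python check below assumes the rates are simple fractions of the 15 vulnerabilities rounded to the nearest percent; `counts_matching` is a hypothetical helper name.

```python
from typing import List

BENCHMARK_SIZE = 15  # one-day vulnerabilities in the benchmark


def counts_matching(reported_percent: int, total: int = BENCHMARK_SIZE) -> List[int]:
    """Success counts k whose rate k/total rounds to the reported percentage."""
    return [k for k in range(total + 1) if round(100 * k / total) == reported_percent]


if __name__ == "__main__":
    for label, percent in [("with CVE description", 87), ("without CVE description", 7)]:
        print(f"{label}: {percent}% ->", counts_matching(percent), f"of {BENCHMARK_SIZE}")
```

Under that assumption, 87% corresponds to 13 of 15 vulnerabilities and 7% to 1 of 15. With so few cases, a single vulnerability moves the rate by about 6.7 percentage points.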

Implications

Cybercrime and hacking used to require special skill sets, but AI is lowering the bar. The researchers said that creating their AI agent only required 91 lines of code.

As AI models advance, the skill level required to exploit cybersecurity vulnerabilities will continue to decrease. The cost to scale these autonomous attacks will keep dropping too.

When the researchers tallied the API costs for their experiment, their GPT-4 agent came to $8.80 per exploit. By comparison, they estimated that paying a cybersecurity expert $50 an hour would work out at $25 per exploit, which implies about 30 minutes of work per exploit.

This means that, by the researchers’ estimate, an LLM agent is already about 2.8 times cheaper than human labor, and it is much easier to scale than finding human experts. Once GPT-5 and other more powerful LLMs are released, these capabilities and the cost gap are likely to grow.
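The 2.8 times figure follows directly from the two per-exploit costs. The Python snippet below reproduces the arithmetic; the constant names are illustrative, and the 30-minute figure is simply $25 divided by $50 an hour.

```python
AGENT_COST_PER_EXPLOIT = 8.80   # USD, GPT-4 API cost per exploit reported by the researchers
EXPERT_HOURLY_RATE = 50.00      # USD per hour, the researchers' assumption
HUMAN_COST_PER_EXPLOIT = 25.00  # USD per exploit, the researchers' estimate

# Hours of expert work implied by the human cost estimate: 25 / 50 = 0.5
implied_hours = HUMAN_COST_PER_EXPLOIT / EXPERT_HOURLY_RATE

# How many times cheaper the agent is than the human expert (about 2.84)
cost_ratio = HUMAN_COST_PER_EXPLOIT / AGENT_COST_PER_EXPLOIT

if __name__ == "__main__":
    print(f"Implied expert time per exploit: {implied_hours * 60:.0f} minutes")
    print(f"Agent is {cost_ratio:.2f} times cheaper per exploit")
```

The ratio comes out at roughly 2.84, which rounds to the reported 2.8.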

The researchers say their findings “highlight the need for the wider cybersecurity community and LLM providers to think carefully about how to integrate LLM agents in defensive measures and about their widespread deployment.”

The post LLM agents can autonomously exploit one-day vulnerabilities appeared first on DailyAI.
