In the ever-expanding landscape of artificial intelligence, Large Language Models (LLMs) have emerged as versatile tools, making significant strides across various domains. As they venture into multimodal realms like visual and auditory processing, their capacity to comprehend and represent complex data, from images to speech, becomes increasingly indispensable. Nevertheless, this expansion brings forth many challenges, particularly in developing efficient tokenization techniques for diverse data types, such as images, videos, and audio streams.

Among the many applications of LLMs, music poses unique challenges that call for new approaches. Although these models can produce remarkable musical output, they often fall short in capturing the structural coherence that aesthetically pleasing compositions require. Reliance on the Musical Instrument Digital Interface (MIDI) format has inherent limitations: it hinders both the readability and the faithful representation of musical structure.

To address these challenges, a team of researchers from M-A-P, the University of Waterloo, HKUST, the University of Manchester, and many other institutions has proposed using ABC notation as an alternative to MIDI-based formats. Advocates of this approach highlight ABC notation’s readability and structural coherence, which could make musical representations more faithful. By fine-tuning LLMs on ABC notation and applying techniques such as instruction tuning, researchers aim to improve the models’ musical output.
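To make the readability argument concrete, here is a minimal Python sketch that holds a short tune in ABC notation and separates its header fields from the note body. The tune itself and the helper name `parse_abc` are illustrative assumptions, not taken from the paper; the single-letter header fields (`X`, `T`, `M`, `L`, `K`) are standard ABC.

```python
# A tiny, made-up tune in ABC notation (illustrative only).
# Header fields: X = reference number, T = title, M = meter,
# L = default note length, K = key. The last line is the music itself.
TUNE = """X:1
T:Example Scale
M:4/4
L:1/4
K:C
C D E F | G A B c |"""


def parse_abc(tune: str) -> tuple[dict[str, str], str]:
    """Split an ABC tune into (header fields, note body).

    Hypothetical helper: it treats leading lines of the form
    '<letter>:<value>' as header fields and everything after
    them as the body. Real ABC files allow more than this.
    """
    header: dict[str, str] = {}
    body: list[str] = []
    for line in tune.splitlines():
        is_field = len(line) > 1 and line[0].isalpha() and line[1] == ":"
        if is_field and not body:
            header[line[0]] = line[2:].strip()
        else:
            body.append(line)
    return header, "\n".join(body)


header, body = parse_abc(TUNE)
print(header["T"], "in", header["K"], "at", header["M"])
print(body)
```

The whole piece is plain text that a person can read and edit directly, which is the property the researchers contrast with MIDI event streams.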

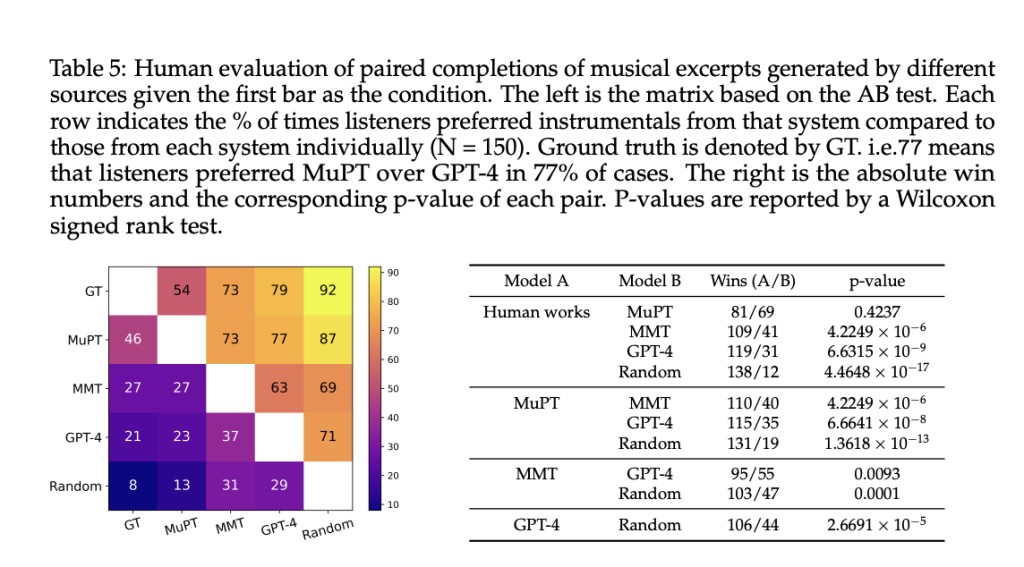

Their research goes beyond adaptation and proposes a standardized training approach designed specifically for symbolic music generation. Using a decoder-only transformer architecture, suitable for both single-track and multi-track generation, they address the misalignment of musical measures across tracks. Their proposed SMT-ABC notation (Synchronized Multi-Track ABC notation) keeps the content of each measure together across all tracks, which mitigates the issues that arise when multi-track music is modeled with the traditional ‘next-token-prediction’ paradigm.
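The idea of keeping each measure together across tracks can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names `split_bars` and `interleave_tracks`, the sample voices, and the exact output layout are hypothetical and are not the paper's actual SMT-ABC format or tokenizer.

```python
def split_bars(voice_body: str) -> list[str]:
    """Split one voice's ABC body into bars on the plain '|' barline.

    Simplification: repeat signs and double barlines ('|:', ':|', '||')
    are not handled here.
    """
    return [bar.strip() for bar in voice_body.split("|") if bar.strip()]


def interleave_tracks(voices: dict[str, str]) -> str:
    """Regroup per-voice bars so that bar i of every voice sits together.

    Standard multi-voice ABC writes each voice in full, one after another,
    so a next-token predictor sees bar i of voice 2 long after bar i of
    voice 1. Here the output is one line per bar, with every voice's
    version of that bar side by side (hypothetical layout).
    """
    bars = {name: split_bars(body) for name, body in voices.items()}
    lengths = {len(b) for b in bars.values()}
    if len(lengths) != 1:
        raise ValueError("all voices must have the same number of bars")
    n_bars = lengths.pop()
    lines = []
    for i in range(n_bars):
        parts = [f"[V:{name}] {voice_bars[i]} |" for name, voice_bars in bars.items()]
        lines.append(" ".join(parts))
    return "\n".join(lines)


# Two made-up voices with two bars each.
voices = {
    "1": "C D E F | G A B c |",
    "2": "C,4 | G,4 |",
}
print(interleave_tracks(voices))
```

With this grouping, everything that sounds in a given measure is adjacent in the sequence, so a decoder generating left to right finishes one measure for all tracks before moving to the next.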

Furthermore, their investigation finds that additional training epochs yield tangible benefits for the ABC notation model, indicating that repeated exposure to the data improves performance. To explain this, they introduce the SMS Law (Symbolic Music Scaling Law), which describes how scaling up training data influences model performance, particularly validation loss. Their findings offer guidance for optimizing training strategies for symbolic music generation models.

Their research underscores the value of continued refinement in AI models for music generation. By examining both symbolic music representation and training methodology, the team aims to improve the structural coherence and expressive richness of AI-generated compositions, using ABC notation as the underlying representation and carefully optimized training processes. Beyond advancing the field of AI in music, this work could also support closer human-AI collaboration in creative work.


The post MuPT: A Series of Pre-Trained AI Models for Symbolic Music Generation that Sets the Standard for Training Open-Source Symbolic Music Foundation Models appeared first on MarkTechPost.
