We are excited to announce a new version of the Amazon SageMaker Operators for Kubernetes using the AWS Controllers for Kubernetes (ACK). ACK is a framework for building Kubernetes custom controllers, where each controller communicates with an AWS service API. These controllers allow Kubernetes users to provision AWS resources like buckets, databases, or message queues simply by using the Kubernetes API.

Release v1.2.9 of the SageMaker ACK Operators adds support for inference components, which until now were only available through the SageMaker API and the AWS Software Development Kits (SDKs). Inference components can help you optimize deployment costs and reduce latency. With the new inference component capabilities, you can deploy one or more foundation models (FMs) on the same Amazon SageMaker endpoint and control how many accelerators and how much memory are reserved for each FM. This helps improve resource utilization, reduces model deployment costs by 50% on average, and lets you scale endpoints together with your use cases. For more details, see Amazon SageMaker adds new inference capabilities to help reduce foundation model deployment costs and latency.

The availability of inference components through the SageMaker controller enables customers who use Kubernetes as their control plane to take advantage of inference components while deploying their models on SageMaker.

In this post, we show how to use SageMaker ACK Operators to deploy SageMaker inference components.

How ACK works

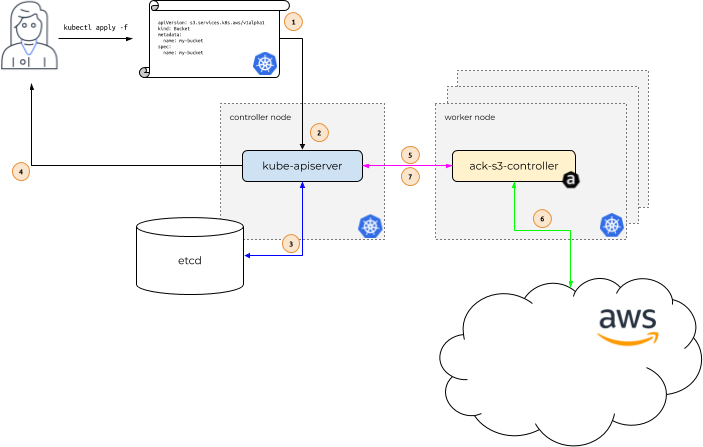

To demonstrate how ACK works, let’s look at an example using Amazon Simple Storage Service (Amazon S3). In the following diagram, Alice is our Kubernetes user. Her application depends on the existence of an S3 bucket named my-bucket.

The workflow consists of the following steps:

1. Alice issues a call to kubectl apply, passing in a file that describes a Kubernetes custom resource describing her S3 bucket. kubectl apply passes this file, called a manifest, to the Kubernetes API server running in the Kubernetes controller node.
2. The Kubernetes API server receives the manifest describing the S3 bucket and determines whether Alice has permission to create a custom resource of kind s3.services.k8s.aws/Bucket and whether the custom resource is properly formatted.
3. If Alice is authorized and the custom resource is valid, the Kubernetes API server writes the custom resource to its etcd data store.
4. The API server then responds to Alice that the custom resource has been created.
5. At this point, the ACK service controller for Amazon S3, which is running on a Kubernetes worker node within the context of a normal Kubernetes Pod, is notified that a new custom resource of kind s3.services.k8s.aws/Bucket has been created.
6. The ACK service controller for Amazon S3 then communicates with the Amazon S3 API, calling the S3 CreateBucket API to create the bucket in AWS.
7. After communicating with the Amazon S3 API, the ACK service controller calls the Kubernetes API server to update the custom resource’s status with information it received from Amazon S3.
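To make the workflow concrete, the manifest that Alice applies could be generated as in the following minimal sketch. It emits JSON, which kubectl accepts as well as YAML. The API version v1alpha1 and the helper name bucket_manifest are assumptions made for illustration; the kind and the bucket name come from the example above.

```python
import json


def bucket_manifest(name: str) -> dict:
    """Build an ACK custom resource describing an S3 bucket."""
    return {
        # Group and kind come from the post; the version is an assumption.
        "apiVersion": "s3.services.k8s.aws/v1alpha1",
        "kind": "Bucket",
        "metadata": {"name": name},
        # The spec carries the bucket name the controller passes to CreateBucket.
        "spec": {"name": name},
    }


if __name__ == "__main__":
    # Save the output to a file and run: kubectl apply -f <file>
    print(json.dumps(bucket_manifest("my-bucket"), indent=2))
```

Once the resource is applied, the ACK controller for Amazon S3 takes over from the point where it is notified of the new custom resource.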

Key components

The new inference capabilities build upon SageMaker’s real-time inference endpoints. As before, you create the SageMaker endpoint with an endpoint configuration that defines the instance type and initial instance count for the endpoint. The model is configured in a new construct, an inference component. Here, you specify the number of accelerators and amount of memory you want to allocate to each copy of a model, together with the model artifacts, container image, and number of model copies to deploy.

You can use the new inference capabilities from Amazon SageMaker Studio, the SageMaker Python SDK, AWS SDKs, and the AWS Command Line Interface (AWS CLI). They are also supported by AWS CloudFormation. You can now also use them with SageMaker Operators for Kubernetes.

Solution overview

For this demo, we use the SageMaker controller to deploy a copy of the Dolly v2 7B model and a copy of the FLAN-T5 XXL model from the Hugging Face Model Hub on a SageMaker real-time endpoint using the new inference capabilities.

Prerequisites

To follow along, you should have a Kubernetes cluster with the SageMaker ACK controller v1.2.9 or above installed. For instructions on how to provision an Amazon Elastic Kubernetes Service (Amazon EKS) cluster with Amazon Elastic Compute Cloud (Amazon EC2) Linux managed nodes using eksctl, see Getting started with Amazon EKS – eksctl. For instructions on installing the SageMaker controller, refer to Machine Learning with the ACK SageMaker Controller.

You need access to accelerated instances (GPUs) for hosting the LLMs. This solution uses one ml.g5.12xlarge instance. You can check the availability of these instances in your AWS account and, if needed, request more through a Service Quotas increase request, as shown in the following screenshot.

Create an inference component

To create your inference component, define the EndpointConfig, Endpoint, Model, and InferenceComponent YAML files, similar to the ones shown in this section. Use kubectl apply -f <yaml file> to create the Kubernetes resources.

You can check the status of a resource with kubectl describe <resource-type>; for example, kubectl describe inferencecomponent.

You can also create the inference component without a model resource. Refer to the guidance provided in the API documentation for more details.

EndpointConfig YAML

The following is the code for the EndpointConfig file:
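A minimal sketch of such a manifest, built in Python and emitted as JSON (which kubectl accepts as well as YAML), might look like the following. The API version, the camelCase field names, the variant name AllTraffic, the resource name, and the role ARN placeholder are assumptions modeled on the SageMaker CreateEndpointConfig API; check them against the CRD reference for your controller version. Note that the variant defines only the instance type and count, because the models are attached later through inference components.

```python
import json

API_VERSION = "sagemaker.services.k8s.aws/v1alpha1"  # assumed CRD version


def endpoint_config_manifest(name, role_arn,
                             instance_type="ml.g5.12xlarge",
                             instance_count=1):
    """Build an EndpointConfig resource with one model-less variant."""
    return {
        "apiVersion": API_VERSION,
        "kind": "EndpointConfig",
        "metadata": {"name": name},
        "spec": {
            "endpointConfigName": name,
            "executionRoleARN": role_arn,
            "productionVariants": [
                {
                    "variantName": "AllTraffic",  # hypothetical variant name
                    "instanceType": instance_type,
                    "initialInstanceCount": instance_count,
                }
            ],
        },
    }


if __name__ == "__main__":
    manifest = endpoint_config_manifest(
        "inference-component-endpoint-config",  # hypothetical name
        "<EXECUTION_ROLE_ARN>",                 # replace with your role ARN
    )
    print(json.dumps(manifest, indent=2))
```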

Endpoint YAML

The following is the code for the Endpoint file:
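As a hedged sketch, an Endpoint manifest only needs to name the endpoint and point at an endpoint configuration. The field names, API version, and resource names below are assumptions, as is the helper name endpoint_manifest.

```python
import json

API_VERSION = "sagemaker.services.k8s.aws/v1alpha1"  # assumed CRD version


def endpoint_manifest(name, endpoint_config_name):
    """Build an Endpoint resource that references an EndpointConfig by name."""
    return {
        "apiVersion": API_VERSION,
        "kind": "Endpoint",
        "metadata": {"name": name},
        "spec": {
            "endpointName": name,
            "endpointConfigName": endpoint_config_name,
        },
    }


if __name__ == "__main__":
    manifest = endpoint_manifest(
        "inference-component-endpoint",         # hypothetical name
        "inference-component-endpoint-config",  # hypothetical name
    )
    print(json.dumps(manifest, indent=2))
```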

Model YAML

The following is the code for the Model file:
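A Model manifest for a Hugging Face Hub model could be sketched as follows. This assumes the container is a Hugging Face inference image that reads the model ID from the HF_MODEL_ID environment variable; the image URI and role ARN are left as placeholders, and the field names and API version are assumptions to verify against the CRD reference.

```python
import json

API_VERSION = "sagemaker.services.k8s.aws/v1alpha1"  # assumed CRD version


def model_manifest(name, role_arn, image_uri, hf_model_id):
    """Build a Model resource whose container pulls weights from the Hub."""
    return {
        "apiVersion": API_VERSION,
        "kind": "Model",
        "metadata": {"name": name},
        "spec": {
            "modelName": name,
            "executionRoleARN": role_arn,
            "containers": [
                {
                    "image": image_uri,
                    # Assumed convention of Hugging Face inference containers.
                    "environment": {"HF_MODEL_ID": hf_model_id},
                }
            ],
        },
    }


if __name__ == "__main__":
    manifest = model_manifest(
        "dolly-v2-7b",             # hypothetical resource name
        "<EXECUTION_ROLE_ARN>",    # replace with your role ARN
        "<CONTAINER_IMAGE_URI>",   # replace with your inference image
        "databricks/dolly-v2-7b",
    )
    print(json.dumps(manifest, indent=2))
```

The same helper can produce the second model by passing a different name and the Hub ID google/flan-t5-xxl.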

InferenceComponent YAMLs

In the following YAML files, given that the ml.g5.12xlarge instance comes with 4 GPUs, we are allocating 2 GPUs, 2 CPUs and 1,024 MB of memory to each model:
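The allocation described above can be sketched as two InferenceComponent manifests, together with a small check that the requested accelerators fit on the instance. The field names mirror the SageMaker CreateInferenceComponent API in camelCase and are assumptions, as are the API version, the resource names, and the helper names.

```python
import json

API_VERSION = "sagemaker.services.k8s.aws/v1alpha1"  # assumed CRD version
GPUS_PER_INSTANCE = 4  # ml.g5.12xlarge, as stated in the post


def inference_component_manifest(name, model_name, endpoint_name,
                                 gpus=2, cpus=2, memory_mb=1024, copies=1):
    """Build an InferenceComponent that reserves resources per model copy."""
    return {
        "apiVersion": API_VERSION,
        "kind": "InferenceComponent",
        "metadata": {"name": name},
        "spec": {
            "inferenceComponentName": name,
            "endpointName": endpoint_name,
            "variantName": "AllTraffic",  # hypothetical variant name
            "specification": {
                "modelName": model_name,
                "computeResourceRequirements": {
                    "numberOfAcceleratorDevicesRequired": gpus,
                    "numberOfCPUCoresRequired": cpus,
                    "minMemoryRequiredInMb": memory_mb,
                },
            },
            "runtimeConfig": {"copyCount": copies},
        },
    }


def total_gpus(manifests):
    """Sum accelerators over all components: per-copy GPUs times copy count."""
    total = 0
    for m in manifests:
        spec = m["spec"]
        reqs = spec["specification"]["computeResourceRequirements"]
        total += (reqs["numberOfAcceleratorDevicesRequired"]
                  * spec["runtimeConfig"]["copyCount"])
    return total


ENDPOINT = "inference-component-endpoint"  # hypothetical name
COMPONENTS = [
    inference_component_manifest("inference-component-dolly",
                                 "dolly-v2-7b", ENDPOINT),
    inference_component_manifest("inference-component-flan",
                                 "flan-t5-xxl", ENDPOINT),
]

if __name__ == "__main__":
    if total_gpus(COMPONENTS) > GPUS_PER_INSTANCE:
        raise ValueError("requested GPUs exceed a single instance")
    for manifest in COMPONENTS:
        print(json.dumps(manifest, indent=2))
```

With two GPUs per model and one copy of each, the two components together use all four GPUs on the instance.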

Invoke models

You can now invoke the models using the following code:
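One way to do this is with the boto3 SageMaker Runtime client, passing the inference component name alongside the endpoint name. The following is a minimal sketch, not a definitive implementation: the endpoint and component names, the Region, and the JSON payload shape (an inputs string plus generation parameters) are assumptions that depend on your resources and serving container.

```python
import json


def build_invoke_request(endpoint_name, component_name, prompt,
                         max_new_tokens=50):
    """Assemble keyword arguments for invoke_endpoint."""
    # Assumed payload shape; adjust to what your container expects.
    payload = {"inputs": prompt,
               "parameters": {"max_new_tokens": max_new_tokens}}
    return {
        "EndpointName": endpoint_name,
        # Routes the request to one model hosted on the shared endpoint.
        "InferenceComponentName": component_name,
        "ContentType": "application/json",
        "Body": json.dumps(payload).encode("utf-8"),
    }


def invoke(request, region="us-east-1"):
    """Send the request to SageMaker and decode the JSON response."""
    import boto3  # imported here so building requests needs no AWS setup

    client = boto3.client("sagemaker-runtime", region_name=region)
    response = client.invoke_endpoint(**request)
    return json.loads(response["Body"].read())


if __name__ == "__main__":
    request = build_invoke_request(
        "inference-component-endpoint",  # hypothetical endpoint name
        "inference-component-dolly",     # hypothetical component name
        "What is Amazon SageMaker?",
    )
    print(invoke(request))
```

To query the other model, change only the component name; the endpoint stays the same.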

Update an inference component

To update an existing inference component, you can update the YAML files and then use kubectl apply -f <yaml file>. The following is an example of an updated file:
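As an illustration of the update flow, the sketch below takes an existing InferenceComponent manifest and raises the memory reserved per copy, leaving the resource name unchanged so that kubectl apply updates the resource in place. The specific change (1,024 MB to 2,048 MB), the field names, and the resource names are hypothetical.

```python
import copy
import json


def with_memory(manifest, memory_mb):
    """Return a copy of the manifest with a new per-copy memory reservation."""
    updated = copy.deepcopy(manifest)
    reqs = updated["spec"]["specification"]["computeResourceRequirements"]
    reqs["minMemoryRequiredInMb"] = memory_mb
    return updated


ORIGINAL = {
    "apiVersion": "sagemaker.services.k8s.aws/v1alpha1",  # assumed version
    "kind": "InferenceComponent",
    "metadata": {"name": "inference-component-dolly"},  # hypothetical name
    "spec": {
        "inferenceComponentName": "inference-component-dolly",
        "endpointName": "inference-component-endpoint",
        "variantName": "AllTraffic",
        "specification": {
            "modelName": "dolly-v2-7b",
            "computeResourceRequirements": {
                "numberOfAcceleratorDevicesRequired": 2,
                "numberOfCPUCoresRequired": 2,
                "minMemoryRequiredInMb": 1024,
            },
        },
        "runtimeConfig": {"copyCount": 1},
    },
}

UPDATED = with_memory(ORIGINAL, 2048)

if __name__ == "__main__":
    # Save the output and re-run: kubectl apply -f <file>
    print(json.dumps(UPDATED, indent=2))
```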

Delete an inference component

To delete an existing inference component, use the command kubectl delete -f <yaml file>.

Availability and pricing

The new SageMaker inference capabilities are available today in AWS Regions US East (Ohio, N. Virginia), US West (Oregon), Asia Pacific (Jakarta, Mumbai, Seoul, Singapore, Sydney, Tokyo), Canada (Central), Europe (Frankfurt, Ireland, London, Stockholm), Middle East (UAE), and South America (São Paulo). For pricing details, visit Amazon SageMaker Pricing.

Conclusion

In this post, we showed how to use SageMaker ACK Operators to deploy SageMaker inference components. Fire up your Kubernetes cluster and deploy your FMs using the new SageMaker inference capabilities today!

About the Authors

Rajesh Ramchander is a Principal ML Engineer in Professional Services at AWS. He helps customers at various stages in their AI/ML and GenAI journey, from those that are just getting started all the way to those that are leading their business with an AI-first strategy.

Amit Arora is an AI and ML Specialist Architect at Amazon Web Services, helping enterprise customers use cloud-based machine learning services to rapidly scale their innovations. He is also an adjunct lecturer in the MS data science and analytics program at Georgetown University in Washington D.C.

Suryansh Singh is a Software Development Engineer at AWS SageMaker and works on developing ML-distributed infrastructure solutions for AWS customers at scale.

Saurabh Trikande is a Senior Product Manager for Amazon SageMaker Inference. He is passionate about working with customers and is motivated by the goal of democratizing machine learning. He focuses on core challenges related to deploying complex ML applications, multi-tenant ML models, cost optimizations, and making deployment of deep learning models more accessible. In his spare time, Saurabh enjoys hiking, learning about innovative technologies, following TechCrunch, and spending time with his family.

Johna Liu is a Software Development Engineer in the Amazon SageMaker team. Her current work focuses on helping developers efficiently host machine learning models and improve inference performance. She is passionate about spatial data analysis and using AI to solve societal problems.
