Getting artificial intelligence to operate in dynamic 3D environments has become an important research area. The goal is to narrow the gap between AI systems that work in static, well-defined settings and systems that are useful in the real world. Researchers at Google DeepMind have been at the forefront of this work, developing agents that interpret and act on complex natural-language instructions across a range of simulated settings. This line of research goes beyond conventional single-task paradigms by combining visual perception with language understanding, so that an AI system can carry out human-like tasks in many different virtual worlds.

A fundamental issue in this field is that AI agents have only a limited ability to interact dynamically with three-dimensional spaces. Traditional AI models excel when tasks and expected responses are clearly defined and static. They falter, however, in environments that change continuously and present many objectives at once. This gap highlights the need for systems that can adapt to unpredictable scenarios, as real-world interaction demands.

Previous methodologies have often relied on rigid command-response frameworks, which confine AI agents to a narrow range of predictable, controlled actions. These agents operate under constrained conditions and cannot generalize their learned behaviors to new or evolving contexts. Such approaches are less effective in scenarios that demand real-time decision-making and adaptability, underscoring the necessity for more versatile and dynamic AI capabilities.

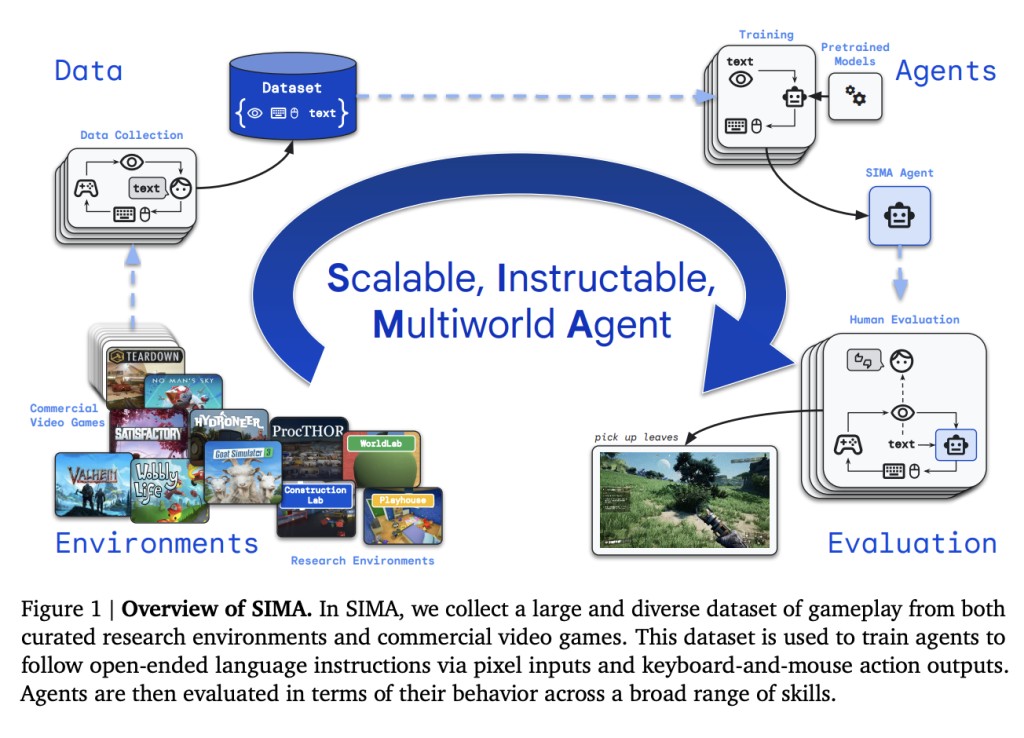

The SIMA (Scalable, Instructable Multiworld Agent) project, from researchers at Google DeepMind and the University of British Columbia, takes a new approach aimed at overcoming these limitations. SIMA uses machine learning models and large datasets to train agents that can understand and carry out a wide variety of instructions. Because SIMA agents ground language instructions in the visual observations they receive from a 3D environment, they can perform complex tasks that require both reasoning about a goal and interacting with the world.

The core of SIMA's method is training agents to take language and visual input together and use it to navigate and interact within virtual environments, acting through the same keyboard-and-mouse controls a human player would use. These environments range from carefully curated research simulations to open-ended video games, exposing the agents to a broad spectrum of tasks and scenarios. By building on pretrained neural networks and continuing to learn from training data, SIMA agents learn to generalize across settings, connecting a given instruction to the concrete in-game actions needed to carry it out.
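To make the "language plus vision in, action out" loop more concrete, here is a minimal, hypothetical sketch of a single agent decision step. It is not SIMA's architecture: a toy text encoder and a toy image encoder each produce a feature vector, the two vectors are concatenated, and a linear policy scores a small discrete action set. Every name here (`embed_text`, `embed_image`, `LinearPolicy`, `make_policy`, `agent_step`, `ACTIONS`) is illustrative and does not come from the SIMA paper. The encoders are crude placeholders for the pretrained networks the article mentions, and the random weights stand in for parameters that would be learned from data.

```python
import math
import random
import zlib
from dataclasses import dataclass

# Hypothetical discrete action set standing in for keyboard-and-mouse controls.
ACTIONS = ["move_forward", "turn_left", "turn_right", "interact", "no_op"]


def embed_text(instruction: str, dim: int = 8) -> list[float]:
    """Toy language encoder: hash each lowercase token into one of `dim` buckets."""
    vec = [0.0] * dim
    for token in instruction.lower().split():
        vec[zlib.crc32(token.encode("utf-8")) % dim] += 1.0
    return vec


def embed_image(frame: list[float], dim: int = 8) -> list[float]:
    """Toy visual encoder: mean pixel intensity of `dim` equal chunks of a flattened frame."""
    chunk = len(frame) // dim
    if chunk == 0:
        raise ValueError("frame must contain at least `dim` pixels")
    return [sum(frame[i * chunk:(i + 1) * chunk]) / chunk for i in range(dim)]


@dataclass
class LinearPolicy:
    weights: list[list[float]]  # one weight row per action in ACTIONS

    def act(self, features: list[float]) -> tuple[str, list[float]]:
        # Score each action, then convert the scores to probabilities with a stable softmax.
        logits = [sum(w * x for w, x in zip(row, features)) for row in self.weights]
        peak = max(logits)
        exps = [math.exp(z - peak) for z in logits]
        total = sum(exps)
        probs = [e / total for e in exps]
        # Greedy choice: pick the most probable action.
        best = max(range(len(probs)), key=probs.__getitem__)
        return ACTIONS[best], probs


def make_policy(in_dim: int, seed: int = 0) -> LinearPolicy:
    """Random weights stand in for parameters that would be learned from data."""
    rng = random.Random(seed)
    return LinearPolicy([[rng.gauss(0.0, 0.1) for _ in range(in_dim)] for _ in ACTIONS])


def agent_step(policy: LinearPolicy, instruction: str, frame: list[float]) -> tuple[str, list[float]]:
    # Fuse the two modalities by concatenating their feature vectors (8 + 8 = 16 dims).
    features = embed_text(instruction) + embed_image(frame)
    return policy.act(features)


policy = make_policy(in_dim=16)
frame = [0.1 * (i % 10) for i in range(64)]  # synthetic 8x8 grayscale frame, flattened
action, probs = agent_step(policy, "open the door", frame)
print(action, [round(p, 3) for p in probs])
```

Concatenating the two embeddings is the simplest possible fusion scheme; more elaborate multimodal systems typically learn how to combine the modalities, for example with attention over both inputs.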

Empirical evaluations show that SIMA agents can interpret and act on a diverse range of instructions. Across the evaluated platforms, the agents completed tasks that resemble real-world activities, such as navigation, object manipulation, and complex problem-solving. In one evaluation, for example, SIMA agents achieved a task completion rate of 75% across multiple video games, indicating that they can adapt to different virtual environments and tasks.

In conclusion, the SIMA project tackles the challenge of making AI agents adaptable in dynamic 3D environments. By using machine learning to combine language and visual inputs, the SIMA framework equips AI agents to carry out complex, human-like tasks across a variety of virtual platforms.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post Google DeepMind’s SIMA Project Enhances Agent Performance in Dynamic 3D Environments Across Various Platforms appeared first on MarkTechPost.