With the arrival of capable generative artificial intelligence models such as ChatGPT, Gemini, and Bard, demand for AI-generated content is rising across a number of industries, especially multimedia. Meeting this demand requires effective text-to-audio, text-to-image, and text-to-video models that can quickly produce high-quality material or prototypes. It is therefore essential to improve how faithfully these models' outputs reflect their input prompts.

Direct preference optimization (DPO), which trains on preference pairs with a simple supervised-style objective, has recently emerged as a viable and reliable alternative to reinforcement learning from human feedback (RLHF) for aligning large language model (LLM) responses with human preferences. The method has since been adapted to diffusion models so that their denoised outputs can likewise be aligned with human preferences.
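
To make the DPO objective concrete, here is a minimal sketch of the standard per-pair DPO loss for LLMs, assuming we already have sequence log-probabilities of a preferred response `y_w` and a rejected response `y_l` under both the trainable policy and a frozen reference model. The function names and the default `beta=0.1` are illustrative choices, not taken from the study discussed here.

```python
import math


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    if x > 0:
        return x + math.log1p(math.exp(-x))
    return math.log1p(math.exp(x))


def dpo_loss(policy_logp_w: float, policy_logp_l: float,
             ref_logp_w: float, ref_logp_l: float, beta: float = 0.1) -> float:
    """DPO loss for a single preference pair (y_w preferred over y_l).

    Each argument is a sequence log-probability log pi(y | x) under either
    the trainable policy or the frozen reference model.
    """
    # Implicit reward margin: how much more the policy (relative to the
    # reference) favours the preferred response over the rejected one.
    margin = (policy_logp_w - ref_logp_w) - (policy_logp_l - ref_logp_l)
    # -log(sigmoid(beta * margin)) is computed as softplus(-beta * margin).
    return softplus(-beta * margin)
```

When the policy and the reference agree, the margin is zero and the loss is log 2; it falls below that only as the policy shifts probability toward the preferred response. No separate reward model or RL loop is needed, which is what makes DPO a cheaper substitute for RLHF.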

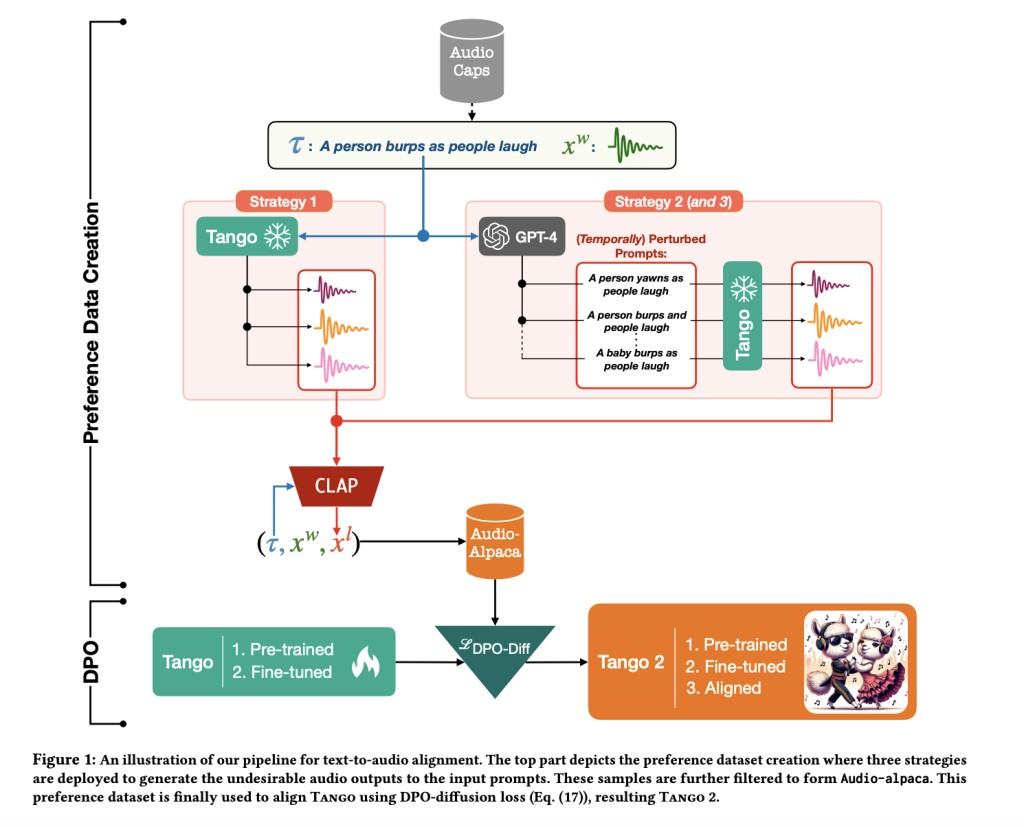

In a recent study, a team of researchers applied the Diffusion-DPO approach to improve the semantic alignment between a text-to-audio model's output audio and its input prompts. They used the Diffusion-DPO loss to fine-tune Tango, a publicly available text-to-audio latent diffusion model, on a synthesized preference dataset. This dataset, called Audio-Alpaca, contains a variety of audio prompts, each paired with preferred and undesirable audio outputs.
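
Diffusion models do not expose a tractable sequence log-likelihood, so the diffusion adaptation of DPO compares denoising errors instead: the model is rewarded for reducing its noise-prediction error on the preferred sample, relative to a frozen reference model, more than on the undesirable one. The sketch below shows that loss for one preference pair at one sampled timestep, using plain Python lists as stand-ins for latent tensors. It assumes any timestep-dependent weighting constant is folded into `beta`; the helper names are hypothetical, and Tango 2's actual training code may differ in such details.

```python
import math
from typing import Sequence


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    if x > 0:
        return x + math.log1p(math.exp(-x))
    return math.log1p(math.exp(x))


def mse(target: Sequence[float], pred: Sequence[float]) -> float:
    """Mean squared error between the true noise and a predicted noise."""
    return sum((t - p) ** 2 for t, p in zip(target, pred)) / len(target)


def diffusion_dpo_loss(noise_w, pred_w, ref_pred_w,
                       noise_l, pred_l, ref_pred_l, beta: float) -> float:
    """Diffusion-DPO loss for one preference pair at one sampled timestep.

    noise_*    : noise added to the preferred (w) / undesirable (l) latent
    pred_*     : noise predicted by the model being fine-tuned
    ref_pred_* : noise predicted by the frozen reference model
    """
    # Denoising-error gap (preferred minus undesirable) for each model.
    model_diff = mse(noise_w, pred_w) - mse(noise_l, pred_l)
    ref_diff = mse(noise_w, ref_pred_w) - mse(noise_l, ref_pred_l)
    # -log(sigmoid(-beta * (model_diff - ref_diff))): the loss shrinks when the
    # fine-tuned model's gap falls below the reference model's gap.
    return softplus(beta * (model_diff - ref_diff))
```

If the fine-tuned model behaves exactly like the reference, the loss is log 2; it drops only when the model denoises preferred audio comparatively better than undesirable audio.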

The preferred audio clips faithfully capture their text descriptions, while the undesirable ones have defects such as missing concepts, incorrect temporal order, or excessive noise. Undesirable audio is produced in two ways: by perturbing the descriptions, and by adversarial filtering, which identifies generated clips with poor audio quality or a low CLAP score (a measure of text-audio similarity).
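
As an illustration of what "perturbing a description" can mean, the hypothetical rule-based sketch below derives prompts that exhibit two of the defects named above: a reversed event order (incorrect temporal order) and a dropped event (missing concept). It assumes events in a prompt are joined by the word "then"; the study's actual perturbation procedure may differ, and `perturb_prompt` is not a function from the paper. Audio generated from such perturbed prompts would then serve as candidate undesirable outputs for the original prompt.

```python
from typing import List


def perturb_prompt(prompt: str, sep: str = " then ") -> List[str]:
    """Return perturbed variants of a multi-event prompt (hypothetical helper)."""
    events = [e.strip() for e in prompt.split(sep) if e.strip()]
    variants: List[str] = []
    if len(events) > 1:
        # Incorrect temporal order: play the events in reverse.
        variants.append(sep.join(reversed(events)))
        # Missing concept: drop each event in turn.
        for i in range(len(events)):
            variants.append(sep.join(events[:i] + events[i + 1:]))
    return variants


# Example: a two-event prompt yields one reordered and two shortened variants.
print(perturb_prompt("a dog barks then a car honks"))
```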

To cope with the noisy preference pairs that automatic synthesis produces, the team selected a subset of the data for DPO fine-tuning using thresholds on CLAP scores. These criteria enforce a minimum CLAP-score gap between the preferred and undesirable audio in each pair, as well as a minimum CLAP score (i.e., closeness to the input prompt) for the preferred audio.
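
The two filtering criteria can be expressed as a simple predicate over scored pairs. The sketch below assumes CLAP scores have already been computed for each audio against its prompt; the `PreferencePair` type, the function name, and the threshold parameters are hypothetical, and the thresholds the team actually used are not reproduced here.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PreferencePair:
    prompt: str
    clap_preferred: float    # CLAP score of the preferred audio vs. the prompt
    clap_undesirable: float  # CLAP score of the undesirable audio vs. the prompt


def filter_pairs(pairs: List[PreferencePair], min_gap: float,
                 min_preferred_score: float) -> List[PreferencePair]:
    """Keep only pairs that satisfy both CLAP-based criteria."""
    kept = []
    for pair in pairs:
        gap = pair.clap_preferred - pair.clap_undesirable
        # Criterion 1: the two audios must be clearly separated in CLAP score.
        # Criterion 2: the preferred audio must be close enough to the prompt.
        if gap >= min_gap and pair.clap_preferred >= min_preferred_score:
            kept.append(pair)
    return kept
```

The gap threshold discards pairs whose "preferred" label is likely noise, while the absolute threshold stops the model from being pushed toward a winner that is itself a poor match for the prompt.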

According to the team's experiments, fine-tuning Tango on the filtered Audio-Alpaca dataset yields Tango 2, which outperforms both Tango and AudioLDM2 in human and objective evaluations. Exposure to the contrast between good and bad audio outputs during DPO fine-tuning helps Tango 2 map the semantics of an input prompt into the audio space more accurately. Although its synthetic preference data is built from the same dataset Tango was trained on, Tango 2 still shows notable improvements, which demonstrates the effectiveness of the approach.

The team has summarized their primary contributions as follows.

1. The study presents a low-cost, semi-automatic technique for building a preference dataset for text-to-audio generation. Each prompt is paired with multiple preferred and undesirable audio outputs, which supports model training.
2. The resulting preference dataset, Audio-Alpaca, has been released to the research community, where it can serve as a benchmark and support future research as text-to-audio generation methods develop.
3. Tango 2 outperforms both Tango and AudioLDM2 on objective and subjective measures, even though it uses no additional out-of-distribution text-audio pairs beyond Tango's original dataset. This shows that the proposed methodology genuinely improves model performance.
4. Tango 2's results demonstrate that Diffusion-DPO is applicable to audio generation and highlight its potential for improving text-to-audio models.

Check out the Paper and Project. All credit for this research goes to the researchers of this project.

The post Tango 2: The New Frontier in Text-to-Audio Synthesis and Its Superior Performance Metrics appeared first on MarkTechPost.