At AWS, we have been making steady progress towards bringing our zero-ETL vision to life. With Amazon Aurora zero-ETL integration to Amazon Redshift, you can bring together the transactional data of Amazon Aurora with the analytics capabilities of Amazon Redshift. The integration helps you derive holistic insights across many applications, break data silos in your organization, and gain significant cost savings and operational efficiencies. At AWS re:Invent 2023, AWS announced four new zero-ETL integrations to make data access and analysis faster and effortless across data stores.

Customers across industries today are looking to increase revenue and customer engagement by implementing near real-time analytics use cases like personalization strategies, fraud detection, inventory monitoring, and many more. The zero-ETL integrations enable you to unlock all such use cases.

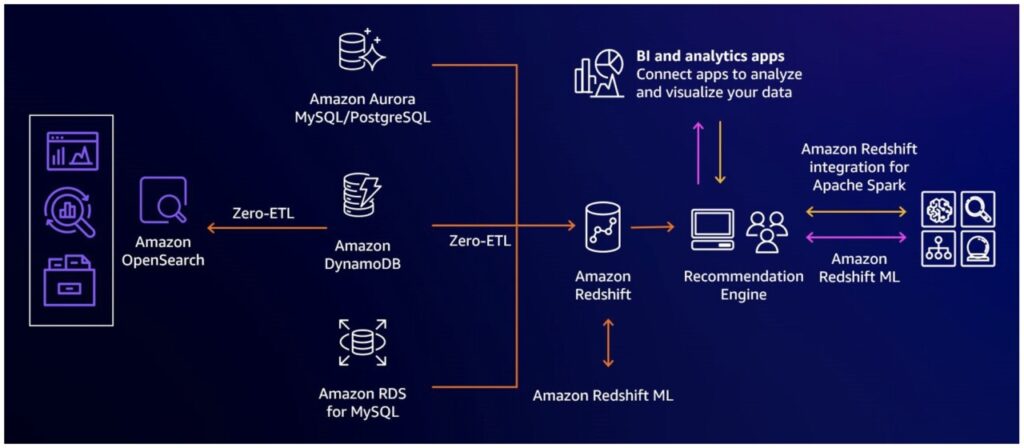

As of writing this post, Aurora zero-ETL integration with Amazon Redshift is generally available for Amazon Aurora MySQL, under public preview for Amazon Aurora PostgreSQL and Amazon RDS for MySQL, and under private preview for Amazon DynamoDB. For more information, see Working with Aurora zero-ETL integrations with Amazon Redshift. The following figure shows the currently offered zero-ETL integrations.

In this post, we show you how to handle tables without primary keys while creating Amazon Aurora MySQL or Amazon RDS for MySQL zero-ETL integrations with Amazon Redshift. A similar strategy for Aurora PostgreSQL has been explained in Handle tables without primary keys while creating Amazon Aurora PostgreSQL zero-ETL integrations with Amazon Redshift.

Zero-ETL and primary keys in MySQL

A primary key is an attribute that uniquely identifies a record (row) in the table. It’s defined as a combination of one or more columns, where none of the columns are nullable, and the combination of column values is unique across the table. In MySQL InnoDB, the term primary key is typically synonymous with clustered index, a tree-organized structure that stores row data.

There are three ways for InnoDB to choose a primary key:

The primary key can be declared explicitly in the table definition.

If no primary key is explicitly defined, InnoDB uses the first UNIQUE index whose columns are all defined as NOT NULL.

If there is no primary key and no suitable UNIQUE index, InnoDB generates a hidden clustered index (named GEN_CLUST_INDEX) on a hidden 6-byte row ID column whose values increase monotonically.
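To make the three rules concrete, the following Python sketch models how InnoDB picks the clustered index for a table. The `Column`, `Index`, and `Table` classes and the `clustered_index` and `has_explicit_primary_key` helpers are hypothetical, for illustration only; the model simplifies "first" to definition order and ignores details such as prefix indexes.

```python
from dataclasses import dataclass, field


@dataclass
class Column:
    name: str
    nullable: bool


@dataclass
class Index:
    name: str
    columns: list[str]
    unique: bool = False
    primary: bool = False


@dataclass
class Table:
    columns: list[Column]
    indexes: list[Index] = field(default_factory=list)


def clustered_index(table: Table) -> str:
    """Name the index InnoDB would cluster on (simplified model of the three rules)."""
    nullable = {col.name: col.nullable for col in table.columns}
    # Rule 1: an explicitly declared PRIMARY KEY always becomes the clustered index.
    for idx in table.indexes:
        if idx.primary:
            return "PRIMARY"
    # Rule 2: otherwise, the first UNIQUE index whose key columns are all NOT NULL.
    for idx in table.indexes:
        if idx.unique and not any(nullable[c] for c in idx.columns):
            return idx.name
    # Rule 3: otherwise, a hidden index on an internal 6-byte row ID.
    return "GEN_CLUST_INDEX"


def has_explicit_primary_key(table: Table) -> bool:
    """Zero-ETL needs rule 1: a declared PRIMARY KEY, not a fallback chosen by InnoDB."""
    return any(idx.primary for idx in table.indexes)


# InnoDB clusters this table on its NOT NULL unique key (rule 2),
# but the table still has no explicit primary key.
orders = Table(
    columns=[Column("order_ref", nullable=False), Column("note", nullable=True)],
    indexes=[Index("uq_order_ref", ["order_ref"], unique=True)],
)
print(clustered_index(orders))           # uq_order_ref
print(has_explicit_primary_key(orders))  # False
```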

Zero-ETL integration with Amazon Redshift depends on primary keys to track row changes. Tables without primary keys can be filtered out from a zero-ETL integration if you don’t need to replicate them into Amazon Redshift. However, if you wish to use a table with zero-ETL, the table must have an explicitly defined primary key, as described in the first option.
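Before creating an integration, you can list the tables that lack an explicit primary key. The following sketch assumes a DB-API 2.0 cursor connected to the source MySQL database (for example, from a driver such as PyMySQL or mysql-connector-python; the post doesn't prescribe one). The query uses the standard `information_schema.TABLES` and `information_schema.TABLE_CONSTRAINTS` views; the function name is hypothetical, and the list of excluded system schemas is an assumption you may need to adjust.

```python
# Base tables that have no PRIMARY KEY constraint, excluding MySQL system schemas.
MISSING_PK_SQL = """
SELECT t.TABLE_SCHEMA, t.TABLE_NAME
FROM information_schema.TABLES AS t
LEFT JOIN information_schema.TABLE_CONSTRAINTS AS c
       ON c.TABLE_SCHEMA = t.TABLE_SCHEMA
      AND c.TABLE_NAME = t.TABLE_NAME
      AND c.CONSTRAINT_TYPE = 'PRIMARY KEY'
WHERE t.TABLE_TYPE = 'BASE TABLE'
  AND t.TABLE_SCHEMA NOT IN ('mysql', 'information_schema', 'performance_schema', 'sys')
  AND c.CONSTRAINT_NAME IS NULL
ORDER BY t.TABLE_SCHEMA, t.TABLE_NAME
"""


def find_tables_without_pk(cursor) -> list[tuple[str, str]]:
    """Return (schema, table) pairs for tables that lack an explicit primary key."""
    cursor.execute(MISSING_PK_SQL)
    return [(schema, table) for schema, table in cursor.fetchall()]
```

A table that InnoDB clusters on a NOT NULL unique index still shows up in this list, because only a declared PRIMARY KEY constraint satisfies the anti-join.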

In this post, we walk you through the process of choosing a primary key for a table and modifying (altering) the table to add the primary key. But first, let’s discuss the symptoms you will observe in active zero-ETL integrations that encounter a table without a primary key.

To see tables with integration issues, go to the Table statistics tab on the integration details page on the Amazon Redshift console, or use the SVV_INTEGRATION_TABLE_STATE system view. You will notice a “Needs attention” message under the integration status, and a Failed status reported for one or more tables, as shown in the following screenshot.

You will also find the failure reason in the “reason” field, which should point to the lack of a primary key in the table.
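On the Amazon Redshift side, a query along the following lines lists the affected tables. This sketch assumes the view exposes `schema_name`, `table_name`, `table_state`, and `reason` columns and that failed tables report the state `Failed`; check the SVV_INTEGRATION_TABLE_STATE documentation for the exact column names and values. The `failed_tables` helper is hypothetical and works on rows already fetched as dictionaries, so it doesn't depend on a particular Redshift client.

```python
# Assumed column names and state value; verify against the view's documentation.
FAILED_TABLES_SQL = """
SELECT schema_name, table_name, table_state, reason
FROM svv_integration_table_state
WHERE table_state = 'Failed'
"""


def failed_tables(rows, only_missing_pk: bool = False) -> list[str]:
    """Pick failed tables from fetched rows; optionally keep only primary key failures."""
    result = []
    for row in rows:
        if row["table_state"] != "Failed":
            continue
        # The exact wording of the reason text is an assumption, so match loosely.
        if only_missing_pk and "primary key" not in (row["reason"] or "").lower():
            continue
        result.append(f'{row["schema_name"]}.{row["table_name"]}')
    return result
```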

Having determined that your table needs a primary key, you can decide whether to create one based on existing table columns, or to add a new column to act as a primary key.

If you don’t want the tables without primary keys to be replicated using zero-ETL integrations, you can use the data filtering feature within the zero-ETL integration wizard to filter out the source tables without primary keys.
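If you opt for filtering, you can generate the filter expression from the list of tables without primary keys. This sketch assumes the comma-separated `include: database.table` / `exclude: database.table` pattern syntax that the data filtering documentation describes for MySQL-compatible sources; confirm the syntax, including how to quote unusual identifiers, before using it. The `build_data_filter` helper is hypothetical.

```python
def build_data_filter(databases, tables_without_pk) -> str:
    """Include whole databases, then exclude the tables that have no primary key."""
    # Assumed syntax: "include: db.*, exclude: db.table" (MySQL sources use database.table).
    parts = [f"include: {db}.*" for db in databases]
    parts += [f"exclude: {db}.{table}" for db, table in sorted(tables_without_pk)]
    return ", ".join(parts)


print(build_data_filter(["sales"], [("sales", "audit_log"), ("sales", "click_events")]))
# include: sales.*, exclude: sales.audit_log, exclude: sales.click_events
```

Listing the exclusions after the inclusions is a conservative ordering choice; sorting them keeps the generated expression stable between runs.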

Choosing a primary key

As discussed earlier, the primary key is a combination of one or more columns with values that are unique and not nullable. Some tables might already contain columns that meet this definition. When you define a primary key based on existing table columns that contain real data, it’s referred to as a natural key. For example, in a table storing driver license information, the driver license ID is most likely a value that’s unique and always present (never empty).
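Before promoting existing columns to a natural key, it helps to confirm that the data actually satisfies both conditions. The following sketch checks rows in Python; the `is_candidate_key` helper and the `drivers` sample data are hypothetical. On the database itself, a query such as `SELECT COUNT(*), COUNT(DISTINCT license_id), SUM(license_id IS NULL) FROM drivers` answers the same question for a single column.

```python
from typing import Any, Iterable, Mapping, Sequence


def is_candidate_key(rows: Iterable[Mapping[str, Any]], columns: Sequence[str]) -> bool:
    """True if the column combination is never NULL and unique across all rows."""
    seen = set()
    for row in rows:
        key = tuple(row[col] for col in columns)
        if any(value is None for value in key):
            return False  # primary key columns cannot be nullable
        if key in seen:
            return False  # duplicate combination, so it can't identify rows uniquely
        seen.add(key)
    return True


drivers = [
    {"license_id": "D123-456", "state": "TX", "name": "Ana"},
    {"license_id": "D987-654", "state": "TX", "name": "Raj"},
    {"license_id": "D123-456", "state": "WA", "name": "Lee"},
]
print(is_candidate_key(drivers, ["license_id"]))           # False: duplicate across states
print(is_candidate_key(drivers, ["state", "license_id"]))  # True
```

The Python comparison is exact, whereas MySQL compares string columns using their collation. Values that differ only in letter case can therefore collide under a case-insensitive collation, so run the SQL check on the real table as well.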

You can also define a primary key on a column with artificial values that uniquely identify rows but aren’t derived from real-world data. Such a key is commonly referred to as a synthetic or surrogate key. In MySQL, a numeric auto-increment column is the most common example of a synthetic primary key.

Choosing between a natural and synthetic primary key is a matter of performance and efficiency. The following are the main considerations that should factor into your primary key choice:

The clustered index, which is the tree structure that stores row data, is ordered by primary key values. As a result, the primary key choice determines where new rows will be stored in the structure. An unordered primary key (or a randomly ordered one, such as a UUID version 4) can lead to a more random access pattern on table pages, potentially resulting in lower performance. It can also exacerbate certain types of page contention, such as when InnoDB has to split existing pages to make room for new rows.

Every entry in every secondary index contains a copy of the row’s primary key value. Longer primary keys mean more bloat in secondary index structures, which reduces storage efficiency and can noticeably impact write performance.

Changing a primary key value for a row means physically moving the row from one database page to another, which requires a lot more work than changing a non-key column value. Consequently, columns with frequently changing values are typically not an optimal choice for a primary key.
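A back-of-the-envelope calculation shows how key width multiplies through secondary indexes. This sketch counts only the primary key copies stored in secondary index entries, ignoring record headers, page fill, and the clustered index itself; the row and index counts are hypothetical. The widths assume a BIGINT (8 bytes), a UUID in BINARY(16) (16 bytes), and a UUID stored as 36 ASCII characters in CHAR(36).

```python
def pk_copy_bytes(rows: int, secondary_indexes: int, pk_bytes: int) -> int:
    """Bytes used by primary key copies across all secondary indexes (simplified)."""
    return rows * secondary_indexes * pk_bytes


ROWS = 100_000_000
SECONDARY_INDEXES = 4
for label, width in [("BIGINT", 8), ("BINARY(16) UUID", 16), ("CHAR(36) UUID", 36)]:
    gb = pk_copy_bytes(ROWS, SECONDARY_INDEXES, width) / 1e9
    print(f"{label}: {gb:.1f} GB")
```

For 100 million rows and four secondary indexes, this prints roughly 3.2 GB, 6.4 GB, and 14.4 GB respectively, before any of the real-world overheads the sketch leaves out.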

In other words, the primary key isn’t merely an isolated construct that exists next to a table. It’s a major element of the table structure, with a direct and substantial effect on write performance and storage efficiency. With this in mind, you can choose between a natural primary key and a synthetic one. As a general rule, your choice should prioritize efficiency and performance, even if it means adding a new column. When designing a key for maximum efficiency, remember that certain value types can be encoded in multiple formats. For example, UUID values are commonly represented as human-readable text, but a UUID is fundamentally just a 128-bit number. The text form takes 36 characters, whereas the same value stored in a BINARY(16) column takes only 16 bytes.
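Python’s standard `uuid` module makes the size difference easy to see. The snippet only demonstrates the two encodings; on the MySQL side, MySQL 8.0 provides the `UUID_TO_BIN()` and `BIN_TO_UUID()` functions for converting between the text form and a BINARY(16) column.

```python
import uuid

key = uuid.uuid4()
text_form = str(key)     # canonical text, e.g. for a CHAR(36) column
binary_form = key.bytes  # raw 128-bit value, e.g. for a BINARY(16) column

print(len(text_form))    # 36
print(len(binary_form))  # 16
# The binary form is lossless: it converts back to the same UUID.
assert uuid.UUID(bytes=binary_form) == key
```

`UUID_TO_BIN()` also accepts an optional swap flag that moves the time-high part of a time-based (version 1) UUID to the front, so that values generated close together also sort close together in an index. It doesn’t make random version 4 UUIDs ordered.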

When in doubt, an auto-incremented numeric column works well as a primary key, providing low overhead and adequate performance in the majority of cases. When creating auto-incremented keys, use a BIGINT data type (8 bytes) instead of INT (4 bytes). The additional 4 bytes of storage are a small price to pay for not having to worry about running out of values.
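The difference in headroom is easy to quantify. The following sketch estimates how long an auto-increment sequence lasts at a sustained insert rate; the rate of 1,000 inserts per second is a hypothetical example, and the estimate ignores gaps caused by rolled-back inserts or a non-default `auto_increment_increment`.

```python
SECONDS_PER_DAY = 86_400
SECONDS_PER_YEAR = 365.25 * SECONDS_PER_DAY

INT_MAX = 2**31 - 1           # largest signed INT value
INT_UNSIGNED_MAX = 2**32 - 1  # largest INT UNSIGNED value
BIGINT_MAX = 2**63 - 1        # largest signed BIGINT value


def seconds_until_exhausted(max_value: int, inserts_per_second: float) -> float:
    """How long a sequence starting at 1 lasts at a constant insert rate."""
    return max_value / inserts_per_second


rate = 1_000
print(f"INT:          {seconds_until_exhausted(INT_MAX, rate) / SECONDS_PER_DAY:.1f} days")
print(f"INT UNSIGNED: {seconds_until_exhausted(INT_UNSIGNED_MAX, rate) / SECONDS_PER_DAY:.1f} days")
print(f"BIGINT:       {seconds_until_exhausted(BIGINT_MAX, rate) / SECONDS_PER_YEAR:,.0f} years")
```

At that rate, a signed INT runs out in about 25 days and an unsigned INT in about 50 days, while a signed BIGINT lasts roughly 292 million years.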

Adding a primary key to a table

In the previous sections, we established that a primary key must be defined for each table participating in a zero-ETL integration, and explained the best practices for choosing a primary key. Finding the best way to add a primary key to the table is the final piece of the puzzle.

Adding or modifying a primary key is no trivial task, especially on large tables under write-intensive workloads. There are three main ways to change the structure of a table:

Use the ALTER TABLE DDL statement, which modifies the table directly.

Use a schema change tool such as pt-online-schema-change, gh-ost, or spirit. Schema change tools typically work by creating a new table, migrating the data along with any ongoing changes, and then swapping table names.

Develop a custom solution, tuned and optimized for your specific situation.

By using the ALTER TABLE statement, you ask InnoDB to restructure the clustered index, and therefore to rewrite table data in the order of the new primary key. This is a resource-intensive operation that can take many hours to complete on large tables. InnoDB supports online primary key modifications, which means that the table is not rendered unavailable for the entire duration of the change. However, online DDL operations still involve stages that require exclusive table locks, which can stall queries for an amount of time that’s difficult to predict. An ALTER-based approach can be a good choice for very small tables, tables that don’t experience active traffic, or non-critical tables where temporary workload impact is acceptable.
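For the ALTER TABLE route, the two common variants look like the statements generated below. The sketch builds SQL text only, and the table and column names are hypothetical. Spelling out `ALGORITHM` and `LOCK` makes MySQL reject the statement rather than silently fall back to a more disruptive method if the requested level isn’t supported. Note the difference in lock level: adding a primary key on existing NOT NULL columns can run with `LOCK=NONE`, but according to the MySQL online DDL documentation, adding a new AUTO_INCREMENT column doesn’t permit concurrent DML and needs at least `LOCK=SHARED`.

```python
def quote_ident(name: str) -> str:
    """Quote a MySQL identifier with backticks, doubling any embedded backticks."""
    return "`" + name.replace("`", "``") + "`"


def add_natural_pk_sql(table: str, columns: list[str]) -> str:
    """Promote existing NOT NULL columns to the primary key; concurrent DML stays allowed."""
    cols = ", ".join(quote_ident(c) for c in columns)
    return (f"ALTER TABLE {quote_ident(table)} ADD PRIMARY KEY ({cols}), "
            "ALGORITHM=INPLACE, LOCK=NONE")


def add_surrogate_pk_sql(table: str, column: str = "id") -> str:
    """Add a new BIGINT auto-increment primary key column; concurrent writes are blocked."""
    return (f"ALTER TABLE {quote_ident(table)} ADD COLUMN {quote_ident(column)} "
            "BIGINT UNSIGNED NOT NULL AUTO_INCREMENT PRIMARY KEY, "
            "ALGORITHM=INPLACE, LOCK=SHARED")


print(add_natural_pk_sql("drivers", ["license_id"]))
print(add_surrogate_pk_sql("audit_log"))
```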

Schema change tools are a common answer to schema modification challenges, and are widely used in databases that can’t tolerate the impact of in-place ALTERs. The main premise of such tools is to avoid an in-place modification of the table, and instead to use a replacement table that can be populated with existing data and synchronized with ongoing changes. After the new table is fully populated, the tool typically uses a RENAME statement to switch the tables.

Regarding data migration and change synchronization, the implementation details differ from one tool to another. For example, pt-online-schema-change uses triggers to synchronize ongoing changes, whereas gh-ost and spirit use binary logs to extract change records. Each tool comes with its own set of configuration settings, and the implementation often reflects a specific focus on improving performance and reducing workload impact. If you’re planning to use a schema change tool, be sure to choose one that reflects your own priorities.

Finally, you can design and develop a custom schema change tool if none of the preceding options are sufficient. A custom tool would have to support four main tasks:

Create a new table with the desired structure.

Move data from the existing table to the new table.

Synchronize table changes introduced by active workloads during Step 2.

Atomically switch over from the old to the new table, typically by using the RENAME statement.
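The first and last of these tasks map to plain SQL, as in the following sketch; steps 2 and 3 are where a custom tool spends most of its complexity and are deliberately left out. The `swap_plan` helper and the `_new`/`_old` table suffixes are hypothetical naming choices. A multi-table `RENAME TABLE` statement is performed atomically, so no other session can access either table while it runs. Keep in mind that `CREATE TABLE ... LIKE` doesn’t copy foreign key definitions.

```python
def quote_ident(name: str) -> str:
    """Quote a MySQL identifier with backticks, doubling any embedded backticks."""
    return "`" + name.replace("`", "``") + "`"


def swap_plan(schema: str, table: str, add_pk_clause: str) -> list[str]:
    """SQL for steps 1 and 4; steps 2 (copy) and 3 (sync) are tool-specific."""
    src = f"{quote_ident(schema)}.{quote_ident(table)}"
    new = f"{quote_ident(schema)}.{quote_ident(table + '_new')}"
    old = f"{quote_ident(schema)}.{quote_ident(table + '_old')}"
    return [
        # Step 1: copy the structure, then add the key while the new table is still empty.
        f"CREATE TABLE {new} LIKE {src}",
        f"ALTER TABLE {new} {add_pk_clause}",
        # Steps 2 and 3 would run here: chunked copy plus change synchronization.
        # Step 4: atomic switchover; the old table is kept for rollback.
        f"RENAME TABLE {src} TO {old}, {new} TO {src}",
    ]


for stmt in swap_plan("sales", "audit_log",
                      "ADD COLUMN `id` BIGINT UNSIGNED NOT NULL AUTO_INCREMENT PRIMARY KEY"):
    print(stmt + ";")
```

Adding the primary key before any data is copied keeps the ALTER cheap, because rebuilding an empty table costs next to nothing.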

When developing a custom tool, the following goals come into play:

Control the impact of the schema change on workload performance – This consideration plays a key role in Steps 2 and 3, which are the most resource-intensive and performance-sensitive stages of the process. A common approach is to move data in chunks, with a configurable chunk size and sleep time between chunks to control the priority of your schema migration relative to other workloads. You can set the chunk size and sleep parameters manually, or you can implement logic to tune those parameters in real time based on key database metrics (for example, query latency or InnoDB row lock time).

Provide complete and correct synchronization of ongoing changes – This is a key consideration in Step 3. Here, your implementation decisions will be driven mostly by performance expectations. A table receiving a handful of simple updates per minute might be able to rely on a simple trigger-based synchronization mechanism, whereas a busy table handling tens of thousands of writes per second might need a different approach.

Avoid coarse locks as much as possible – You might not be able to avoid locking entirely, because even a RENAME command will require a brief metadata lock.

Provide crash safety at every step of the process – This makes sure that interrupted schema changes don’t render the table unusable. This is a key consideration during the switchover step.

Be able to stop and resume a schema change – This can be valuable, especially for low-impact tools that can take several days to apply the change.
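As one illustration of the first goal, the following sketch adjusts chunk size and sleep time from a latency signal: it grows the chunk by a fixed step while latency stays under target and halves it when latency exceeds the target, in the spirit of additive-increase, multiplicative-decrease congestion control. The `Throttle` class, its default thresholds, and the idea of feeding it one latency measurement per chunk are hypothetical; how you measure latency (for example, from your own probe queries or from database metrics) is up to your tool.

```python
from dataclasses import dataclass


@dataclass
class Throttle:
    """Adaptive pacing for chunked copies (hypothetical policy and defaults)."""
    chunk_size: int = 1_000
    sleep_seconds: float = 0.1
    min_chunk: int = 100
    max_chunk: int = 20_000
    chunk_step: int = 500
    min_sleep: float = 0.01
    max_sleep: float = 5.0
    target_latency_ms: float = 50.0

    def adjust(self, observed_latency_ms: float) -> None:
        """Update pacing after each chunk based on the latency measured for it."""
        if observed_latency_ms > self.target_latency_ms:
            # Workload is suffering: halve the chunk and double the pause.
            self.chunk_size = max(self.min_chunk, self.chunk_size // 2)
            self.sleep_seconds = min(self.max_sleep, self.sleep_seconds * 2)
        else:
            # Headroom available: grow the chunk by a fixed step and shorten the pause.
            self.chunk_size = min(self.max_chunk, self.chunk_size + self.chunk_step)
            self.sleep_seconds = max(self.min_sleep, self.sleep_seconds / 2)


throttle = Throttle()
throttle.adjust(observed_latency_ms=120)  # over target: chunk 1000 -> 500, sleep 0.1 -> 0.2
throttle.adjust(observed_latency_ms=20)   # under target: chunk 500 -> 1000, sleep 0.2 -> 0.1
print(throttle.chunk_size, throttle.sleep_seconds)  # 1000 0.1
```

Backing off quickly and recovering slowly favors the production workload over the migration, which matches the goal of keeping the schema change at a lower priority.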

Reducing impact with the help of logical replication

Busy MySQL databases commonly use binary log replicas to perform large schema changes. In this approach, logical replication between the primary and the replica takes care of data synchronization. The schema change is performed on the replica, thereby insulating the primary database from much of the impact, although you still need to perform a switchover at the end of the process.

Depending on the extent of your schema change, replication compatibility might become a concern. For details, see Replication with Differing Table Definitions on Source and Replica in the MySQL Reference Manual.

The exact steps to set up a replication-backed solution will depend on your database solution. For RDS for MySQL database instances, you can use the service-provided read replicas. Following the schema change, a replica can be promoted to a standalone instance, and you can reconfigure your applications to use that replica.

For Amazon Aurora MySQL, or Amazon RDS for MySQL use cases in which you don’t want to use the built-in read replicas, you can follow these steps:

Enable binary logging on the RDS for MySQL database instance or an Aurora MySQL cluster:

In Amazon RDS for MySQL, you can turn on binary logging by enabling automated backups.

In Amazon Aurora MySQL, use the binlog_format parameter in a custom DB cluster parameter group.

Configure binary log retention using the rds_set_configuration stored procedure. Choose a retention setting that gives you enough time to follow the remaining setup steps.

Create a copy of the database instance or cluster:

In Amazon RDS for MySQL, you can restore the database from the most recent snapshot, or use the point-in-time restore feature.

In Amazon Aurora MySQL, you can create a clone of your cluster.

Configure replication between the original cluster and the copy using the replication stored procedures for Amazon RDS for MySQL or Amazon Aurora MySQL.

Run the schema change on the replica. You don’t have to stop replication before running the change, but doing so might help it complete more quickly.

Resume replication (if stopped) and verify that it’s working without errors.

Wait for replication to catch up.

Switch applications and workloads over to the replica. Keep in mind the standard best practices for switchovers to avoid data being modified simultaneously on both databases.

Recreate the zero-ETL integration with the new database.
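The binary log retention and replication configuration steps use Amazon RDS stored procedures. The following sketch only assembles the SQL text; the host, user, password, and binary log coordinates are placeholders, and the real coordinates come from the copy you created (how you find them depends on whether you restored a snapshot, used point-in-time restore, or cloned a cluster). The procedure that configures the source is `mysql.rds_set_external_source` on newer engine versions and `mysql.rds_set_external_master` on older ones; confirm which name your engine version supports, which is why the sketch makes it a parameter. The helper names are hypothetical.

```python
def sql_literal(value) -> str:
    """Render a value as a MySQL literal (minimal escaping, sufficient for this sketch)."""
    if isinstance(value, int):
        return str(value)
    return "'" + str(value).replace("\\", "\\\\").replace("'", "''") + "'"


def replication_setup_sql(source_host, repl_user, repl_password, binlog_file, binlog_pos,
                          source_port=3306, use_ssl=True, retention_hours=24,
                          procedure="mysql.rds_set_external_source"):
    """SQL for the retention and replication steps, grouped by where each statement runs."""
    args = [source_host, source_port, repl_user, repl_password,
            binlog_file, binlog_pos, 1 if use_ssl else 0]
    return {
        # Run on the original instance or cluster (the replication source).
        "source": [f"CALL mysql.rds_set_configuration('binlog retention hours', {retention_hours})"],
        # Run on the copy, which becomes the replica.
        "replica": [
            f"CALL {procedure}({', '.join(sql_literal(a) for a in args)})",
            "CALL mysql.rds_start_replication",
        ],
    }


plan = replication_setup_sql("source.example.internal", "repl_user", "<password>",
                             "mysql-bin-changelog.000123", 4567)
for where, statements in plan.items():
    for stmt in statements:
        print(f"-- on {where}\n{stmt};")
```

The generated replica statement contains the replication password, so avoid writing it to logs or version control.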

Confirming a successful zero-ETL integration

From the zero-ETL integration point of view, adding a primary key to an existing table should automatically trigger a re-synchronization of the table. The integration status will change from Needs attention to Active, and the table status should transition from Failed to Resync Initiated, and finally to Synced when re-synchronization is complete. It might take a few minutes for the table to re-synchronize.

When working on adding or altering a primary key, regardless of the strategy you choose, it is essential to test your approach in a non-production environment before making final changes in production.

Conclusion

In this post, we explained how you can continue to use Amazon Aurora MySQL or Amazon RDS for MySQL zero-ETL integration to Amazon Redshift for scenarios where your source tables do not have a primary key defined. We also provided general guidance, strategies, and perspectives on handling primary key creation and modification in your Aurora or Amazon RDS environments.

Get started using zero-ETL integration to quickly and effortlessly run analytics on transactional or operational datasets.

About the authors

Rohit Vashishtha is a Senior Redshift Specialist Solutions Architect at AWS based in Dallas, Texas. He has over 17 years of experience architecting, building, leading, and maintaining big data platforms. Rohit helps customers modernize their analytic workloads using the breadth of AWS services and ensures that customers get the best price/performance with utmost security and data governance.

Szymon Komendera is a Database Solutions Architect at AWS, with 20 years of experience in databases, software development, and application availability. He spent the majority of his 9-year tenure at AWS as a member of Amazon Aurora MySQL and Amazon RDS for MySQL engineering teams. He’s a support person at heart, with a passion for helping and teaching.

Abhinav Dhandh is a Senior Product Manager – Technical for Amazon Aurora, and he leads all zero-ETL and change data capture (CDC) streaming product vision and roadmap for Aurora MySQL and PostgreSQL databases within AWS. He also leads the Aurora MySQL product management and has launched multiple capabilities around version currency, upgrades, migrations, availability, scaling, and performance.

Kanishka Chaturvedi is a Principal Engineer for Amazon Aurora, leading the technical direction of ZeroETL initiatives for Aurora engines. His background revolves around databases, having incubated other Amazon database services like Amazon DocumentDB and Amazon Timestream.

Stas Bogachinsky is a Sr. Database Specialist Solutions Architect at AWS. He works with AWS customers to design, secure, and optimize their database workloads.
