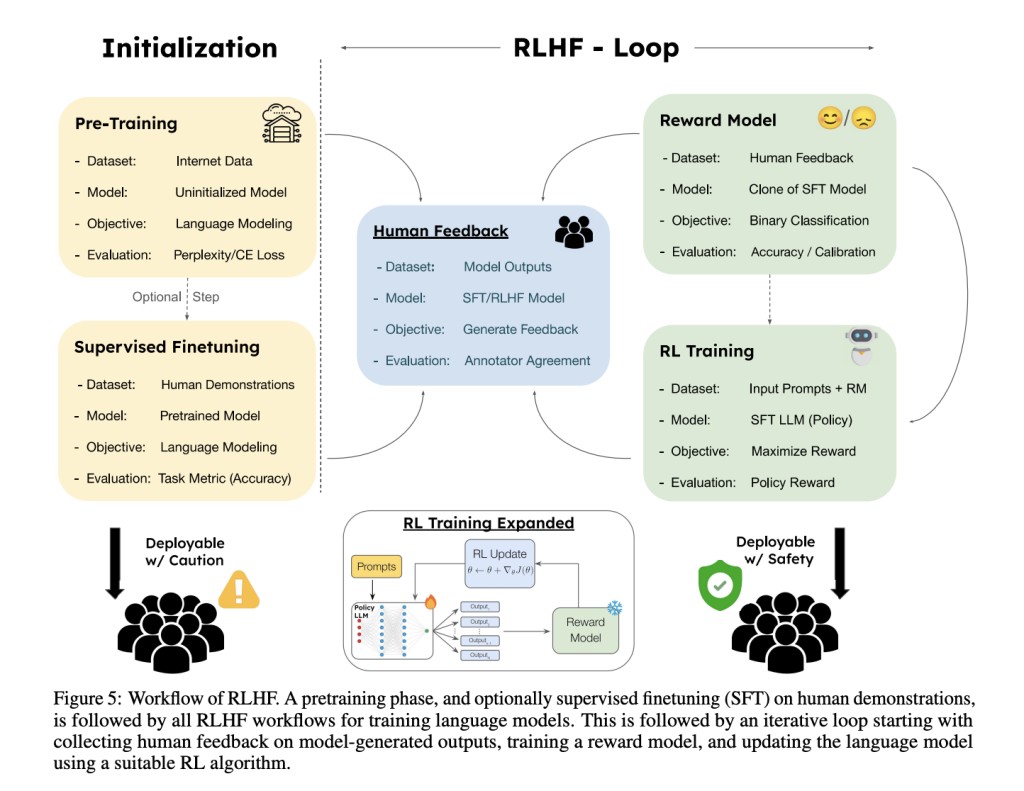

Large language models (LLMs) are now used well beyond basic language tasks. They appear in sectors such as technology, healthcare, finance, and education, where they can transform established workflows. Reinforcement Learning from Human Feedback (RLHF) is a method used to make LLMs safe, trustworthy, and more human-like in their behavior. RLHF became popular because it could solve Reinforcement Learning (RL) problems, such as simulated robotic locomotion and playing Atari games, by using human feedback about preferences over demonstrated behaviors. Today it is often used to finetune LLMs.

State-of-the-art LLMs are important tools for solving complex tasks, but training them to serve as effective assistants for humans requires careful consideration. RLHF, which uses human feedback to update the model toward human preferences, addresses this need and can reduce problems such as toxicity and hallucinations. However, understanding RLHF is complicated by the initial design choices that popularized the method, and much existing work augments those choices rather than fundamentally improving the framework. This paper instead examines the fundamentals of the framework itself.

Researchers from the University of Massachusetts, IIT Delhi, Princeton University, Georgia Tech, and The Allen Institute for AI contributed equally to developing a comprehensive understanding of RLHF by analyzing the core components of the method. They adopted a Bayesian perspective on RLHF to frame the method's foundational questions and to highlight the importance of the reward function. The reward function is the central cog of the RLHF procedure, and modeling it requires the RLHF formulation to rely on a set of assumptions. The researchers' analysis leads to the notion of an oracular reward, which serves as a theoretical gold standard for future efforts.
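To make the idea of modeling a reward function from human feedback more concrete, here is a minimal Python sketch. It assumes the Bradley-Terry preference model, a common choice in the RLHF literature that this article does not name. The function names and the example reward values are hypothetical and chosen only for illustration.

```python
import math


def preference_probability(reward_chosen: float, reward_rejected: float) -> float:
    """Probability that the 'chosen' response is preferred.

    Assumption: Bradley-Terry model, where the preference probability is a
    sigmoid of the difference between the two scalar rewards.
    """
    return 1.0 / (1.0 + math.exp(-(reward_chosen - reward_rejected)))


def preference_loss(reward_chosen: float, reward_rejected: float) -> float:
    """Negative log-likelihood of the human's recorded preference."""
    return -math.log(preference_probability(reward_chosen, reward_rejected))


# Hypothetical scalar rewards a reward model might assign to two responses.
agree = preference_loss(reward_chosen=2.0, reward_rejected=0.0)
disagree = preference_loss(reward_chosen=0.0, reward_rejected=2.0)
print(f"loss when the model agrees with the human label:    {agree:.4f}")
print(f"loss when the model disagrees with the human label: {disagree:.4f}")
```

Under this assumption, minimizing the loss over many labeled pairs pushes the reward model to score preferred responses above rejected ones.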

The main aim of reward learning in RLHF is to convert human feedback into an optimized reward function. The reward function serves a dual purpose: it encodes the information needed both to measure and to induce alignment with human objectives. Given the reward function, RL algorithms can learn a language model policy that maximizes the cumulative reward, resulting in an aligned language model. The paper describes two families of methods:

Value-based methods: These methods focus on learning the value of states based on the expected cumulative reward from that state following a policy.

Policy-gradient methods: These methods train a parameterized policy using reward feedback. They apply gradient ascent to the policy parameters to maximize the expected cumulative reward.
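The contrast between the two families can be sketched on toy problems. The Python below is an illustration under stated assumptions, not the paper's setup. The three-state chain, the three-armed bandit, the rewards, the discount factor, and the learning rate are all hypothetical. The policy-gradient part uses the exact expected gradient for a softmax policy rather than sampled estimates, which keeps the example deterministic.

```python
import math

# Value-based: iterative policy evaluation on a hypothetical chain 0 -> 1 -> 2.
# State 2 is terminal, and the fixed policy always moves right.
STEP_REWARD = {0: 0.0, 1: 1.0}  # reward received when leaving each state
NEXT_STATE = {0: 1, 1: 2}
GAMMA = 0.9  # discount factor (assumed)


def evaluate_policy(sweeps: int = 50) -> dict:
    """Estimate the expected discounted return from each state under the policy."""
    values = {0: 0.0, 1: 0.0, 2: 0.0}  # the terminal state's value stays 0
    for _ in range(sweeps):
        for state in (0, 1):
            values[state] = STEP_REWARD[state] + GAMMA * values[NEXT_STATE[state]]
    return values


# Policy-gradient: gradient ascent on softmax policy parameters for a bandit.
def softmax(prefs: list) -> list:
    top = max(prefs)
    exps = [math.exp(p - top) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]


def policy_gradient_ascent(expected_rewards: list, steps: int = 500, lr: float = 0.5) -> list:
    """Maximize J(theta) = sum_a pi(a) * r(a) by gradient ascent on theta."""
    theta = [0.0] * len(expected_rewards)
    for _ in range(steps):
        probs = softmax(theta)
        objective = sum(p * r for p, r in zip(probs, expected_rewards))
        # For a softmax policy, dJ/dtheta[a] = pi(a) * (r(a) - J).
        theta = [t + lr * p * (r - objective)
                 for t, p, r in zip(theta, probs, expected_rewards)]
    return softmax(theta)


print("state values:", evaluate_policy())
print("learned action probabilities:", policy_gradient_ascent([0.2, 0.5, 0.9]))
```

The value-based routine never represents a policy explicitly and only scores states. The policy-gradient routine adjusts the policy parameters directly so that probability mass shifts toward the higher-reward action.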

An overview of the RLHF procedure along with the various challenges studied in this work:

In the RL finetuning phase of RLHF, the language model (LM) is finetuned using the trained reward model. Algorithms such as Proximal Policy Optimization (PPO) and Advantage Actor-Critic (A2C) update the parameters of the LM so that its generated outputs maximize the obtained reward. Both are policy-gradient algorithms, which update the policy parameters directly using evaluative reward feedback. During training, the pre-trained/SFT language model is prompted with contexts from a prompting dataset. This dataset may or may not be identical to the one used for collecting human demonstrations in the SFT phase.
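As a rough illustration of how PPO limits the size of each policy update, the sketch below implements the clipped surrogate objective from the standard PPO formulation. The article itself does not spell out this objective, so treat it as background. The function names, the clipping value of 0.2, and the example ratios and advantages are assumptions. In an RLHF setting the advantages would be derived from reward-model scores, whereas here they are placeholder numbers.

```python
def clipped_surrogate(ratio: float, advantage: float, clip_eps: float = 0.2) -> float:
    """PPO clipped term for one sample.

    ratio is pi_new(action | context) / pi_old(action | context).
    Taking the minimum of the clipped and unclipped terms removes any
    incentive to move the ratio outside [1 - clip_eps, 1 + clip_eps].
    """
    clipped_ratio = max(1.0 - clip_eps, min(ratio, 1.0 + clip_eps))
    return min(ratio * advantage, clipped_ratio * advantage)


def ppo_objective(ratios: list, advantages: list, clip_eps: float = 0.2) -> float:
    """Average clipped surrogate over a batch. PPO maximizes this quantity."""
    terms = [clipped_surrogate(r, a, clip_eps) for r, a in zip(ratios, advantages)]
    return sum(terms) / len(terms)


# Placeholder batch: one over-sampled good action, one under-sampled bad action.
print(ppo_objective(ratios=[1.5, 0.5], advantages=[1.0, -1.0]))
```

With a positive advantage, the gain from raising the ratio is capped at `1 + clip_eps`. With a negative advantage, the gain from lowering the ratio is capped at `1 - clip_eps`.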

In conclusion, the researchers examined the fundamental aspects of RLHF to clarify its mechanisms and limitations. They critically analyzed the reward models that form the core component of RLHF and highlighted the impact of different implementation choices. The paper addresses the challenges of learning these reward functions and shows both the practical and the fundamental limitations of RLHF. It also discusses other aspects, including the types of feedback, the details and variations of training algorithms, and alternative methods for achieving alignment without RL.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post This AI Paper Explores the Fundamental Aspects of Reinforcement Learning from Human Feedback (RLHF): Aiming to Clarify its Mechanisms and Limitations appeared first on MarkTechPost.
