In digital media, the need for precise control over image and video generation has driven the development of technologies such as ControlNets. These networks steer a diffusion model's output with spatial conditions such as depth maps, Canny edges, and human poses. However, pairing an existing ControlNet with a new model often requires significant computational resources and complex adjustments, because the feature spaces of different models do not match.

The main challenge is adapting ControlNets, which were designed for static images, to video. Video generation demands both spatial and temporal consistency, and existing ControlNets do not handle the temporal side well: applying an image-focused ControlNet to each video frame independently produces inconsistencies from one frame to the next, which degrades the output.

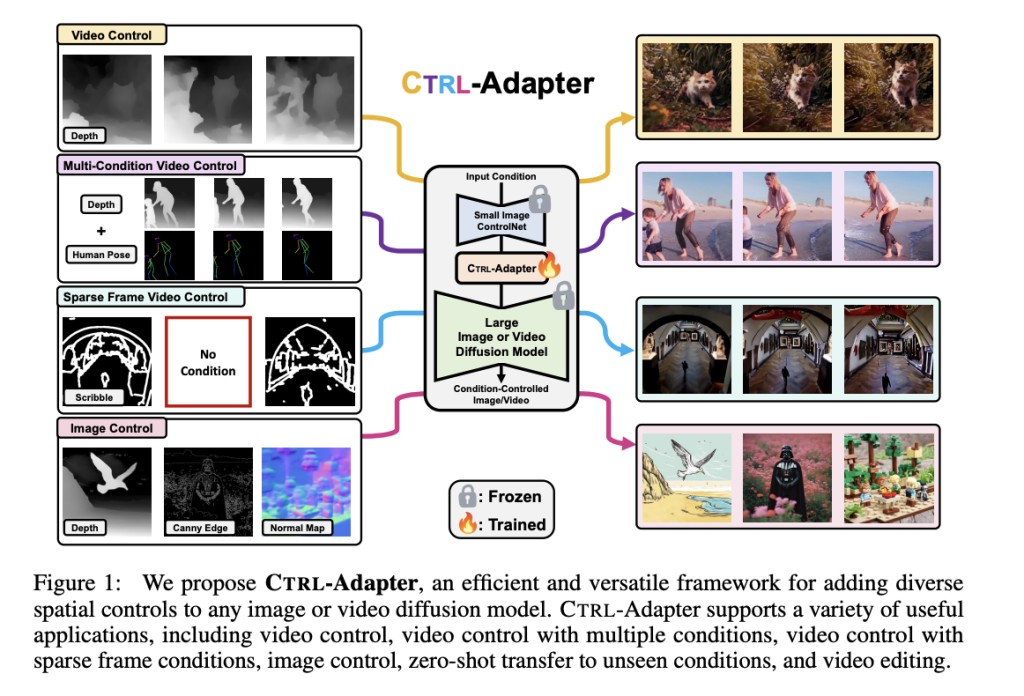

Researchers from UNC-Chapel Hill have developed CTRL-Adapter, a framework for integrating existing ControlNets with new image and video diffusion models. The parameters of both the ControlNets and the diffusion models stay frozen, so only the adapter itself is trained. This simplifies adaptation and greatly reduces the need for extensive retraining.

CTRL-Adapter combines spatial and temporal modules, which help it keep the frames of a video sequence consistent with one another. It supports multiple control conditions by averaging the outputs of several ControlNets, adjusting the combination to each condition's specific requirements. This allows fine-grained control over the generated media, so complex modifications involving several conditions can be applied without a large computational cost.
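The two mechanisms described above, mixing information across frames and combining several ControlNets, can be illustrated with a small NumPy sketch. It is not the CTRL-Adapter implementation. `temporal_self_attention` is a parameter-free stand-in for a temporal module (real modules use learned query/key/value projections and residual connections), and `fuse_control_features` shows plain or weighted averaging of per-condition ControlNet features. Both function names and the (frames, channels, height, width) layout are hypothetical choices made for illustration.

```python
import numpy as np


def temporal_self_attention(x):
    """Let every spatial position attend across frames.

    x has shape (frames, channels, height, width). Queries, keys and values
    are the raw features (no learned projections), a deliberate simplification.
    """
    f, c, h, w = x.shape
    # Treat each pixel location as a sequence of `f` frame tokens: (h*w, f, c)
    seq = x.transpose(2, 3, 0, 1).reshape(h * w, f, c)
    scores = seq @ seq.transpose(0, 2, 1) / np.sqrt(c)  # (h*w, f, f)
    scores -= scores.max(axis=-1, keepdims=True)  # stabilize the softmax
    attn = np.exp(scores)
    attn /= attn.sum(axis=-1, keepdims=True)
    mixed = attn @ seq  # each frame becomes a blend of all frames
    # Restore the (frames, channels, height, width) layout
    return mixed.reshape(h, w, f, c).transpose(2, 3, 0, 1)


def fuse_control_features(features, weights=None):
    """Combine same-shaped ControlNet outputs, one array per condition.

    With weights=None this is a plain average; otherwise the weights are
    normalized to sum to 1 and applied per condition.
    """
    stacked = np.stack(features, axis=0)  # (num_conditions, ...)
    if weights is None:
        return stacked.mean(axis=0)
    w = np.asarray(weights, dtype=float)
    if w.shape != (stacked.shape[0],):
        raise ValueError("expected exactly one weight per condition")
    w = w / w.sum()
    # Broadcast each condition's scalar weight over all feature dimensions
    w = w.reshape((-1,) + (1,) * (stacked.ndim - 1))
    return (w * stacked).sum(axis=0)
```

Plain averaging treats every condition (say, depth and pose features) equally; the optional weights mirror the idea that the combination can be tuned per condition, although how CTRL-Adapter actually sets such weights is not shown here. In the attention sketch, a video whose frames are already identical passes through unchanged, while differing frames are pulled toward one another.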

Extensive experiments show that CTRL-Adapter improves control in video generation while reducing computational demands. When adapted to video diffusion models such as Hotshot-XL, I2VGen-XL, and SVD, it achieves top-tier performance on the DAVIS 2017 dataset, outperforming other methods for controlled video generation. It maintains high output fidelity with far fewer resources: under 10 GPU hours of training is enough to surpass baseline methods that require hundreds of GPU hours.

CTRL-Adapter's versatility also extends to handling sparse frame conditions (control inputs provided for only some frames) and to combining multiple conditions seamlessly. This flexibility gives finer control over the visual characteristics of images and videos, enabling applications such as video editing and complex scene rendering with minimal resource expenditure. For example, the framework can combine conditions such as depth and human pose more efficiently than previous systems while maintaining high output quality, with an average FID (Fréchet Inception Distance) improvement of 20-30% over baseline models.
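For readers unfamiliar with the metric, FID compares the mean and covariance of Inception-network features extracted from real and generated images: FID = ||mu_r - mu_g||^2 + Tr(Sigma_r + Sigma_g - 2 (Sigma_r Sigma_g)^(1/2)). The NumPy sketch below computes this formula from precomputed feature arrays; feature extraction is omitted, and the function name `frechet_distance` is hypothetical. Common reference implementations take the matrix square root with `scipy.linalg.sqrtm`; this version uses an eigendecomposition instead so that it depends only on NumPy.

```python
import numpy as np


def _sqrtm_psd(a):
    """Square root of a symmetric positive semi-definite matrix."""
    vals, vecs = np.linalg.eigh(a)
    vals = np.clip(vals, 0.0, None)  # drop tiny negative round-off
    return (vecs * np.sqrt(vals)) @ vecs.T


def frechet_distance(feats_real, feats_gen):
    """FID between two feature sets of shape (num_samples, feature_dim)."""
    mu_r, mu_g = feats_real.mean(axis=0), feats_gen.mean(axis=0)
    cov_r = np.cov(feats_real, rowvar=False)
    cov_g = np.cov(feats_gen, rowvar=False)
    # Tr((cov_r @ cov_g)^(1/2)) equals the sum of square roots of the
    # eigenvalues of the symmetric matrix sqrt(cov_r) @ cov_g @ sqrt(cov_r)
    sqrt_r = _sqrtm_psd(cov_r)
    middle = sqrt_r @ cov_g @ sqrt_r
    eig = np.clip(np.linalg.eigvalsh(middle), 0.0, None)
    tr_covmean = np.sqrt(eig).sum()
    diff = mu_r - mu_g
    return float(diff @ diff + np.trace(cov_r) + np.trace(cov_g) - 2.0 * tr_covmean)
```

Lower FID is better, so an FID "improvement" means a reduction in this value. Two identical feature sets give a distance of (numerically) zero, and shifting every feature by a constant changes only the mean term.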

In conclusion, CTRL-Adapter significantly advances controlled image and video generation. By adapting existing ControlNets to new models, it reduces computational costs and improves the ability to produce high-quality, consistent media across various conditions. Its ability to merge multiple controls into a single output opens the way for new applications in digital media production, making it a valuable tool for developers and creatives pushing the boundaries of video and image generation.

The post Researchers from UNC-Chapel Hill Introduce CTRL-Adapter: An Efficient and Versatile AI Framework for Adapting Diverse Controls to Any Diffusion Model appeared first on MarkTechPost.
