Imagine having a digital assistant that can effortlessly navigate your computer, tackling complex tasks across different apps and operating systems with minimal guidance. It's an ambitious prospect that could transform productivity and accessibility. However, existing benchmarks for evaluating such autonomous agents have fallen short: they are either confined to specific applications or lack an interactive environment altogether. That is, until now.

This paper introduces OSWorld, a groundbreaking platform designed to accelerate the development of genuinely capable computer agents. According to its authors, OSWorld is the first scalable, real computer environment for testing multimodal agents across Linux, Windows, macOS, and other operating systems.

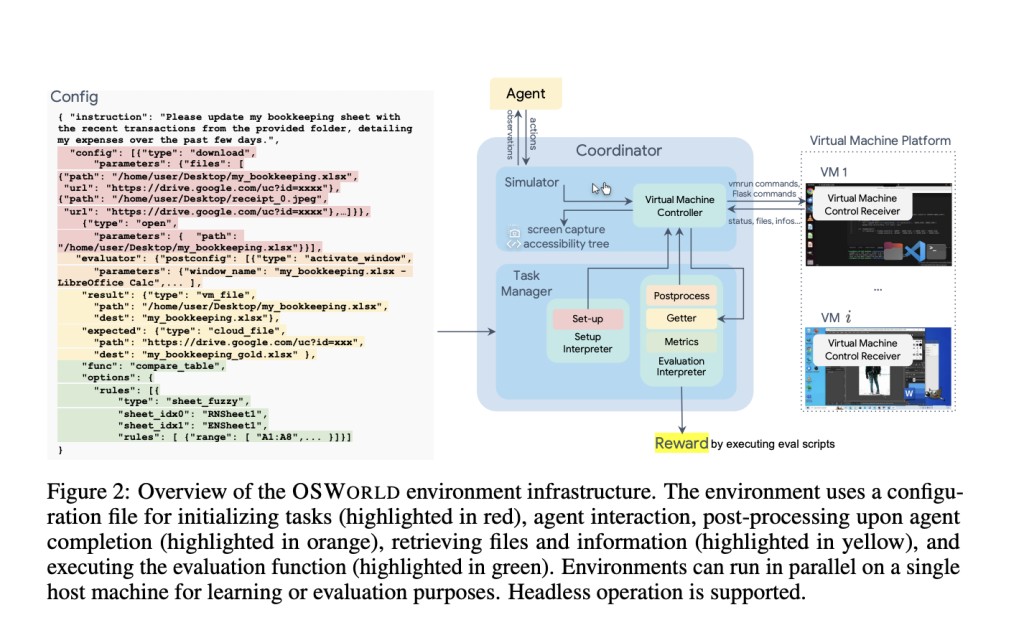

But what sets OSWorld apart? It is an integrated, controllable environment that supports task setup, evaluation, and interactive learning. Agents interact through raw mouse and keyboard inputs, just as a human user would, and can use any application installed on the system. Tasks are no longer limited by the narrow, simulated environments of earlier benchmarks.
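To make "raw mouse and keyboard inputs" more concrete, here is a minimal sketch of how an agent's low-level actions could be represented and rendered as commands for a controller to execute. The action classes and the `to_command` helper are hypothetical, and the pyautogui-style call strings are an assumption about the kind of action space involved, not OSWorld's actual interface. The code only builds command strings; it does not import or call pyautogui.

```python
from dataclasses import dataclass
from typing import Union


# Hypothetical low-level action types (assumption: OSWorld's real schema may differ).
@dataclass
class Click:
    x: int
    y: int
    button: str = "left"


@dataclass
class TypeText:
    text: str


@dataclass
class Hotkey:
    keys: tuple[str, ...]


Action = Union[Click, TypeText, Hotkey]


def to_command(action: Action) -> str:
    """Render an action as a pyautogui-style call string (illustrative only)."""
    if isinstance(action, Click):
        return f"pyautogui.click({action.x}, {action.y}, button={action.button!r})"
    if isinstance(action, TypeText):
        return f"pyautogui.write({action.text!r})"
    if isinstance(action, Hotkey):
        args = ", ".join(repr(k) for k in action.keys)
        return f"pyautogui.hotkey({args})"
    raise TypeError(f"unsupported action: {action!r}")


# Example plan: open a "Save As" dialog, type a filename, confirm.
plan = [Hotkey(("ctrl", "shift", "s")), TypeText("report.xlsx"), Hotkey(("enter",))]
for step in plan:
    print(to_command(step))
```

Keeping actions as plain data and rendering them separately means the same agent output could be logged, replayed, or sent to a different controller without changing the agent itself.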

To showcase OSWorld's potential, the researchers have curated a benchmark of 369 real-world computer tasks spanning web browsers, office suites, media players, coding IDEs, and multi-app workflows. Each task is carefully annotated with a natural language instruction, an initial state setup configuration, and a custom execution-based evaluation script, which makes assessment reliable and reproducible.
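A task with these three parts can be sketched as a small record: an instruction, setup steps that prepare the initial state, and an evaluation function that checks the resulting machine state rather than the agent's action trace. Everything here (`Task`, `run_setup`, the `create_file` step type, the file-rename task) is a hypothetical stand-in for illustration. A temporary directory stands in for the virtual machine and a direct `os.rename` call stands in for the agent; OSWorld's actual task format and evaluators are richer.

```python
import os
import tempfile
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Task:
    """Hypothetical task record mirroring the three annotated parts."""
    instruction: str                           # natural-language goal for the agent
    evaluate: Callable[[str], bool]            # execution-based checker on final state
    setup: list[dict] = field(default_factory=list)  # initial-state configuration steps


def run_setup(task: Task, root: str) -> None:
    # Apply each setup step; only a toy "create_file" step is supported here.
    for step in task.setup:
        if step["type"] == "create_file":
            with open(os.path.join(root, step["path"]), "w") as f:
                f.write(step["content"])
        else:
            raise ValueError(f"unknown setup step: {step['type']}")


def file_renamed(root: str) -> bool:
    # Inspect the outcome on disk, not how the agent got there.
    return (os.path.exists(os.path.join(root, "final.txt"))
            and not os.path.exists(os.path.join(root, "draft.txt")))


task = Task(
    instruction="Rename draft.txt to final.txt.",
    evaluate=file_renamed,
    setup=[{"type": "create_file", "path": "draft.txt", "content": "hello"}],
)

with tempfile.TemporaryDirectory() as root:
    run_setup(task, root)
    print(task.evaluate(root))  # False: nothing has acted yet
    # Stand-in for the agent completing the task.
    os.rename(os.path.join(root, "draft.txt"), os.path.join(root, "final.txt"))
    print(task.evaluate(root))  # True
```

Checking the final state means any valid route to the goal (menus, shortcuts, a terminal) passes, which is what makes execution-based evaluation more robust than comparing against a fixed action sequence.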

So, how did state-of-the-art large language models and vision-language models such as GPT-4V, Gemini-Pro, and Claude-3 Opus fare on this challenge? The results are eye-opening: even the best model achieved a success rate of only 12.24%, revealing significant deficiencies in GUI grounding, operational knowledge, and long-horizon planning.
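A success rate like the one reported above is the share of tasks whose evaluation passes. The sketch below tallies it per domain and overall, assuming each task is scored as a simple pass/fail. The `success_rates` helper, the domain names, and the outcomes are made up for illustration and are not OSWorld results.

```python
from collections import defaultdict


def success_rates(results: list[tuple[str, bool]]) -> dict[str, float]:
    """Per-domain and overall success rate in percent (binary scoring assumed)."""
    totals: dict[str, int] = defaultdict(int)
    passed: dict[str, int] = defaultdict(int)
    for domain, ok in results:
        # Count each task once under its domain and once under "overall".
        for key in (domain, "overall"):
            totals[key] += 1
            passed[key] += int(ok)
    return {k: round(100 * passed[k] / totals[k], 2) for k in totals}


# Hypothetical outcomes, for illustration only.
results = [
    ("office", True),
    ("office", False),
    ("web", False),
    ("web", False),
    ("multi_app", False),
]
print(success_rates(results))
```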

But don't despair, for these findings illuminate a path forward. The researchers identify several promising directions: improving vision-language models' ability to interact with GUIs, developing agent architectures that support exploration, memory, and reflection, addressing safety challenges in realistic environments, and expanding data and environments to fuel agent development.

OSWorld represents a turning point in the pursuit of autonomous digital assistants. By providing a realistic, scalable testing environment and a diverse benchmark, the platform paves the way for research that could one day make human-level computer task automation a reality. The future of effortless, intelligent computer interaction may be closer than it seems, and OSWorld is helping to lead the charge.

Check out the Paper and Project. All credit for this research goes to the researchers of this project.

The post Meet OSWorld: Revolutionizing Autonomous Agent Development with Real-World Computer Environments appeared first on MarkTechPost.