In the realm of artificial intelligence, the emergence of powerful autoregressive (AR) large language models (LLMs), like the GPT series, has marked a significant milestone. Despite challenges such as hallucinations, these models are widely regarded as a substantial step toward artificial general intelligence (AGI). Their effectiveness lies in their self-supervised learning strategy, which involves predicting the next token in a sequence. Studies have underscored their scalability and generalizability, which enable them to adapt to diverse, unseen tasks through zero-shot and few-shot learning. These characteristics position AR models as promising candidates for learning from vast amounts of unlabeled data.

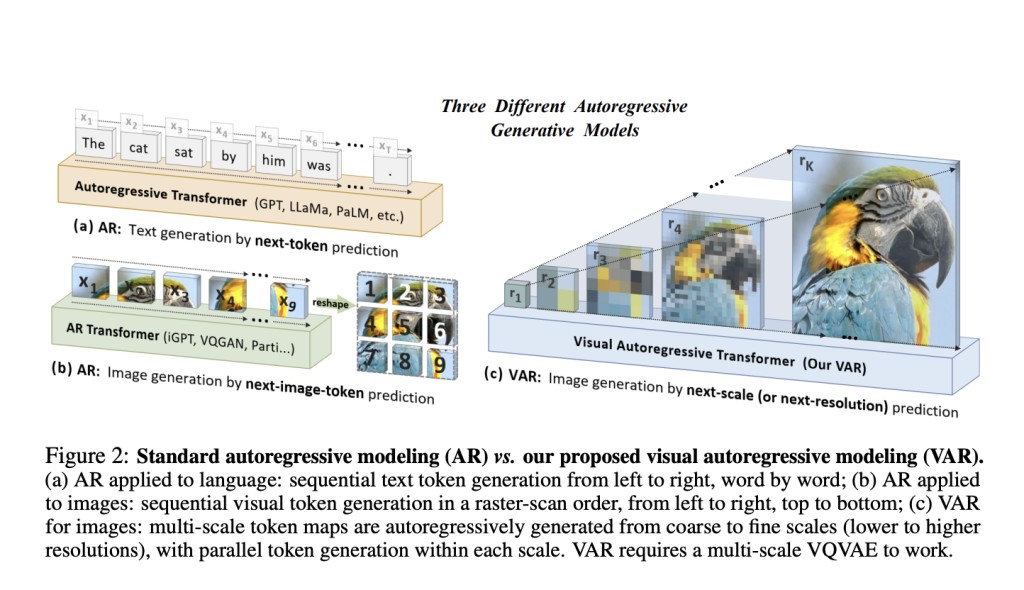

Simultaneously, the field of computer vision has been exploring whether large autoregressive or world models can replicate the scalability and generalizability seen in language models. Efforts such as VQGAN and DALL-E, alongside their successors, have showcased the capability of AR models in image generation. These models use a visual tokenizer to discretize a continuous image into a 2D grid of tokens, which is then flattened into a 1D sequence for AR learning. However, despite these advancements, the scaling laws of such models remain largely unexplored, and their performance lags significantly behind that of diffusion models.
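The flattening step described above can be sketched in a few lines. This is a minimal illustration, not code from VQGAN or DALL-E: the function names `flatten_raster` and `next_token_pairs` and the tiny 2×3 grid of token ids are assumptions made up for the example, and row-by-row (raster) order is assumed as the flattening rule.

```python
from typing import List, Tuple


def flatten_raster(token_grid: List[List[int]]) -> List[int]:
    """Flatten a 2D grid of token ids row by row (raster order)."""
    return [tok for row in token_grid for tok in row]


def next_token_pairs(seq: List[int]) -> List[Tuple[List[int], int]]:
    """Build (prefix, target) pairs as used in next-token prediction."""
    return [(seq[:i], seq[i]) for i in range(1, len(seq))]


# Hypothetical 2x3 grid of codebook indices, standing in for the
# output of a visual tokenizer (values are made up).
grid = [
    [5, 1, 7],
    [2, 9, 4],
]

sequence = flatten_raster(grid)
pairs = next_token_pairs(sequence)

for prefix, target in pairs:
    print(prefix, "->", target)
```

Each pair asks the model to predict one token from everything before it in the flattened sequence, so the number of prediction steps grows with the number of tokens in the image.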

To address this gap, researchers at Peking University and ByteDance have proposed a new approach to autoregressive learning for images, termed Visual AutoRegressive (VAR) modeling. Inspired by the hierarchical nature of human perception and the design principles of multi-scale systems, VAR introduces a "next-scale prediction" paradigm. In VAR, images are encoded into multi-scale token maps, and the autoregressive process begins from a low-resolution token map and progressively expands to higher resolutions. Their methodology, which uses a GPT-2-like transformer architecture, significantly improves on AR baselines, especially on the ImageNet 256×256 benchmark.
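The coarse-to-fine loop described above can be sketched as follows. This is a rough illustration under stated assumptions, not the authors' implementation: `generate_next_scale`, `dummy_predictor`, the vocabulary size of 16, and the scale schedule `(1, 2, 4)` are all hypothetical, and the predictor simply samples random token ids where a real system would run a transformer.

```python
import random
from typing import Callable, List, Sequence

TokenMap = List[List[int]]


def generate_next_scale(
    predict_map: Callable[[List[TokenMap], int], TokenMap],
    side_lengths: Sequence[int],
) -> List[TokenMap]:
    """Generate token maps from coarse to fine.

    Each loop iteration is one autoregressive step that emits an
    entire token map, conditioned on all coarser maps produced so far.
    """
    maps: List[TokenMap] = []
    for side in side_lengths:
        maps.append(predict_map(maps, side))
    return maps


def dummy_predictor(
    prefix: List[TokenMap], side: int, vocab_size: int = 16
) -> TokenMap:
    """Hypothetical stand-in for a transformer: random token ids."""
    rng = random.Random(len(prefix))  # seeded so runs are repeatable
    return [[rng.randrange(vocab_size) for _ in range(side)] for _ in range(side)]


# Illustrative schedule of map side lengths (an assumption, not the
# schedule used in the paper).
side_lengths = (1, 2, 4)
token_maps = generate_next_scale(dummy_predictor, side_lengths)

ar_steps = len(token_maps)
total_tokens = sum(side * side for side in side_lengths)
print(f"{ar_steps} autoregressive steps for {total_tokens} tokens")
```

With this toy schedule, 21 tokens are produced in 3 steps, whereas predicting the same tokens one at a time in raster order would take 21 steps. The sketch only shows the control flow; how the maps are encoded and decoded is outside its scope.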

The empirical validation of VAR models has revealed scaling laws akin to those observed in LLMs, highlighting their potential for further advancement and application in various tasks. Notably, VAR models have showcased zero-shot generalization capabilities in tasks such as image in-painting, out-painting, and editing. This breakthrough not only signifies a leap in visual autoregressive model performance but also marks the first instance of GPT-style autoregressive methods surpassing strong diffusion models in image synthesis.

In conclusion, the contributions outlined in their work encompass a new visual generative framework employing a multi-scale autoregressive paradigm, empirical validation of scaling laws and zero-shot generalization potential, significant advancements in visual autoregressive model performance, and the provision of a comprehensive open-source code suite. These efforts aim to propel the advancement of visual autoregressive learning, bridging the gap between language models and computer vision and unlocking new possibilities in artificial intelligence research and application.


The post This AI Paper from Peking University and ByteDance Introduces VAR: Surpassing Diffusion Models in Speed and Efficiency appeared first on MarkTechPost.
