Memory is central to intelligence because it lets a system recall past experiences and apply them to current situations. However, because of how their attention mechanism works, both conventional Transformer models and Transformer-based Large Language Models (LLMs) are limited in how much context they can remember. Both the memory consumption and the computation time of this attention mechanism grow quadratically with the length of the input sequence.
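
To make the quadratic cost concrete, here is a minimal NumPy sketch of standard scaled dot-product attention (illustrative code, not from the paper). The score matrix holds one entry per query-key pair, so a sequence of n tokens needs n x n scores; the helper names are hypothetical.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the row max for numerical stability before exponentiating.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def scaled_dot_product_attention(q, k, v):
    """Standard attention: q and k have shape (n, d_k), v has shape (n, d_v)."""
    d_k = q.shape[-1]
    scores = q @ k.T / np.sqrt(d_k)   # shape (n, n): grows quadratically with n
    weights = softmax(scores, axis=-1)
    return weights @ v, scores

rng = np.random.default_rng(0)
d = 16
for n in (512, 1024, 2048):
    q, k, v = (rng.standard_normal((n, d)) for _ in range(3))
    out, scores = scaled_dot_product_attention(q, k, v)
    print(n, scores.size)   # number of stored scores: n * n
```

Each doubling of n multiplies the number of stored scores by four, which is the growth that compressive memory is meant to avoid.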

Compressive memory systems offer a viable alternative, aiming to be more efficient and scalable when managing very long sequences. Unlike classical attention mechanisms, whose memory must grow with the length of the input sequence, compressive memory systems store and retrieve information with a constant number of parameters, which keeps storage and computation costs in check.
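
The fixed-size idea can be illustrated with a linear-attention-style associative memory. The sketch below rests on assumptions: it uses the additive linear-attention update that Infini-attention builds on, with ELU + 1 as the feature map, and the class name `CompressiveMemory` is hypothetical. However many segments are written, the state stays a d_k x d_v matrix plus a d_k normalization vector.

```python
import numpy as np

def elu_plus_one(x):
    # sigma(x) = ELU(x) + 1 keeps features positive, so the normalizer stays > 0.
    return np.where(x > 0, x + 1.0, np.exp(x))

class CompressiveMemory:
    """Fixed-size associative memory (hypothetical sketch, not the paper's code)."""

    def __init__(self, d_k, d_v):
        self.M = np.zeros((d_k, d_v))   # associative matrix
        self.z = np.zeros(d_k)          # normalization term

    def write(self, K, V):
        # Accumulate one segment's key-value associations: M += sigma(K)^T V.
        sk = elu_plus_one(K)
        self.M += sk.T @ V
        self.z += sk.sum(axis=0)

    def read(self, Q):
        # Retrieve sigma(Q) M / (sigma(Q) z); epsilon avoids 0/0 before any write.
        sq = elu_plus_one(Q)
        return (sq @ self.M) / (sq @ self.z + 1e-6)[:, None]

rng = np.random.default_rng(0)
mem = CompressiveMemory(d_k=8, d_v=8)
for _ in range(100):                       # 100 segments of 64 tokens each
    K, V = rng.standard_normal((64, 8)), rng.standard_normal((64, 8))
    mem.write(K, V)
print(mem.M.shape, mem.z.shape)            # state size does not depend on segment count
```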

These systems update their parameters to assimilate new information into memory while keeping it retrievable. However, existing LLMs have not yet adopted an efficient compressive memory method that balances simplicity and quality.
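
One way to assimilate new information without redundantly re-storing what is already there is a delta-rule update, which writes only the part of a value the memory cannot already reconstruct. This is offered as an illustrative assumption (the Infini-attention paper describes a delta-rule variant of its memory update), and all function names are hypothetical. Writing the same key-value pair twice adds it again in full under a plain additive rule, but leaves the associative matrix nearly unchanged under the delta rule.

```python
import numpy as np

def sigma(x):
    # ELU(x) + 1: a positive feature map, so normalizers stay > 0.
    return np.where(x > 0, x + 1.0, np.exp(x))

def retrieve(M, z, K):
    # Normalized read: sigma(K) M / (sigma(K) z); epsilon guards the empty memory.
    sk = sigma(K)
    return (sk @ M) / (sk @ z + 1e-6)[:, None]

def additive_write(M, z, K, V):
    # Plain linear-attention update: M += sigma(K)^T V.
    sk = sigma(K)
    return M + sk.T @ V, z + sk.sum(axis=0)

def delta_write(M, z, K, V):
    # Delta rule: store only the residual between V and what M already returns.
    sk = sigma(K)
    residual = V - retrieve(M, z, K)
    return M + sk.T @ residual, z + sk.sum(axis=0)

rng = np.random.default_rng(1)
d = 8
K, V = rng.standard_normal((1, d)), rng.standard_normal((1, d))
M0, z0 = np.zeros((d, d)), np.zeros(d)

# Write the same key-value pair twice with each rule.
Ma1, za1 = additive_write(M0, z0, K, V)
Ma2, _ = additive_write(Ma1, za1, K, V)
Md1, zd1 = delta_write(M0, z0, K, V)
Md2, _ = delta_write(Md1, zd1, K, V)

print(np.linalg.norm(Ma2 - Ma1))  # additive: the pair is added again in full
print(np.linalg.norm(Md2 - Md1))  # delta: close to zero, nothing new to store
```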

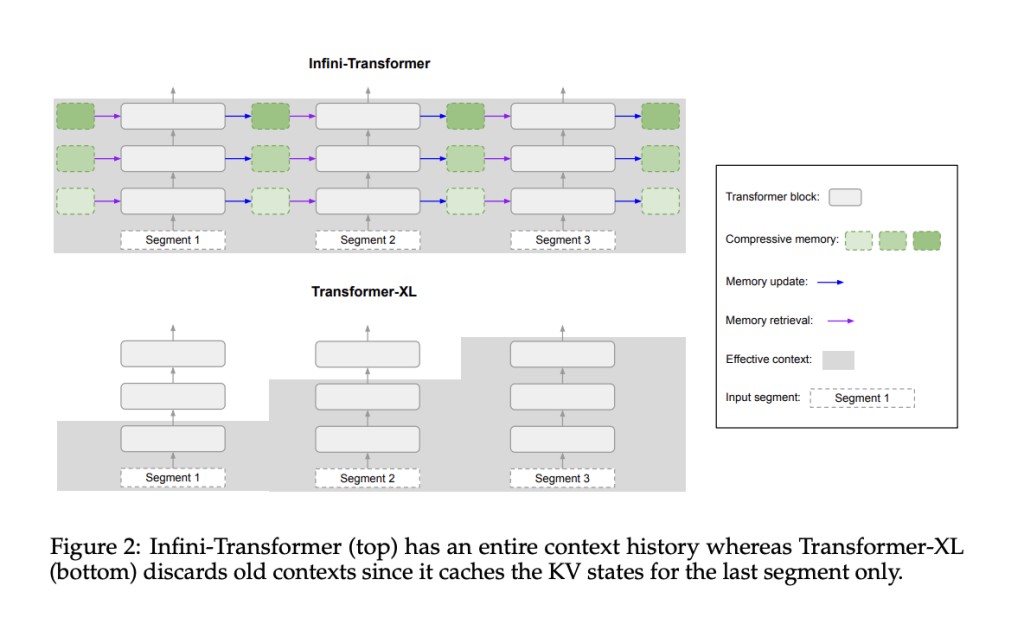

To overcome these limitations, a team of researchers from Google has proposed a method that allows Transformer LLMs to handle arbitrarily long inputs with a bounded memory footprint and computation. The key component of their approach is a new attention mechanism called Infini-attention, which incorporates a compressive memory into the standard attention process and combines masked local attention and long-term linear attention in a single Transformer block.
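
A per-segment step can be sketched for a single head in NumPy. The sketch assumes the formulation described in the Infini-attention paper: causal softmax attention within the segment, a read from the compressive memory with the same queries, a learned scalar gate `beta` that mixes the two, and then a memory write with the segment's keys and values. Names such as `infini_attention_segment` are hypothetical; this is not Google's implementation.

```python
import numpy as np

def sigma(x):
    # ELU(x) + 1 feature map used for the memory path.
    return np.where(x > 0, x + 1.0, np.exp(x))

def causal_softmax_attention(Q, K, V):
    # Masked (causal) dot-product attention restricted to the current segment.
    n, d_k = Q.shape
    scores = Q @ K.T / np.sqrt(d_k)
    scores = np.where(np.tril(np.ones((n, n), dtype=bool)), scores, -np.inf)
    scores -= scores.max(axis=1, keepdims=True)
    w = np.exp(scores)
    return (w / w.sum(axis=1, keepdims=True)) @ V

def infini_attention_segment(Q, K, V, M, z, beta):
    # 1) Long-term read from the compressive memory (state from earlier segments).
    sq = sigma(Q)
    A_mem = (sq @ M) / (sq @ z + 1e-6)[:, None]
    # 2) Local causal attention within the segment.
    A_dot = causal_softmax_attention(Q, K, V)
    # 3) A sigmoid gate mixes long-term and local context.
    g = 1.0 / (1.0 + np.exp(-beta))
    out = g * A_mem + (1.0 - g) * A_dot
    # 4) Write this segment into memory; the memory's size does not change.
    sk = sigma(K)
    return out, M + sk.T @ V, z + sk.sum(axis=0)

rng = np.random.default_rng(0)
d, seg_len = 16, 32
M, z = np.zeros((d, d)), np.zeros(d)
for _ in range(10):  # ten consecutive segments of one long input
    Q, K, V = (rng.standard_normal((seg_len, d)) for _ in range(3))
    out, M, z = infini_attention_segment(Q, K, V, M, z, beta=0.0)
print(out.shape, M.shape)
```

Because the local attention only ever spans one segment and the memory is fixed-size, the cost per segment stays the same no matter how many segments have already been processed.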

The primary advance of Infini-attention is its ability to manage memory efficiently while processing long sequences. By using compressive memory, the model stores and recalls information with a fixed set of parameters, so memory no longer has to grow with the length of the input sequence. This keeps memory consumption under control and computation costs within reasonable bounds.
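
A back-of-the-envelope comparison shows why a fixed-size memory matters. The configuration below (layers, heads, head dimension, 2-byte values) is a hypothetical example, not a model from the paper. It compares a standard key-value cache, which stores keys and values for every past token, with a per-head head_dim x head_dim memory matrix plus a normalization vector, and it ignores the local-attention cache for the current segment, which is bounded by the segment length.

```python
def kv_cache_bytes(seq_len, layers, heads, head_dim, bytes_per_value=2):
    # Keys and values for every token, in every layer and head.
    return 2 * seq_len * layers * heads * head_dim * bytes_per_value

def compressive_memory_bytes(layers, heads, head_dim, bytes_per_value=2):
    # One head_dim x head_dim matrix plus a head_dim vector per head and layer,
    # independent of sequence length.
    return layers * heads * (head_dim * head_dim + head_dim) * bytes_per_value

cfg = dict(layers=16, heads=16, head_dim=128)   # hypothetical model configuration
for n in (32_768, 1_000_000):
    kv_gib = kv_cache_bytes(n, **cfg) / 2**30
    mem_mib = compressive_memory_bytes(**cfg) / 2**20
    print(n, kv_gib, mem_mib)
```

With these numbers the cache takes 4 GiB at 32,768 tokens and about 122 GiB at one million tokens, while the compressive memory stays at about 8 MiB for any length.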

The team reports that the method is effective on several tasks, including book summarization with input sequences of 500,000 tokens, passkey context block retrieval for sequences up to 1 million tokens, and long-context language modeling benchmarks. These tasks were addressed with LLMs ranging from 1 billion to 8 billion parameters.
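
The passkey retrieval task hides a short random number inside a long stretch of filler text and asks the model to repeat it. The generator below is a hypothetical sketch of that setup: the filler sentence, prompt wording, and function name are assumptions rather than the paper's exact format, and length is counted in filler repetitions rather than model tokens.

```python
import random

def make_passkey_prompt(n_filler, seed=0):
    # Hide a 5-digit passkey at a random position among filler sentences.
    rng = random.Random(seed)
    passkey = rng.randint(10_000, 99_999)
    filler = "The grass is green. The sky is blue. The sun is yellow."
    chunks = [filler] * n_filler
    chunks.insert(rng.randint(0, n_filler), f"The pass key is {passkey}. Remember it.")
    prompt = " ".join(chunks) + " What is the pass key?"
    return prompt, passkey

prompt, answer = make_passkey_prompt(n_filler=1000)
print(len(prompt.split()), answer)
```

Scaling `n_filler` up stretches the distance between the passkey and the question, which is what makes the task a test of long-range retrieval.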

One of the approach's main advantages is that it adds only a minimal, bounded set of memory parameters, so the model's memory requirements are limited and predictable. The approach also enables fast streaming inference for LLMs, making it possible to process sequential input efficiently in real-time or near-real-time settings.
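
Streaming inference follows from the fixed size of the state carried between segments. The generator below is a hypothetical sketch: it splits an incoming stream of token vectors into fixed-length segments, reads from a constant-size linear-attention memory before writing each segment into it, and yields one output per segment. For brevity it assumes the raw vectors serve as queries, keys, and values at once, which a real model would not do.

```python
import numpy as np

def sigma(x):
    # ELU(x) + 1 feature map keeps the normalizer positive.
    return np.where(x > 0, x + 1.0, np.exp(x))

def stream_segments(token_vectors, seg_len):
    # Group an arbitrary-length iterator of vectors into fixed-size segments.
    buf = []
    for vec in token_vectors:
        buf.append(vec)
        if len(buf) == seg_len:
            yield np.stack(buf)
            buf = []
    if buf:
        yield np.stack(buf)

def streaming_readout(token_vectors, d, seg_len):
    # Constant-size state: a d x d memory matrix and a d-vector normalizer.
    M, z = np.zeros((d, d)), np.zeros(d)
    for seg in stream_segments(token_vectors, seg_len):
        s = sigma(seg)
        # Read context from all earlier segments before writing this one.
        yield (s @ M) / (s @ z + 1e-6)[:, None]
        M += s.T @ seg
        z += s.sum(axis=0)

rng = np.random.default_rng(0)
tokens = (rng.standard_normal(8) for _ in range(10_000))  # lazily generated stream
n_out = sum(out.shape[0] for out in streaming_readout(tokens, d=8, seg_len=256))
print(n_out)
```

Only the current segment and the fixed-size state are held at any time, so the stream could run indefinitely without the state growing.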

The team has summarized their primary contributions as follows:

The team has presented Infini-attention, a novel attention mechanism that combines local causal attention with long-term compressive memory. It is practical and effective because it models contextual dependencies over both short and long distances.

Infini-attention requires only minor changes to the standard scaled dot-product attention mechanism. This makes it simple to incorporate into existing Transformer architectures and enables plug-and-play continual pre-training and long-context adaptation.
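
One way to see how small the change is: the memory path is blended into the usual attention output through a learned gate. Assuming the sigmoid gating described in the Infini-attention paper, a per-head scalar beta sets the mix A = sigmoid(beta) * A_mem + (1 - sigmoid(beta)) * A_dot, so as beta becomes very negative the output approaches ordinary dot-product attention. The arrays below are random placeholders, and `gated_output` is a hypothetical name.

```python
import numpy as np

def gated_output(A_mem, A_dot, beta):
    # Sigmoid gate: one learned scalar per head mixes memory and local attention.
    g = 1.0 / (1.0 + np.exp(-beta))
    return g * A_mem + (1.0 - g) * A_dot

rng = np.random.default_rng(0)
A_mem, A_dot = rng.standard_normal((4, 8)), rng.standard_normal((4, 8))
for beta in (-10.0, 0.0, 10.0):
    out = gated_output(A_mem, A_dot, beta)
    # Distance from pure local attention and from pure memory retrieval.
    print(beta, np.abs(out - A_dot).max(), np.abs(out - A_mem).max())
```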

The method lets Transformer-based LLMs handle infinitely long contexts while keeping memory and computational resources bounded. By processing very long inputs in a streaming fashion, it uses resources efficiently, which allows LLMs to perform well in real-world applications involving large-scale data.

In conclusion, this study is a major step forward for LLMs, enabling them to handle very long inputs efficiently in terms of both computation and memory.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post Google AI Introduces an Efficient Machine Learning Method to Scale Transformer-based Large Language Models (LLMs) to Infinitely Long Inputs appeared first on MarkTechPost.
