Digital agents, software entities designed to facilitate and automate interactions between humans and digital platforms, are gaining prominence as tools for reducing the effort required in routine digital tasks. Such agents can autonomously navigate web interfaces or manage device controls, potentially transforming how users interact with technology. The field is ripe for advancements that boost the reliability and efficiency of these agents across varied tasks and environments.

Despite their potential, digital agents frequently misinterpret user commands or fail to adapt to new or complex environments, leading to inefficiencies and errors. The challenge is developing agents that can consistently understand and execute tasks accurately, even when faced with unfamiliar instructions or interfaces.

Current methods to assess digital agent performance typically involve static benchmarks. These benchmarks evaluate whether an agent’s actions align with predefined expectations based on human-generated scenarios. However, these traditional methods do not always capture the dynamic nature of real-world interactions, where user instructions can vary significantly. Thus, there is a need for more flexible and adaptive evaluation approaches.
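To illustrate why a static benchmark can be brittle, here is a minimal sketch in Python. It assumes, purely for illustration, that the benchmark passes an agent only when its action sequence exactly matches a human-written reference; the function name `static_match` and the example actions are hypothetical and do not come from any specific benchmark.

```python
from typing import List


def static_match(agent_actions: List[str], reference_actions: List[str]) -> bool:
    """Pass only if the agent reproduces the reference sequence exactly.

    This exact-match rule is an assumption made for illustration; real
    benchmarks may use looser or different criteria.
    """
    return agent_actions == reference_actions


# Hypothetical human-written reference for "turn off Wi-Fi".
reference = ["open settings", "tap Wi-Fi", "toggle Wi-Fi off"]

# The agent follows the reference path step by step.
same_path = ["open settings", "tap Wi-Fi", "toggle Wi-Fi off"]

# The agent reaches the same goal through a different, equally valid route.
other_path = ["swipe down quick settings", "toggle Wi-Fi off"]

same_path_passes = static_match(same_path, reference)
other_path_passes = static_match(other_path, reference)
```

Under this assumed rule, the second trajectory is marked as a failure even though it accomplishes the task, which is the kind of mismatch a more adaptive evaluator is meant to avoid.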

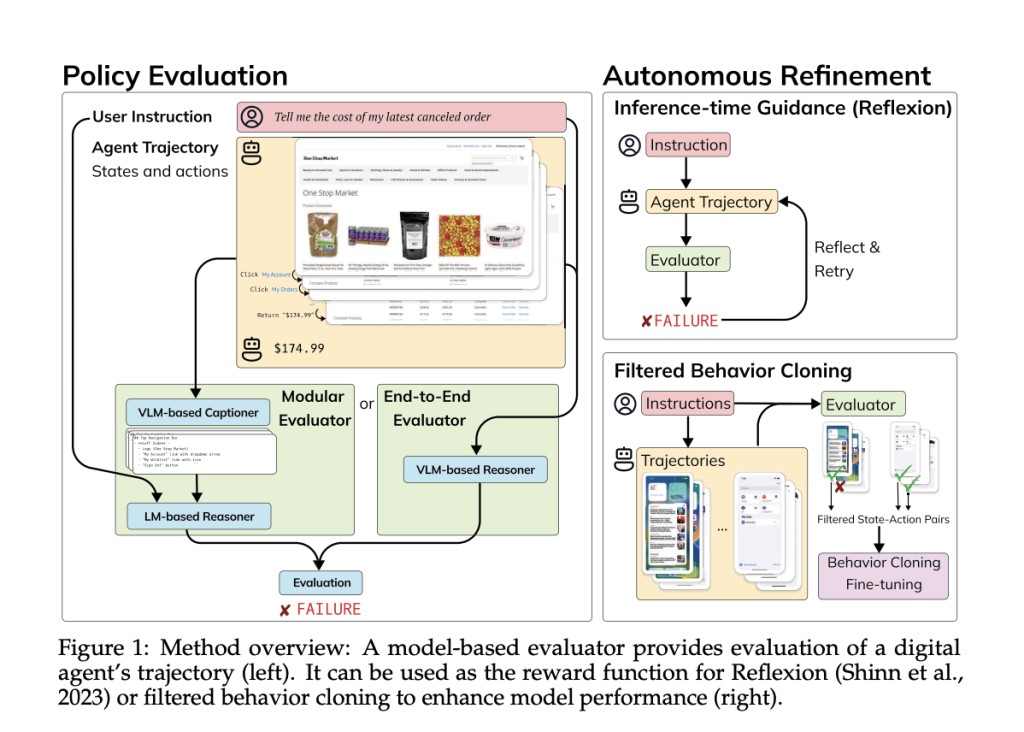

Researchers from UC Berkeley and the University of Michigan proposed a new approach using domain-general evaluation models. These models autonomously assess the performance of digital agents, and their judgments are then used to refine those agents. Unlike traditional methods, they do not require human oversight. Instead, they combine vision and language models to evaluate agents’ actions across a broad spectrum of tasks, providing a more nuanced picture of agent capabilities.

The approach comes in two variants: a fully integrated model and a modular, two-step evaluation process. The integrated model assesses agent actions directly from user instructions and screenshots, leveraging powerful pre-trained vision-language models. The modular approach first converts visual input into text, then uses a language model to evaluate the textual descriptions against the user instructions. The modular variant promotes transparency, because the intermediate descriptions can be inspected, and it can be executed at a lower computational cost, making it suitable for real-time applications.
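The two evaluation styles can be sketched roughly as follows. This is a minimal illustration in Python, not the researchers' implementation: the `Step` type, the callable interfaces (`Captioner`, `TextJudge`, `VisionJudge`), the prompt wording, and the "success"/"failure" reply convention are all assumptions introduced here. Any real vision-language model or language model would need to be wrapped to fit these shapes.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Step:
    screenshot: bytes  # raw image bytes of the screen at this step
    action: str        # text form of the action the agent took


# Hypothetical model interfaces: any callable with these shapes will do.
Captioner = Callable[[bytes], str]               # screenshot -> description
TextJudge = Callable[[str], str]                 # prompt -> free-text reply
VisionJudge = Callable[[str, List[bytes]], str]  # prompt + images -> reply


def build_prompt(instruction: str, trajectory: List[Step],
                 descriptions: List[str]) -> str:
    """Assemble a text prompt asking whether the task was completed."""
    lines = [f"User instruction: {instruction}", "Agent trajectory:"]
    for i, step in enumerate(trajectory):
        lines.append(f"  step {i + 1}: action = {step.action}")
        if descriptions:
            # Only the modular path has textual screen descriptions.
            lines.append(f"    screen: {descriptions[i]}")
    lines.append("Answer 'success' or 'failure'.")
    return "\n".join(lines)


def parse_verdict(reply: str) -> bool:
    """Treat a reply that starts with 'success' as a pass (assumed format)."""
    return reply.strip().lower().startswith("success")


def evaluate_integrated(instruction: str, trajectory: List[Step],
                        vlm: VisionJudge) -> bool:
    """Integrated: one vision-language model sees instruction and screenshots."""
    prompt = build_prompt(instruction, trajectory, [])
    return parse_verdict(vlm(prompt, [s.screenshot for s in trajectory]))


def evaluate_modular(instruction: str, trajectory: List[Step],
                     captioner: Captioner, llm: TextJudge) -> bool:
    """Modular: caption each screenshot first, then judge the text only."""
    descriptions = [captioner(s.screenshot) for s in trajectory]
    prompt = build_prompt(instruction, trajectory, descriptions)
    return parse_verdict(llm(prompt))
```

In the modular path, the intermediate `descriptions` are plain text, so they can be logged and inspected, which is one way to read the transparency benefit described above.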

The researchers tested these evaluation models on existing agents and benchmarks. For instance, the models improved the success rate of existing digital agents by up to 29% on standard benchmarks like WebArena. In domain transfer tasks, where agents are applied to new environments without prior training, the models facilitated a 75% increase in accuracy, underscoring their adaptability and robustness.

In conclusion, the research addresses the persistent challenge of digital agents failing in complex or unfamiliar environments. By deploying autonomous, domain-general evaluation models in both integrated and modular forms, the study refines agent behavior without human oversight, leading to up to a 29% improvement on standard benchmarks and a 75% boost in domain transfer tasks. These results demonstrate the potential of adaptive evaluation to improve digital agent reliability and efficiency, and mark a step towards broader application of such agents across digital platforms.

Check out the Paper and GitHub. All credit for this research goes to the researchers of this project.

The post Autonomous Domain-General Evaluation Models Enhance Digital Agent Performance: A Breakthrough in Adaptive AI Technologies appeared first on MarkTechPost.
