Artificial intelligence is changing scientific research and engineering design by offering an alternative to slow, costly physical experiments. Neural operators in particular make it possible to tackle complex problems where traditional numerical simulations fall short. These problems typically involve dynamics that are intractable with conventional methods because they demand extensive computational resources and detailed input data.

The primary challenge in current scientific and engineering simulations is the inefficiency of traditional numerical methods. These methods rely heavily on computational grids to solve partial differential equations, which slows down the process considerably and restricts the integration of high-resolution data. Furthermore, conventional learning-based approaches often fail to generalize beyond the specific conditions of the data used during their training phase, which limits their applicability in real-world scenarios.

Existing research includes numerical methods such as the finite element method for solving partial differential equations (PDEs) in fluid dynamics and climate modeling. Machine learning techniques such as sparse representation and recurrent neural networks have been used for dynamical systems. Convolutional neural networks and transformers perform well in image and text processing but struggle with continuous scientific data. Fourier Neural Operators (FNO) and Graph Neural Operators (GNO) advance modeling by handling global dependencies and non-local interactions effectively, while Physics-Informed Neural Operators (PINO) integrate physics-based constraints to enhance predictive accuracy and resolution.

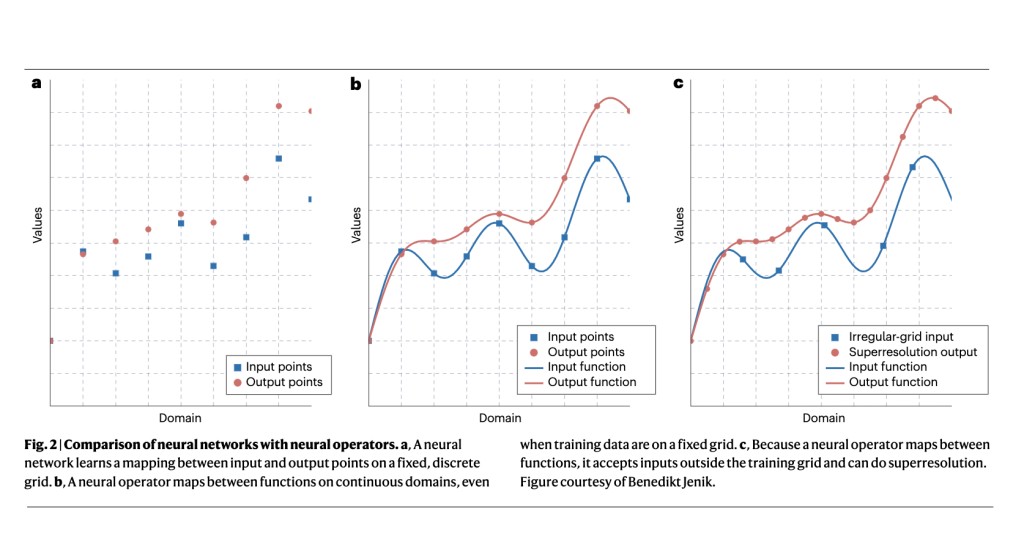

Researchers from NVIDIA and Caltech have introduced an approach based on neural operators that models complex systems more efficiently. It stands out because it learns mappings between functions defined on continuous domains, which allows the model to predict outputs beyond the discretization of the training data. By integrating domain-specific constraints and remaining differentiable end to end, neural operators allow design parameters in inverse problems to be optimized directly, and the approach adapts to a range of applications.
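To illustrate how a model can predict beyond the discretization it was trained on, the sketch below applies one Fourier-space layer, the core building block of FNO, to the same function sampled on two grids. It is a minimal NumPy illustration, not the researchers' implementation: the helper names `spectral_conv_1d` and `sample_input`, the random `weights` standing in for learned parameters, and the test function are all assumptions made for this example. A full FNO layer would also add a pointwise linear path and a nonlinearity, which are omitted here.

```python
import numpy as np

def spectral_conv_1d(u, weights):
    """Multiply the lowest Fourier modes of u by complex weights (hypothetical helper)."""
    n = u.shape[-1]
    modes = weights.shape[0]
    # norm="forward" puts the 1/n factor on the forward transform, so the
    # coefficients of a band-limited function do not depend on the grid size.
    u_hat = np.fft.rfft(u, norm="forward")
    out_hat = np.zeros_like(u_hat)
    out_hat[:modes] = u_hat[:modes] * weights  # higher modes are truncated
    return np.fft.irfft(out_hat, n=n, norm="forward")

def sample_input(n):
    """Sample an assumed band-limited test function on n uniform points of [0, 1)."""
    x = np.arange(n) / n
    return np.sin(2 * np.pi * x) + 0.5 * np.cos(4 * np.pi * x)

rng = np.random.default_rng(0)
modes = 4
# Random complex numbers stand in for weights that training would learn.
weights = rng.normal(size=modes) + 1j * rng.normal(size=modes)

out_coarse = spectral_conv_1d(sample_input(64), weights)
out_fine = spectral_conv_1d(sample_input(256), weights)

# Every 4th fine-grid point coincides with a coarse-grid point.
max_gap = np.max(np.abs(out_fine[::4] - out_coarse))
print(f"max difference on shared points: {max_gap:.2e}")
```

Because the weights act on Fourier modes rather than on grid points, the same parameters can be evaluated at either resolution, and the two outputs agree wherever the grids overlap. This is the kind of property that makes evaluation at unseen resolutions possible.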

The methodology centers on neural operators, specifically FNO and PINO. These operators act on continuously defined functions, which enables predictions across different resolutions. FNO performs its computation in the Fourier domain, which makes global integration efficient, while PINO incorporates physics-based loss functions derived from partial differential equations to enforce compliance with physical laws. Key datasets include the ERA5 reanalysis dataset, used for training and validating weather forecasting models. This approach allows the model to predict with high accuracy and to generalize, even when extrapolating beyond the scope of the training data.
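A physics-based loss of the kind PINO relies on can be sketched by measuring how badly a predicted function violates a PDE. The example below is an illustrative assumption, not the loss used in the research: it picks the 1D periodic Poisson equation, -u'' = f, as a stand-in PDE, computes the second derivative spectrally, and combines the PDE residual with an optional data term. The names `second_derivative_periodic`, `physics_informed_loss`, and `pde_weight` are hypothetical. In actual training, this quantity would be minimized over network parameters with automatic differentiation; here it is only evaluated.

```python
import numpy as np

def second_derivative_periodic(u, length=1.0):
    """Spectral second derivative of a periodic signal on a uniform grid."""
    n = u.shape[-1]
    # Angular wavenumbers 2*pi*k/length for k = 0 .. n//2.
    k = 2.0 * np.pi * np.fft.rfftfreq(n, d=length / n)
    return np.fft.irfft(-(k ** 2) * np.fft.rfft(u), n=n)

def physics_informed_loss(u_pred, f, u_data=None, pde_weight=1.0):
    """Mean squared PDE residual of -u'' = f, plus an optional data-fit term."""
    residual = -second_derivative_periodic(u_pred) - f
    loss = pde_weight * np.mean(residual ** 2)
    if u_data is not None:
        loss += np.mean((u_pred - u_data) ** 2)
    return loss

n = 128
x = np.arange(n) / n
u_exact = np.sin(2 * np.pi * x)
f = (2 * np.pi) ** 2 * u_exact  # forcing for which u_exact solves -u'' = f

loss_exact = physics_informed_loss(u_exact, f, u_data=u_exact)
loss_off = physics_informed_loss(1.1 * u_exact, f, u_data=u_exact)
print(f"exact solution: {loss_exact:.3e}, 10% amplitude error: {loss_off:.3e}")
```

The residual term penalizes predictions that break the governing equation even where no reference data exists, which is why a loss of this form can constrain a model at resolutions it never observed.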

The neural operators introduced in the research have achieved significant quantitative improvements in scientific simulations. For example, FNO delivered a 45,000x speedup in weather forecasting. In computational fluid dynamics, the approach led to a 26,000x increase in simulation speed. PINO closely matched the ground-truth spectrum, achieving test errors as low as 0.01 at resolutions unobserved during training. Additionally, this operator enabled zero-shot super-resolution, predicting higher-frequency details beyond the limit of the training data. These results underscore the capacity of neural operators to substantially improve simulation efficiency and accuracy across diverse scientific domains.

In conclusion, the research on neural operators marks a significant advance in scientific simulation, offering substantial speedups and enhanced accuracy over traditional methods. By using FNO and PINO, the study handles functions on continuous domains and achieves large computational gains in weather forecasting and fluid dynamics. These innovations reduce the time required for complex simulations and improve their predictive precision, broadening the scope for scientific exploration and practical applications in engineering and environmental fields.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post Accelerating Engineering and Scientific Discoveries: NVIDIA and Caltech’s Neural Operators Transform Simulations appeared first on MarkTechPost.
