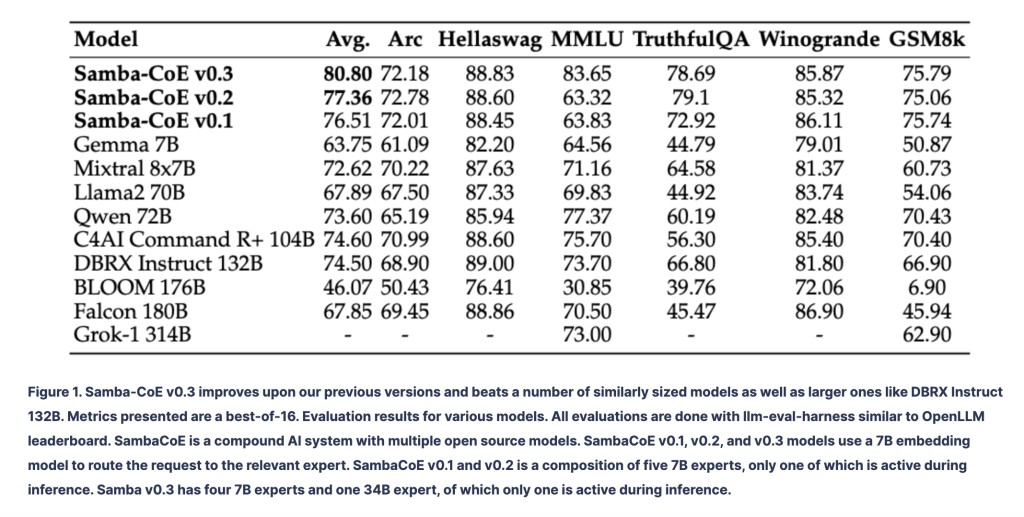

The field of artificial intelligence is advancing rapidly, and SambaNova’s recent introduction of Samba-CoE v0.3 marks a notable step in the efficiency and effectiveness of machine learning models. This latest version of the Composition of Experts (CoE) system has surpassed competitors such as DBRX Instruct 132B and Grok-1 314B on the OpenLLM Leaderboard, demonstrating its strength in handling complex queries.

Samba-CoE v0.3 introduces an improved routing mechanism that directs each user query to the most suitable expert within its framework. The model builds on its predecessors, Samba-CoE v0.1 and v0.2, which used an embedding router to distribute input queries across five experts.

One of the most notable features of Samba-CoE v0.3 is its improved router quality, achieved through the incorporation of uncertainty quantification. When the router’s confidence is low, the system falls back on a strong base LLM, so that it maintains high accuracy and reliability even in uncertain scenarios. This feature is especially important for a system that needs to handle a wide range of tasks without compromising the quality of its output.

Samba-CoE v0.3 is powered by the text embedding model intfloat/e5-mistral-7b-instruct, which has demonstrated impressive performance on the MTEB benchmark. The development team has further improved the router’s capabilities by incorporating k-NN classifiers enhanced with an entropy-based uncertainty measurement technique. This approach ensures that the router can not only identify the most appropriate expert for a given query but also handle out-of-distribution prompts and noise in the training data with great accuracy.
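To make the routing idea concrete, here is a minimal sketch of a k-NN router with an entropy-based fallback. It is an illustration under stated assumptions, not SambaNova's implementation: the function name `knn_route`, the expert labels `math`, `chat`, and `base`, the threshold `max_entropy`, and the 2-D toy vectors are all hypothetical. In a real system the vectors would be embeddings of labelled prompts produced by a model such as intfloat/e5-mistral-7b-instruct.

```python
import math
from collections import Counter

import numpy as np


def knn_route(query_vec, train_vecs, train_labels, k=3, max_entropy=0.5,
              fallback="base"):
    """Return (chosen_expert, entropy) for one query embedding.

    Hypothetical helper: names and default values are assumptions.
    """
    # Cosine similarity between the query and every labelled embedding.
    q = query_vec / np.linalg.norm(query_vec)
    t = train_vecs / np.linalg.norm(train_vecs, axis=1, keepdims=True)
    sims = t @ q

    # Expert labels of the k most similar training prompts.
    nearest = np.argsort(-sims)[:k]
    votes = Counter(train_labels[i] for i in nearest)

    # Shannon entropy (in nats) of the neighbour-vote distribution:
    # 0 when all neighbours agree, larger when they disagree.
    probs = [count / k for count in votes.values()]
    entropy = sum(-p * math.log(p) for p in probs)

    # Low entropy: trust the router. High entropy: use the base model.
    if entropy <= max_entropy:
        return votes.most_common(1)[0][0], entropy
    return fallback, entropy


# Toy stand-ins for prompt embeddings (assumed data, two clusters).
TRAIN_VECS = np.array([
    [1.00, 0.00], [0.90, 0.10], [0.95, 0.05],   # prompts labelled "math"
    [0.00, 1.00], [0.10, 0.90], [0.05, 0.95],   # prompts labelled "chat"
])
TRAIN_LABELS = ["math", "math", "math", "chat", "chat", "chat"]

print(knn_route(np.array([1.0, 0.02]), TRAIN_VECS, TRAIN_LABELS))
print(knn_route(np.array([1.0, 1.0]), TRAIN_VECS, TRAIN_LABELS))
```

The first query sits inside the `math` cluster, so its neighbours agree and the router picks that expert. The second lies between the two clusters, its neighbours split their votes, and the entropy exceeds the threshold, so the query is handed to the fallback model. The threshold would need tuning on held-out prompts; the value used here is arbitrary.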

Despite its strengths, Samba-CoE v0.3 is not without limitations. The model primarily supports single-turn conversations, which might result in suboptimal interactions during multi-turn exchanges. Additionally, the limited number of experts and the absence of a dedicated coding expert may restrict the model’s applicability to certain specialized tasks. Furthermore, the system currently supports only one language, which could be a barrier for multilingual applications.

Even so, Samba-CoE v0.3 stands as a pioneering example of how multiple smaller expert systems can be integrated into a seamless and efficient larger model. This approach not only enhances processing efficiency but also reduces the computational overhead associated with operating a single, large-scale AI model.

Key Takeaways:

Advanced Query Routing: Samba-CoE v0.3 introduces an enhanced router with uncertainty quantification, ensuring high accuracy and reliability across diverse queries.

Efficient Model Composition: The system exemplifies the effective integration of multiple expert systems into a cohesive unit, providing a unified solution that mimics a single, more powerful model.

Performance Excellence: The model has surpassed major competitors on the OpenLLM Leaderboard, demonstrating its capability in handling complex machine learning tasks.

Scope for Improvement: Despite its advancements, the model exhibits areas for improvement, such as support for multi-turn conversations and expansion into multilingual capabilities.

The post Samba-CoE v0.3: Redefining AI Efficiency with Advanced Routing Capabilities appeared first on MarkTechPost.
