Medical imaging is a complex field where interpreting results can be challenging.

AI models can assist doctors by analyzing images for signs of disease or other abnormalities.

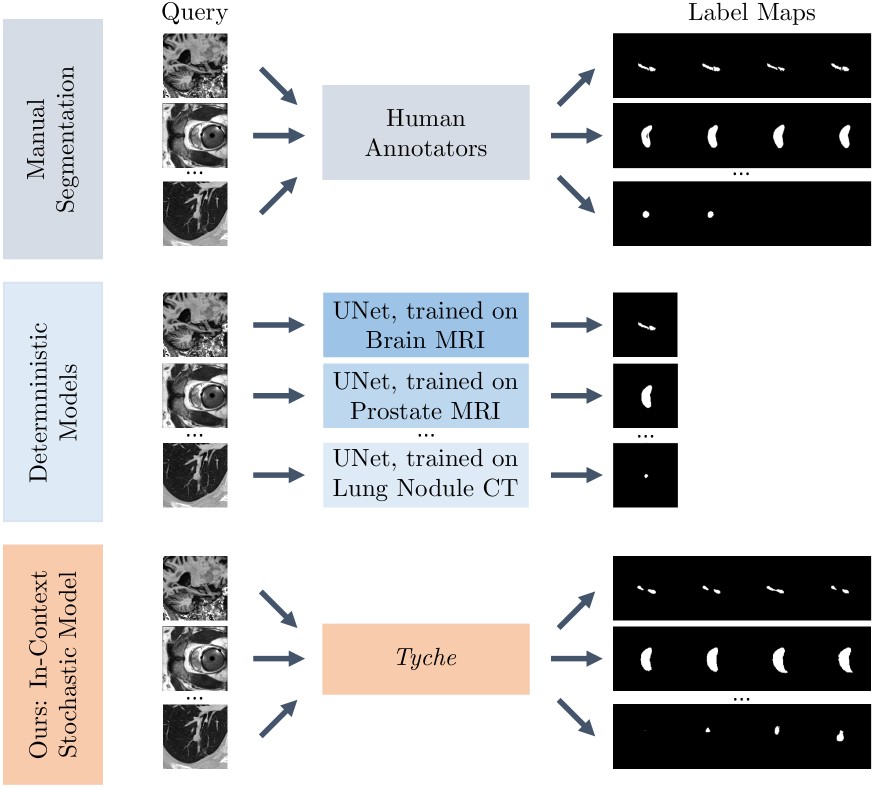

But there’s a catch: these AI models usually produce a single answer, even though medical images often allow multiple plausible interpretations.

If you ask five experts to outline an area of interest, like a small lump in a lung scan, you might end up with five different outlines, because each expert may judge differently where the lump starts and ends.

To tackle this problem, researchers from MIT, the Broad Institute of MIT and Harvard, and Massachusetts General Hospital have created Tyche, an AI system that embraces the ambiguity in medical image segmentation.

Segmentation involves labeling the specific pixels in a medical image that represent important structures, like organs or cells.

Marianne Rakic, an MIT computer science PhD candidate and lead author of the study, explains, “Having options can help in decision-making. Even just seeing that there is uncertainty in a medical image can influence someone’s decisions, so it is important to take this uncertainty into account.”

Named after the Greek goddess of chance, Tyche generates multiple possible segmentations for a single medical image to capture ambiguity.

Each segmentation highlights slightly different regions, allowing users to choose the most suitable one for their needs.
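Multiple candidates also make uncertainty visible: pixels that every candidate agrees on are unambiguous, while pixels where candidates disagree mark the contested boundary. Below is a minimal sketch of that idea, assuming binary masks stored as nested lists. The post-processing step and the names `agreement_map` and `ambiguous_pixels` are illustrative assumptions, not part of Tyche as described here.

```python
from typing import List, Tuple

Mask = List[List[int]]  # 1 = pixel belongs to the structure, 0 = background


def agreement_map(candidates: List[Mask]) -> List[List[float]]:
    """Fraction of candidate masks that mark each pixel as foreground."""
    if not candidates:
        raise ValueError("need at least one candidate")
    k = len(candidates)
    rows, cols = len(candidates[0]), len(candidates[0][0])
    return [
        [sum(mask[r][c] for mask in candidates) / k for c in range(cols)]
        for r in range(rows)
    ]


def ambiguous_pixels(candidates: List[Mask]) -> List[Tuple[int, int]]:
    """(row, col) positions where the candidates disagree with one another."""
    amap = agreement_map(candidates)
    return [
        (r, c)
        for r, row in enumerate(amap)
        for c, fraction in enumerate(row)
        if 0.0 < fraction < 1.0  # neither unanimous "yes" nor unanimous "no"
    ]


# Three hypothetical candidate outlines of the same small lesion on a 3x4 image.
candidates = [
    [[0, 1, 1, 0],
     [0, 1, 1, 0],
     [0, 0, 0, 0]],
    [[0, 1, 1, 1],
     [0, 1, 1, 0],
     [0, 0, 0, 0]],
    [[0, 1, 1, 0],
     [1, 1, 1, 0],
     [0, 0, 1, 0]],
]

print(ambiguous_pixels(candidates))  # → [(0, 3), (1, 0), (2, 2)]
```

The core of the lesion is marked by all three candidates, so only the edge pixels are flagged as ambiguous.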

Rakic tells MIT News, “Outputting multiple candidates and ensuring they are different from one another really gives you an edge.”

So, how does Tyche work? Let’s break it down into four simple steps:

1. Learning by example: Users give Tyche a small set of example images, called a “context set,” that show the segmentation task they want performed. These examples can include images segmented by different human experts, which helps the model understand both the task and how much ambiguity it involves.

2. Neural network tweaks: The researchers took a standard neural network architecture and modified it so that Tyche can handle uncertainty. They adjusted the network’s layers so that the candidate segmentations generated at each step can “communicate” with one another and with the context-set examples.

3. Multiple possibilities: Given a single medical image and the context set, Tyche outputs multiple candidate segmentations rather than just one.

4. Rewarding quality: The researchers adjusted the training process so that Tyche is rewarded according to the quality of its best prediction. If the user asks for five predictions, they still see all five segmentations Tyche produced, even though one may be better than the others.
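The “Rewarding quality” idea, scoring a model only on its best candidate, is commonly implemented as a minimum-over-candidates (winner-takes-all) loss. Below is a minimal sketch, assuming a soft Dice loss on flattened masks. The names `dice_loss` and `best_candidate_loss` are illustrative, and Tyche’s actual training objective may differ in its details.

```python
from typing import List, Sequence, Tuple

# A soft mask, flattened to one foreground probability per pixel in [0, 1].
SoftMask = Sequence[float]


def dice_loss(pred: SoftMask, target: SoftMask, eps: float = 1e-6) -> float:
    """Return 1 - soft Dice overlap; 0.0 means a perfect match."""
    if len(pred) != len(target):
        raise ValueError("masks must have the same number of pixels")
    intersection = sum(p * t for p, t in zip(pred, target))
    total = sum(pred) + sum(target)
    return 1.0 - (2.0 * intersection + eps) / (total + eps)


def best_candidate_loss(candidates: List[SoftMask], target: SoftMask) -> Tuple[float, int]:
    """Score every candidate against the target and keep only the lowest loss."""
    if not candidates:
        raise ValueError("need at least one candidate")
    losses = [dice_loss(c, target) for c in candidates]
    best_index = min(range(len(losses)), key=lambda i: losses[i])
    return losses[best_index], best_index


# One annotator's outline on a flattened 2x2 image, plus three hypothetical candidates.
target = [1.0, 1.0, 0.0, 0.0]
candidates = [
    [0.0, 0.0, 1.0, 1.0],  # misses the structure entirely
    [0.9, 0.8, 0.1, 0.0],  # close to the annotator's outline
    [1.0, 1.0, 1.0, 0.0],  # over-segments by one pixel
]

loss, index = best_candidate_loss(candidates, target)
print(index)  # → 1
```

Because only the minimum counts, a framework with automatic differentiation would send this term’s gradient to the winning candidate alone. The other candidates are therefore not pulled toward the same outline, which tends to keep the set diverse.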

At the top, human annotators produce differing segmentations of the same medical images, reflecting multiple valid interpretations. Traditional automated techniques (middle) are generally designed for specific tasks and generate a single segmentation per image. In contrast, Tyche (bottom) captures the range of annotator disagreement across various imaging modalities and anatomical structures, without retraining or fine-tuning. Source: arXiv.

One of Tyche’s biggest strengths is its adaptability. It can take on new segmentation tasks without needing to be retrained from scratch.

Normally, AI models for medical image segmentation rely on neural networks that must be trained extensively on large datasets, a process that requires machine learning expertise.

In contrast, Tyche can be used “out of the box” for various tasks, from spotting lung lesions in X-rays to identifying brain abnormalities in MRIs.
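The key interface idea is that the task is specified by the context set at inference time, not by retraining. The toy below is not Tyche’s method. Tyche is a neural network, whereas this sketch deliberately swaps in a nearest-class-mean rule on raw pixel intensities, purely to show how changing the examples changes the task while nothing is trained. The names `class_means`, `segment_in_context`, and the tiny images are hypothetical.

```python
from typing import List, Tuple

Image = List[List[float]]  # grayscale intensities
Mask = List[List[int]]     # 1 = structure of interest, 0 = background
ContextSet = List[Tuple[Image, Mask]]


def class_means(context_set: ContextSet) -> Tuple[float, float]:
    """Mean intensity of foreground and background pixels over all examples."""
    foreground: List[float] = []
    background: List[float] = []
    for image, mask in context_set:
        for image_row, mask_row in zip(image, mask):
            for value, label in zip(image_row, mask_row):
                (foreground if label else background).append(value)
    if not foreground or not background:
        raise ValueError("context set needs both foreground and background pixels")
    return sum(foreground) / len(foreground), sum(background) / len(background)


def segment_in_context(target: Image, context_set: ContextSet) -> Mask:
    """Assign each target pixel to the class whose mean intensity is closer.

    Nothing is trained or updated: changing the examples changes the task.
    """
    fg_mean, bg_mean = class_means(context_set)
    return [
        [1 if abs(value - fg_mean) < abs(value - bg_mean) else 0 for value in row]
        for row in target
    ]


example_image = [[0.9, 0.1],
                 [0.8, 0.2]]
target_image = [[0.7, 0.3],
                [0.2, 0.95]]

# Task A: the examples mark the bright region as the structure of interest.
bright_task = [(example_image, [[1, 0], [1, 0]])]
# Task B: same image, but the examples mark the dark region instead.
dark_task = [(example_image, [[0, 1], [0, 1]])]

print(segment_in_context(target_image, bright_task))  # → [[1, 0], [0, 1]]
print(segment_in_context(target_image, dark_task))    # → [[0, 1], [1, 0]]
```

Swapping the context set flips which pixels are segmented, with the same function and no parameter updates.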

Numerous studies have been conducted in AI medical imaging, including major breakthroughs in breast cancer screening and AI diagnostics that match or even beat doctors at interpreting images.

Looking to the future, the research team plans to explore using more flexible context sets, possibly including text or multiple types of images.

They also want to develop ways to improve Tyche’s worst predictions and enable the system to recommend the best segmentation candidates.

The post Researchers build “Tyche†to embrace uncertainty in medical imaging appeared first on DailyAI.
