Large-scale text-to-image diffusion models such as GLIDE, DALL-E 2, Imagen, Stable Diffusion, and eDiff-I have pushed image generation forward considerably, letting users create realistic pictures from a wide variety of text prompts. Models such as Kandinsky and Stable unCLIP go a step further: they take an image as input and generate variations that retain the visual components of the reference. Image-conditioned generation of this kind emerged because text descriptions, while effective, often fail to convey fine visual details.

Image personalization, or subject-driven generation, is the next logical step in this area. Early approaches either learned new text tokens to represent target concepts or converted input photos into text. Although accurate, these approaches require instance-specific tuning and per-concept model storage, and those resource costs severely limit their practicality. To overcome these constraints, tuning-free methods have become more popular. They are effective at modifying textures, but they often introduce detail defects and still need additional tuning to achieve ideal results on target objects.

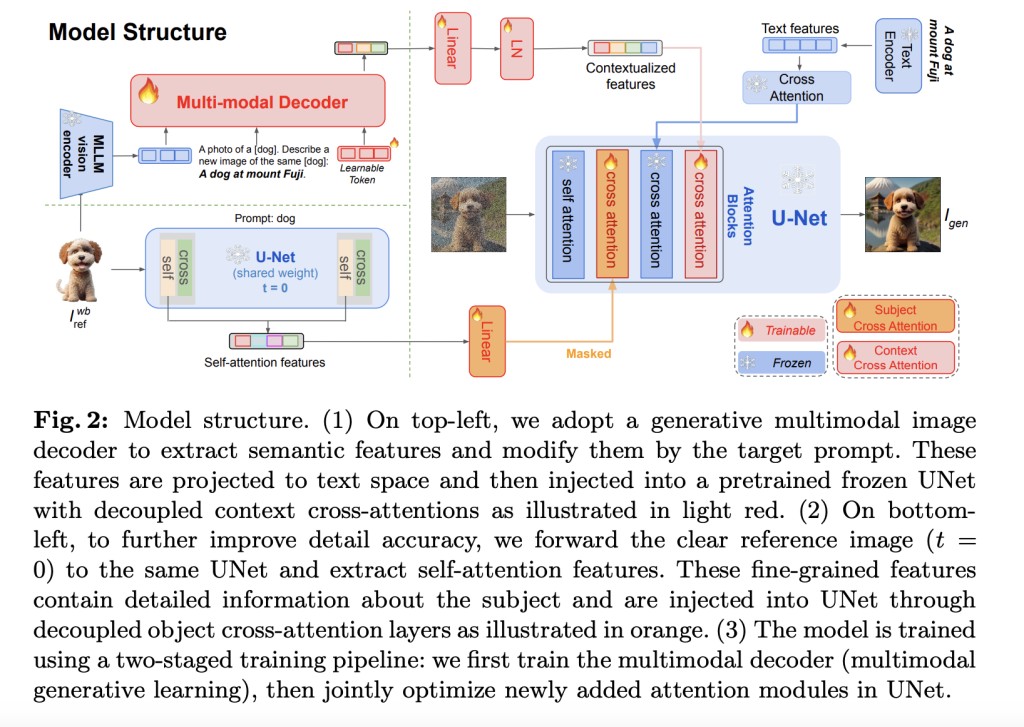

A recent study by ByteDance and Rutgers University presents MoMA, a tuning-free, open-vocabulary model for image personalization. MoMA addresses these issues by faithfully following text prompts, achieving high detail fidelity, and preserving the identity of the target object. It is designed for fast image customization with text-to-image diffusion models.

This approach consists of three parts:

First, the researchers use a generative multimodal decoder to extract features from the reference image. They then modify these features according to the target prompt to obtain the contextualized image feature.

In parallel, they use the original UNet's self-attention layers to extract the object image feature. To do this, they replace the background of the reference image with white, leaving only the object's pixels.
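
The background-whitening step can be illustrated with a minimal sketch. It assumes the image is a nested list of RGB tuples and the object mask is a same-shaped grid of booleans (True on the object); the function name `whiten_background` is hypothetical, and a real pipeline would work on tensors rather than Python lists.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each 0-255
WHITE: Pixel = (255, 255, 255)


def whiten_background(image: List[List[Pixel]], mask: List[List[bool]]) -> List[List[Pixel]]:
    """Hypothetical helper: return a copy of `image` where pixels outside the object mask are white.

    `mask[y][x]` is True where the object is present; the input image is not modified.
    """
    if len(image) != len(mask) or any(len(r) != len(m) for r, m in zip(image, mask)):
        raise ValueError("image and mask must have the same shape")
    return [
        [pixel if keep else WHITE for pixel, keep in zip(img_row, mask_row)]
        for img_row, mask_row in zip(image, mask)
    ]


if __name__ == "__main__":
    img = [[(10, 20, 30), (40, 50, 60)],
           [(70, 80, 90), (100, 110, 120)]]
    obj_mask = [[True, False],
                [False, True]]
    print(whiten_background(img, obj_mask))
    # [[(10, 20, 30), (255, 255, 255)], [(255, 255, 255), (100, 110, 120)]]
```

Returning a new grid instead of editing in place keeps the original reference image available for other uses, such as the multimodal branch described above.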

Finally, they generate new images with the UNet diffusion model, conditioning it on the contextualized image features through object cross-attention layers that were trained specifically for this purpose.
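
How image features might enter the UNet through dedicated cross-attention layers can be sketched as follows. The sketch assumes a decoupled design similar to IP-Adapter, where the text branch and the image branch are attended separately and then summed with a weight `scale`. The article does not spell out MoMA's exact formulation, all names here (`decoupled_cross_attention`, `scale`) are hypothetical, and the learned query/key/value projections are omitted.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(xs: List[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Scaled dot-product attention, softmax(q k^T / sqrt(d)) v, one query row at a time."""
    d = len(q[0])
    out = []
    for qi in q:
        scores = [sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d) for kj in k]
        weights = softmax(scores)
        out.append([sum(w * vj[c] for w, vj in zip(weights, v)) for c in range(len(v[0]))])
    return out


def decoupled_cross_attention(q: Matrix, k_text: Matrix, v_text: Matrix,
                              k_img: Matrix, v_img: Matrix, scale: float = 1.0) -> Matrix:
    """Hypothetical: text cross-attention plus a separately weighted image cross-attention branch."""
    text_out = attention(q, k_text, v_text)
    img_out = attention(q, k_img, v_img)
    return [[t + scale * i for t, i in zip(t_row, i_row)]
            for t_row, i_row in zip(text_out, img_out)]


if __name__ == "__main__":
    # One query, one text token, one image token: each branch returns its single value row.
    q = [[1.0, 0.0]]
    out = decoupled_cross_attention(q, [[0.5, 0.5]], [[1.0, 2.0]],
                                    [[0.0, 1.0]], [[10.0, 20.0]], scale=0.5)
    print(out)  # [[6.0, 12.0]]
```

Keeping the image branch separate means only its layers need training while the text branch of the base model stays frozen, and `scale` offers a simple knob for trading prompt adherence against reference fidelity.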

The team built a training set of 282K image/caption/image-mask triplets from the Open Images V7 dataset. Captions were generated with BLIP-2 OPT-6.7B, after which subjects related to humans, as well as color, shape, and texture keywords, were removed.
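
The caption-cleaning step can be pictured as a small keyword filter. The keyword sets below are hypothetical placeholders, since the article does not list the actual vocabulary. The sketch also assumes that captions with human subjects are dropped entirely, while color, shape, and texture words are simply deleted from the remaining captions.

```python
import re
from typing import Iterable, List, Optional

# Hypothetical keyword sets for illustration; the real lists are not given in the article.
HUMAN_WORDS = {"man", "woman", "men", "women", "person", "people", "boy", "girl", "child"}
COLOR_WORDS = {"red", "blue", "green", "yellow", "white", "black"}
SHAPE_WORDS = {"round", "square", "circular", "rectangular"}
TEXTURE_WORDS = {"wooden", "metallic", "striped", "furry", "smooth"}
ATTRIBUTE_WORDS = COLOR_WORDS | SHAPE_WORDS | TEXTURE_WORDS


def clean_caption(caption: str) -> Optional[str]:
    """Return the caption without attribute words, or None if it mentions a human subject."""
    words = re.findall(r"[a-z]+", caption.lower())  # whole lowercase words, punctuation dropped
    if any(w in HUMAN_WORDS for w in words):
        return None  # assumption: samples with human subjects are dropped entirely
    return " ".join(w for w in words if w not in ATTRIBUTE_WORDS)


def filter_captions(captions: Iterable[str]) -> List[str]:
    """Apply clean_caption to each caption and keep only the surviving ones."""
    cleaned = (clean_caption(c) for c in captions)
    return [c for c in cleaned if c is not None]


if __name__ == "__main__":
    samples = ["A red wooden chair in a room", "A man riding a bike", "A round blue cup."]
    print(filter_captions(samples))  # ['a chair in a room', 'a cup']
```

Matching whole tokens rather than substrings avoids accidentally stripping words such as "blackboard" just because they contain a color name.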

The experimental results demonstrate MoMA's strengths. By leveraging a Multimodal Large Language Model (MLLM), the model combines the visual characteristics of the target object with text prompts, enabling changes to both the background context and the object's texture. The proposed self-attention shortcut substantially improves detail quality while adding little computational overhead. The model also applies more broadly: it can be plugged directly into community models fine-tuned from the same base model, opening up new possibilities in image generation and machine learning.
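
One way a self-attention "shortcut" can pass reference-object detail into generation is to let the generated image's tokens attend over their own tokens plus the reference object's tokens. The sketch below assumes that pattern, uses identity projections, and treats `self_attention_with_reference` as a hypothetical name; it illustrates the general idea rather than MoMA's actual implementation.

```python
import math
from typing import List

Matrix = List[List[float]]


def attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Scaled dot-product attention with a numerically stable softmax."""
    d = len(q[0])
    out = []
    for qi in q:
        scores = [sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d) for kj in k]
        m = max(scores)
        exps = [math.exp(s - m) for s in scores]
        total = sum(exps)
        weights = [e / total for e in exps]
        out.append([sum(w * vj[c] for w, vj in zip(weights, v)) for c in range(len(v[0]))])
    return out


def self_attention_with_reference(x: Matrix, ref: Matrix) -> Matrix:
    """Hypothetical shortcut: queries come from the generated image's tokens `x`,
    while keys and values are `x` concatenated with the reference-object tokens `ref`.
    Learned projections are omitted (identity) to keep the sketch small."""
    kv = x + ref  # list concatenation along the token axis
    return attention(x, kv, kv)


if __name__ == "__main__":
    generated = [[0.0, 0.0]]
    reference = [[2.0, 4.0]]
    print(self_attention_with_reference(generated, reference))  # [[1.0, 2.0]]
    print(self_attention_with_reference(generated, []))  # [[0.0, 0.0]]
```

In this pattern the reference tokens only add extra keys and values to an attention layer that already exists, so no new layers are needed and the added cost grows with the number of reference tokens. That is consistent with the article's point that the shortcut imposes little computational burden.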

The post MoMA: An Open-Vocabulary and Training Free Personalized Image Model that Boasts Flexible Zero-Shot Capabilities appeared first on MarkTechPost.