Automated Audio Captioning (AAC) is an emerging field that translates audio streams into descriptive natural language text. Building AAC systems depends on large, accurately annotated audio-text datasets. However, manually pairing audio segments with text captions is costly, labor-intensive, and prone to inconsistencies and biases, which limits the scalability of AAC technologies.

Existing AAC research relies on encoder-decoder architectures in which audio encoders such as PANN, AST, and HTSAT extract audio features, and language generation components such as BART and GPT-2 turn those features into captions. The CLAP model extends this line of work by using contrastive learning to align audio and text in a shared multimodal embedding space. Techniques such as adversarial training and contrastive losses further refine AAC systems, improving caption diversity and accuracy and addressing the vocabulary limitations of earlier models.
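CLAP-style alignment is commonly described as a symmetric, InfoNCE-style contrastive loss over a batch of audio-caption pairs: each clip should be most similar to its own caption and vice versa. The NumPy sketch below illustrates that idea only. The fixed temperature of 0.07 and the random stand-in embeddings are illustrative assumptions (CLAP-style models typically learn the temperature), and the function names are hypothetical.

```python
import numpy as np

def l2_normalize(x: np.ndarray) -> np.ndarray:
    """Scale each row to unit length so dot products become cosine similarities."""
    return x / np.linalg.norm(x, axis=1, keepdims=True)

def log_softmax(logits: np.ndarray, axis: int) -> np.ndarray:
    """Numerically stable log-softmax along one axis."""
    shifted = logits - logits.max(axis=axis, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=axis, keepdims=True))

def symmetric_contrastive_loss(audio_emb: np.ndarray, text_emb: np.ndarray,
                               temperature: float = 0.07) -> float:
    """Row i of audio_emb and row i of text_emb form a matching pair; every other
    row in the batch acts as a negative. Cross-entropy is computed in both
    directions (audio->text over rows, text->audio over columns) and averaged."""
    a = l2_normalize(audio_emb)
    t = l2_normalize(text_emb)
    logits = a @ t.T / temperature            # (batch, batch) scaled similarities
    idx = np.arange(logits.shape[0])          # positives sit on the diagonal
    loss_audio_to_text = -log_softmax(logits, axis=1)[idx, idx].mean()
    loss_text_to_audio = -log_softmax(logits, axis=0)[idx, idx].mean()
    return float((loss_audio_to_text + loss_text_to_audio) / 2)

# Toy check: random vectors stand in for encoder outputs (assumption).
rng = np.random.default_rng(0)
text = rng.normal(size=(8, 512))
audio_matched = text + 0.1 * rng.normal(size=text.shape)  # each clip near its caption
audio_mismatched = np.roll(audio_matched, 1, axis=0)      # every pair misaligned

loss_matched = symmetric_contrastive_loss(audio_matched, text)
loss_mismatched = symmetric_contrastive_loss(audio_mismatched, text)
print(loss_matched < loss_mismatched)  # True
```

Minimizing this loss pulls matching audio and text embeddings together and pushes mismatched pairs apart, which is what later makes it plausible to swap one CLAP encoder for the other.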

Researchers from Microsoft and Carnegie Mellon University have proposed a text-only training method for AAC systems based on the CLAP model. The approach requires no audio data during training and relies on text alone, a clear departure from the traditional AAC training process. The resulting system can caption audio without ever learning directly from audio inputs.

Methodologically, the researchers use the CLAP framework to train AAC systems exclusively on text. During training, captions are generated by a decoder conditioned on embeddings from a CLAP text encoder. At inference, the text encoder is replaced with a CLAP audio encoder so that the system can process real audio inputs. Because CLAP's text and audio embeddings do not occupy exactly the same region of the shared space (the modality gap), the method combines Gaussian noise injection with a lightweight learnable adapter to bridge this gap and keep performance robust when the encoders are swapped. The model is evaluated on two widely used datasets, AudioCaps and Clotho.
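The conditioning path described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: it assumes a single linear adapter initialised to identity, a noise standard deviation of 0.1, a 512-dimensional embedding, and random unit vectors in place of the frozen CLAP encoders. All names and values here are hypothetical, and the caption decoder itself is omitted.

```python
import numpy as np

EMBED_DIM = 512   # assumed embedding width (hypothetical)
NOISE_STD = 0.1   # assumed noise scale; a tunable hyperparameter (hypothetical)

rng = np.random.default_rng(0)

def inject_gaussian_noise(emb: np.ndarray, std: float = NOISE_STD) -> np.ndarray:
    """Add zero-mean Gaussian noise to embeddings (applied at training time only)."""
    return emb + rng.normal(0.0, std, size=emb.shape)

class LinearAdapter:
    """Hypothetical lightweight adapter: one linear layer, initialised to identity.
    In a real system, W and b would be learned together with the decoder."""
    def __init__(self, dim: int):
        self.W = np.eye(dim)
        self.b = np.zeros(dim)

    def __call__(self, x: np.ndarray) -> np.ndarray:
        return x @ self.W + self.b

def decoder_condition(emb: np.ndarray, adapter: LinearAdapter, training: bool) -> np.ndarray:
    """Vector that would condition the caption decoder.
    Training: noisy CLAP *text* embedding. Inference: clean CLAP *audio* embedding."""
    if training:
        emb = inject_gaussian_noise(emb)
    return adapter(emb)

def fake_clap_embedding(batch: int) -> np.ndarray:
    """Stand-in for a frozen CLAP encoder (hypothetical): random unit vectors."""
    x = rng.normal(size=(batch, EMBED_DIM))
    return x / np.linalg.norm(x, axis=1, keepdims=True)

adapter = LinearAdapter(EMBED_DIM)
text_emb = fake_clap_embedding(4)    # training: captions only, no audio
audio_emb = fake_clap_embedding(4)   # inference: audio clips

train_cond = decoder_condition(text_emb, adapter, training=True)
infer_cond = decoder_condition(audio_emb, adapter, training=False)
print(train_cond.shape, infer_cond.shape)  # (4, 512) (4, 512)
```

The design intuition, as a hedged reading of the approach: noise at training time teaches the decoder to tolerate small perturbations around each text embedding, so an audio embedding that lands nearby at inference still yields a sensible caption, while the adapter can learn a correction that noise alone does not provide.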

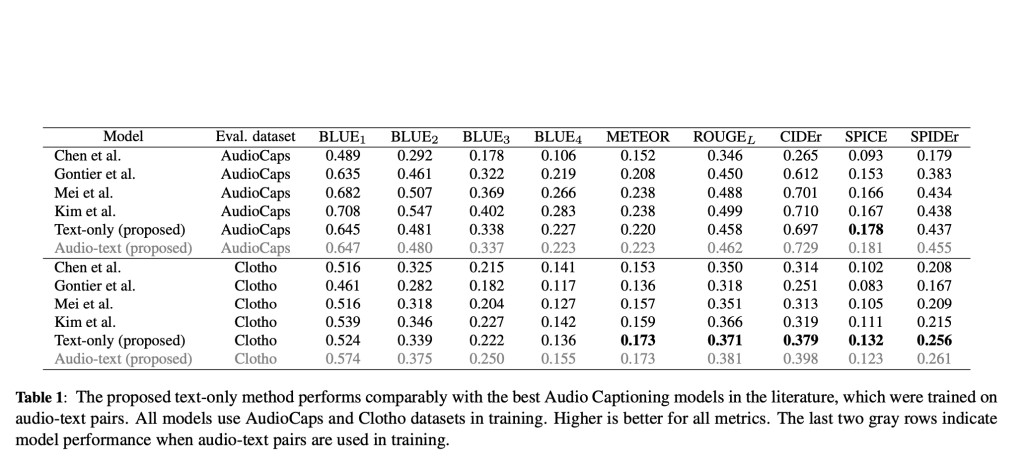

The text-only approach performed strongly in evaluation. The model achieved a SPIDEr score of 0.456 on AudioCaps and 0.255 on Clotho, competitive with state-of-the-art AAC systems trained on paired audio-text data. The combination of Gaussian noise injection and the learnable adapter also narrowed the modality gap, with the embedding variance reduced to approximately 0.015. These quantitative results support the effectiveness of text-only training for generating accurate and relevant audio captions.
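For context on the scores above, SPIDEr is, by its standard definition, the average of two other captioning metrics, SPICE and CIDEr (audio captioning benchmarks often use the CIDEr-D variant):

$$
\text{SPIDEr} = \frac{\text{SPICE} + \text{CIDEr}}{2}
$$

A higher SPIDEr therefore reflects both better semantic content matching (SPICE) and better consensus n-gram overlap with the reference captions (CIDEr).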

In summary, the research presents a text-only training method for AAC built on the CLAP model that removes the need for paired audio-text data when training the captioner, while still achieving competitive SPIDEr scores on AudioCaps and Clotho. This approach simplifies AAC system development, improves scalability, and reduces reliance on costly data annotation. Advances of this kind in AAC training could broaden the applications and accessibility of audio captioning technologies.

The post Microsoft and CMU Researchers Propose a Machine Learning Method to Train an AAC (Automated Audio Captioning) System Using Only Text appeared first on MarkTechPost.