Natural Language Processing (NLP) tasks rely heavily on text embedding models, which translate the semantic meaning of text into vector representations. These representations make it possible to perform a variety of NLP tasks efficiently, including information retrieval, clustering, and semantic textual similarity.
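
To make the idea concrete, here is a minimal sketch of how embeddings support retrieval and semantic textual similarity: documents are ranked by the cosine similarity between their vectors and a query vector. The 4-dimensional vectors are made up for illustration and do not come from any real model; actual embedding models produce vectors with hundreds or thousands of dimensions.

```python
import numpy as np

def cosine_similarity(query: np.ndarray, docs: np.ndarray) -> np.ndarray:
    """Cosine similarity between one query vector and each row of `docs`."""
    query = query / np.linalg.norm(query)
    docs = docs / np.linalg.norm(docs, axis=1, keepdims=True)
    return docs @ query

# Hypothetical toy embeddings (not produced by any real model).
doc_texts = ["How do I reset my password?", "Best pasta recipes", "Forgot login credentials"]
doc_vecs = np.array([
    [0.9, 0.1, 0.0, 0.2],
    [0.0, 0.8, 0.6, 0.1],
    [0.8, 0.0, 0.1, 0.3],
])
query_vec = np.array([0.85, 0.05, 0.05, 0.25])  # stands in for "I can't sign in"

scores = cosine_similarity(query_vec, doc_vecs)
ranking = np.argsort(-scores)  # indices sorted from most to least similar
for i in ranking:
    print(f"{scores[i]:.3f}  {doc_texts[i]}")
```

The two account-related documents score close to 1, while the pasta document scores far lower, which is the behavior a good embedding model should produce for semantically related text.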

Pre-trained bidirectional encoders or encoder-decoders, such as BERT and T5, have historically been the preferred models for this purpose. More recently, the trend in text embedding has been to use decoder-only Large Language Models (LLMs).

Even so, the NLP community has been slow to adopt decoder-only LLMs for text embedding tasks. This reluctance stems partly from their causal attention mechanism, which restricts their capacity to produce rich contextualized representations. With causal attention, the representation of each token depends only on the tokens that precede it, which limits the model’s ability to capture information from the full input sequence.
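
The effect of causal attention can be seen in a small NumPy sketch of single-head self-attention. As a simplification, the queries, keys, and values are the raw token vectors (no learned projections), and the tokens are random vectors rather than outputs of a real LLM. Editing only the last token leaves the first token's output unchanged under a causal mask, but changes it when attention is bidirectional.

```python
import numpy as np

def attention(x: np.ndarray, causal: bool) -> np.ndarray:
    """Single-head self-attention with identity Q/K/V projections (a simplification)."""
    n, d = x.shape
    scores = x @ x.T / np.sqrt(d)
    if causal:
        # Position i may only attend to positions j <= i.
        future = np.triu(np.ones((n, n), dtype=bool), k=1)
        scores = np.where(future, -np.inf, scores)
    # Numerically stable row-wise softmax; masked entries become exactly 0.
    weights = np.exp(scores - scores.max(axis=1, keepdims=True))
    weights /= weights.sum(axis=1, keepdims=True)
    return weights @ x

rng = np.random.default_rng(0)
tokens = rng.normal(size=(5, 8))  # 5 hypothetical tokens, 8 dimensions each
edited = tokens.copy()
edited[-1] += 1.0  # change only the last token

for causal in (True, False):
    first_before = attention(tokens, causal)[0]
    first_after = attention(edited, causal)[0]
    changed = not np.allclose(first_before, first_after)
    print(f"causal={causal}: first token's output changed? {changed}")
```

Under the causal mask, the first token can only attend to itself, so information from later tokens never reaches it.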

Despite this drawback, decoder-only LLMs have several advantages over their encoder-only counterparts. They are more sample-efficient because they learn from all input tokens during pre-training. They also benefit from a mature ecosystem of tooling and pre-training recipes. Finally, recent improvements in instruction fine-tuning have made decoder-only LLMs highly proficient at following instructions, which makes them adaptable to a wide range of NLP applications.
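
The sample-efficiency argument comes down to simple arithmetic. In next-token prediction, every position except the last is trained to predict the token that follows it, whereas BERT-style masked language modeling only computes a loss on the masked fraction, 15% in BERT's standard recipe. The sketch below is an idealized count under those assumptions; it ignores details such as special tokens and padding.

```python
def supervised_positions(seq_len: int, objective: str, mask_rate: float = 0.15) -> int:
    """Idealized count of tokens that receive a prediction loss in one sequence."""
    if objective == "causal_lm":
        # Every position except the last predicts the token that follows it.
        return seq_len - 1
    if objective == "mlm":
        # Only the randomly masked fraction is predicted (BERT masked 15%).
        return round(seq_len * mask_rate)
    raise ValueError(f"unknown objective: {objective}")

for objective in ("causal_lm", "mlm"):
    print(objective, supervised_positions(512, objective))
```

For a 512-token sequence this gives 511 training targets for a decoder-only model versus about 77 for masked language modeling.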

To overcome this drawback of decoder-only LLMs for text embedding, a team of researchers from Mila, McGill University, ServiceNow Research, and Facebook CIFAR AI Chair has proposed LLM2Vec, a simple, unsupervised method for converting any pre-trained decoder-only LLM into a text encoder. LLM2Vec is highly data- and parameter-efficient and does not require any labeled data.

LLM2Vec consists of three simple steps. First, it enables bidirectional attention, so that each token's representation accounts for the tokens both before and after it. Second, it adapts the model to this new attention pattern with masked next token prediction (MNTP), in which the model predicts masked tokens in the input sequence, helping it efficiently understand and encode contextual information. Finally, it applies unsupervised contrastive learning, which pulls the representations of similar instances together and pushes dissimilar ones apart in the embedding space, helping the model learn robust sequence representations.
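
The second and third steps can be sketched in NumPy under several stated assumptions. The masking follows MNTP as described in the LLM2Vec paper, where the loss for a masked token at position i is computed from the model's output at position i-1; `MASK_ID` and the `-100` "no loss" label are hypothetical placeholders borrowed from common practice. The contrastive loss is written in the SimCSE style: the same sentence passed through the model twice with different dropout masks forms a positive pair, and other sentences in the batch act as negatives. The temperature of 0.05 is an assumed value. No model is trained here; random vectors stand in for LLM outputs.

```python
import numpy as np

MASK_ID = 0      # hypothetical mask-token id (decoder-only vocabularies often lack one)
IGNORE = -100    # hypothetical label meaning "no loss at this position"

def mntp_batch(token_ids, mask_rate, rng):
    """Mask tokens; the target for masked position i is attached to position i-1."""
    ids = np.asarray(token_ids)
    n = len(ids)
    # Position 0 is never masked: no earlier position exists to predict it from.
    candidates = np.arange(1, n)
    k = max(1, int(round(mask_rate * len(candidates))))
    masked = rng.choice(candidates, size=k, replace=False)
    inputs = ids.copy()
    inputs[masked] = MASK_ID
    labels = np.full(n, IGNORE)
    labels[masked - 1] = ids[masked]  # output at i-1 predicts original token i
    return inputs, labels

def simcse_loss(z1, z2, temperature=0.05):
    """InfoNCE loss: z1[i] and z2[i] are two views of sentence i; other rows are negatives."""
    z1 = z1 / np.linalg.norm(z1, axis=1, keepdims=True)
    z2 = z2 / np.linalg.norm(z2, axis=1, keepdims=True)
    logits = z1 @ z2.T / temperature
    logits -= logits.max(axis=1, keepdims=True)  # numerical stability
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return -np.mean(np.diag(log_probs))  # cross-entropy with the diagonal as targets

def dropout(x, p, rng):
    """Inverted dropout, used here to create two noisy views of the same embeddings."""
    keep = rng.random(x.shape) >= p
    return x * keep / (1 - p)

rng = np.random.default_rng(0)
sentence = [101, 7, 42, 19, 88, 5, 63, 12]  # hypothetical token ids
inputs, labels = mntp_batch(sentence, mask_rate=0.25, rng=rng)
print(inputs, labels)

base = rng.normal(size=(4, 16))  # stand-in embeddings for 4 sentences
view1, view2 = dropout(base, 0.1, rng), dropout(base, 0.1, rng)
print(simcse_loss(view1, view2))        # positives aligned: low loss
print(simcse_loss(view1, view2[::-1]))  # positives misaligned: much higher loss
```

Attaching each masked token's label to the preceding position keeps the training signal close to the next-token prediction the model was pre-trained on, while bidirectional attention lets that position also see the tokens that follow.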

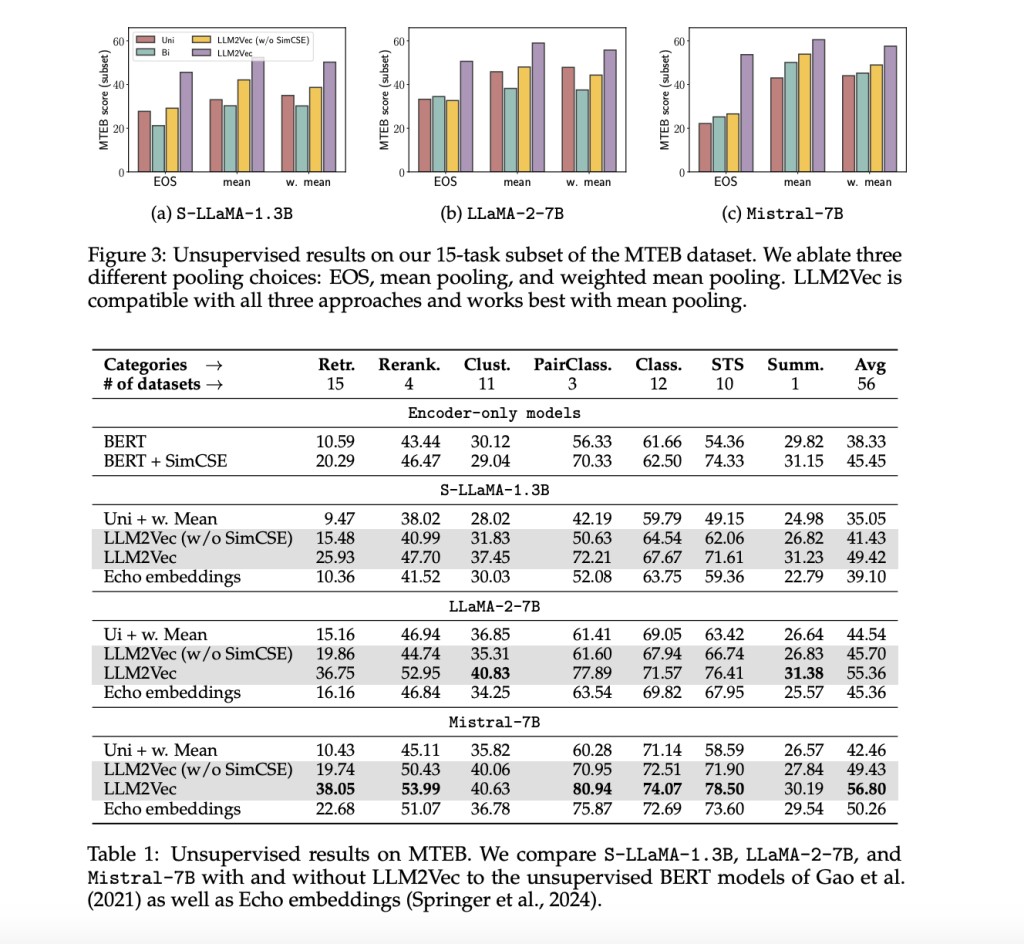

To verify its effectiveness, the team applied LLM2Vec to three well-known LLMs ranging from 1.3 billion to 7 billion parameters and evaluated the converted models on a range of English word-level and sequence-level tasks. The converted models showed notable performance gains over conventional encoder-only models, especially on word-level tasks. The method also set a new state-of-the-art for unsupervised models on the Massive Text Embeddings Benchmark (MTEB).

The team reports that combining LLM2Vec with supervised contrastive learning achieves state-of-the-art performance on MTEB. The extensive experiments and empirical results highlight how effectively LLMs can serve as universal text encoders. This conversion is achieved in a parameter-efficient way, without expensive adaptation or synthetic data generated by proprietary models such as GPT-4.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post LLM2Vec: A Simple AI Approach to Transform Any Decoder-Only LLM into a Text Encoder Achieving SOTA Performance on MTEB in the Unsupervised and Supervised Category appeared first on MarkTechPost.