The abundance of web-scale text data has been a major factor in the development of generative language models, including those pretrained as general-purpose foundation models and then adapted to specific Natural Language Processing (NLP) tasks. These models learn complex linguistic structures and patterns from enormous volumes of text and then apply them to a wide range of downstream tasks.

However, their performance on these tasks depends heavily on the quality and quantity of the data used for fine-tuning, particularly in real-world settings where accurate predictions on rare concepts or minority classes are essential. Active learning, which selects the most informative unlabeled examples for annotation, faces substantial challenges on imbalanced classification problems, mainly because minority-class examples are intrinsically rare.

To ensure that enough minority cases are included, a large pool of unlabeled data has to be collected. Applying conventional pool-based active learning techniques to such imbalanced pools brings its own set of challenges. With large pools, these methods are computationally expensive, because the model has to score every unlabeled example in each iteration, and they tend to overfit the initial decision boundary. As a result, they may not explore the input space sufficiently and can fail to find minority examples.

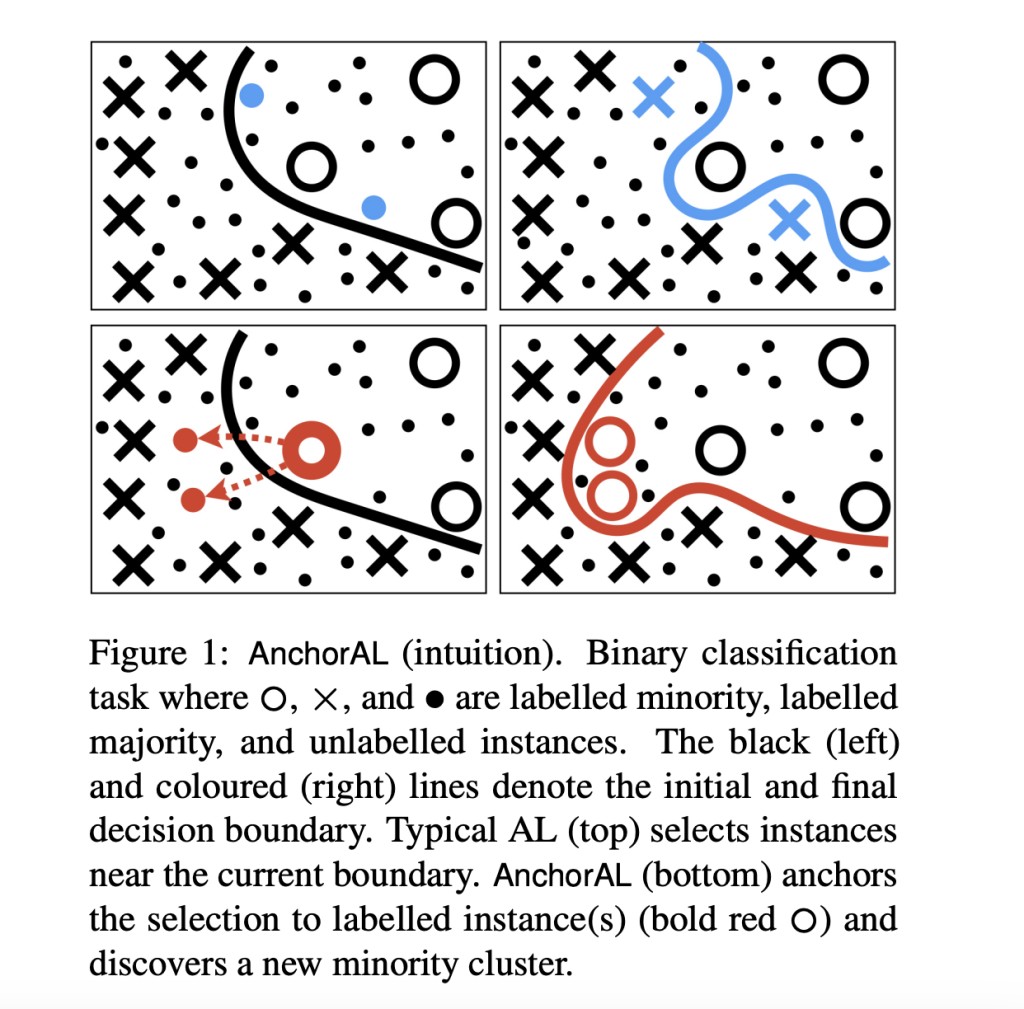

To address these issues, researchers from the University of Cambridge have proposed AnchorAL, a novel method for active learning in imbalanced classification tasks. In each iteration, AnchorAL selects class-specific examples, called anchors, from the labeled set. The anchors serve as reference points for retrieving the most similar unlabeled examples from the pool. These similar examples are gathered into a sub-pool, on which active learning is then run.
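
The anchor and sub-pool steps can be illustrated with a small sketch. It is a hypothetical illustration, not the authors' code: the function names `select_anchors` and `build_subpool`, the random sampling of anchors per class, cosine similarity over embeddings, and scoring each unlabeled item by its highest similarity to any anchor are all assumptions made for the example.

```python
import math
import random
from collections import defaultdict


def cosine(u, v):
    """Cosine similarity between two equal-length embedding vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def select_anchors(labeled, per_class, rng):
    """Sample up to `per_class` labeled embeddings from each class as anchors.

    `labeled` is a list of (embedding, label) pairs. Random sampling is an
    illustrative assumption, not necessarily AnchorAL's exact rule.
    """
    by_class = defaultdict(list)
    for emb, label in labeled:
        by_class[label].append(emb)
    anchors = []
    for embs in by_class.values():
        anchors.extend(rng.sample(embs, min(per_class, len(embs))))
    return anchors


def build_subpool(pool, anchors, subpool_size):
    """Return indices of the `subpool_size` pool items closest to the anchors.

    Each unlabeled item is scored by its highest cosine similarity to any
    anchor (a hypothetical scoring rule chosen for illustration).
    """
    scores = [max(cosine(x, a) for a in anchors) for x in pool]
    ranked = sorted(range(len(pool)), key=lambda i: scores[i], reverse=True)
    return ranked[:subpool_size]


# Toy usage: 2-D "embeddings" with one majority and one minority class.
rng = random.Random(0)
labeled = [([1.0, 0.0], "majority"), ([0.9, 0.1], "majority"), ([0.0, 1.0], "minority")]
pool = [[1.0, 0.05], [0.05, 1.0], [-1.0, 0.0], [0.7, 0.7]]
anchors = select_anchors(labeled, per_class=1, rng=rng)
subpool = build_subpool(pool, anchors, subpool_size=2)
```

Because every class contributes anchors, the minority-looking item at index 1 enters the sub-pool alongside the majority-looking item at index 0. In a realistic setting the embeddings would presumably come from the model being fine-tuned, and an approximate nearest-neighbour index could replace the brute-force similarity loop.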

Because the sub-pool is small and of fixed size, AnchorAL allows any active learning strategy to be applied to large datasets, effectively scaling the process. Selecting new anchors dynamically in each iteration promotes class balance and keeps the model from overfitting the initial decision boundary. This dynamic adjustment also helps the model discover new clusters of minority instances within the dataset.
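
The iterative procedure can be sketched end to end as a simplified, hypothetical loop. It is not the authors' implementation: the random anchor sampling, the max-similarity sub-pool rule, the nearest-centroid `centroid_proba` classifier (standing in for a fine-tuned language model), and least-confidence uncertainty sampling (standing in for whichever active learning strategy is plugged in) are all assumptions. The point it aims to show is the structure: anchors are redrawn every round, and the acquisition strategy only ever scores `subpool_size` examples, however large `pool` grows.

```python
import math
import random
from collections import defaultdict


def cosine(u, v):
    """Cosine similarity between two equal-length embedding vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def centroid_proba(x, labeled):
    """Hypothetical stand-in classifier: softmax over similarity to class centroids."""
    groups = defaultdict(list)
    for emb, y in labeled:
        groups[y].append(emb)
    sims = {}
    for y, embs in groups.items():
        centroid = [sum(col) / len(embs) for col in zip(*embs)]
        sims[y] = cosine(x, centroid)
    z = sum(math.exp(s) for s in sims.values())
    return {y: math.exp(s) / z for y, s in sims.items()}


def anchoral_loop(labeled, pool, oracle, rounds, per_class, subpool_size, batch_size, seed=0):
    """Run a simplified AnchorAL-style loop and return the grown labeled set."""
    rng = random.Random(seed)
    labeled = list(labeled)
    unlabeled = set(range(len(pool)))
    for _ in range(rounds):
        # 1. Draw fresh anchors from every class on each iteration.
        by_class = defaultdict(list)
        for emb, y in labeled:
            by_class[y].append(emb)
        anchors = [a for embs in by_class.values()
                   for a in rng.sample(embs, min(per_class, len(embs)))]
        # 2. Keep only the fixed-size sub-pool most similar to the anchors.
        ranked = sorted(unlabeled,
                        key=lambda i: max(cosine(pool[i], a) for a in anchors),
                        reverse=True)
        subpool = ranked[:subpool_size]
        # 3. Run the acquisition strategy on the sub-pool only
        #    (here: least-confidence uncertainty sampling).
        subpool.sort(key=lambda i: max(centroid_proba(pool[i], labeled).values()))
        for i in subpool[:batch_size]:
            labeled.append((pool[i], oracle(i)))  # query the annotator
            unlabeled.discard(i)
    return labeled


# Toy usage: a random 2-D pool where the minority class is a small region.
pool_rng = random.Random(1)
pool = [[pool_rng.uniform(-1, 1), pool_rng.uniform(-1, 1)] for _ in range(200)]


def oracle(i):
    """Simulated annotator for the toy pool."""
    return "minority" if pool[i][1] > 0.8 else "majority"


seed_set = [([1.0, 0.0], "majority"), ([0.0, 1.0], "minority")]
result = anchoral_loop(seed_set, pool, oracle, rounds=3, per_class=1,
                       subpool_size=20, batch_size=5)
```

In this sketch the similarity search still touches every unlabeled item, but it only computes cheap vector similarities; the potentially expensive model-based scoring in step 3 is confined to the fixed-size sub-pool.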

Experimental evaluations across a range of classification tasks, active learning strategies, and model architectures have demonstrated AnchorAL's effectiveness. It offers several benefits over existing practices, as follows.

Efficiency: AnchorAL substantially reduces runtime, often from hours to minutes.

Model Performance: AnchorAL trains models that achieve higher classification performance than those trained with competing methods.

Equitable Representation of Minority Classes: AnchorAL produces more balanced labeled datasets, which are necessary for accurate classification.
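
The article does not say how balance is measured. One common way to quantify it, assumed here purely for illustration (the paper may use a different measure), is the imbalance ratio of the labeled set together with the share of minority-class labels. The helper names below are hypothetical.

```python
from collections import Counter


def imbalance_ratio(labels):
    """Largest class count divided by smallest class count (1.0 = perfectly balanced).

    Only classes that actually appear in `labels` are counted.
    """
    counts = Counter(labels)
    return max(counts.values()) / min(counts.values())


def minority_share(labels, minority):
    """Fraction of the labeled set that belongs to the `minority` class."""
    return Counter(labels)[minority] / len(labels)


labels = ["neg"] * 90 + ["pos"] * 10
print(imbalance_ratio(labels))        # 9.0
print(minority_share(labels, "pos"))  # 0.1
```

Under this measure, a more balanced labeled set shows a ratio closer to 1.0 and a larger minority share.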

In conclusion, AnchorAL is a promising development in active learning for imbalanced classification, offering a practical solution to the problems posed by rare minority classes and large datasets.

Check out the Paper and GitHub. All credit for this research goes to the researchers of this project.

The post Researchers at the University of Cambridge Propose AnchorAL: A Unique Machine Learning Method for Active Learning in Unbalanced Classification Tasks appeared first on MarkTechPost.
