Mathematical reasoning is vital for problem-solving and decision-making, and it is an important capability to assess in large language models (LLMs). Evaluations of LLMs' mathematical reasoning usually focus on the final answer rather than on the details of the reasoning process. Current benchmarks such as the Open LLM Leaderboard mainly report overall accuracy, which can overlook logical errors or inefficient steps. More fine-grained evaluation approaches are needed to uncover these underlying issues and to improve LLMs' reasoning.

Existing approaches typically evaluate mathematical reasoning in LLMs by comparing final answers with the ground truth and computing overall accuracy. Some methods go further and assess reasoning quality by comparing generated solution steps with reference steps. However, even when datasets provide ground-truth solutions, the same answer can often be reached through many different reasoning paths, so relying on any single reference solution is problematic. Prompting-based methods instead ask LLMs, often GPT-4, to judge generated solutions directly, but their high computational cost and limited transparency make them impractical for iterative model development.
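To make the limitation of final-answer accuracy concrete, here is a minimal Python sketch. The `Solution` class, the `final_answer_accuracy` helper, and the apple-counting problem are hypothetical illustrations, not part of any benchmark's code. The metric only ever inspects the final answer, so a solution with two compensating mistakes scores exactly the same as a sound one.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Solution:
    steps: List[str]   # intermediate reasoning steps (never inspected below)
    final_answer: str  # the only field final-answer accuracy looks at


def final_answer_accuracy(solutions: List[Solution], references: List[str]) -> float:
    """Fraction of solutions whose final answer exactly matches the reference."""
    if len(solutions) != len(references):
        raise ValueError("solutions and references must have the same length")
    if not references:
        return 0.0
    correct = sum(
        s.final_answer.strip() == r.strip() for s, r in zip(solutions, references)
    )
    return correct / len(references)


# Hypothetical problem: "A box holds 3 rows of 4 apples. How many apples?"
sound = Solution(steps=["3 rows * 4 apples = 12"], final_answer="12")
# Two compensating mistakes still land on the right number.
flawed = Solution(
    steps=["3 rows * 4 apples = 11", "add 1 for rounding: 11 + 1 = 12"],
    final_answer="12",
)

print(final_answer_accuracy([sound, flawed], ["12", "12"]))  # 1.0
```

Both solutions count as correct, even though the second one contains two invalid steps; this is exactly the blind spot that step-level evaluation targets.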

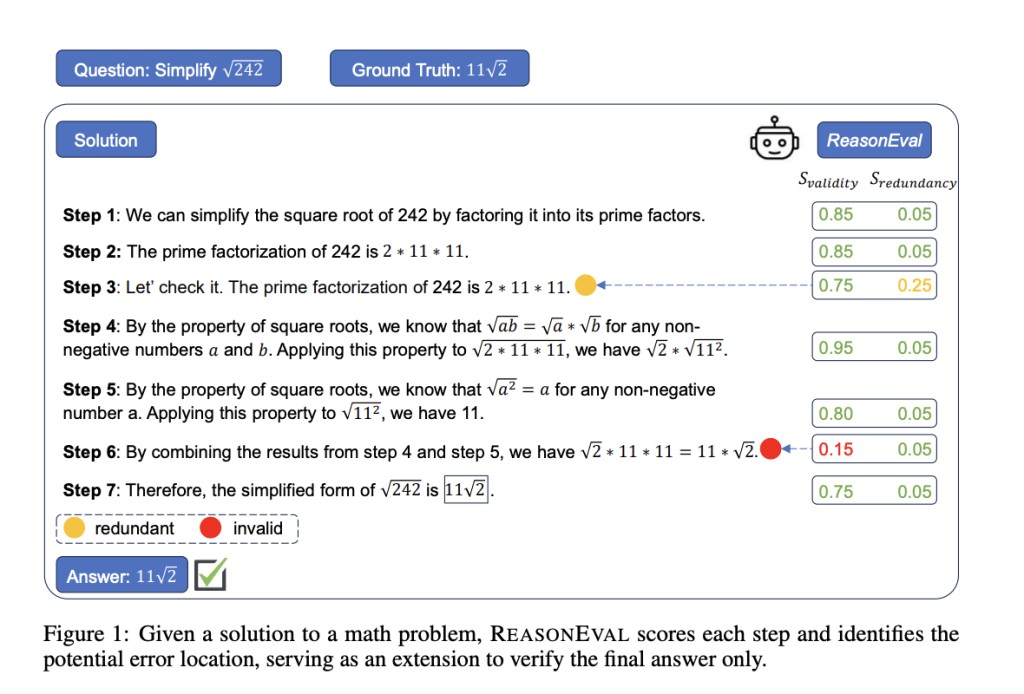

Researchers from Shanghai Jiao Tong University, Shanghai Artificial Intelligence Laboratory, Yale University, Carnegie Mellon University, and Generative AI Research Lab (GAIR) introduced REASONEVAL, a new approach to evaluating reasoning quality beyond final-answer accuracy. It uses two metrics, validity and redundancy, to characterize the quality of each reasoning step, and accompanying LLMs assess these metrics automatically. To instantiate the evaluation framework, REASONEVAL builds on base models with strong mathematical knowledge that are trained on high-quality labeled data.

REASONEVAL focuses on multi-step reasoning tasks. It evaluates each reasoning step for validity and redundancy by classifying the step as positive, neutral, or negative. Step-level validity and redundancy scores are then aggregated into solution-level scores. The researchers instantiated the method with various LLMs that differ in base model, size, and training strategy. Training data comes from PRM800K, a dataset of step-by-step solutions labeled by human annotators.
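The scoring step can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation. It assumes, based on our reading of the paper, that a step's validity score is the probability of the positive or neutral label, that its redundancy score is the probability of the neutral label, and that solution-level scores take the minimum validity and the maximum redundancy over all steps. The `StepProbs` class and the probability values are hypothetical; in practice a trained evaluator model would produce them.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class StepProbs:
    """Hypothetical per-step label probabilities from an evaluator model."""
    positive: float  # step is correct and moves the solution forward
    neutral: float   # step is correct but does not advance the solution
    negative: float  # step contains an error


def step_scores(p: StepProbs) -> Tuple[float, float]:
    # Assumed validity: probability that the step is not erroneous.
    validity = p.positive + p.neutral
    # Assumed redundancy: probability that the step is correct but unnecessary.
    redundancy = p.neutral
    return validity, redundancy


def solution_scores(steps: List[StepProbs]) -> Tuple[float, float]:
    if not steps:
        raise ValueError("a solution needs at least one step")
    scores = [step_scores(s) for s in steps]
    # One invalid step undermines the whole solution, so take the minimum.
    validity = min(v for v, _ in scores)
    # The most redundant step determines solution-level redundancy.
    redundancy = max(r for _, r in scores)
    return validity, redundancy


# Hypothetical three-step solution: a good step, a redundant step, a faulty step.
steps = [
    StepProbs(positive=0.9, neutral=0.05, negative=0.05),
    StepProbs(positive=0.2, neutral=0.7, negative=0.1),
    StepProbs(positive=0.3, neutral=0.1, negative=0.6),
]
validity, redundancy = solution_scores(steps)
print(f"validity={validity:.2f}, redundancy={redundancy:.2f}")  # validity=0.40, redundancy=0.70
```

The min/max aggregation reflects an asymmetry: a single faulty step should pull the whole solution's validity down, while a single padded step is enough to flag the solution as redundant.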

REASONEVAL achieves state-of-the-art performance on human-labeled datasets and accurately detects different types of errors introduced through perturbation. Its evaluations reveal that higher final-answer accuracy does not consistently translate into higher-quality reasoning steps on complex mathematical problems. Its assessments are also useful for selecting training data. In the perturbation experiments, logical and calculation errors cause significant drops in validity scores while redundancy scores remain stable, which shows that REASONEVAL distinguishes errors that affect validity from those that introduce redundancy.
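One plausible way to use solution-level scores for data selection is to keep only candidate training solutions that look both valid and concise. The sketch below is hypothetical and is not necessarily the paper's procedure: the `select_training_data` function, the solution ids, the scores, and the 0.5 thresholds are all illustrative assumptions.

```python
from typing import List, Tuple


def select_training_data(
    scored: List[Tuple[str, float, float]],
    min_validity: float = 0.5,
    max_redundancy: float = 0.5,
) -> List[str]:
    """Return ids of solutions whose (validity, redundancy) pass both thresholds.

    The default thresholds are hypothetical, not values reported in the paper.
    """
    return [
        sid
        for sid, validity, redundancy in scored
        if validity >= min_validity and redundancy <= max_redundancy
    ]


# Hypothetical (id, validity, redundancy) scores for three candidate solutions.
candidates = [
    ("sol-a", 0.92, 0.10),  # valid and concise: kept
    ("sol-b", 0.35, 0.05),  # likely contains an error: dropped
    ("sol-c", 0.88, 0.70),  # valid but padded with redundant steps: dropped
]
print(select_training_data(candidates))  # ['sol-a']
```

Filtering on validity alone would also keep `sol-c`; adding the redundancy threshold is a design choice for when concise reasoning traces are preferred.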

In conclusion, the research introduces REASONEVAL, an effective metric for assessing the quality of reasoning steps in terms of correctness (validity) and efficiency (redundancy). Experiments confirm that it can identify diverse errors and that it performs competitively against existing methods. REASONEVAL exposes inconsistencies between final-answer accuracy and reasoning-step quality, and it also proves effective for selecting training data.

The post This AI Paper Introduces ReasonEval: A New Machine Learning Method to Evaluate Mathematical Reasoning Beyond Accuracy appeared first on MarkTechPost.