Language models often need more exposure to fruitful mistakes during training, hindering their ability to anticipate consequences beyond the next token. LMs must improve their capacity for complex decision-making, planning, and reasoning. Transformer-based models struggle with planning due to error snowballing and difficulty in lookahead tasks. While some efforts have integrated symbolic search algorithms to address these issues, they merely supplement language models during inference. Yet, enabling language models to search for training could facilitate self-improvement, fostering more adaptable strategies to tackle challenges like error compounding and look-ahead tasks.

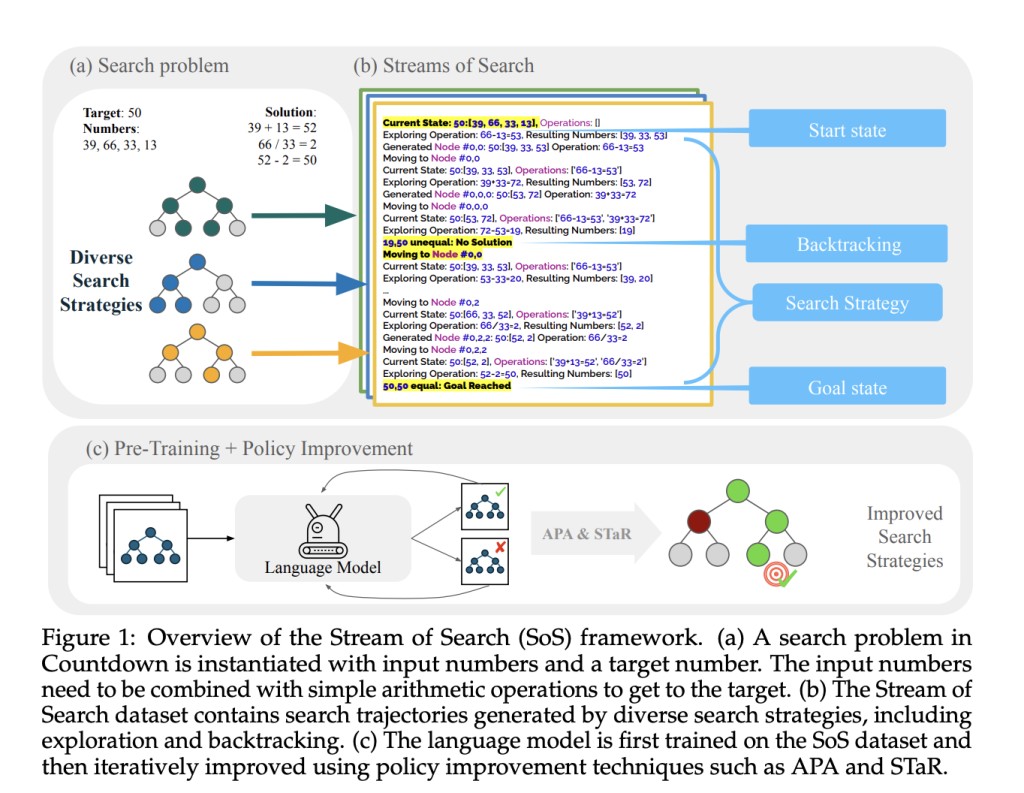

Researchers from Stanford University, MIT, and Harvey Mudd have devised a method to teach language models how to search and backtrack by representing the search process as a serialized string, Stream of Search (SoS). They proposed a unified language for search, demonstrated through the game of Countdown. Pretraining a transformer-based language model on streams of search increased accuracy by 25%, while further finetuning with policy improvement methods led to solving 36% of previously unsolved problems. This showcases that language models can learn to solve problems via search, self-improve, and discover new strategies autonomously.

Recent studies integrate language models into search and planning systems, employing them to generate and assess potential actions or states. These methods utilize symbolic search algorithms like BFS or DFS for exploration strategy. However, LMs primarily serve for inference, needing improved reasoning ability. Conversely, in-context demonstrations illustrate search procedures using language, enabling the LM to conduct tree searches accordingly. Yet, these methods are limited by the demonstrated procedures. Process supervision involves training an external verifier model to provide detailed feedback for LM training, outperforming outcome supervision but requiring extensive labeled data.

The problem domain is a Markov Decision Process (MDP), with states, actions, transition, and reward functions defining the search process. The search involves exploring a tree from the initial to the goal state through sequences of states and actions. A vocabulary of primitive operations guides different search algorithms, including current state, goal state, state queue, state expansion, exploration choice, pruning, backtracking, goal check, and heuristic. For the “Countdown†task, a synthetic dataset with diverse search strategies is created, measuring accuracy based on the model’s ability to generate correct solution trajectories and assessing alignment between different search strategies through correctness and state overlap metrics.

Researchers explore the effectiveness of training LMs on optimal solutions or suboptimal search trajectories for solving Countdown problems. Using a GPT-Neo model, researchers train on datasets representing both scenarios. Results indicate that models trained on suboptimal search trajectories outperform those trained on optimal solutions. Moreover, they investigate self-improvement strategies using reinforcement learning (RL), such as expert iteration and Advantage-Induced Policy Alignment (APA). These strategies enhance the model’s ability to solve previously unsolved and difficult problems, demonstrating improved efficiency and accuracy in navigating the search space. Additionally, insights into the models’ search strategies reveal flexible utilization of various methods, potentially leading to the discovery of heuristics.

In conclusion, the SoS framework introduces a method for language models to learn problem-solving through simulated search processes in language. Addressing criticisms of language models for planning, SoS enables models to backtrack and explore alternative paths, fostering adaptability and overcoming errors. Unlike symbolic search methods, SoS models learn internal “world models†for search, potentially improving generalization. While the study focused on the Countdown game, SoS shows promise for tackling complex real-world tasks. Future research could enhance SoS by incorporating formalizable operations and exploring domain transferability. Ultimately, SoS demonstrates the potential for LMs to excel in problem-solving through diverse search strategies and iterative refinement.

Check out the Paper and Github. All credit for this research goes to the researchers of this project. Also, don’t forget to follow us on Twitter. Join our Telegram Channel, Discord Channel, and LinkedIn Group.

If you like our work, you will love our newsletter..

Don’t Forget to join our 40k+ ML SubReddit

The post Researchers at Stanford and MIT Introduced the Stream of Search (SoS): A Machine Learning Framework that Enables Language Models to Learn to Solve Problems by Searching in Language without Any External Support appeared first on MarkTechPost.

Source: Read MoreÂ