The evolution of artificial intelligence through the development of Large Language Models (LLMs) has marked a significant milestone in the quest to mirror human-like abilities in generating text, reasoning, and decision-making. However, aligning these models with human ethics and values has remained complex. Traditional methods, such as Reinforcement Learning from Human Feedback (RLHF), have made strides in integrating human preferences by fine-tuning LLMs post-training. These methods, however, often rely on simplifying the multifaceted nature of human preferences into scalar rewards, a process that may not capture the entirety of human values and ethical considerations.
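To make the scalar-reward limitation concrete, here is a minimal sketch, assuming deterministic, strict pairwise preferences over three hypothetical responses named `A`, `B`, and `C` (real preference data is noisy and probabilistic). The helper `scalar_reward_exists` is an illustrative name, not something taken from the paper. It checks by brute force whether any ranking of the items, and therefore any scalar reward, can reproduce a given set of pairwise preferences.

```python
from itertools import permutations


def scalar_reward_exists(prefers):
    """Return True if some strict ranking agrees with every (winner, loser) pair.

    A scalar reward induces a ranking of the items, so if no ranking is
    consistent with the pairs, no scalar reward can represent them either.
    """
    items = sorted({item for pair in prefers for item in pair})
    for order in permutations(items):
        # Earlier position in `order` stands for a higher reward.
        rank = {item: position for position, item in enumerate(order)}
        if all(rank[winner] < rank[loser] for winner, loser in prefers):
            return True
    return False


# Hypothetical preference sets for illustration only.
transitive = {("A", "B"), ("B", "C"), ("A", "C")}
cyclic = {("A", "B"), ("B", "C"), ("C", "A")}

print(scalar_reward_exists(transitive))
print(scalar_reward_exists(cyclic))
```

The cyclic set has no consistent ranking, which is the kind of preference structure a single scalar reward cannot express and a general preference function can.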

Researchers from Microsoft Research have introduced Direct Nash Optimization (DNO), a strategy for refining LLMs that optimizes general preferences rather than relying solely on reward maximization. The method responds to the limitations of traditional RLHF techniques, which, despite their advances, struggle to capture complex human preferences when those preferences are compressed into a single reward signal. DNO departs from that setup by pairing a batched on-policy algorithm with a regression-based learning objective.

DNO is rooted in the observation that existing methods might not fully harness the potential of LLMs to understand and generate content that aligns with nuanced human values. It offers a framework for post-training LLMs by directly optimizing general preferences. The approach is characterized by its simplicity and scalability, which the authors attribute to its use of batched on-policy updates and regression-based objectives. According to the reported empirical evaluations, these features allow DNO to align LLMs more closely with human values.
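The idea of optimizing a general preference through self-play can be sketched on a toy problem. The code below is a simplified illustration under stated assumptions, not the paper's implementation: it uses a hypothetical fixed set of three candidate responses, a made-up preference matrix `PREF` where `PREF[i][j]` is the probability that response `i` is preferred over response `j`, and an exact tabular update in place of the batched sampling and regression-based objective that DNO uses with a real LLM. The function names and the step size `eta` are illustrative choices.

```python
import math


def expected_win_rate(pref, policy):
    """Win rate of each response against an opponent drawn from `policy`."""
    n = len(policy)
    return [sum(policy[j] * pref[i][j] for j in range(n)) for i in range(n)]


def self_play_step(pref, policy, eta=0.5):
    """One idealized update: reweight each response by exp(win_rate / eta)."""
    rewards = expected_win_rate(pref, policy)
    weights = [p * math.exp(r / eta) for p, r in zip(policy, rewards)]
    normalizer = sum(weights)
    return [w / normalizer for w in weights]


def run(pref, iterations=200, eta=0.5):
    """Start from a uniform policy and repeat the self-play update."""
    n = len(pref)
    policy = [1.0 / n] * n
    for _ in range(iterations):
        policy = self_play_step(pref, policy, eta)
    return policy


# Hypothetical preference matrix: response 0 is preferred over both others.
PREF = [
    [0.5, 0.7, 0.8],
    [0.3, 0.5, 0.6],
    [0.2, 0.4, 0.5],
]

final_policy = run(PREF)
print([round(p, 4) for p in final_policy])
```

In this toy setting the policy plays against itself: the "reward" for a response is its win rate against the current policy, so the target moves as the policy improves. With the matrix above, probability mass concentrates on response 0, the one that is preferred over every alternative.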

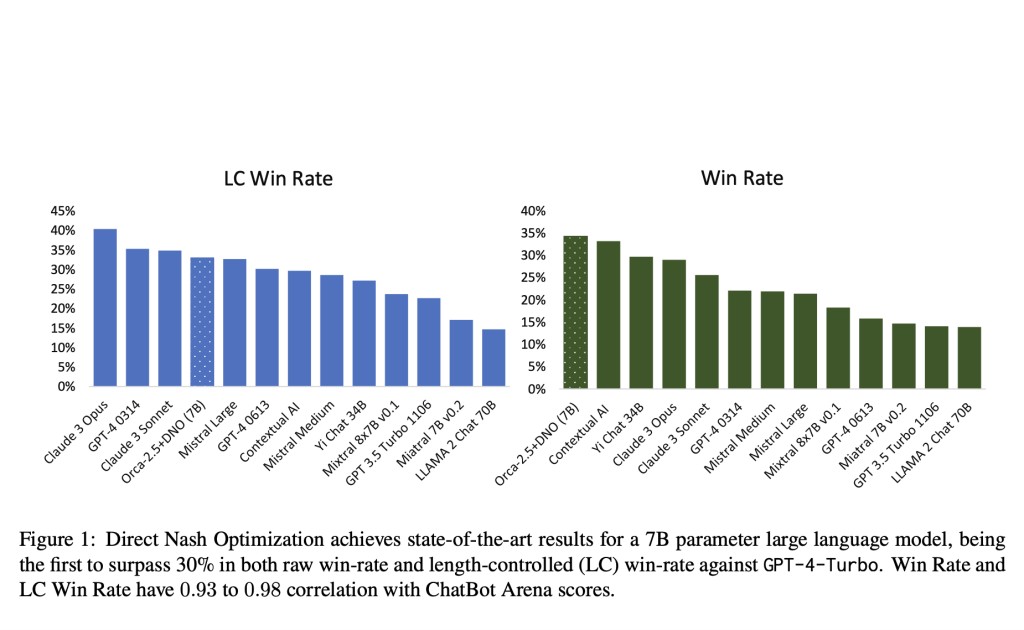

One of DNO’s standout results comes from its application to the 7B-parameter Orca-2.5 model, which reached a 33% win rate against GPT-4-Turbo on AlpacaEval 2.0. The model’s initial win rate was 7%, so applying DNO yielded an absolute gain of 26 percentage points. This result positions DNO as a strong method for post-training LLMs and suggests that a small model tuned on general preferences can compete with much larger ones on this benchmark.


In conclusion, DNO is a notable advance in refining LLMs, addressing the challenge of aligning these models with human ethical standards and complex preferences. By shifting focus from traditional reward maximization to optimizing general preferences, DNO addresses limitations of previous RLHF techniques and sets a strong reference point for post-training LLMs. The Orca-2.5 model’s gain on AlpacaEval 2.0, from a 7% to a 33% win rate against GPT-4-Turbo, underscores the method’s potential.


The post Microsoft AI Introduces Direct Nash Optimization (DNO): A Scalable Machine Learning Algorithm that Combines the Simplicity and Stability of Contrastive Learning with the Theoretical Generality of Optimizing General Preferences appeared first on MarkTechPost.
