The training of Large Language Models (LLMs) has been constrained by the limitations of subword tokenization, a method that, while effective to a degree, demands considerable computational resources. This has capped the potential for model scaling and made training on expansive datasets prohibitively expensive. The challenge is twofold: compress text enough to make model training more efficient while maintaining or even improving model performance.

Existing research includes leveraging transformer language models, such as the Chinchilla model, for efficient data compression, demonstrating substantial reductions in text size. Adaptations of Arithmetic Coding for better LLM compatibility and explorations of “token-free” language modeling through convolutional downsampling offer alternative paths for neural tokenization. The use of learned tokenizers in audio compression and the application of GZip’s modeling components to varied AI tasks extend the utility of compression algorithms. Studies employing static Huffman coding with n-gram models take a different approach, prioritizing simplicity over maximum compression efficiency.

Google DeepMind and Anthropic researchers have introduced a novel approach for training LLMs on neurally compressed text, named “Equal-Info Windows.” This technique achieves significantly higher compression rates than traditional methods without compromising the learnability or performance of LLMs. The key innovation is a way of producing highly compressed text that an LLM can still learn from, so that training and inference remain both efficient and effective.

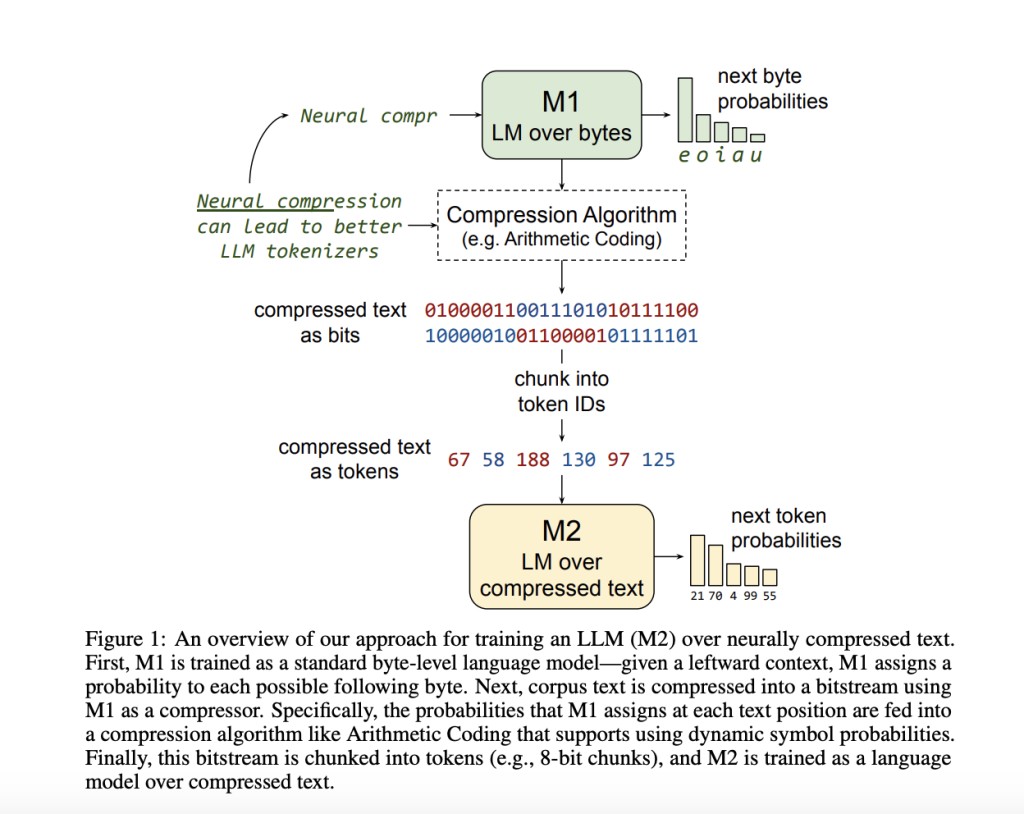

The methodology employs a two-model system: M1, a smaller language model that compresses text using Arithmetic Coding, and M2, a larger LLM trained on the compressed output. The text is segmented into windows that each compress to the same fixed bit length, and the resulting bitstream is then tokenized for M2 training. The research uses the C4 (Colossal Clean Crawled Corpus) dataset for model training. By keeping the compression rate consistent, the “Equal-Info Windows” setup gives M2 stable inputs and aims to preserve efficiency and effectiveness across large datasets.
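The windowing step can be illustrated with a minimal Python sketch. It is not the researchers' implementation: a smoothed byte-unigram model stands in for M1 (which is a neural language model in the research), and the ideal code length, the sum of -log2 p over the bytes in a window, is used as a proxy for the number of bits an arithmetic coder would emit. The function names and the 16-bit and 8-bit defaults are assumptions chosen for illustration.

```python
import math
from collections import Counter


def unigram_model(corpus: bytes) -> dict:
    """Hypothetical stand-in for M1: add-one smoothed byte probabilities."""
    counts = Counter(corpus)
    total = len(corpus) + 256
    return {b: (counts.get(b, 0) + 1) / total for b in range(256)}


def equal_info_windows(text: bytes, probs: dict, bits_per_window: int = 16) -> list:
    """Greedily cut text into windows whose ideal code length fits the bit budget.

    Assumption: ideal code length approximates arithmetic-coded length.
    A single byte that costs more than the budget still gets its own window.
    """
    windows, start, used = [], 0, 0.0
    for i, b in enumerate(text):
        cost = -math.log2(probs[b])  # information content of this byte in bits
        if used + cost > bits_per_window and i > start:
            windows.append(text[start:i])  # close the window before this byte
            start, used = i, 0.0
        used += cost
    if start < len(text):
        windows.append(text[start:])
    return windows


def bits_to_tokens(bits: str, bits_per_token: int = 8) -> list:
    """Split a compressed bitstring into fixed-width integer token IDs for M2."""
    if len(bits) % bits_per_token != 0:
        raise ValueError("bitstring length must be a multiple of bits_per_token")
    return [int(bits[i:i + bits_per_token], 2)
            for i in range(0, len(bits), bits_per_token)]
```

Calling `equal_info_windows(text, unigram_model(text))` returns variable-length byte windows that each carry at most roughly the same amount of information, which is the property that gives M2 a stable mapping from compressed bits back to text. `bits_to_tokens` then shows how a window's bits could be grouped into token IDs, with a vocabulary of 2^bits_per_token entries.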

The results show that models trained with “Equal-Info Windows” significantly outperform byte-level baselines. LLMs using this technique showed markedly better perplexity scores and inference speeds. On perplexity benchmarks, they surpassed byte-level baselines by a wide margin, reducing perplexity by up to 30% across various tests. Inference was also noticeably faster, with models demonstrating up to a 40% increase in processing speed compared to conventional training setups. These metrics underscore the effectiveness of the proposed method in improving the efficiency and performance of large language models trained on compressed text.

In conclusion, the research introduced “Equal-Info Windows,” a novel method for training large language models on compressed text, achieving higher efficiency without compromising performance. Segmenting text into windows that compress to a consistent bit length improves model learnability and inference speed. The successful application of the method to the C4 dataset demonstrates its effectiveness and marks a significant advance in model training methodologies. This work improves the scalability and performance of language models and opens new avenues for research in data compression and efficient model training.

The post Google DeepMind and Anthropic Researchers Introduce Equal-Info Windows: A Groundbreaking AI Method for Efficient LLM Training on Compressed Text appeared first on MarkTechPost.
