Linear attention-based models are drawing interest because they process sequences faster than softmax transformers while delivering comparable performance. However, large language models (LLMs), with their large parameter counts and long sequence lengths, put significant strain on contemporary GPU hardware: the memory of a single GPU caps the maximum sequence length a model can handle.

Sequence Parallelism (SP) techniques are often used to divide a long sequence into several sub-sequences and train them on multiple GPUs separately. However, current SP methods underutilize linear attention features, resulting in inefficient parallelism and usability issues.

Researchers from Shanghai AI Laboratory and TapTap present the linear attention sequence parallel (LASP) technique, which optimizes sequence parallelism on linear transformers. It employs point-to-point (P2P) communication for efficient state exchange among GPUs within or across nodes. LASP maximizes the use of right-product kernel tricks in linear attention. Importantly, it doesn’t rely on attention head partitioning, making it adaptable to multi-head, multi-query, and grouped-query attentions.

LASP employs a tiling approach to partition input sequences into sub-sequence chunks distributed across GPUs. It splits the attention computation into intra-chunk and inter-chunk parts to exploit linear attention’s right-product advantage. The intra-chunk part uses conventional attention computation, while the inter-chunk part exploits the kernel trick. The method also includes data distribution, forward pass, and backward pass mechanisms to enhance parallel processing efficiency.
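The intra-chunk/inter-chunk split can be illustrated with a minimal single-process sketch. It assumes plain causal linear attention with no decay, normalization, or feature map, which may differ from the exact formulation in the paper, and it uses plain Python lists to stay dependency-free. The function names and the `kv_state` variable are hypothetical, and the loop over chunks stands in for the GPUs that would each hold one chunk.

```python
def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def transpose(a):
    return [list(col) for col in zip(*a)]

def add(a, b):
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]

def causal_mask(scores):
    """Zero out entries above the diagonal (position i may not see j > i)."""
    return [[s if j <= i else 0.0 for j, s in enumerate(row)]
            for i, row in enumerate(scores)]

def full_causal_linear_attention(q, k, v):
    """Reference left-product form: (Q K^T masked) V, quadratic in sequence length."""
    return matmul(causal_mask(matmul(q, transpose(k))), v)

def chunked_causal_linear_attention(q, k, v, num_chunks):
    """Chunked form: each loop iteration plays the role of one GPU's chunk."""
    n, d = len(q), len(q[0])
    assert n % num_chunks == 0, "sequence length must divide evenly into chunks"
    c = n // num_chunks
    # Running sum of K_j^T V_j over all earlier chunks; its shape is d x d_v,
    # regardless of how long the chunks are.
    kv_state = [[0.0] * len(v[0]) for _ in range(d)]
    out = []
    for i in range(num_chunks):
        lo, hi = i * c, (i + 1) * c
        qi, ki, vi = (x[lo:hi] for x in (q, k, v))
        # Intra-chunk: conventional masked attention inside the chunk.
        intra = matmul(causal_mask(matmul(qi, transpose(ki))), vi)
        # Inter-chunk: right product with the state from earlier chunks.
        inter = matmul(qi, kv_state)
        out.extend(add(intra, inter))
        # Update the state that would be handed to the next chunk's owner.
        kv_state = add(kv_state, matmul(transpose(ki), vi))
    return out, kv_state

if __name__ == "__main__":
    n, d = 8, 4
    q = [[float((3 * i + j) % 5 - 2) for j in range(d)] for i in range(n)]
    k = [[float((2 * i + 3 * j) % 7 - 3) for j in range(d)] for i in range(n)]
    v = [[float((i + 2 * j) % 4 - 1) for j in range(d)] for i in range(n)]
    ref = full_causal_linear_attention(q, k, v)
    out, state = chunked_causal_linear_attention(q, k, v, num_chunks=4)
    diff = max(abs(a - b) for ra, rb in zip(ref, out) for a, b in zip(ra, rb))
    print("max abs difference vs. reference:", diff)
    print("state shape:", len(state), "x", len(state[0]))
```

In this sketch the chunked result matches the full masked computation, and the only value carried from one chunk to the next is the small `kv_state` matrix. In a distributed setting, that matrix is the kind of intermediate state that would be exchanged between GPUs.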

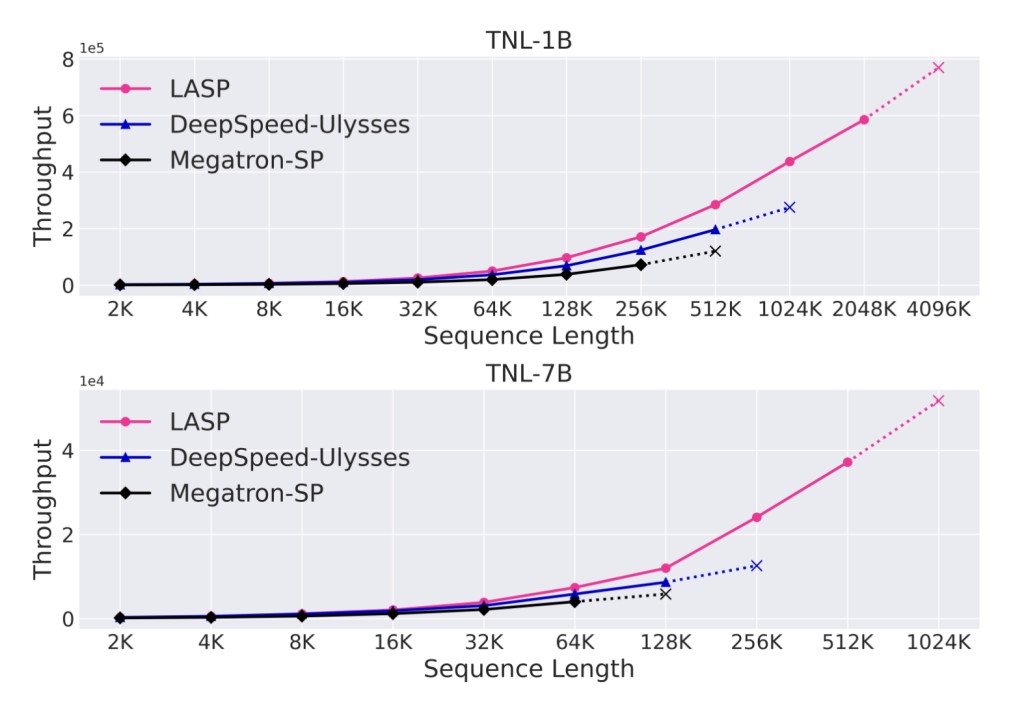

LASP achieves a significant throughput improvement for linear attention through its efficient communication design, surpassing DeepSpeed-Ulysses by 38% and Megatron by 136% in throughput at a 256K sequence length on a 1B model. Moreover, with system optimizations such as kernel fusion and KV state caching, LASP supports longer sequence lengths within the same cluster, reaching 2048K for the 1B model and 512K for the 7B model.

Key contributions of this research are as follows:

A new SP strategy tailored to linear attention: Enabling linear attention-based models to scale for long sequences without being limited by a single GPU.

Sequence length-independent communication overhead: The communication mechanism harnesses the right-product kernel trick of linear attention so that the size of the exchanged linear attention intermediate states does not depend on sequence length.

GPU-friendly implementation: LASP’s execution on GPUs is optimized through meticulous system engineering, including kernel fusion and KV state caching.

Data-parallel compatibility: LASP is compatible with all batch-level DDP methods, such as PyTorch/Legacy DDP, FSDP, and ZeRO-series optimizers.
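The sequence length-independent communication claim in the list above follows from the right-product form of linear attention. As a simplified sketch (assuming plain causal linear attention without decay or normalization, which may differ from the paper's exact formulation; the symbols $C$, $d$, $T$, $M$, and $\mathrm{KV}_t$ are notation introduced here for illustration), let GPU $t$ hold the chunk $Q_t, K_t, V_t \in \mathbb{R}^{C \times d}$ and let $M$ be the $C \times C$ lower-triangular causal mask:

$$
\begin{aligned}
\mathrm{KV}_0 &= 0, \qquad \mathrm{KV}_t = \mathrm{KV}_{t-1} + K_t^{\top} V_t \in \mathbb{R}^{d \times d}, \\
O_t &= \underbrace{\left[ (Q_t K_t^{\top}) \odot M \right] V_t}_{\text{intra-chunk}} + \underbrace{Q_t \, \mathrm{KV}_{t-1}}_{\text{inter-chunk}}, \qquad t = 1, \dots, T.
\end{aligned}
$$

Under these assumptions, the only quantity GPU $t$ needs from its predecessor is $\mathrm{KV}_{t-1}$, a $d \times d$ matrix per head. The size of each P2P message therefore depends on the head dimension $d$, not on the chunk length $C$ or the total sequence length $N = TC$.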

In conclusion, LASP is introduced to overcome the limitations of existing SP methods on linear transformers by leveraging linear attention features to enhance parallelism efficiency and usability. Implementing P2P communication, kernel fusion, and KV state caching reduces communication traffic and improves GPU cluster utilization. Compatibility with batch-level DDP methods ensures practicality for large-scale distributed training. Experiments highlight LASP’s advantages in scalability, speed, memory usage, and convergence performance compared to existing SP methods.

The post Linear Attention Sequence Parallel (LASP): An Efficient Machine Learning Method Tailored to Linear Attention-Based Language Models appeared first on MarkTechPost.
