Designing deep learning architectures is resource-intensive: the design space is vast, prototyping cycles are long, and training and evaluating models at scale is computationally expensive. Architectural improvements typically emerge from an opaque development process guided by heuristics and individual experience rather than systematic procedures. This stems from the combinatorial explosion of possible designs and the lack of reliable prototyping pipelines, despite progress on automated neural architecture search. The high cost and long iteration time of training and testing new designs further underscore the need for principled, agile design pipelines.

Despite the abundance of possible designs, most models use variants of a standard Transformer recipe that alternates between memory-based mixers (self-attention layers) and memoryless mixers (shallow FFNs). This specific set of computational primitives traces back to the original Transformer design and is known to improve quality. Empirical evidence suggests that these primitives excel at specific sub-tasks within sequence modeling, such as in-context recall versus factual recall.

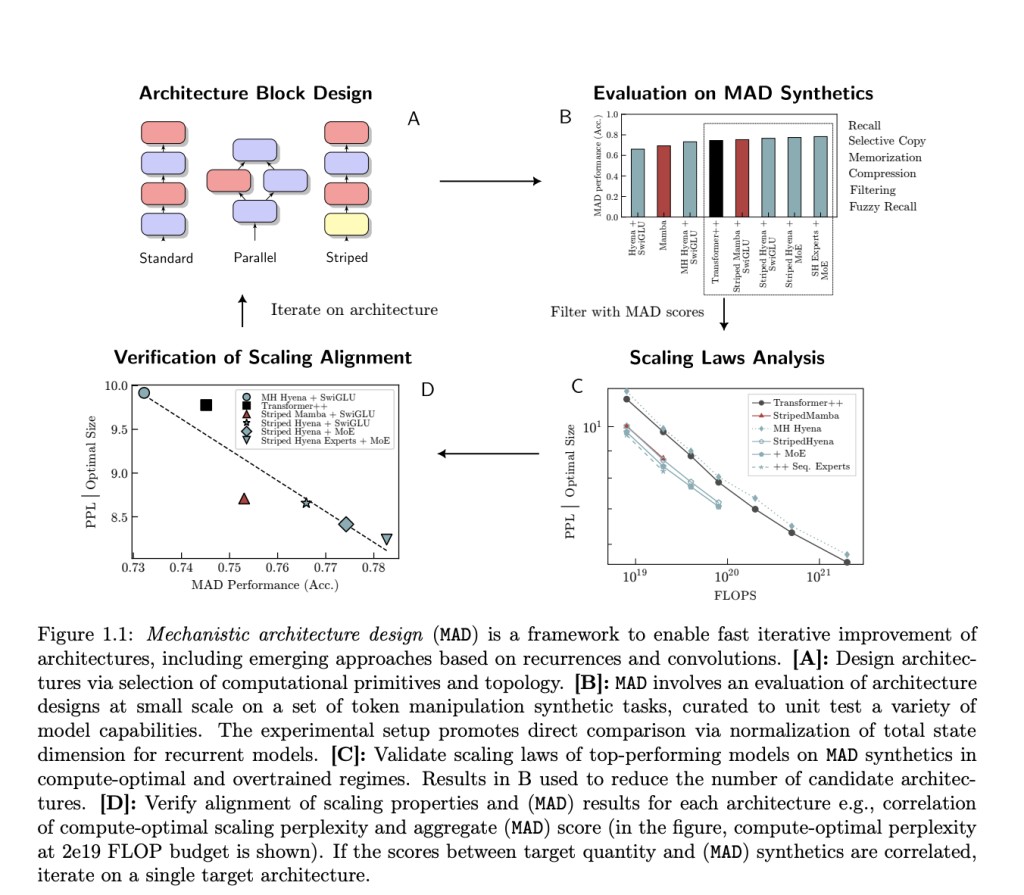

Researchers from Together AI, Stanford University, Hessian AI, RIKEN, Arc Institute, CZ Biohub, and Liquid AI investigate architecture optimization at several levels, from scaling laws to synthetic tasks that probe specific model capabilities. They introduce mechanistic architecture design (MAD), a methodology for rapid architecture prototyping and testing. MAD comprises a set of synthetic tasks, such as compression, memorization, and recall, that serve as isolated unit tests for key architecture capabilities and require only minutes of training time. The MAD tasks are inspired by work on understanding sequence models such as Transformers through sequence-manipulation problems like in-context learning and recall.
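To make the idea of a synthetic recall task concrete, here is a minimal sketch of one way to generate an in-context recall example. It is not the MAD implementation: the function name `make_recall_example`, the token ranges, and the sequence layout are all assumptions chosen for illustration.

```python
import random


def make_recall_example(num_pairs=4, vocab_size=16, seed=0):
    """Build one toy in-context recall example (hypothetical format).

    The sequence lists key-value pairs and ends with a query key; the
    target is the value that was paired with that key earlier on.
    """
    rng = random.Random(seed)
    # Keys are unique and drawn from [0, vocab_size).
    keys = rng.sample(range(vocab_size), num_pairs)
    # Values come from a disjoint range so they never collide with keys.
    values = [rng.randrange(vocab_size, 2 * vocab_size) for _ in keys]
    # Flatten the pairs into one sequence: k1 v1 k2 v2 ...
    sequence = [tok for pair in zip(keys, values) for tok in pair]
    # Query a key that already appeared in the context.
    idx = rng.randrange(num_pairs)
    return sequence + [keys[idx]], values[idx]


tokens, target = make_recall_example()
```

A model solves this toy task only if it can look up the queried key in its context and emit the paired value, which is the kind of isolated capability a unit-test-style benchmark can check in minutes.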

Using MAD, the team evaluates designs built from both well-known and novel computational primitives, including gated convolutions, gated input-varying linear recurrences, and additional operators such as mixtures of experts (MoEs). MAD serves as a filter for promising architecture candidates. This has led to the discovery and validation of several design optimization strategies, such as striping: creating hybrid architectures by sequentially interleaving blocks made of different computational primitives with a predetermined connection topology.
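Striping can be pictured as repeating a fixed pattern of block types down the depth of the network. The sketch below only builds the layer layout as a list of labels; the helper `stripe` and the block names are hypothetical and do not come from the paper's code.

```python
def stripe(pattern, depth):
    """Repeat a block pattern until the stack has `depth` blocks.

    `pattern` is a list of block-type labels (illustrative names only).
    """
    if not pattern:
        raise ValueError("pattern must contain at least one block type")
    if depth < 0:
        raise ValueError("depth must be non-negative")
    # Cycle through the pattern in order, one block per layer.
    return [pattern[i % len(pattern)] for i in range(depth)]


# A hypothetical hybrid that alternates two primitives across six layers.
layout = stripe(["gated_conv", "attention"], depth=6)
```

In a real model each label would map to a layer constructor; keeping the layout as plain data makes it cheap to enumerate many candidate hybrids before training any of them.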

To investigate the link between MAD synthetics and real-world scaling, the researchers train 500 language models with diverse architectures, ranging from 70 million to 7 billion parameters, in what they describe as the broadest scaling law analysis of emerging architectures. Their protocol builds on scaling laws for compute-optimal LSTMs and Transformers. Overall, hybrid designs scale better than their non-hybrid counterparts, reaching lower pretraining loss across a range of FLOP budgets at the compute-optimal frontier. The work also shows that the novel architectures are more robust to extended pretraining runs beyond the compute-optimal frontier.
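A scaling analysis of this kind typically fits a curve relating loss to compute. As a simplified sketch, the code below fits a pure power law, loss = a · compute^(−b), by least squares in log-log space. The functional form is an assumption (published scaling laws often add an irreducible-loss term), and the data points are synthetic, generated from a known power law purely to exercise the fit.

```python
import math


def fit_power_law(compute, loss):
    """Least-squares fit of loss = a * compute**(-b) in log-log space."""
    xs = [math.log(c) for c in compute]
    ys = [math.log(v) for v in loss]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Ordinary least squares for the line log(loss) = intercept + slope * log(compute).
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return math.exp(intercept), -slope


# Synthetic, made-up points generated from loss = 5.0 * C**(-0.05).
budgets = [1e18, 1e19, 1e20, 1e21]
losses = [5.0 * c ** -0.05 for c in budgets]
a, b = fit_power_law(budgets, losses)
```

Comparing the fitted exponent `b` across architectures is one simple way to ask which design converts extra compute into lower loss more efficiently.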

State size, analogous to the kv-cache in standard Transformers, is an important factor in MAD and in the scaling analysis. It determines inference efficiency and memory cost and likely has a direct effect on recall capabilities. The team presents a state-optimal scaling methodology to estimate how perplexity scales with the state dimension of various model designs. They find hybrid designs that strike a good balance between perplexity, state dimension, and compute requirements.
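The contrast in state size can be illustrated with simple arithmetic. In a standard attention layer the kv-cache stores one key and one value vector per head for every token, so it grows linearly with sequence length, whereas a recurrent-style layer keeps a fixed-size state. The helpers below are an illustrative sketch; the function names and the example dimensions are assumptions, not figures from the paper.

```python
def kv_cache_elements(num_layers, num_kv_heads, head_dim, seq_len):
    """Number of cached elements for attention: keys plus values per token."""
    return 2 * num_layers * num_kv_heads * head_dim * seq_len


def fixed_state_elements(num_layers, state_per_layer):
    """Number of state elements for layers whose state does not grow with length."""
    return num_layers * state_per_layer


# Hypothetical dimensions, chosen only to show the scaling behaviour.
short_ctx = kv_cache_elements(num_layers=2, num_kv_heads=4, head_dim=8, seq_len=16)
long_ctx = kv_cache_elements(num_layers=2, num_kv_heads=4, head_dim=8, seq_len=32)
recurrent = fixed_state_elements(num_layers=2, state_per_layer=128)
```

Doubling the context doubles the attention cache while the fixed state is unchanged, which is why state size is a natural axis for comparing hybrid designs.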

By combining MAD with newly developed computational primitives, the team creates state-of-the-art hybrid architectures that achieve 20% lower perplexity at the same compute budget than the top Transformer, convolutional, and recurrent baselines (Transformer++, Hyena, Mamba).

These findings have significant implications for machine learning and artificial intelligence. By showing that a well-chosen set of MAD synthetic tasks can predict scaling law performance, the team opens the door to faster, automated architecture design. The correlation is strongest for models of the same architectural class, where MAD accuracy closely tracks compute-optimal perplexity at scale.

Check out the Paper and Github. All credit for this research goes to the researchers of this project.


The post This Machine Learning Research Introduces Mechanistic Architecture Design (Mad) Pipeline: Encompassing Small-Scale Capability Unit Tests Predictive of Scaling Laws appeared first on MarkTechPost.
