Large language models (LLMs) have earned considerable acclaim for handling the complexities of human language. Yet in mathematical reasoning, a skill that intertwines logic with numerical understanding, these models often falter, revealing a gap in their ability to mimic human cognitive processes. Closing this gap has become a pressing research goal: improving the mathematical understanding of LLMs without diluting their linguistic prowess.

Existing research includes Chain of Thought prompting, extended by frameworks like Tree of Thoughts and Graph of Thoughts, which guide LLMs through structured reasoning. Supervised Fine-tuning (SFT) and Reinforcement Learning (RL) methods, as seen in WizardMath and in work on high-quality supervisory data, aim to improve capability directly. Strategies like Self-Consistency and tools like MATH-SHEPHERD further enhance problem-solving, while MAmmoTH and ToRA use code insertion to overcome computational limits. Together, these show the diversity of approaches to augmenting LLMs’ mathematical reasoning.
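To make one of these strategies concrete, here is a minimal sketch of the voting step behind Self-Consistency: sample several reasoning paths and keep the most frequent final answer. The function name `self_consistency` and the `sample_answer` callable are hypothetical, introduced only for illustration; a real setup would call an LLM with a nonzero sampling temperature and parse the final answer from each completion.

```python
from collections import Counter
from typing import Callable, Optional


def self_consistency(sample_answer: Callable[[], Optional[str]],
                     n_samples: int) -> Optional[str]:
    """Majority vote over the final answers of several sampled reasoning paths.

    `sample_answer` is a hypothetical callable that runs the model once and
    returns the parsed final answer, or None if no answer could be parsed.
    """
    answers = []
    for _ in range(n_samples):
        answer = sample_answer()
        if answer is not None:  # skip samples with no parseable answer
            answers.append(answer)
    if not answers:
        return None
    # most_common(1) returns the answer with the highest vote count
    return Counter(answers).most_common(1)[0][0]


# Usage with canned answers standing in for sampled model outputs.
canned = iter(["84", "74", "84", "84", "72"])
voted = self_consistency(lambda: next(canned), n_samples=5)
```

The vote is taken over final answers only, so differently worded reasoning paths that reach the same result still count together.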

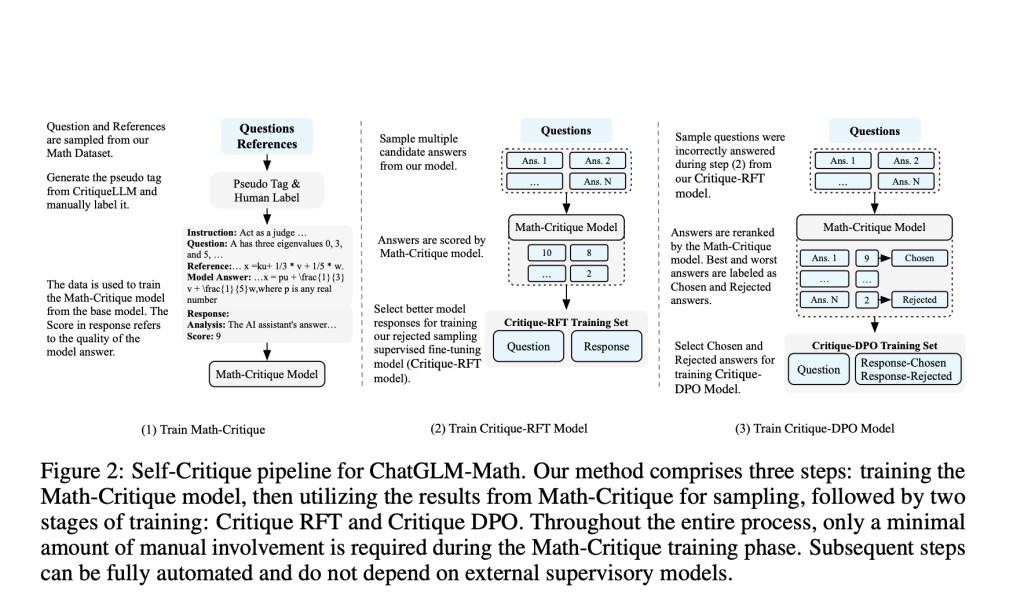

Researchers from Zhipu.AI and Tsinghua University have introduced the “Self-Critique” pipeline, which distinguishes itself by using the model’s own output for feedback-driven enhancement. Unlike traditional methods that focus on external feedback, this approach internalizes the improvement mechanism, enabling simultaneous advances in mathematical reasoning and language processing.

The methodology unfolds in two phases. First, a Math-Critique model scores the LLM’s mathematical outputs. These scores drive the Rejective Fine-tuning (RFT) phase, in which only responses that meet a set criterion are retained for further fine-tuning. Next comes the Direct Preference Optimization (DPO) stage, which sharpens the LLM’s problem-solving by learning from pairs of correct and incorrect answers. The pipeline is tested on the ChatGLM3-32B model, using both established academic datasets and the specially curated MATH USER EVAL dataset to benchmark the model’s mathematical reasoning and language processing capabilities.
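The data flow of the two phases can be sketched roughly as follows. This is an illustration under stated assumptions, not the authors’ implementation: the score scale, the thresholds, the pairing rule, and every name here (`Sample`, `toy_critique`, `rejection_filter`, `build_preference_pairs`, `dpo_loss`) are hypothetical. The loss function is the standard per-pair DPO objective, which takes summed log-probabilities from the policy and from a frozen reference model.

```python
import math
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class Sample:
    question: str
    answer: str
    score: float  # critique score; the 1-10 scale is an assumption


def toy_critique(question: str, answer: str) -> float:
    """Hypothetical stand-in for a Math-Critique model (a real one is an LLM)."""
    reference = {"What is 12 * 7?": "84"}
    return 9.0 if answer.strip().endswith(reference[question]) else 2.0


def score_samples(question: str, answers: List[str],
                  critique: Callable[[str, str], float]) -> List[Sample]:
    return [Sample(question, a, critique(question, a)) for a in answers]


def rejection_filter(samples: List[Sample], threshold: float) -> List[Sample]:
    """RFT-style step: keep only answers whose score meets the threshold."""
    return [s for s in samples if s.score >= threshold]


def build_preference_pairs(samples: List[Sample],
                           min_gap: float) -> List[Tuple[Sample, Sample]]:
    """DPO-style step: per question, pair the best and worst scored answers
    when their score gap is at least `min_gap` (an assumed pairing rule)."""
    by_question: Dict[str, List[Sample]] = {}
    for s in samples:
        by_question.setdefault(s.question, []).append(s)
    pairs = []
    for group in by_question.values():
        best = max(group, key=lambda s: s.score)
        worst = min(group, key=lambda s: s.score)
        if best.score - worst.score >= min_gap:
            pairs.append((best, worst))  # (chosen, rejected)
    return pairs


def dpo_loss(policy_chosen: float, policy_rejected: float,
             ref_chosen: float, ref_rejected: float, beta: float = 0.1) -> float:
    """Standard DPO loss for one pair: -log(sigmoid(margin)), computed stably."""
    margin = beta * ((policy_chosen - ref_chosen)
                     - (policy_rejected - ref_rejected))
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


question = "What is 12 * 7?"
samples = score_samples(
    question, ["12 * 7 = 84", "12 * 7 = 74", "The product is 84"], toy_critique)
rft_data = rejection_filter(samples, threshold=7.0)
dpo_pairs = build_preference_pairs(samples, min_gap=4.0)
```

In this toy run the filter keeps the two correct answers for fine-tuning, and the pairing step yields one (chosen, rejected) pair. The loss shrinks as the policy raises the chosen answer’s log-probability relative to the reference model more than it raises the rejected one’s.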

Applied to the ChatGLM3-32B model, the Self-Critique pipeline produced significant quantitative improvements in mathematical problem-solving. On the MATH USER EVAL dataset, the enhanced model achieved a 17.5% increase in accuracy over its baseline version. Compared with other leading models such as InternLM2-Chat-20B and DeepSeek-Chat-67B, which saw improvements of 5.1% and 1.2% respectively, ChatGLM3-32B’s gain stood out markedly. The model’s language capabilities improved in parallel, with a 6.8% gain in linguistic task accuracy, confirming that the pipeline balances mathematical and language processing strengths.

In summary, this research presents the “Self-Critique” pipeline, a practical tool that significantly boosts LLMs’ mathematical problem-solving capabilities while maintaining linguistic proficiency. By using the model’s own outputs for feedback through the Math-Critique model, followed by stages of Rejective Fine-tuning and Direct Preference Optimization, the ChatGLM3-32B model achieved substantial improvements in mathematical accuracy and language processing. This methodological innovation is a significant stride towards more adaptable and intelligent AI systems and points to a promising direction for future AI research and applications.

The post Researchers from Zhipu AI and Tsinghua University Introduced the ‘Self-Critique’ pipeline: Revolutionizing Mathematical Problem Solving in Large Language Models appeared first on MarkTechPost.
