The evolution of large language models (LLMs) marks a transition toward systems capable of understanding and expressing languages beyond English, acknowledging the global diversity of linguistic and cultural landscapes. Historically, LLM development has been predominantly English-centric, primarily reflecting the norms and values of English-speaking societies, particularly those in North America. This focus has inadvertently limited these models’ effectiveness across the world’s many languages, each with unique linguistic attributes, cultural nuances, and societal contexts. Korean, with its distinctive linguistic structure and deep cultural context, has often posed a challenge for conventional English-based LLMs, prompting a shift toward more inclusive and culturally aware AI research and development.

Existing research includes models such as OpenAI’s GPT-3, renowned for its English text generation, and multilingual frameworks such as mT5 and XLM-R, which extend LLM capabilities across languages. Monolingual models such as BERTje and CamemBERT target Dutch and French, respectively, highlighting the importance of language-specific approaches. Codex further explores integrating code generation into LLMs. Korean-focused models such as KR-BERT and KoGPT likewise reflect efforts to develop models attuned to specific linguistic and cultural contexts, setting the stage for more advanced, culture-sensitive AI models.

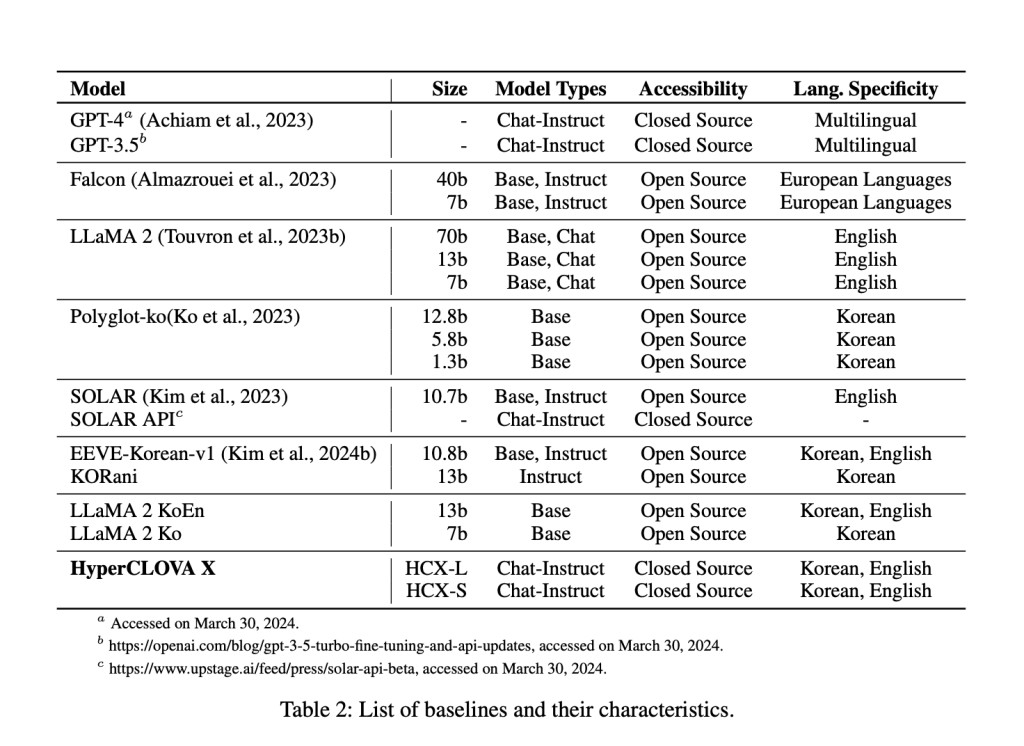

Researchers from NAVER Cloud’s HyperCLOVA X Team introduce HyperCLOVA X, a model that focuses on the Korean language and culture while maintaining proficiency in English and coding. Its key innovation is a balanced mix of Korean, English, and programming-code training data, with the model further refined through instruction tuning on high-quality, human-annotated datasets under stringent safety guidelines.
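To make the idea of a "balanced mix" concrete, here is a minimal sketch of weighted data-mixture sampling, a common way to combine corpora during pretraining. It is not NAVER’s pipeline: the article does not publish the actual proportions, so the equal one-third weights in `MIX_WEIGHTS`, the function name `mixture_stream`, and the toy documents are all hypothetical.

```python
import itertools
import random
from collections import Counter
from typing import Dict, Iterator, List, Tuple

# Hypothetical weights for illustration only: the article describes the mix as
# "balanced" but does not state the real Korean/English/code proportions.
MIX_WEIGHTS: Dict[str, float] = {"korean": 1 / 3, "english": 1 / 3, "code": 1 / 3}


def mixture_stream(
    sources: Dict[str, List[str]],
    weights: Dict[str, float],
    seed: int = 0,
) -> Iterator[Tuple[str, str]]:
    """Yield (source_name, document) pairs forever.

    Each draw picks a source with probability proportional to its weight,
    then takes that source's next document. Sources are cycled, so a small
    corpus is revisited (upsampled) rather than exhausted.
    """
    rng = random.Random(seed)
    names = list(sources)
    probs = [weights[name] for name in names]
    cursors = {name: itertools.cycle(sources[name]) for name in names}
    while True:
        name = rng.choices(names, weights=probs, k=1)[0]
        yield name, next(cursors[name])


if __name__ == "__main__":
    toy_sources = {
        "korean": ["안녕하세요", "한국어 문서"],
        "english": ["Hello world", "An English document"],
        "code": ["print('hi')", "def f(): return 1"],
    }
    stream = mixture_stream(toy_sources, MIX_WEIGHTS, seed=42)
    counts = Counter(name for name, _ in itertools.islice(stream, 9000))
    print(counts)  # roughly 3000 draws per source
```

Sampling by weight rather than by raw corpus size is what lets a smaller corpus (often Korean or code, relative to English web text) hold its intended share of training batches.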

HyperCLOVA X’s methodology builds on the transformer architecture with enhancements such as rotary position embeddings and grouped-query attention, which are intended to extend context understanding and improve training stability. The model underwent Supervised Fine-Tuning (SFT) on human-annotated demonstration datasets, followed by Reinforcement Learning from Human Feedback (RLHF) to align its outputs with human values. Training used a balanced mix of Korean, English, and programming code data, aiming for comprehensive multilingual proficiency. Together, these architectural modifications, alignment techniques, and diverse training data are designed to make HyperCLOVA X effective at understanding and generating contextually rich, culturally nuanced content across languages, particularly Korean.
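The two architectural enhancements named above can be illustrated together, since rotary embeddings are typically applied to the queries and keys inside the attention layer. The following is a generic, pure-Python sketch of causal grouped-query attention with rotary position embeddings, not HyperCLOVA X’s implementation: the article gives no head counts, head dimension, or RoPE base, so the RoPE base of 10000, the 4-query-head/2-key-value-head layout, and the toy inputs are assumptions, and the input/output projection matrices are omitted.

```python
import math
from typing import List

Vector = List[float]


def apply_rope(vec: Vector, pos: int, base: float = 10000.0) -> Vector:
    """Rotary position embedding: rotate each pair (x[2j], x[2j+1]) by the
    angle pos * base**(-2j/d), so q.k scores depend on relative position.
    The base of 10000 is an assumed, commonly used default."""
    d = len(vec)
    if d % 2:
        raise ValueError("RoPE needs an even head dimension")
    out: Vector = []
    for i in range(0, d, 2):  # i = 2j
        theta = pos * base ** (-i / d)
        c, s = math.cos(theta), math.sin(theta)
        x0, x1 = vec[i], vec[i + 1]
        out.extend([x0 * c - x1 * s, x0 * s + x1 * c])
    return out


def softmax(xs: Vector) -> Vector:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def grouped_query_attention(
    q_heads: List[List[Vector]],  # [n_q_heads][seq_len][head_dim], already projected
    k_heads: List[List[Vector]],  # [n_kv_heads][seq_len][head_dim]
    v_heads: List[List[Vector]],  # [n_kv_heads][seq_len][v_dim]
    causal: bool = True,
) -> List[List[Vector]]:
    """Grouped-query attention: each group of n_q // n_kv query heads
    shares one key/value head. RoPE is applied to queries and keys."""
    n_q, n_kv = len(q_heads), len(k_heads)
    if n_q % n_kv:
        raise ValueError("query heads must be a multiple of key/value heads")
    group_size = n_q // n_kv
    # Rotate keys once per shared K/V head (position = index in the sequence).
    k_rot = [[apply_rope(k, pos) for pos, k in enumerate(head)] for head in k_heads]
    outputs = []
    for h, q_head in enumerate(q_heads):
        kv_idx = h // group_size  # which shared K/V head this query head reads
        keys, values = k_rot[kv_idx], v_heads[kv_idx]
        scale = 1.0 / math.sqrt(len(q_head[0]))
        head_out = []
        for t, q in enumerate(q_head):
            q_rot = apply_rope(q, t)
            visible = t + 1 if causal else len(keys)  # causal mask: no future keys
            weights = softmax([dot(q_rot, keys[s]) * scale for s in range(visible)])
            v_dim = len(values[0])
            head_out.append(
                [sum(w * values[s][j] for s, w in enumerate(weights)) for j in range(v_dim)]
            )
        outputs.append(head_out)
    return outputs


if __name__ == "__main__":
    seq_len, head_dim = 3, 4
    # Hypothetical layout: 4 query heads share 2 K/V heads (group size 2).
    q = [[[0.1 * (h + t + j) for j in range(head_dim)] for t in range(seq_len)] for h in range(4)]
    k = [[[0.2 * (h - t + j) for j in range(head_dim)] for t in range(seq_len)] for h in range(2)]
    v = [[[float(10 * h + t)] for t in range(seq_len)] for h in range(2)]
    out = grouped_query_attention(q, k, v)
    print(len(out), len(out[0]), len(out[0][0]))  # 4 3 1
```

The design trade-off in grouped-query attention is memory: only `n_kv_heads` key/value heads need to be cached during generation (half as many as query heads in this toy layout), while every query head keeps its own projection. Rotary embeddings encode position by rotation rather than by adding a learned vector, so the attention score between two tokens depends on their offset, not their absolute positions.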

HyperCLOVA X achieved a remarkable 72.07% accuracy on comprehensive Korean benchmarks, surpassing its predecessors and setting a new standard for Korean language understanding. It also closely matched top English-centric LLMs, with 58.25% accuracy on English reasoning tasks. In coding challenges, it achieved a 56.83% success rate, showing competence in technical coding assessments as well as linguistic tasks. These figures highlight HyperCLOVA X’s success in combining multilingual comprehension with strong task-specific performance, positioning it as a frontrunner in culturally nuanced AI technologies.

In conclusion, the research introduces HyperCLOVA X, a language model from NAVER Cloud distinguished by its proficiency in Korean and English and developed through an enhanced transformer architecture and alignment learning. Its strong results on language-understanding and coding benchmarks represent a significant advance in AI’s linguistic and cultural adaptability. Beyond these capabilities, the team placed significant emphasis on safety, working to keep the model’s outputs aligned with ethical guidelines and cultural sensitivities.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post NAVER Cloud Researchers Introduce HyperCLOVA X: A Multilingual Language Model Tailored to Korean Language and Culture appeared first on MarkTechPost.
