Robust benchmarks give researchers a rigorous framework for evaluating new methods across a diverse array of datasets. They help advance the state of the art and ensure fair, meaningful comparisons among competing methods. ImageNet, for example, set the standard in computer vision by offering a large dataset for rigorous evaluation, catalyzing progress in the field. However, existing benchmarks for Time Series Forecasting (TSF) fall short in two respects: they do not cover enough application domains, and they do not always compare methods fairly.

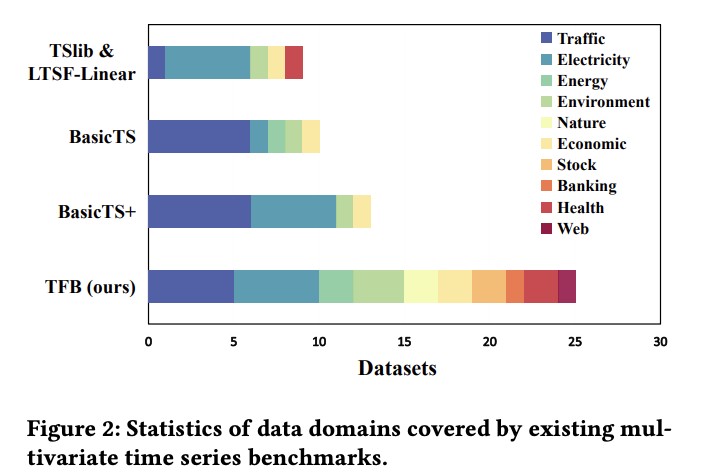

To address these limitations, a team of researchers from East China Normal University, Huawei Cloud Computing Technologies, and Aalborg University introduces the Time series Forecasting Benchmark (TFB), designed to make the empirical evaluation and comparison of TSF methods fairer. TFB comprises a curated collection of complex, realistic datasets organized according to a taxonomy to ensure diversity across multiple domains and settings. The collection aims to give researchers a robust and extensive evaluation platform, addressing the dataset bias and limited coverage prevalent in existing benchmarks.

TFB has two key characteristics that support fair and rigorous evaluation in TSF. First, it offers broad coverage of existing methods, spanning statistical learning, machine learning, and deep learning approaches, together with a range of evaluation strategies and metrics. This breadth enables comprehensive evaluation across methodologies and evaluation settings. Second, TFB features a flexible and scalable pipeline that makes method comparisons fairer by applying a unified evaluation strategy to standardized datasets, which reduces bias and yields more accurate performance assessments.
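To make the idea of a unified evaluation strategy concrete, here is a minimal sketch in which every method is scored on the same rolling forecast windows with the same metrics. It is not TFB's code or API: the function names (`rolling_evaluate`, `naive_forecast`, `linear_ar_forecast`), the toy forecasters, and the window parameters are all assumptions chosen for illustration.

```python
import numpy as np

# Hypothetical toy forecasters: each takes a 1-D history and a horizon
# and returns `horizon` predicted values. None of this is TFB's API.
def naive_forecast(history, horizon):
    """Repeat the last observed value for every step of the horizon."""
    return np.full(horizon, history[-1], dtype=float)

def linear_ar_forecast(history, horizon, lags=4):
    """Fit a linear autoregression by least squares, then forecast recursively."""
    X = np.array([history[i:i + lags] for i in range(len(history) - lags)])
    y = history[lags:]
    X1 = np.column_stack([X, np.ones(len(X))])  # add an intercept column
    coef, *_ = np.linalg.lstsq(X1, y, rcond=None)
    window = list(history[-lags:])
    preds = []
    for _ in range(horizon):
        nxt = float(np.dot(coef[:-1], window) + coef[-1])
        preds.append(nxt)
        window = window[1:] + [nxt]  # slide the window over the prediction
    return np.array(preds)

def mae(y_true, y_pred):
    return float(np.mean(np.abs(y_true - y_pred)))

def mse(y_true, y_pred):
    return float(np.mean((y_true - y_pred) ** 2))

def rolling_evaluate(series, methods, horizon, n_windows, stride=1):
    """Score every method on identical rolling windows with identical metrics."""
    series = np.asarray(series, dtype=float)
    first_origin = len(series) - horizon - (n_windows - 1) * stride
    if first_origin <= 0:
        raise ValueError("series too short for the requested windows")
    scores = {name: {"mae": [], "mse": []} for name in methods}
    for w in range(n_windows):
        origin = first_origin + w * stride
        history = series[:origin]
        target = series[origin:origin + horizon]
        for name, method in methods.items():
            pred = method(history, horizon)
            scores[name]["mae"].append(mae(target, pred))
            scores[name]["mse"].append(mse(target, pred))
    # Average each metric over all windows.
    return {
        name: {metric: float(np.mean(vals)) for metric, vals in per_metric.items()}
        for name, per_metric in scores.items()
    }

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    t = np.arange(300)
    demo = np.sin(2 * np.pi * t / 24) + 0.1 * rng.standard_normal(len(t))
    methods = {"naive": naive_forecast, "linear_ar": linear_ar_forecast}
    for name, result in rolling_evaluate(demo, methods, horizon=12, n_windows=10).items():
        print(name, result)
```

Because the split logic and the metrics live in one place, adding a method means adding one entry to the `methods` dictionary, and no method can benefit from a more favorable split or metric than the others.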

Through experimentation conducted using TFB, researchers have gained practical insights into the performance of different TSF methods across diverse datasets and characteristics. These insights include the comparative performance of statistical methods like VAR and linear regression against state-of-the-art methods, as well as the efficacy of Transformer-based approaches on datasets exhibiting marked seasonality and nonlinear patterns. Furthermore, TFB underscores the significance of considering dependencies between channels, particularly on multivariate time series datasets with strong correlations.
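As a rough illustration of what "strong correlations" between channels can mean in practice, the sketch below computes the mean absolute pairwise Pearson correlation across the channels of a multivariate series. This is an assumed, simplified indicator and not necessarily how TFB characterizes datasets; the function name `mean_abs_channel_correlation` is hypothetical.

```python
import numpy as np

def mean_abs_channel_correlation(data):
    """Mean absolute Pearson correlation over all distinct channel pairs.

    `data` has shape (time_steps, channels). Values near 1 suggest strongly
    related channels; values near 0 suggest largely unrelated channels.
    """
    data = np.asarray(data, dtype=float)
    if data.ndim != 2 or data.shape[1] < 2:
        raise ValueError("expected a 2-D array with at least two channels")
    corr = np.corrcoef(data, rowvar=False)       # channels are columns
    upper = np.triu_indices(data.shape[1], k=1)  # each pair counted once
    return float(np.mean(np.abs(corr[upper])))

if __name__ == "__main__":
    t = np.arange(400) * 2 * np.pi / 100
    related = np.column_stack([np.sin(t), -np.sin(t)])
    unrelated = np.column_stack([np.sin(t), np.cos(t)])
    print("related channels:  ", mean_abs_channel_correlation(related))
    print("unrelated channels:", mean_abs_channel_correlation(unrelated))
```

A high score on a dataset is only a hint that a method modeling cross-channel dependencies may be worth trying. Pearson correlation captures linear, same-time relationships and misses lagged or nonlinear ones.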

In summary, the introduction of TFB represents a significant advancement in the field of TSF, offering researchers a comprehensive and standardized platform for evaluating forecasting methods. By addressing issues of fairness, dataset diversity, and method coverage, TFB aims to propel research in TSF forward, fostering innovation and enabling more robust comparisons among competing methodologies.

The post TFB: An Open-Source Machine Learning Library Designed for Time Series Researchers appeared first on MarkTechPost.