The concept of cascades has emerged as a critical mechanism in machine learning, particularly for large language models (LLMs). A cascade lets a smaller, local model seek assistance from a significantly larger, remote model when it struggles to label user data accurately. Such systems have gained prominence because they maintain high task performance while substantially lowering inference costs. However, a significant concern arises when these systems handle sensitive data, as the interaction between the local and remote models could lead to privacy breaches.
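
To make the cascade idea concrete, here is a minimal Python sketch of a two-stage cascade. It assumes the local model reports a confidence score and defers to the remote model whenever that score falls below a fixed threshold. The threshold value, the `Cascade` class, and the toy models are hypothetical illustrations, not the researchers' implementation.

```python
from dataclasses import dataclass
from typing import Callable, Tuple

# Hypothetical model interface: returns (label, confidence in [0, 1]).
Model = Callable[[str], Tuple[str, float]]


@dataclass
class Cascade:
    local: Model
    remote: Model
    threshold: float = 0.8  # assumed deferral threshold, chosen for illustration

    def predict(self, text: str) -> Tuple[str, str]:
        """Return (label, source), where source is "local" or "remote"."""
        label, confidence = self.local(text)
        if confidence >= self.threshold:
            return label, "local"
        # Low confidence: defer to the larger remote model.
        # The raw text is forwarded unchanged, which is the privacy risk.
        remote_label, _ = self.remote(text)
        return remote_label, "remote"


# Toy stand-ins for real models, for demonstration only.
def toy_local(text: str) -> Tuple[str, float]:
    return ("greeting", 0.95) if "hello" in text.lower() else ("unknown", 0.3)


def toy_remote(text: str) -> Tuple[str, float]:
    return ("question", 0.99) if text.endswith("?") else ("statement", 0.99)


cascade = Cascade(local=toy_local, remote=toy_remote)
print(cascade.predict("Hello there"))       # ('greeting', 'local')
print(cascade.predict("What time is it?"))  # ('question', 'remote')
```

Notice that the deferral branch sends the user's raw text to the remote model as-is, which is exactly the kind of exposure behind the privacy concerns that follow.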

Addressing privacy in cascade systems means preventing sensitive data from being shared with, or exposed to, the remote model. Traditional cascade systems lack mechanisms to protect privacy, raising alarms that sensitive data could be inadvertently forwarded to remote models or incorporated into their training datasets. Such exposure compromises user privacy and undermines trust in deploying machine learning models for sensitive applications.

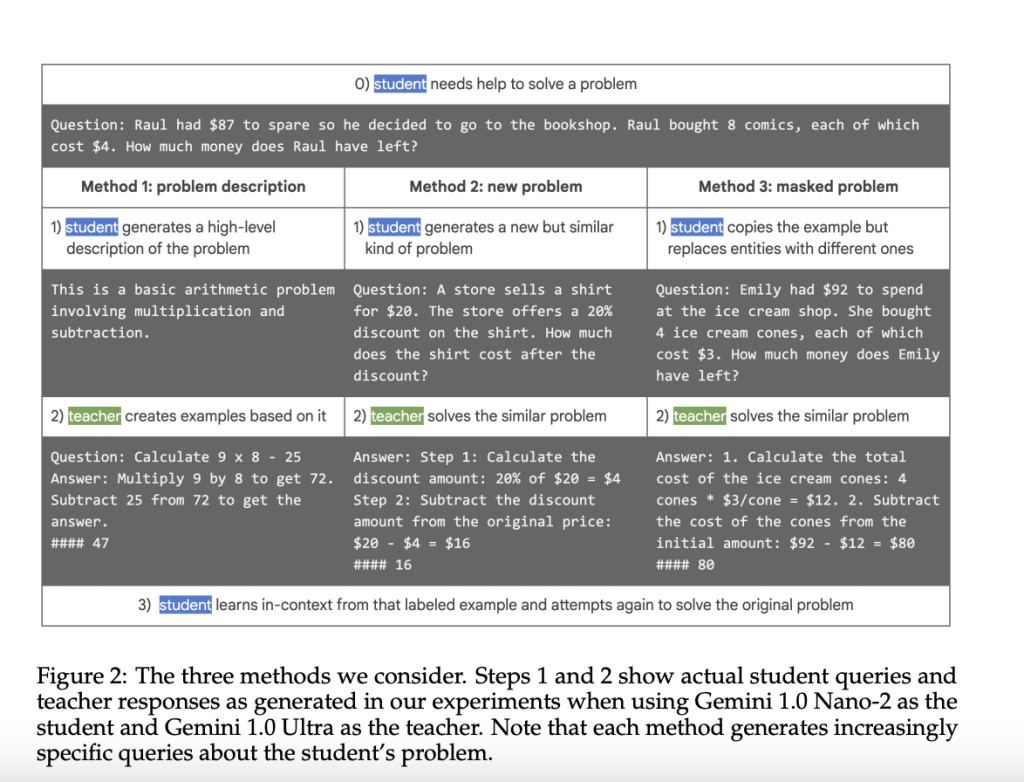

Researchers from Google Research have introduced a novel methodology that brings privacy-preserving techniques into cascade systems. By building on the social learning paradigm, in which models learn collaboratively through natural language exchanges, the approach lets the local model query the remote model without exposing sensitive information. The key innovation is combining data minimization and anonymization techniques with LLMs' in-context learning (ICL) capabilities to create a privacy-conscious bridge between the local and remote models.
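
As a rough illustration of the anonymization idea, the sketch below swaps known sensitive entities for numbered placeholders before anything leaves the device, and keeps the placeholder mapping on the local side. It assumes the list of sensitive entities is already known (in practice it might come from a named-entity recognizer); `anonymize` and `deanonymize` are hypothetical helpers, not the paper's code.

```python
from typing import Dict, List, Tuple


def anonymize(text: str, entities: List[str]) -> Tuple[str, Dict[str, str]]:
    """Replace each known sensitive entity with a numbered placeholder.

    Returns the redacted text plus a placeholder -> entity mapping that
    is assumed to stay on the local side.
    """
    mapping: Dict[str, str] = {}
    # Longest entities first, so "Alice Smith" is replaced before "Alice";
    # ties are broken alphabetically to keep the numbering deterministic.
    for entity in sorted(set(entities), key=lambda e: (-len(e), e)):
        if entity and entity in text:
            placeholder = f"<ENTITY_{len(mapping) + 1}>"
            text = text.replace(entity, placeholder)
            mapping[placeholder] = entity
    return text, mapping


def deanonymize(text: str, mapping: Dict[str, str]) -> str:
    """Restore the original entities in a response, locally."""
    for placeholder, entity in mapping.items():
        text = text.replace(placeholder, entity)
    return text


original = "Alice Smith owes Bob 40 dollars."
redacted, mapping = anonymize(original, ["Bob", "Alice Smith"])
print(redacted)  # <ENTITY_1> owes <ENTITY_2> 40 dollars.
```

Because this relies on plain substring replacement, it would also rewrite an entity embedded in a longer word (for example "Bob" inside "Bobby"); a real system would need more careful matching.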

At its core, the method balances two goals: revealing enough information to obtain useful assistance from the remote model, while keeping the sensitive details private. Through gradient-free learning in natural language, the local model describes its problem to the remote model without sharing the private data itself. This preserves privacy while still letting the local model benefit from the remote model's capabilities.

The researchers' experiments demonstrate the efficacy of their approach across multiple datasets. One notable finding is that privacy-preserving cascades improve task performance over non-cascade baselines. In one experiment, the method in which the local model generates new, unlabeled examples that the remote model then labels achieved task success rates of 55.9% on math problem-solving and 94.6% on intent recognition, normalized by the teacher's (remote model's) performance. These results underscore the method's potential to maintain high task performance while minimizing privacy risks.
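
The generate-then-label variant described above can be sketched as a small loop: the local model writes synthetic inputs resembling the private query, the remote model labels only those, and the labeled pairs become in-context demonstrations for the local model. This is a hedged sketch under assumed interfaces; `answer_privately`, `build_icl_prompt`, and the toy generator, labeler, and student are hypothetical stand-ins for real LLM calls.

```python
from collections import Counter
from typing import Callable, List, Tuple

# Hypothetical interfaces standing in for real model calls.
Generator = Callable[[str, int], List[str]]  # local: query -> k synthetic inputs
Labeler = Callable[[str], str]               # remote: input -> label
Student = Callable[[str], str]               # local: ICL prompt -> label


def build_icl_prompt(demos: List[Tuple[str, str]], query: str) -> str:
    """Format labeled demonstrations followed by the unlabeled private query."""
    blocks = [f"Input: {x}\nLabel: {y}" for x, y in demos]
    blocks.append(f"Input: {query}\nLabel:")
    return "\n\n".join(blocks)


def answer_privately(query: str, generate: Generator, label: Labeler,
                     student: Student, k: int = 3) -> Tuple[str, List[str]]:
    """Return the student's answer and the exact texts sent to the remote model."""
    synthetic = generate(query, k)              # runs locally
    demos = [(x, label(x)) for x in synthetic]  # only synthetic inputs go remote
    prompt = build_icl_prompt(demos, query)     # private query stays local
    return student(prompt), synthetic


# Toy stand-ins for demonstration only.
def toy_generate(query: str, k: int) -> List[str]:
    pool = ["I was billed twice for one order.",
            "Please reverse a duplicate charge.",
            "How do I update my address?"]
    return pool[:k]


def toy_label(text: str) -> str:
    lowered = text.lower()
    return "refund" if "billed" in lowered or "charge" in lowered else "other"


def toy_student(prompt: str) -> str:
    # Majority vote over demonstration labels; a real student would be an LLM.
    labels = [line[len("Label: "):] for line in prompt.splitlines()
              if line.startswith("Label: ")]
    return Counter(labels).most_common(1)[0][0] if labels else "other"


query = "My card ending 4421 was charged twice, please refund it."
answer, sent = answer_privately(query, toy_generate, toy_label, toy_student)
print(answer)  # refund
```

Here the toy generator ignores the query entirely, so nothing private can leak; a real generator conditions on the private example, which is why the leakage metrics discussed next matter.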

The study also quantifies the effectiveness of its privacy-preserving techniques by introducing two concrete privacy metrics: entity leak and mapping leak. These metrics are crucial for understanding the privacy implications of the proposed cascade system. Replacing entities in the original examples with placeholders provided the strongest privacy preservation, yielding a substantially lower entity leak than the other methods.
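
As a simplified illustration, assume entity leak is measured as the fraction of a private example's sensitive entities that appear verbatim in any text sent to the remote model. The `entity_leak` helper below is hypothetical and is not the paper's exact definition; mapping leak is not modeled here.

```python
from typing import Iterable, List


def entity_leak(entities: Iterable[str], sent_texts: List[str]) -> float:
    """Fraction of private entities that appear verbatim in any text sent remotely.

    Assumed, simplified definition: exact, case-sensitive substring matching.
    """
    unique = {e for e in entities if e}  # ignore empty strings
    if not unique:
        return 0.0
    leaked = {e for e in unique if any(e in t for t in sent_texts)}
    return len(leaked) / len(unique)


entities = ["Alice Smith", "Bob", "4421"]
raw = ["Alice Smith owes Bob for card 4421."]
partial = ["Someone owes Bob money."]
placeholders = ["<ENTITY_1> owes <ENTITY_2> for card <ENTITY_3>."]

print(entity_leak(entities, raw))           # 1.0
print(entity_leak(entities, placeholders))  # 0.0
```

Under this toy definition, sending placeholder-substituted text scores zero, which mirrors the article's observation that placeholder replacement leaks the fewest entities.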

In conclusion, this research presents a promising approach to using cascade systems in machine learning while addressing the paramount issue of privacy. By integrating social learning paradigms with privacy-preserving techniques, the researchers demonstrate a way to enhance the capabilities of local models without compromising sensitive data. The results show reduced privacy risks alongside improved task performance, illustrating this methodology's potential to transform the use of LLMs in privacy-sensitive applications.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post Researchers at Google AI Innovates Privacy-Preserving Cascade Systems for Enhanced Machine Learning Model Performance appeared first on MarkTechPost.