In an era where artificial intelligence (AI) development often seems gated behind billion-dollar investments, new research promises to lower the barrier to entry. Researchers from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) and Myshell AI report that training capable large language models (LLMs) at the LLaMA2 level can be remarkably economical. Their findings suggest that a budget of about $0.1 million, a fraction of the costs incurred by giants like OpenAI and Meta, is sufficient to build models that compete with the industry’s leading offerings.

The researchers propose JetMoE-8B, an efficient model that breaks the usual cost barrier for LLMs and also outperforms more expensively trained counterparts such as Meta AI’s LLaMA2-7B. The work points to a notable shift: training high-performance LLMs, once the exclusive domain of well-funded organizations, is now within reach of a much broader range of research institutes and companies.

Democratizing AI Development

JetMoE-8B represents a paradigm shift in AI training, crafted to be both fully open-source and academia-friendly. Its reliance solely on public datasets for training and open-sourced code ensures that no proprietary resources are necessary, making it an attractive option for institutions with limited budgets. Additionally, JetMoE-8B’s architecture allows for fine-tuning on consumer-grade GPUs, further reducing the entry barriers to high-quality AI research and development.

A New Benchmark in Efficiency and Performance

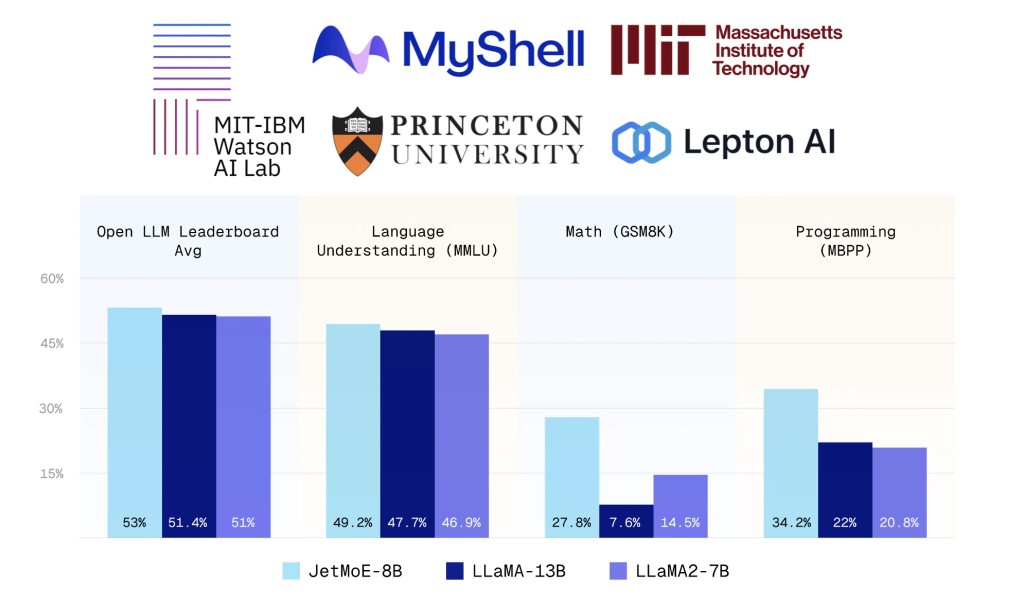

Built on a sparsely activated architecture inspired by ModuleFormer, JetMoE-8B consists of 24 blocks, each containing two types of Mixture of Experts (MoE) layers. The design totals 8 billion parameters, of which only 2.2 billion are active during inference, significantly lowering computational cost without sacrificing performance. In benchmarks, JetMoE-8B has outperformed several models trained with larger budgets and more computational resources, including LLaMA2-7B and LLaMA-13B.
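To make the idea of sparse activation concrete, here is a minimal sketch of top-k expert routing in plain Python. It is not the JetMoE implementation: the number of experts, the value of k, the toy scalar "experts", and the function names are all illustrative assumptions. Only the 8 billion total and 2.2 billion active parameter counts come from the article.

```python
import math

# Figures quoted in the article; everything else below is hypothetical.
TOTAL_PARAMS = 8.0e9
ACTIVE_PARAMS = 2.2e9
ACTIVE_FRACTION = ACTIVE_PARAMS / TOTAL_PARAMS  # share of weights used per token


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(router_logits, k):
    """Pick the k highest-scoring experts and normalize their weights."""
    ranked = sorted(range(len(router_logits)),
                    key=lambda i: router_logits[i], reverse=True)
    chosen = ranked[:k]
    weights = softmax([router_logits[i] for i in chosen])
    return list(zip(chosen, weights))


def moe_forward(x, experts, router_logits, k):
    """Evaluate only the selected experts and mix their outputs."""
    routes = top_k_route(router_logits, k)
    return sum(weight * experts[index](x) for index, weight in routes)


if __name__ == "__main__":
    # Four toy experts (assumed); a real layer would use small neural networks.
    experts = [lambda v: v, lambda v: 2 * v, lambda v: 3 * v, lambda v: 4 * v]
    logits = [0.1, 2.0, -1.0, 2.0]  # hypothetical router scores for one token
    print(top_k_route(logits, k=2))
    print(moe_forward(1.0, experts, logits, k=2))
    print(f"active fraction: {ACTIVE_FRACTION:.1%}")
```

Because unselected experts are never evaluated, compute per token scales with the active parameters rather than the total, which is the source of the savings described above.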

Cost-Effective Training

The affordability of JetMoE-8B’s training process is noteworthy. Using a 96×H100 GPU cluster for two weeks, the total cost came to approximately $0.08 million. Training followed a two-phase schedule, with a constant learning rate after linear warmup in the first phase and exponential learning rate decay in the second, over a corpus of 1.25 trillion tokens drawn from open-source datasets.
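The figures above can be checked with some quick arithmetic, and the shape of the schedule can be sketched in a few lines. The snippet below assumes that "two weeks" means 14 days of continuous use on all 96 GPUs. The `learning_rate` function and every hyperparameter passed to it are hypothetical, chosen only to illustrate a warmup, constant, then exponential-decay curve. They are not the values used by the researchers.

```python
import math

# Figures from the article.
GPUS = 96
TOTAL_COST_USD = 80_000
TRAINING_TOKENS = 1.25e12

# Assumption: two weeks = 14 days of round-the-clock use.
DAYS = 14

gpu_hours = GPUS * DAYS * 24                    # 32,256 GPU-hours
implied_rate = TOTAL_COST_USD / gpu_hours       # roughly $2.48 per GPU-hour
tokens_per_gpu_hour = TRAINING_TOKENS / gpu_hours


def learning_rate(step, peak_lr, warmup_steps, constant_steps, decay_rate):
    """Hypothetical schedule: linear warmup, constant plateau, exponential decay."""
    if step < warmup_steps:
        # Phase 1a: ramp linearly up to the peak learning rate.
        return peak_lr * (step + 1) / warmup_steps
    if step < warmup_steps + constant_steps:
        # Phase 1b: hold the peak learning rate.
        return peak_lr
    # Phase 2: decay exponentially from the peak.
    steps_into_decay = step - (warmup_steps + constant_steps)
    return peak_lr * math.exp(-decay_rate * steps_into_decay)


if __name__ == "__main__":
    print(f"GPU-hours: {gpu_hours:,}")
    print(f"Implied cost per GPU-hour: ${implied_rate:.2f}")
    print(f"Tokens per GPU-hour: {tokens_per_gpu_hour:,.0f}")
    for step in (0, 9, 12, 15, 25):
        print(step, learning_rate(step, 1.0, 10, 5, 0.1))
```

The implied hourly rate is only a back-of-the-envelope figure. Actual pricing depends on the provider and on how much of the two weeks the cluster was in use.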

Key Takeaways:

JetMoE-8B challenges the conventional belief that high-quality LLM training necessitates massive financial investments, demonstrating that it can be achieved with as little as $0.1 million.

Its fully open-source nature and minimal computational requirements during fine-tuning make JetMoE-8B accessible to a wide array of research bodies and companies.

Despite its lower cost and computational footprint, JetMoE-8B delivers superior performance compared to models trained with significantly larger budgets.

JetMoE democratizes access to high-performance LLMs, paving the way for more inclusive and widespread AI research and development.

The breakthrough represented by JetMoE-8B signals a significant democratization of AI technology, potentially catalyzing a wave of innovation from a more diverse set of contributors than ever before.

Check out the HF Page, GitHub, and Demo. All credit for this research goes to the researchers of this project.


The post Myshell AI and MIT Researchers Propose JetMoE-8B: A Super-Efficient LLM Model that Achieves LLaMA2-Level Training with Just US $0.1M appeared first on MarkTechPost.
