In machine learning, aligning language models (LMs) to interact appropriately with multimodal data such as videos has been a persistent challenge. The crux of the issue lies in developing a robust reward system that can distinguish preferred responses from less desirable ones when the input is a video. Hallucinations compound the problem: because alignment data across modalities is scarce, models can generate misleading or factually inconsistent content.

While recent advances in reinforcement learning and direct preference optimization (DPO) have proven effective in guiding language models toward more honest, helpful, and harmless content, their effectiveness in multimodal contexts has been limited. A critical obstacle is the difficulty of scaling human preference data collection, which, although invaluable, is both costly and labor-intensive. Existing approaches for distilling preferences from image data also scale poorly to video, since a video requires analyzing many frames rather than one, which significantly increases the complexity of the data.

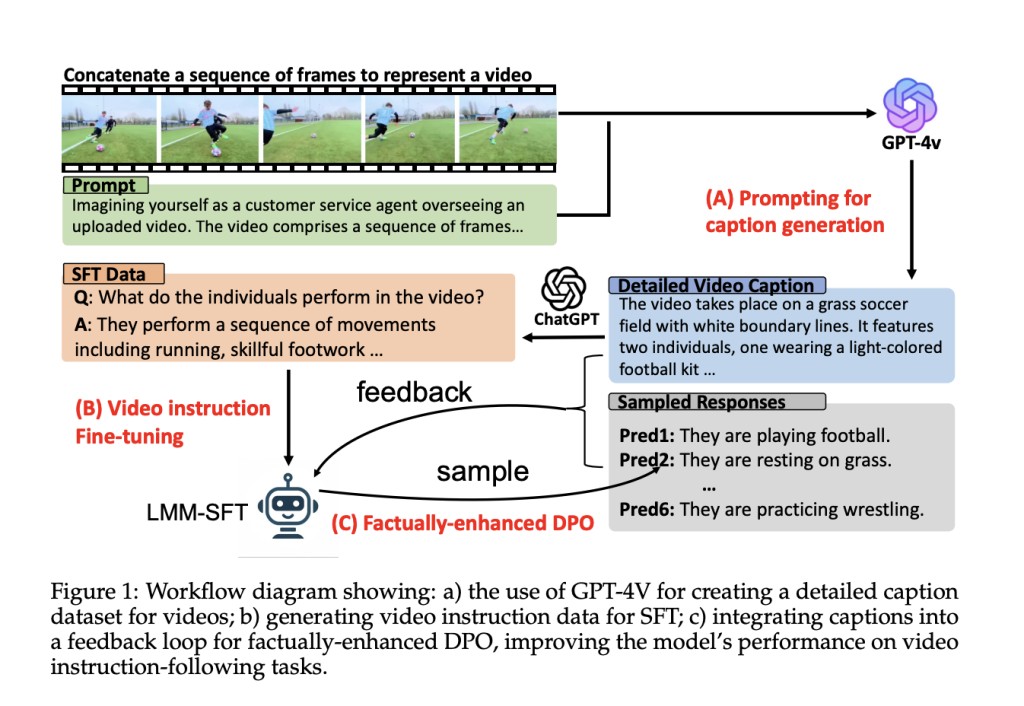

To address these challenges, the researchers introduced a cost-effective reward mechanism designed to reliably evaluate the quality of responses generated by video language models (VLMs). The key innovation is the use of detailed video captions as proxies for the actual video frames. Given a caption, a language model can assess the factual accuracy of a VLM’s response to a video-related question and detect potential hallucinations. The language model then returns natural language feedback along with a numerical reward score, so no video frames need to be processed at evaluation time.
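To make the idea concrete, here is a minimal Python sketch of a caption-as-proxy reward call. It is an illustration under stated assumptions, not the researchers' implementation: the prompt wording, the 1 to 5 score range, and the names `build_judge_prompt`, `parse_score`, `caption_reward`, and `toy_judge` are all hypothetical, and the judge is passed in as a plain callable so that any language model could be plugged in.

```python
import re
from typing import Callable, Optional, Tuple

# Hypothetical prompt wording; the article does not give the actual prompt.
PROMPT_TEMPLATE = (
    "You are given a detailed caption describing a video.\n"
    "Caption: {caption}\n"
    "Question: {question}\n"
    "Model answer: {answer}\n"
    "Explain whether the answer is consistent with the caption, then "
    "finish with a line 'Score: N' where N is an integer from 1 to 5."
)


def build_judge_prompt(caption: str, question: str, answer: str) -> str:
    """Fill the template; the caption stands in for the video frames."""
    return PROMPT_TEMPLATE.format(caption=caption, question=question, answer=answer)


def parse_score(feedback: str, lo: int = 1, hi: int = 5) -> Optional[int]:
    """Extract the numeric reward; return None if missing or out of range."""
    match = re.search(r"Score:\s*(\d+)", feedback)
    if match is None:
        return None
    score = int(match.group(1))
    return score if lo <= score <= hi else None


def caption_reward(
    caption: str, question: str, answer: str, judge: Callable[[str], str]
) -> Tuple[str, Optional[int]]:
    """Return (natural-language feedback, numeric score) from the judge LM."""
    feedback = judge(build_judge_prompt(caption, question, answer))
    return feedback, parse_score(feedback)


def toy_judge(prompt: str) -> str:
    """Stand-in for a real language model call (assumption for the demo)."""
    return "The answer agrees with the caption.\nScore: 5"


if __name__ == "__main__":
    feedback, score = caption_reward(
        caption="A brown dog chases a ball along a sandy beach.",
        question="What animal appears in the video?",
        answer="A dog.",
        judge=toy_judge,
    )
    print(feedback)
    print(score)
```

Returning `None` for an unparseable or out-of-range score, rather than a default value, keeps malformed judge outputs from silently entering the reward data.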

This approach depends on having high-quality video captions, which are themselves in short supply. To address that shortage, the researchers built a large video caption dataset, SHAREGPTVIDEO, using a novel prompting technique with the GPT-4V model. The dataset comprises 900k captions covering a wide range of video content, including temporal dynamics, world knowledge, object attributes, and spatial relationships.

With this caption dataset in place, the researchers verified that their caption-based reward mechanism is well-aligned with rewards generated by the more powerful but costlier GPT-4V model, which evaluates the video frames directly. Using this reward mechanism as the basis for a DPO algorithm, they trained a model called LLAVA-HOUND-DPO, which achieved an 8.1% accuracy improvement over its supervised fine-tuning (SFT) counterpart on video question-answering tasks.
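For readers unfamiliar with DPO, the following Python sketch shows how reward scores could be turned into a preference pair and how the standard DPO loss is computed for one pair: the negative log-sigmoid of beta times the difference between the policy-versus-reference log-probability gaps of the chosen and rejected responses. The pair-selection rule, the `min_gap` threshold, the default `beta`, and the function names are assumptions for illustration; the article does not describe how the researchers built their pairs.

```python
import math
from typing import List, Optional, Tuple


def make_preference_pair(
    scored: List[Tuple[str, int]], min_gap: int = 1
) -> Optional[Tuple[str, str]]:
    """Pick (chosen, rejected) from scored responses to one question.

    Hypothetical rule: take the highest- and lowest-scored responses and
    skip the question if their scores differ by less than `min_gap`.
    """
    best = max(scored, key=lambda item: item[1])
    worst = min(scored, key=lambda item: item[1])
    if best[1] - worst[1] < min_gap:
        return None
    return best[0], worst[0]


def dpo_loss(
    policy_chosen_logp: float,
    policy_rejected_logp: float,
    ref_chosen_logp: float,
    ref_rejected_logp: float,
    beta: float = 0.1,
) -> float:
    """Standard DPO loss for a single preference pair.

    Inputs are summed token log-probabilities of each response under the
    trainable policy and the frozen reference model.
    """
    margin = beta * (
        (policy_chosen_logp - ref_chosen_logp)
        - (policy_rejected_logp - ref_rejected_logp)
    )
    # -log(sigmoid(margin)) computed in a numerically stable way.
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


if __name__ == "__main__":
    pair = make_preference_pair([("A dog on a beach.", 5), ("A cat indoors.", 1)])
    print(pair)
    print(dpo_loss(-10.0, -14.0, -11.0, -12.0))
```

The loss shrinks as the policy raises the chosen response's likelihood relative to the rejected one, measured against the reference model.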

The training pipeline has three stages: caption pre-training, supervised fine-tuning, and DPO training. Notably, the researchers found that their generated video instruction data closely matches the quality of existing video question-answering datasets, which further supports the approach.

To assess their method’s effectiveness, the researchers conducted a comparative analysis with GPT-4V as a video question-answering evaluator. The results showed a moderate positive correlation between the two reward systems, with most of the language model’s scores falling within one standard deviation of GPT-4V’s scores. Additionally, the agreement on preference between the two systems exceeded 70%, cautiously supporting the applicability of the proposed reward mechanism.
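The two statistics mentioned here, score correlation and preference agreement, can be computed along the following lines. This is a sketch on made-up toy numbers, not the researchers' evaluation code: the function names are hypothetical, Pearson correlation is assumed as the correlation measure, and questions where either judge gives a tie are skipped, which may differ from how the researchers handled ties.

```python
import math
from typing import List, Sequence, Tuple


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation between two equally long score lists."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two lists of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant scores")
    return cov / math.sqrt(var_x * var_y)


def preference_agreement(
    judge_a: List[Tuple[float, float]], judge_b: List[Tuple[float, float]]
) -> float:
    """Fraction of questions where both judges prefer the same response.

    Each entry holds one judge's scores for (response 1, response 2) to
    the same question. Ties from either judge are skipped (assumption).
    """
    agree = 0
    compared = 0
    for (a1, a2), (b1, b2) in zip(judge_a, judge_b):
        if a1 == a2 or b1 == b2:
            continue
        compared += 1
        if (a1 > a2) == (b1 > b2):
            agree += 1
    if compared == 0:
        raise ValueError("no comparable pairs")
    return agree / compared


if __name__ == "__main__":
    # Toy scores for illustration only; not data from the study.
    caption_judge = [(5, 2), (3, 4), (1, 1), (4, 2)]
    gpt4v_judge = [(4, 1), (5, 2), (2, 3), (5, 3)]
    print(preference_agreement(caption_judge, gpt4v_judge))
    print(pearson([s for p in caption_judge for s in p],
                  [s for p in gpt4v_judge for s in p]))
```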

This research presents a promising approach to improving the alignment of video language models through a cost-effective reward system based on detailed video captions. By addressing the scarcity of high-quality alignment data across modalities, the method paves the way for more accurate and truthful responses from video LMs while potentially reducing the cost and computation that alignment requires.

Check out the Paper and Github. All credit for this research goes to the researchers of this project.


The post Enhancing Video AI with Smart Caption-Based Rewards appeared first on MarkTechPost.
