Artificial intelligence (AI) is advancing rapidly, and natural language processing (NLP) has seen remarkable breakthroughs. From virtual assistants that converse fluently to language models that generate human-like text, the range of potential applications is vast. However, as these AI systems become more sophisticated, they also become increasingly complex and opaque, operating as inscrutable “black boxes”. That opacity is a cause for concern in critical domains like healthcare, finance, and criminal justice.

A team of researchers from Imperial College London has proposed a framework for evaluating explanations generated by AI systems, helping us understand the reasoning behind their decisions.

At the heart of their work lies a fundamental question: How can we ensure that AI systems are making predictions for the right reasons, especially in high-stakes scenarios where human lives or significant resources are at stake?

The researchers have identified three distinct classes of explanations that AI systems can provide, each with its own structure and level of complexity:

Free-form Explanations: These are the simplest form, consisting of a sequence of propositions or statements that attempt to justify the AI's prediction.

Deductive Explanations: Building upon free-form explanations, deductive explanations link propositions through logical relationships, forming chains of reasoning akin to a human thought process.

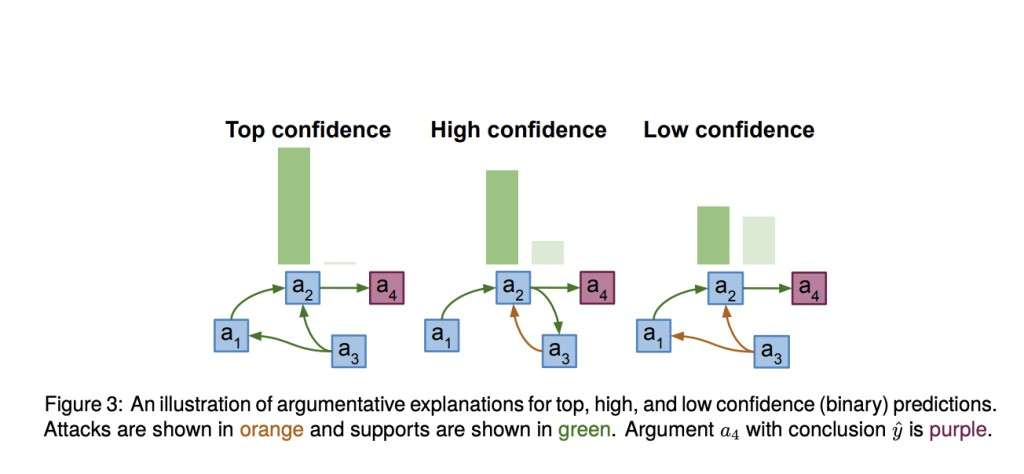

Argumentative Explanations: The most intricate of the three, argumentative explanations mimic human debates by presenting arguments with premises and conclusions, connected through support and attack relationships.
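To make the three classes concrete, here is a minimal sketch of how they might be represented as data structures. The class and field names (`FreeFormExplanation`, `inferences`, `supports`, `attacks`, and so on) are illustrative assumptions and are not taken from the paper; the example propositions are invented.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class FreeFormExplanation:
    # A plain sequence of propositions offered to justify a prediction.
    propositions: list[str]


@dataclass
class DeductiveExplanation:
    # The same propositions, plus links between them.
    propositions: list[str]
    # Each pair (premise_index, conclusion_index) says that one proposition
    # is used to derive another. The index-pair encoding is an assumption.
    inferences: list[tuple[int, int]] = field(default_factory=list)


@dataclass(frozen=True)
class Argument:
    # An argument bundles premises with the conclusion they lead to.
    premises: tuple[str, ...]
    conclusion: str


@dataclass
class ArgumentativeExplanation:
    arguments: list[Argument]
    # Pairs (source_index, target_index) between arguments.
    supports: list[tuple[int, int]] = field(default_factory=list)
    attacks: list[tuple[int, int]] = field(default_factory=list)


# Invented example: one argument is supported by a second and attacked by a third.
example = ArgumentativeExplanation(
    arguments=[
        Argument(("the trial reported a benefit",), "the treatment works"),
        Argument(("the trial had many participants",), "the trial is reliable"),
        Argument(("the trial was retracted",), "the trial is unreliable"),
    ],
    supports=[(1, 0)],
    attacks=[(2, 0)],
)
```

Each class adds structure to the previous one: free-form explanations carry only propositions, deductive explanations add inference links, and argumentative explanations add both support and attack relations.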

The researchers have laid the foundation for a comprehensive evaluation framework by defining these explanation classes. But their work doesn't stop there.

To ensure the validity and usefulness of these explanations, the researchers have proposed a set of properties tailored to each explanation class. For instance, free-form explanations are evaluated for coherence, ensuring that the propositions do not contradict one another. On the other hand, deductive explanations are assessed for relevance, non-circularity, and non-redundancy, ensuring that the chains of reasoning are logically sound and free from superfluous information.
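As a rough illustration of two of the deductive properties, the sketch below treats a deductive explanation as a directed graph of inference links. It reads non-circularity as "the graph has no directed cycle" and relevance as "every proposition contributes, directly or indirectly, to the final conclusion". Both readings, and the function names, are assumptions made for this example; the paper's formal definitions may differ.

```python
def is_non_circular(num_props, inferences):
    """Return True if the inference links contain no directed cycle."""
    successors = {i: [] for i in range(num_props)}
    for premise, conclusion in inferences:
        successors[premise].append(conclusion)

    UNSEEN, IN_PROGRESS, DONE = 0, 1, 2
    state = [UNSEEN] * num_props

    def visit(node):
        state[node] = IN_PROGRESS
        for nxt in successors[node]:
            if state[nxt] == IN_PROGRESS:
                return False  # back edge: a proposition depends on itself
            if state[nxt] == UNSEEN and not visit(nxt):
                return False
        state[node] = DONE
        return True

    return all(state[n] != UNSEEN or visit(n) for n in range(num_props))


def irrelevant_propositions(num_props, inferences, conclusion):
    """Return indices of propositions with no path to the conclusion."""
    predecessors = {i: [] for i in range(num_props)}
    for premise, derived in inferences:
        predecessors[derived].append(premise)

    reached = {conclusion}
    stack = [conclusion]
    while stack:
        node = stack.pop()
        for premise in predecessors[node]:
            if premise not in reached:
                reached.add(premise)
                stack.append(premise)
    return sorted(set(range(num_props)) - reached)


# Propositions 0 and 1 both lead to conclusion 2; proposition 3 is never used.
links = [(0, 2), (1, 2)]
print(is_non_circular(4, links))             # True
print(irrelevant_propositions(4, links, 2))  # [3]
print(is_non_circular(2, [(0, 1), (1, 0)]))  # False
```

A graph-based check like this only looks at the structure of the explanation. Whether each individual inference step is actually valid is a separate question that it does not address.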

Argumentative explanations, being the most complex, are subjected to rigorous evaluation through properties like dialectical faithfulness and acceptability. These properties ensure that the explanations accurately reflect the AI system's confidence in its predictions and that the arguments presented are logically consistent and defensible.
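The article does not spell out how acceptability is defined. Purely as an illustration of what "consistent and defensible" can mean for a set of arguments, the sketch below borrows two notions from Dung-style abstract argumentation: a set is conflict-free if none of its members attack each other, and admissible if it also defends each member against every attacker. This is a swapped-in technique, not the paper's definition, and it ignores support relations entirely. The argument labels are invented.

```python
def is_conflict_free(selected, attacks):
    """True if no argument in `selected` attacks another one in it."""
    return not any(a in selected and b in selected for a, b in attacks)


def defends(selected, argument, attacks):
    """True if every attacker of `argument` is attacked by some member of `selected`."""
    attackers = {a for a, b in attacks if b == argument}
    return all(
        any((defender, attacker) in attacks for defender in selected)
        for attacker in attackers
    )


def is_admissible(selected, attacks):
    """Conflict-free, and every member is defended by the set itself."""
    return is_conflict_free(selected, attacks) and all(
        defends(selected, argument, attacks) for argument in selected
    )


# Invented example: b attacks a, and c attacks b.
attacks = {("b", "a"), ("c", "b")}

print(is_admissible({"a", "c"}, attacks))  # True: c defends a against b
print(is_admissible({"a"}, attacks))       # False: nothing answers b
print(is_admissible({"a", "b"}, attacks))  # False: b attacks a
```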

But how do we quantify these properties? The researchers have devised ingenious metrics that assign numerical values to the explanations based on their adherence to the defined properties. For example, the coherence metric (Coh) measures the degree of coherence in free-form explanations, while the acceptability metric (Acc) evaluates the logical soundness of argumentative explanations.
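The article does not give the formulas behind these metrics. As a hedged sketch of how a coherence score could be computed, the code below scores a free-form explanation as the fraction of proposition pairs that do not contradict each other. Both the formula and the `toy_contradicts` detector are assumptions for illustration only. A real system would need a proper contradiction detector, for example a natural language inference model, in place of the string-matching stand-in.

```python
from itertools import combinations


def coherence_score(propositions, contradicts):
    """Fraction of proposition pairs that are not mutually contradictory.

    `contradicts` is any function taking two propositions and returning
    True when they contradict each other.
    """
    pairs = list(combinations(propositions, 2))
    if not pairs:
        return 1.0  # zero or one proposition cannot contradict itself here
    conflicting = sum(1 for a, b in pairs if contradicts(a, b))
    return 1.0 - conflicting / len(pairs)


def toy_contradicts(a, b):
    # Hypothetical stand-in: "not X" contradicts "X". Real text needs more.
    return a == f"not {b}" or b == f"not {a}"


explanation = [
    "the drug is safe",
    "not the drug is safe",
    "the trial was large",
]

# Three pairs in total, one of which is contradictory.
score = coherence_score(explanation, toy_contradicts)
print(round(score, 3))  # 0.667
```

A pairwise score like this is easy to interpret (1.0 means no contradictions were found) but it cannot detect inconsistencies that only appear when three or more propositions are combined.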

This research matters because trust in AI systems depends on being able to inspect their reasoning. By providing a framework for evaluating the quality and human-likeness of AI-generated explanations, the work takes a step towards building that trust. Imagine a future where AI assistants in healthcare can not only diagnose illnesses but also provide clear, structured explanations for their decisions, allowing doctors to scrutinize the reasoning and make informed choices.

Moreover, this framework has the potential to foster accountability and transparency in AI systems, ensuring that they are not perpetuating biases or making decisions based on flawed logic. As AI permeates more aspects of our lives, such safeguards become paramount.

The researchers have set the stage for further advancements in explainable AI, inviting collaboration from the broader scientific community. With continued effort and innovation, we may one day unlock the full potential of AI while maintaining human oversight and control – a testament to the harmony between technological progress and ethical responsibility.

Check out the Paper. All credit for this research goes to the researchers of this project.


The post To Unveil the AI Black Box: Researchers at Imperial College London Proposes a Machine Learning Framework for Making AI Explain Itself appeared first on MarkTechPost.
