In artificial intelligence, researchers continually look for models that generate code accurately and efficiently. Such models support a wide range of applications, from automating software development tasks to assisting programmers in their day-to-day work. However, many existing models are large and resource-intensive, which makes them difficult to deploy and use in practical settings.

Large-scale models such as Jamba already address this need. Jamba is a sophisticated generative text model that performs well on coding tasks. Its hybrid SSM-Transformer architecture, which interleaves state-space model (SSM) layers with Transformer attention layers, and its large parameter count make it a significant model in natural language processing.
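To make "hybrid SSM-Transformer" more concrete, the sketch below labels each layer of a hypothetical stack as either an attention layer or a Mamba (SSM) layer, and its feed-forward block as either a dense MLP or a mixture-of-experts (MoE) block. The defaults (one attention layer per eight layers, MoE on every other layer) are assumptions based on the ratios AI21 Labs reported for Jamba; the offsets, the function name `layer_schedule`, and its parameters are illustrative only, and Mini-Jamba's actual configuration is not stated in this article.

```python
def layer_schedule(num_layers: int,
                   attn_period: int = 8, attn_offset: int = 4,
                   expert_period: int = 2, expert_offset: int = 1) -> list[str]:
    """Hypothetical helper: label each layer's sequence mixer and feed-forward type.

    Assumed defaults: one attention layer in every `attn_period` layers (the rest
    are Mamba/SSM layers), and an MoE feed-forward block every `expert_period` layers.
    """
    schedule = []
    for i in range(num_layers):
        # Sequence mixer: attention at a fixed offset within each period, SSM otherwise.
        mixer = "attention" if i % attn_period == attn_offset else "mamba"
        # Feed-forward: mixture-of-experts at a fixed offset, dense MLP otherwise.
        ffn = "moe" if i % expert_period == expert_offset else "mlp"
        schedule.append(f"{mixer}+{ffn}")
    return schedule


if __name__ == "__main__":
    # Print one 8-layer block: 1 attention layer, 7 Mamba layers, 4 MoE blocks.
    for index, layer in enumerate(layer_schedule(8)):
        print(index, layer)
```

Making the periods and offsets parameters rather than constants reflects the idea that a scaled-down variant could keep the same interleaving pattern while simply using fewer, narrower layers.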

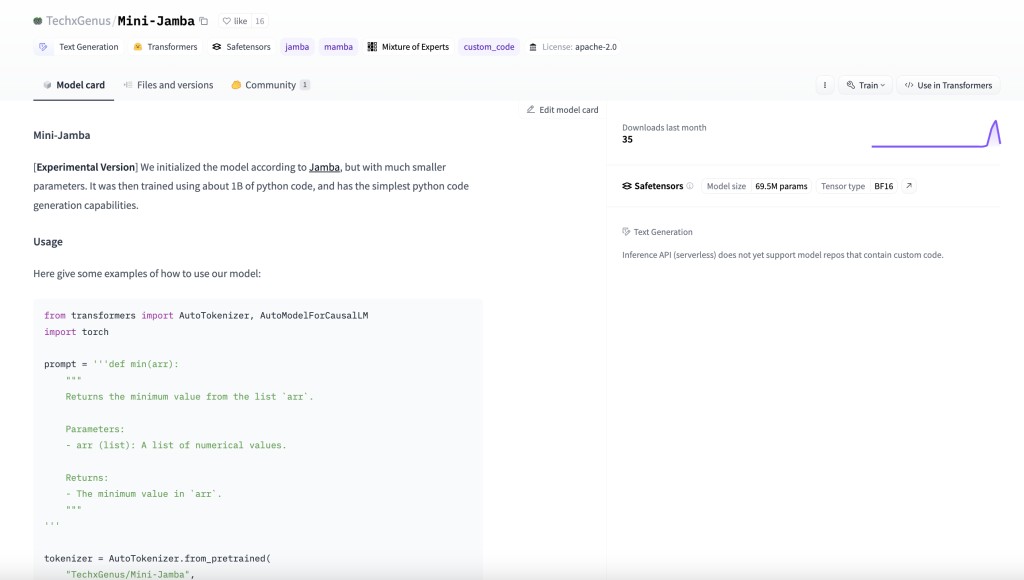

Meet Mini-Jamba, an experimental 69M-parameter version of Jamba tailored for lightweight use cases. Mini-Jamba carries over the core design of its predecessor with far fewer parameters, making it more accessible and easier to deploy in resource-constrained environments. Despite its smaller size, it can still generate Python code, although its code generation abilities are limited to simple snippets.

Although it is experimental, Mini-Jamba shows promise in generating Python code snippets. Its small parameter count gives it faster inference and lower resource consumption than larger models like Jamba. It may occasionally produce errors or struggle with non-coding tasks, but it can still be a useful tool for developers looking for a lightweight option for code generation.

Mini-Jamba's efficiency comes from its reduced resource footprint and faster inference. Because it has far fewer parameters, it consumes far less memory and compute than larger models, while still producing useful output on simple Python code generation tasks. This makes it a suitable choice for basic coding tasks, especially in resource-constrained environments.
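As a rough illustration of what "reduced resource footprint" means, the sketch below estimates the memory needed just to hold model weights at common numeric precisions. It assumes 69M parameters for Mini-Jamba (from the article's title) and roughly 52B total parameters for Jamba (AI21 Labs' reported figure, treated here as an assumption). The helper name `weight_memory_gb` is hypothetical, and the estimate ignores activations, attention caches, SSM state, and framework overhead.

```python
# Bytes used to store one parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4, "fp16/bf16": 2, "int8": 1}

MINI_JAMBA_PARAMS = 69_000_000      # from the article's title
JAMBA_PARAMS = 52_000_000_000       # assumption: Jamba's reported total parameter count


def weight_memory_gb(num_params: int, bytes_per_param: int) -> float:
    """Hypothetical helper: memory for model weights only, in gigabytes (1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


if __name__ == "__main__":
    for dtype, nbytes in BYTES_PER_PARAM.items():
        mini = weight_memory_gb(MINI_JAMBA_PARAMS, nbytes)
        full = weight_memory_gb(JAMBA_PARAMS, nbytes)
        print(f"{dtype:>9}: Mini-Jamba {mini:.3f} GB vs. Jamba {full:.1f} GB")
    print(f"Parameter ratio: about {JAMBA_PARAMS / MINI_JAMBA_PARAMS:.0f}x")
```

At half precision this works out to roughly 0.14 GB of weights for Mini-Jamba versus about 104 GB for Jamba, which is why the smaller model can fit on a laptop or a modest GPU. Weight memory depends on the total parameter count; the number of parameters active per token (relevant for Jamba's mixture-of-experts layers) mainly affects compute, not storage.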

In conclusion, Mini-Jamba is a step toward democratizing access to generative text models for code generation. While it may not match the performance of larger models like Jamba in every scenario, its lightweight design and basic code generation capabilities make it a useful addition to developers' and researchers' toolkits.

The post Meet Mini-Jamba: A 69M Parameter Scaled-Down Version of Jamba for Testing and Has the Simplest Python Code Generation Capabilities appeared first on MarkTechPost.
