From a young age, humans exhibit an incredible ability to recombine their knowledge and skills in novel ways. A child can effortlessly combine running, jumping, and throwing to invent new games. A mathematician can flexibly recombine basic mathematical operations to solve complex problems. This talent for compositional reasoning – constructing new solutions by remixing primitive building blocks – has proven to be a formidable challenge for artificial intelligence.

However, a multi-institutional team of researchers may have cracked the code. In a groundbreaking study to be presented at ICLR 2024, scientists from ETH Zurich, Google, and Imperial College London unveil new theoretical and empirical insights into how modular neural network architectures called hypernetworks can discover and leverage the hidden compositional structure underlying complex tasks.

Current state-of-the-art AI models like GPT-3 are remarkable, but they are also incredibly data-hungry. These models require massive training datasets to master new skills, as they lack the ability to flexibly recombine their knowledge to solve novel problems outside their training regimes. Compositionality, on the other hand, is a defining feature of human intelligence that allows our brains to rapidly build complex representations from simpler components, enabling the efficient acquisition and generalization of new knowledge. Endowing AI with this compositional reasoning capability is considered a holy grail objective in the field. It could lead to more flexible and data-efficient systems that radically generalize their skills.

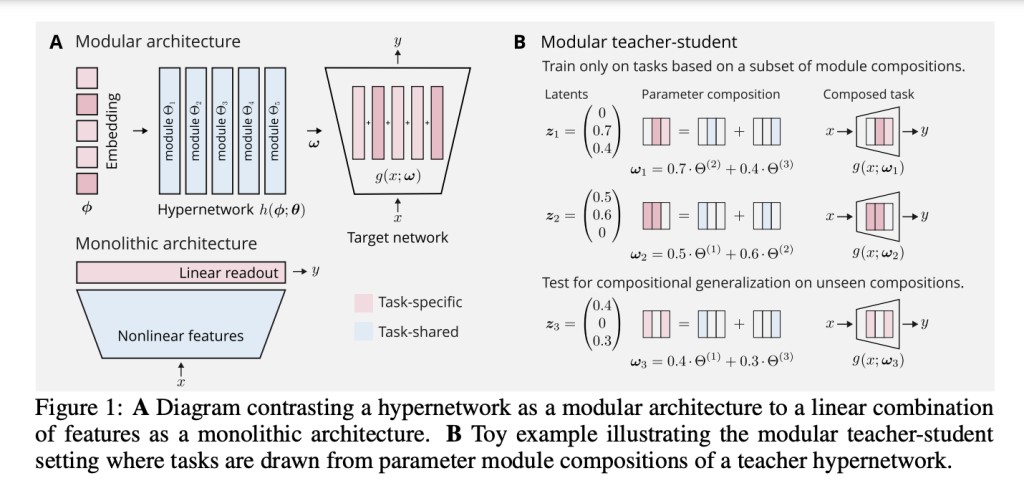

The researchers hypothesize that hypernetworks may hold the key to unlocking compositional AI. Hypernetworks are neural networks that generate the weights of another neural network through modular, compositional parameter combinations. Unlike conventional “monolithic” architectures, hypernetworks can flexibly activate and combine different skill modules by linearly combining parameters in their weight space.
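To make the idea of linearly combining parameters in weight space concrete, here is a minimal NumPy sketch. It is not the authors' implementation: the layer sizes, the single ReLU hidden layer, the shared readout, and the names `generate_weights` and `target_network` are all assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes, chosen only for illustration.
NUM_MODULES, IN_DIM, HIDDEN_DIM, OUT_DIM = 4, 3, 8, 2

# Each module is one full set of first-layer weights for the target network.
modules = rng.normal(size=(NUM_MODULES, HIDDEN_DIM, IN_DIM))
# Readout shared across all tasks (an assumption of this sketch).
readout = rng.normal(size=(OUT_DIM, HIDDEN_DIM))


def generate_weights(task_embedding: np.ndarray) -> np.ndarray:
    """Linearly combine module parameters: W(z) = sum_m z[m] * modules[m]."""
    return np.tensordot(task_embedding, modules, axes=1)


def target_network(x: np.ndarray, task_embedding: np.ndarray) -> np.ndarray:
    """Run the generated network: ReLU hidden layer followed by the readout."""
    weights = generate_weights(task_embedding)
    hidden = np.maximum(weights @ x, 0.0)
    return readout @ hidden


# A task that fully activates module 0 and half-activates module 2.
x = rng.normal(size=IN_DIM)
z = np.array([1.0, 0.0, 0.5, 0.0])
y = target_network(x, z)
```

In this sketch a new task is specified entirely by its embedding `z`; the modules themselves stay fixed, which is what lets the same parameters be reused across combinations.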

Picture each module as a specialist focused on a particular capability. Hypernetworks act as modular architects, able to assemble tailored teams of these experts to tackle any new challenge that arises. The core question is: Under what conditions can hypernetworks recover the ground truth expert modules and their compositional rules simply by observing the outputs of their collective efforts?

Through a theoretical analysis based on the teacher-student framework, the researchers derived surprising new insights. They proved that, under certain conditions on the training data, a hypernetwork student can identify the ground truth modules and their compositions – up to a linear transformation – from a modular teacher hypernetwork. The crucial conditions are:

Compositional support: All modules must be observed at least once during training, even when combined with others.

Connected support: No modules can exist in isolation – every module must co-occur with others across training tasks.

No overparameterization: The student’s capacity cannot vastly exceed the teacher’s, or it may simply memorize each training task independently.
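The first two conditions can be checked mechanically on a set of training tasks. The sketch below assumes each task is described by a binary mask of active modules and reads "connected support" as connectivity of the module co-occurrence graph. That reading is one plausible formalization of the description above, not necessarily the paper's exact definition, and the function names are hypothetical.

```python
import numpy as np


def has_compositional_support(task_masks: np.ndarray) -> bool:
    """True if every module is active in at least one training task."""
    return bool(task_masks.any(axis=0).all())


def has_connected_support(task_masks: np.ndarray) -> bool:
    """True if the module co-occurrence graph is connected.

    Two modules share an edge when some training task activates both.
    """
    num_modules = task_masks.shape[1]
    masks = task_masks.astype(int)
    cooccur = (masks.T @ masks) > 0
    # Depth-first search starting from module 0.
    visited = {0}
    frontier = [0]
    while frontier:
        node = frontier.pop()
        for neighbor in np.flatnonzero(cooccur[node]):
            if int(neighbor) not in visited:
                visited.add(int(neighbor))
                frontier.append(int(neighbor))
    return len(visited) == num_modules


# Rows are training tasks, columns are modules (1 = module active).
linked = np.array([[1, 1, 0], [0, 1, 1]])    # module 1 bridges modules 0 and 2
isolated = np.array([[1, 0, 0], [0, 1, 1]])  # module 0 never co-occurs with others
missing = np.array([[1, 1, 0]])              # module 2 is never observed
```

Here `linked` passes both checks, `isolated` passes only the first, and `missing` fails the first. The third condition concerns the student's capacity rather than the data, so it is not covered by this check.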

Remarkably, although the number of possible module combinations grows exponentially with the number of modules, the researchers showed that fitting a number of teacher tasks that grows only linearly is sufficient for the student to achieve compositional generalization to any unseen module combination.
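A quick back-of-the-envelope comparison shows the gap between exponential and linear growth. The sketch assumes modules are simply switched on or off, which is a simplification, and the chain of neighboring pairs is only an illustrative example of a linear-size task set that touches every module; it is not claimed to meet the theorem's exact requirements.

```python
def num_binary_combinations(num_modules: int) -> int:
    """Count non-empty on/off subsets of modules: 2^M - 1."""
    return 2 ** num_modules - 1


def chain_tasks(num_modules: int) -> list[tuple[int, int]]:
    """Hypothetical linear-size task set: each task pairs neighboring modules."""
    return [(m, m + 1) for m in range(num_modules - 1)]


for m in (4, 8, 16):
    print(f"{m} modules: {num_binary_combinations(m)} combinations, "
          f"{len(chain_tasks(m))} tasks in the chain")
```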

The researchers went beyond theory, conducting a series of ingenious meta-learning experiments that demonstrated hypernetworks’ ability to discover compositional structure across diverse environments – from synthetic modular compositions to scenarios involving modular preferences and compositional goals.

In one experiment, they pitted hypernetworks against established meta-learning methods such as ANIL and MAML in a sci-fi world where an agent had to navigate mazes, perform actions on colored objects, and maximize its modular “preferences.” While ANIL and MAML faltered when extrapolating to unseen preference combinations, hypernetworks flexibly generalized their behavior with high accuracy.

Remarkably, the researchers observed instances where hypernetworks could linearly decode the ground truth module activations from their learned representations, showcasing their ability to extract the underlying modular structure from sparse task demonstrations.
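One common way to test whether activations are linearly decodable is to fit a least-squares map from the learned representation to the ground truth and look at the fraction of variance explained. The sketch below uses synthetic data and a hypothetical helper, `linear_decoding_r2`; it is not the paper's evaluation code, and it reports an in-sample score for brevity.

```python
import numpy as np


def linear_decoding_r2(learned: np.ndarray, truth: np.ndarray) -> float:
    """Fit truth ~ [learned, 1] @ coef by least squares and return R^2."""
    design = np.hstack([learned, np.ones((learned.shape[0], 1))])
    coef, *_ = np.linalg.lstsq(design, truth, rcond=None)
    residual = truth - design @ coef
    centered = truth - truth.mean(axis=0)
    return float(1.0 - (residual ** 2).sum() / (centered ** 2).sum())


rng = np.random.default_rng(0)

# Synthetic ground truth: 200 tasks, 4 modules switched on or off.
truth = rng.integers(0, 2, size=(200, 4)).astype(float)

# Representation that is a linear transformation of the ground truth.
mixing = rng.normal(size=(4, 6))
learned = truth @ mixing

# Representation unrelated to the ground truth, for contrast.
unrelated = rng.normal(size=(200, 6))

r2_learned = linear_decoding_r2(learned, truth)
r2_unrelated = linear_decoding_r2(unrelated, truth)
```

A score near 1 for `learned` and near 0 for `unrelated` is the pattern one would expect if the representation encodes the module activations up to a linear transformation. In practice the fit should be evaluated on held-out tasks.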

While these results are promising, challenges remain. Overparameterization was a key obstacle: with too many redundant modules, hypernetworks simply memorized individual tasks. Scalable compositional reasoning will likely require carefully balanced architectures. Still, this work lifts part of the veil obscuring the path to artificial compositional intelligence. With deeper insights into inductive biases, learning dynamics, and architectural design principles, researchers can pave the way toward AI systems that acquire knowledge more as humans do – efficiently recombining skills to generalize far beyond their training.


The post ETH Zurich Researchers Unveil New Insights into AI’s Compositional Learning Through Modular Hypernetworks appeared first on MarkTechPost.
