Human Resource Management Systems, or HRMS, are essential for organizations. They make HR processes and tasks easier, especially during hiring. They also help maintain compliance and manage employee data, including performance evaluations. Testing an HRMS software application effectively is important. It makes sure the system

The post HRMS Testing: A Comprehensive Guide with Checklist appeared first on Codoid.

Software Engineering

I’ve got a C# framework using Playwright.NET and Reqnroll (formerly SpecFlow).

I’ve moved common steps across multiple projects into a separate project within the same solution to enable easy reuse.

I’ve referenced the binding assembly in the reqnroll.json file and the tests run fine.

All projects have the Reqnroll.NUnit NuGet package installed. I’d like to remove the package from all but the shared project – the dependency should mean that the other projects get the package from my shared project.

I’ve been able to do this with other packages, like Playwright – that’s only installed in the shared project and the other projects all get at it via the project dependencies.

But when I remove Reqnroll.NUnit, code I have in a [BeforeTestRun] hook does not run. [BeforeScenario] does but I need [BeforeTestRun] too. If I re-add the package to the project then [BeforeTestRun] works as normal. But the moment I remove it (keeping it in the shared project) then [BeforeTestRun] gets skipped.

Any idea why?

Testing an algorithm is really important. It ensures the algorithm works correctly. It also looks at how well it performs in various situations. Whether you are dealing with a sorting algorithm, a machine learning model, or a more complex one, a good testing process can find any issues before you start using it. Here’s a

The post How to Test an Algorithm appeared first on Codoid.

Exception in thread "main" org.openqa.selenium.SessionNotCreatedException: Could not start a new session. Possible causes are invalid address of the remote server or browser start-up failure.

Host info: host: 'XXXXX', ip: 'XXXXX'

Build info: version: '4.26.0', revision: '69f9e5e'

System info: os.name: 'Windows 11', os.arch: 'amd64', os.version: '10.0', java.version: '23.0.1'

Driver info: io.appium.java_client.android.AndroidDriver

Command: [null, newSession {capabilities=[Capabilities {app: C:UsersxxxxxxOneDriveDoc…, appActivity: app.superssmart.ui.MainActi…, appPackage: app.superssmart, browserName: , deviceName: emulator-5554, noReset: true, platformName: ANDROID}]}]

Capabilities {app: C:UsersxxxxOneDriveDoc…, appActivity: app.superssmart.ui.MainActi…, appPackage: app.superssmart, browserName: , deviceName: emulator-5554, noReset: true, platformName: ANDROID}

at org.openqa.selenium.remote.RemoteWebDriver.execute(RemoteWebDriver.java:563)

at io.appium.java_client.AppiumDriver.startSession(AppiumDriver.java:270)

at org.openqa.selenium.remote.RemoteWebDriver.<init>(RemoteWebDriver.java:174)

at io.appium.java_client.AppiumDriver.<init>(AppiumDriver.java:91)

at io.appium.java_client.AppiumDriver.<init>(AppiumDriver.java:103)

at io.appium.java_client.android.AndroidDriver.<init>(AndroidDriver.java:109)

at appium.test.App_Main.main(App_Main.java:29)

Caused by: java.lang.IllegalArgumentException: Illegal key values seen in w3c capabilities: [app, appActivity, appPackage, deviceName, noReset]

at org.openqa.selenium.remote.NewSessionPayload.lambda$validate$5(NewSessionPayload.java:163)

at java.base/java.util.stream.ReferencePipeline$15$1.accept(ReferencePipeline.java:580)

at java.base/java.util.stream.ReferencePipeline$15$1.accept(ReferencePipeline.java:581)

at java.base/java.util.stream.ReferencePipeline$3$1.accept(ReferencePipeline.java:215)

at java.base/java.util.stream.ReferencePipeline$15$1.accept(ReferencePipeline.java:581)

at java.base/java.util.ArrayList$ArrayListSpliterator.forEachRemaining(ArrayList.java:1709)

at java.base/java.util.stream.AbstractPipeline.copyInto(AbstractPipeline.java:570)

at java.base/java.util.stream.AbstractPipeline.wrapAndCopyInto(AbstractPipeline.java:560)

at java.base/java.util.stream.ForEachOps$ForEachOp.evaluateSequential(ForEachOps.java:151)

at java.base/java.util.stream.ForEachOps$ForEachOp$OfRef.evaluateSequential(ForEachOps.java:174)

at java.base/java.util.stream.AbstractPipeline.evaluate(AbstractPipeline.java:265)

at java.base/java.util.stream.ReferencePipeline.forEach(ReferencePipeline.java:636)

at org.openqa.selenium.remote.NewSessionPayload.validate(NewSessionPayload.java:167)

at org.openqa.selenium.remote.NewSessionPayload.<init>(NewSessionPayload.java:70)

at org.openqa.selenium.remote.NewSessionPayload.create(NewSessionPayload.java:99)

at org.openqa.selenium.remote.NewSessionPayload.create(NewSessionPayload.java:84)

at org.openqa.selenium.remote.ProtocolHandshake.createSession(ProtocolHandshake.java:60)

at io.appium.java_client.remote.AppiumCommandExecutor.createSession(AppiumCommandExecutor.java:176)

at io.appium.java_client.remote.AppiumCommandExecutor.execute(AppiumCommandExecutor.java:237)

at org.openqa.selenium.remote.RemoteWebDriver.execute(RemoteWebDriver.java:545)

… 6 more

Code:

public static AndroidDriver driver;

public static void main(String[] args) throws MalformedURLException, InterruptedException {

File appDir = new File("C:\\Users\\keert\\OneDrive\\Documents\\App");

File app = new File(appDir, "app.apk");

DesiredCapabilities cap = new DesiredCapabilities();

cap.setCapability(CapabilityType.BROWSER_NAME, "");

cap.setCapability("platformName", "Android");

cap.setCapability("app", app.getAbsolutePath());

cap.setCapability("deviceName", "emulator-5554");

cap.setCapability("appPackage", "app.superssmart");

cap.setCapability("appActivity", "app.superssmart.ui.MainActivity");

cap.setCapability("noReset", true);

driver = new AndroidDriver(new URL("http://127.0.0.1:4723/"), cap);

}
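The `Caused by` line in the trace reports that `app`, `appActivity`, `appPackage`, `deviceName`, and `noReset` are not legal W3C capability names. In the W3C WebDriver protocol, any capability outside the standard set must carry a vendor prefix, and Appium's prefix is `appium:`. The sketch below is a minimal, dependency-free illustration of that renaming. The class `CapabilityPrefixer` and its helper are hypothetical names introduced here, and the set of standard names reflects my reading of the W3C specification. It is not the Appium client's own code.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Set;

public class CapabilityPrefixer {
    // Standard W3C capability names, which must be sent without a prefix.
    static final Set<String> W3C_STANDARD = Set.of(
        "browserName", "browserVersion", "platformName", "acceptInsecureCerts",
        "pageLoadStrategy", "proxy", "setWindowRect", "timeouts",
        "strictFileInteractability", "unhandledPromptBehavior");

    // Returns a copy of the map in which every non-standard, unprefixed key
    // is renamed to "appium:<key>". Insertion order is preserved.
    static Map<String, Object> withAppiumPrefix(Map<String, Object> raw) {
        Map<String, Object> out = new LinkedHashMap<>();
        for (Map.Entry<String, Object> e : raw.entrySet()) {
            String key = e.getKey();
            boolean alreadyLegal = W3C_STANDARD.contains(key) || key.contains(":");
            out.put(alreadyLegal ? key : "appium:" + key, e.getValue());
        }
        return out;
    }

    public static void main(String[] args) {
        // The capability names and values from the post above.
        Map<String, Object> raw = new LinkedHashMap<>();
        raw.put("platformName", "Android");
        raw.put("deviceName", "emulator-5554");
        raw.put("appPackage", "app.superssmart");
        raw.put("appActivity", "app.superssmart.ui.MainActivity");
        raw.put("noReset", true);
        withAppiumPrefix(raw).forEach((k, v) -> System.out.println(k + " = " + v));
    }
}
```

In the posted code, this would mean calling `cap.setCapability("appium:deviceName", "emulator-5554")` and likewise for the other flagged keys. Depending on the Appium server version, an `appium:automationName` capability (for example `UiAutomator2`) may also be required. Recent versions of the Appium Java client also ship options classes such as `UiAutomator2Options`, which should apply the prefix for you.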

In today’s world, where mobile devices are becoming increasingly popular, ensuring your website and its various user interfaces and elements are accessible and functional on all screen sizes is more important than ever. Responsive web design (RWD) allows your website to adapt its layout to the size of the screen it is being viewed on,

The post Best Practices for Responsive Web Design appeared first on Codoid.

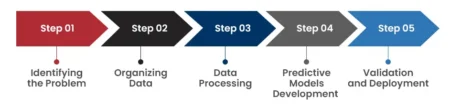

The blog discusses how predictive data analytics transforms quality assurance by enabling businesses to anticipate software issues and optimize testing. Leveraging AI, ML, and robust data models empowers QA teams to detect patterns, improve test case efficiency, and prioritize tasks for faster releases and superior outcomes.

The post How Predictive Data Analytics Transforms Quality Assurance first appeared on TestingXperts.

I’m currently working on a project that involves multiple services (some distributed across a cluster like Elasticsearch) running inside Docker containers. The whole system can be started through a docker-compose file with custom setup if required. To validate these services against specific requirements, I’ve set up a separate test project.

We’ve developed a comprehensive test suite to ensure the system functions as expected. However, one challenge remains: collecting logs from individual or multiple Docker services for a given test case. Ideally, I’d like to save the logs generated during each test run in the corresponding test results.

Upon researching common testing frameworks and practices, I identified similar scenarios:

Before running a test, reset the service’s logs

After completing a test, collect the logs

I’ve explored various possible solutions, including:

Using Docker Testcontainers: While this approach is promising, it requires custom-built services and integrating them, which seems overly complex.

Resetting all containers to clear their logs: This method is impractical due to the significant time it takes, given the large number of different services involved.

Truncating logs: Stopping a container, truncating its logs, and then restarting it works but can be slow.

Rotating logs: Could this be achieved through Docker or by utilizing a central log service?

I’m confident that others have encountered similar issues in the past. I would greatly appreciate any suggestions or ideas on how to address this problem.
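One option that avoids resetting or truncating logs is to leave them alone and read only the time window of each test, using the `--since` and `--until` flags of `docker logs`. The sketch below shows that idea under several assumptions: the `docker` CLI is on the PATH, the containers use a logging driver that `docker logs` can read, and the container name `elasticsearch` is only a placeholder. `DockerLogWindow`, `logsCommand`, and `collect` are hypothetical names.

```java
import java.io.File;
import java.io.IOException;
import java.time.Instant;
import java.time.temporal.ChronoUnit;
import java.util.List;

public class DockerLogWindow {
    // Builds: docker logs --timestamps --since <start> --until <end> <container>
    // The start is rounded down and the end rounded up to whole seconds,
    // so the window never cuts off lines at either edge of the test.
    static List<String> logsCommand(String container, Instant start, Instant end) {
        String since = start.truncatedTo(ChronoUnit.SECONDS).toString();
        String until = end.plusSeconds(1).truncatedTo(ChronoUnit.SECONDS).toString();
        return List.of("docker", "logs", "--timestamps",
                       "--since", since, "--until", until, container);
    }

    // Runs the command and writes stdout and stderr of the container to target.
    static int collect(List<String> cmd, File target)
            throws IOException, InterruptedException {
        Process p = new ProcessBuilder(cmd)
            .redirectErrorStream(true)
            .redirectOutput(target)
            .start();
        return p.waitFor();
    }

    public static void main(String[] args) {
        Instant start = Instant.now();
        // ... the test case would run here ...
        Instant end = Instant.now();
        // Only prints the command; call collect(...) from a test hook to save the logs.
        System.out.println(String.join(" ", logsCommand("elasticsearch", start, end)));
    }
}
```

In a test framework, `start` would be recorded in a before-test hook and `collect` called in the after-test hook, once per service of interest, with the output file stored next to the test result. Clock skew between the test host and the Docker host would shift the window, so the two should share a time source.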

The code review process is crucial in Software Development, guided by a checklist to ensure the software is high quality and meets standards. During the review, one or more team members examine the source code closely, looking for defects and verifying that it follows coding guidelines. This practice improves the entire codebase by catching bugs

The post The Importance of Code Reviews: Tips and Best Practices appeared first on Codoid.

Generative AI is becoming the new norm. It is widely used and increasingly accessible to the public through platforms like ChatGPT and Meta AI, the latter of which appears in apps like WhatsApp, Instagram, and Messenger.

Fundamentally, these models are transformers that break sentences into tokens and predict the next word, yet their implications and applications are vast. However, GPT models currently lack human-like understanding, which can cause reliability and other issues. Given their capabilities, and with the new trend of agentic AI on the rise, a well-defined testing approach is increasingly important.

I wanted to ask:

What patterns or testing strategies are you following beyond the basic ones?

What is your approach to identifying and fixing issues in the areas below? Do you follow a checklist?

AI Hallucination

Fairness and Bias

Security & Ethical Issue

Coherence and relevance

Robustness and Reliability

Explainability and Interpretability

Any others you have identified

Here are some of my observations:

Example 1: AI Hallucination

Issue: Generating factually incorrect or nonsensical outputs. The response contains data that is not reliable, even though it sounds plausible or true.

Solution: Fact-checking, Human-in-the-loop, Prompt engineering, Training data quality, Model fine-tuning, Post-processing

Example 2: Bias and Fairness

Issue: Generating outputs that, because of the training data, unfairly favor certain groups.

Solution: Bias audits, Fairness metrics, Diverse training data

Example 3: Adherence to Instructions

Issue: With tools like Meta AI agents and similar agents in Salesforce, we need to check whether the response adheres to the instructions, as it sometimes fails to follow the guidelines and guardrails.

Solution: It might be an issue with the instruction itself, but we need to go back to basics and test against each instruction to check whether it is followed.

This can become tedious. Is there an alternative?

Example 4: Responses Outside Knowledge Article Boundaries

Issue: GPT models used as chatbots with a set of knowledge articles sometimes provide results outside the set of knowledge articles as a reference.

Solution: Coherence metrics, Prompt design, Feedback

Example 5: Chain of Thought

Issue: In some cases, the generative AI assumes continuity with earlier conversations within the window period, which might cause unnecessary references.

Solution: There should be instructions to cross-verify and provide a note.

Most of these issues can be addressed with effective prompt engineering. However, I am curious about your methods for tackling these issues and any observations you have made.
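For Example 3, one way to make "test against each instruction" less tedious is to encode each instruction as a named, automated check and run every response through the full set. The sketch below is only an illustration of that pattern. The class `InstructionChecks`, the three rules, and the `KA-123` style article ID are all hypothetical, and simple string checks like these cover only instructions that can be verified mechanically.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Predicate;

public class InstructionChecks {
    // Returns the names of all rules that the response does not satisfy.
    static List<String> violations(String response, Map<String, Predicate<String>> rules) {
        List<String> failed = new ArrayList<>();
        rules.forEach((name, rule) -> {
            if (!rule.test(response)) {
                failed.add(name);
            }
        });
        return failed;
    }

    // Hypothetical instructions, one predicate per guideline given to the agent.
    static Map<String, Predicate<String>> exampleRules() {
        Map<String, Predicate<String>> rules = new LinkedHashMap<>();
        rules.put("stays under 50 words", r -> r.trim().split("\\s+").length <= 50);
        rules.put("cites a knowledge article", r -> r.matches("(?s).*\\bKA-\\d+\\b.*"));
        rules.put("gives no legal advice", r -> !r.toLowerCase().contains("legal advice"));
        return rules;
    }

    public static void main(String[] args) {
        String response = "You can reset your password from the login page.";
        // prints [cites a knowledge article]
        System.out.println(violations(response, exampleRules()));
    }
}
```

Running the same rule set over a batch of recorded prompts and responses gives a per-instruction failure count, which helps separate a badly worded instruction from a model that ignores a clear one. Instructions about tone or factual grounding would still need human review or a separate evaluation step.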

The blog discusses the transformative capabilities of DevOps consulting services in accelerating product development, enhancing collaboration, and improving software quality. Learn how customized DevOps pipelines, automation, and continuous improvement drive digital evolution and keep organizations competitive in a fast-paced landscape.

The post DevOps Consulting: The Catalyst for Digital Evolution  first appeared on TestingXperts.

I downloaded a password-protection program, FolderLock, from FileHippo (FH) as a supposed .exe. Jotti flagged it for a good deal of malware and adware, so for giggles I ran it through VirusTotal, where about half the engines flagged it, some with ‘FileHippo’ in the detection name. I say ‘supposed’ because the download ends with .exe, and File Explorer does say it’s an application, but the description is “FH Manager.”

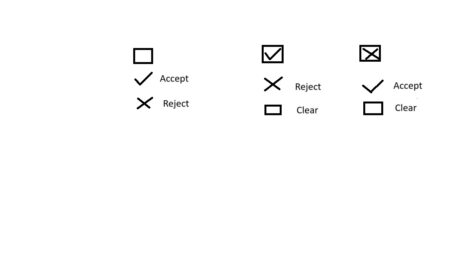

I am testing an application with the NVDA screen reader. There is a checkbox; when I click it, a drop-down is displayed with two options: 1. Accept 2. Reject.

If I select Accept, tab to the next element, come back to the checkbox, and press Enter, the drop-down shows the options 1. Reject 2. Clear.

My question: when the screen reader focuses on the checkbox, what should the announcement be? And what should be announced when I tab to each option and when I select Accept?

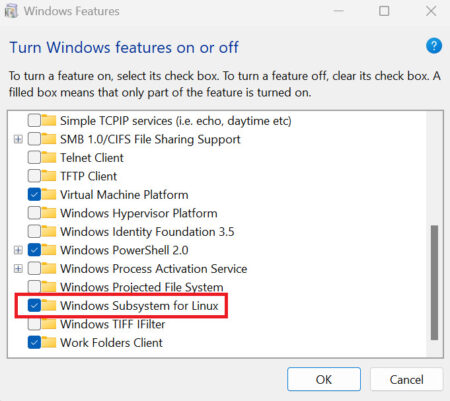

1. What is WSL2? Windows Subsystem for Linux 2 (WSL2) runs a full Linux kernel, built by Microsoft, that allows you…

In today’s digital world, eLearning services and Learning Management Systems (LMS) are crucial for schools and organizations. Testing these systems ensures users have a seamless learning experience. It focuses on evaluating usability, performance, security, and accessibility while ensuring the system meets diverse learning needs. This comprehensive testing is essential to guarantee that the LMS functions

The post What is LMS Testing? Explore Effective Strategies appeared first on Codoid.

I’ve got a C# framework using Playwright.NET and Reqnroll (formerly SpecFlow).

I’ve moved common steps across multiple projects into a NuGet package to enable easy reuse. They used to be in a separate project within the same solution. But now they’re in the NuGet package project in a separate repo.

I’ve referenced the binding assembly in the reqnroll.json file and the tests run fine. However, I can’t seem to use F12 to jump to the external steps anymore. Steps held within the project still work fine with F12. This all worked fine when the shared steps were in the separate project within the same solution.

Any ideas on how I can resolve this? I’ve changed the NuGet package to include symbols and source. As said the tests run fine, but it’d be handy to be able to jump to the step like before.

Test Guild – Automation Testing Tools Community

Leveraging AI and Playwright for Test Case Generation

Two BIG trends in the software testing space over the past few years have been AI automation testing and Playwright. In case you don’t know, in a previous post I showed how Playwright has recently surpassed Cypress.io in downloads, making it a top choice for developers looking to automate their testing. And looking at Microsoft Playwright on GitHub

You’re reading Leveraging AI and Playwright for Test Case Generation, originally posted on Test Guild – Automation Testing Tools Community – and copyrighted by Joe Colantonio

This blog discusses how ISO 20022 can transform global banking, offering enriched data and streamlined payments. It also highlights key compliance and security challenges, including data integrity, system upgrades, and regulatory requirements. Learn best practices for testing, automation, and enhancing payment systems to ensure smooth adoption. Explore strategies to safeguard financial data and meet global standards while embracing the future of banking with ISO 20022.

The post Key ISO 20022 Compliance & Security Insights for Banking Sector first appeared on TestingXperts.

Test Guild – Automation Testing Tools Community

6 Must Run Performance Tests for Black Friday

When it comes to e-commerce, Black Friday is the ultimate test of endurance. It’s one of those days of the year, along with Cyber Monday, when traffic spikes, sales skyrocket, and the pressure is on to deliver a seamless online shopping experience. We all know the horror stories: crashed sites, abandoned carts, and customers disappearing into thin air. The

You’re reading 6 Must Run Performance Tests for Black Friday, originally posted on Test Guild – Automation Testing Tools Community – and copyrighted by Joe Colantonio

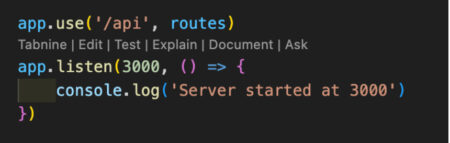

In today’s development of web applications, building RESTful APIs is crucial for effective client-server communication. These APIs enable seamless data exchange between the client and server, making them an essential component of modern web development. This blog post will show you how to build strong and scalable RESTful APIs with Node.js, a powerful JavaScript runtime

The post Building RESTful APIs with Node.js and Express appeared first on Codoid.

I am a QA/tester/test automation professional, not a software engineer. I see that companies like Google and Microsoft have very few testers, almost as good as zero. Google has very few Test Engineers, and they are likely just great software engineers who happen to focus on testing and testing infrastructure. Microsoft has a few “QA” whose job is to test hardware like Xbox and video games, where automation is not very easy. Google seems to be doing fine and ships with exceptionally few production bugs, but Microsoft is a mixed bag. Overall, their products seem good without the need for QA roles. There are other companies like Salesforce that have no QA, according to a friend who is a senior software engineer there. This trend seems to have caught on in some smaller companies (like mine), which are slowly getting rid of their QA teams.

However, I do see many companies like Amazon, Oracle, etc. that still have QA. But some of these companies are trying to move away from QA teams gradually. My guess is that they keep QA around because it’s hard to find great software engineers (SWEs) who can also test well. As time passes, SWEs will learn more about testing and get used to it, thereby reducing or eliminating the need for separate QA roles. Do we really need QA roles in the long run? I am beginning to think we don’t.

PS – It could take 10 years or less, or more. 10 is just a random guess.