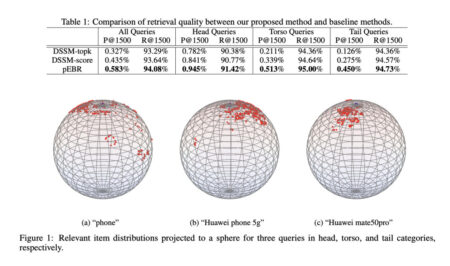

Creating a common semantic space where queries and items can be represented as dense vectors is the main goal of…
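A minimal sketch of the idea, assuming a dual-encoder setup in which queries and items have already been mapped into the same vector space by trained encoders. The toy 3-d vectors and the helper names `cosine` and `rank_items` are hypothetical and only illustrate how items are ranked by similarity to a query.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length dense vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def rank_items(query_vec, item_vecs):
    """Return item ids sorted from most to least similar to the query."""
    scores = {item_id: cosine(query_vec, vec) for item_id, vec in item_vecs.items()}
    return sorted(scores, key=scores.get, reverse=True)

# Hypothetical embeddings; a real system would produce these with trained encoders.
query = [0.9, 0.1, 0.0]
items = {
    "item_a": [1.0, 0.0, 0.0],
    "item_b": [0.0, 1.0, 0.0],
    "item_c": [0.7, 0.7, 0.0],
}
print(rank_items(query, items))  # ['item_a', 'item_c', 'item_b']
```

Because both sides live in one space, item vectors can be computed once and reused for every query; only the query needs encoding at search time.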

Machine Learning

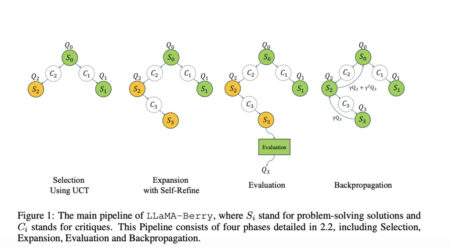

Mathematical reasoning within artificial intelligence has emerged as a focal area in developing advanced problem-solving capabilities. AI can revolutionize scientific…

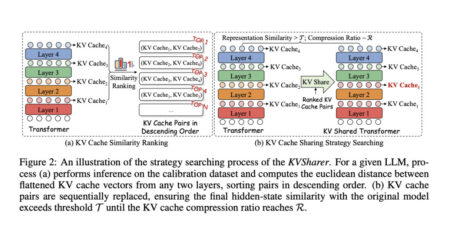

Conversational AI is now a cornerstone of technology, but achieving fast, efficient, and real-time interaction remains challenging. Latency—the delay between…

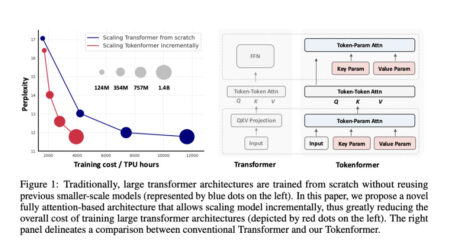

Transformers have reshaped artificial intelligence, offering unmatched performance in NLP, computer vision, and multi-modal data integration. These models excel at…

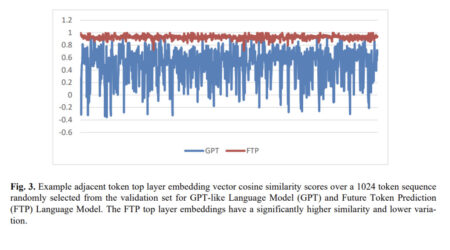

The current design of causal language models, such as GPTs, is intrinsically burdened with the challenge of semantic coherence over…

Recent advancements in Large Language Models (LLMs) have demonstrated exceptional natural language understanding and generation capabilities. Research has explored the…

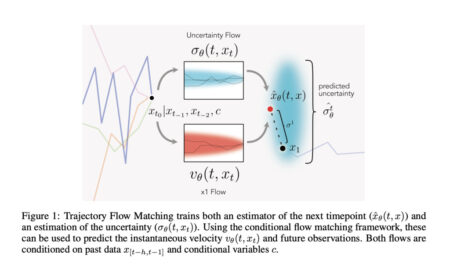

In healthcare, time series data is extensively used to track patient metrics like vital signs, lab results, and treatment responses…

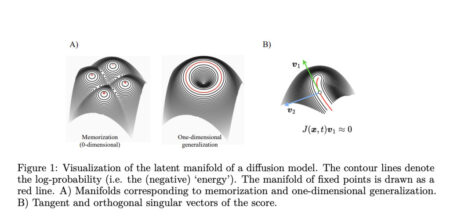

Generative diffusion models have revolutionized image and video generation, becoming the foundation of state-of-the-art generation software. While these models excel…

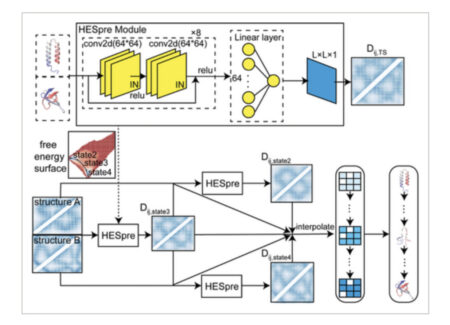

Predicting protein conformational changes remains a crucial challenge in computational biology and artificial intelligence. Breakthroughs achieved by deep learning, such…

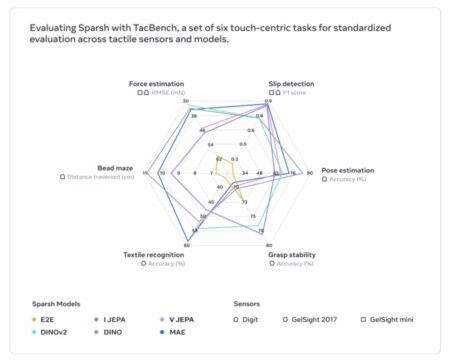

Tactile sensing plays a crucial role in robotics, helping machines understand and interact with their environment effectively. However, the current…

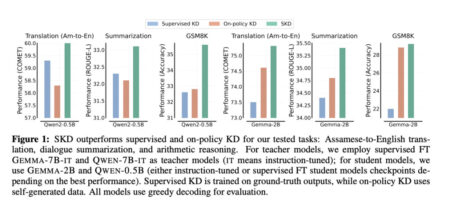

Knowledge distillation (KD) is a machine learning technique focused on transferring knowledge from a large, complex model (teacher) to a…
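The teaser does not say which distillation objective the article uses. As a hedged illustration, one widely used formulation is the soft-target loss from Hinton et al.: the teacher's and student's logits are softened with a temperature `T`, and the student is trained to match the teacher's distribution via KL divergence, scaled by `T²`. The logits below and the function names are hypothetical.

```python
import math

def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax; a higher temperature gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) on temperature-softened distributions, scaled by T^2."""
    p = softmax(teacher_logits, temperature)  # teacher soft targets
    q = softmax(student_logits, temperature)  # student predictions
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return (temperature ** 2) * kl

# Hypothetical logits for a 3-class problem.
teacher = [4.0, 1.0, 0.5]
student = [2.0, 1.5, 0.2]
print(distillation_loss(teacher, student))
```

The `T²` factor keeps gradient magnitudes comparable across temperatures; in practice this term is usually combined with ordinary cross-entropy on the true labels.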

In today’s fast-paced business world, a strong brand name is more crucial than ever. It’s the first impression you make…

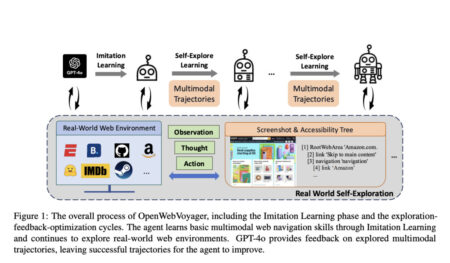

Designing autonomous agents that can navigate complex web environments raises many challenges, in particular when such agents incorporate both textual…

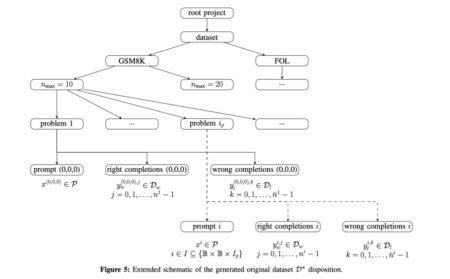

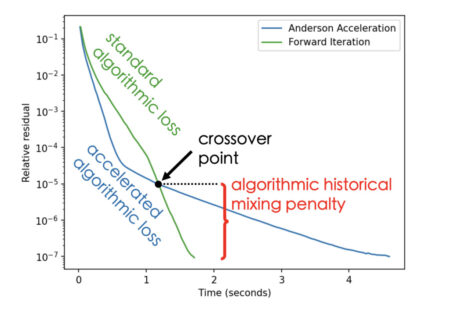

A key question about LLMs is whether they solve reasoning tasks by learning transferable algorithms or simply memorizing training data.…

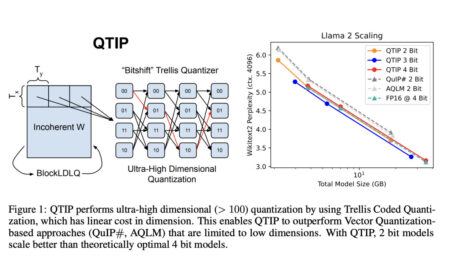

Quantization is an essential technique in machine learning for compressing model data, which enables the efficient operation of large language…
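The teaser does not specify the article's quantization scheme, so the following is only a generic sketch: symmetric per-tensor ("absmax") int8 quantization. Each weight is stored as an 8-bit integer plus one shared float scale, which cuts storage roughly fourfold compared with float32. The weight values and function names are hypothetical.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization of floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # one float scale for the whole tensor
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Map the stored integers back to approximate float values."""
    return [v * scale for v in q]

weights = [0.12, -0.5, 0.33, 0.0, 0.49]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
print(q)
# Rounding error per weight is at most scale / 2.
print(max(abs(a - b) for a, b in zip(weights, restored)))
```

The largest-magnitude weight maps to ±127, so a single outlier can inflate the scale and cost precision for every other weight; finer-grained (per-channel or per-block) scales are a common remedy.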

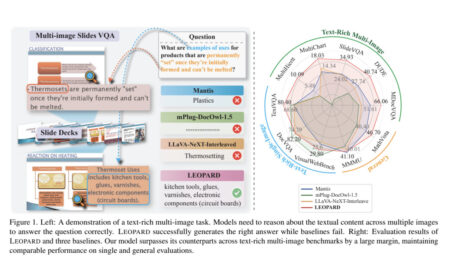

In recent years, multimodal large language models (MLLMs) have revolutionized vision-language tasks, enhancing capabilities such as image captioning and object…

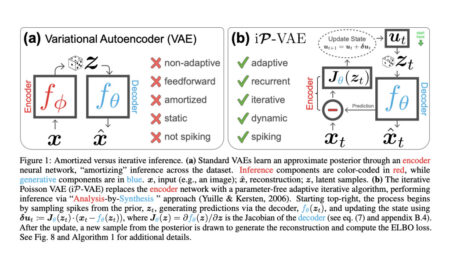

The Evidence Lower Bound (ELBO) is a key objective for training generative models like Variational Autoencoders (VAEs). It parallels neuroscience,…
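For a standard VAE, the ELBO splits into a reconstruction term and a regularizer: ELBO = E_q[log p(x|z)] − KL(q(z|x) ‖ p(z)). Assuming a diagonal Gaussian encoder q(z|x) = N(μ, diag σ²) and a standard normal prior, the KL term has the closed form ½ Σ (μ² + σ² − log σ² − 1). The sketch below assumes the reconstruction term has already been estimated elsewhere (for example by sampling z and scoring the decoder); the function names and inputs are hypothetical.

```python
import math

def gaussian_kl_to_standard_normal(mu, log_var):
    """Closed-form KL(N(mu, diag(exp(log_var))) || N(0, I)), summed over dimensions."""
    return 0.5 * sum(m * m + math.exp(lv) - lv - 1.0 for m, lv in zip(mu, log_var))

def elbo(recon_log_likelihood, mu, log_var):
    """ELBO = E_q[log p(x|z)] - KL(q(z|x) || p(z)).

    recon_log_likelihood is assumed to be a Monte Carlo estimate of the
    expected reconstruction term, computed elsewhere with the decoder.
    """
    return recon_log_likelihood - gaussian_kl_to_standard_normal(mu, log_var)

print(gaussian_kl_to_standard_normal([0.0, 0.0], [0.0, 0.0]))  # 0.0
print(elbo(-100.0, [1.0, 0.0], [0.0, 0.0]))  # -100.5
```

Training maximizes this quantity (or minimizes its negative); the KL term is zero only when the encoder output matches the prior exactly.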

In recent times, large language models (LLMs) built on the Transformer architecture have shown remarkable abilities across a wide range…

The escalation of AI research brings rising infrastructure costs. Large-scale, multidisciplinary research puts economic pressure on institutions as high-performance…

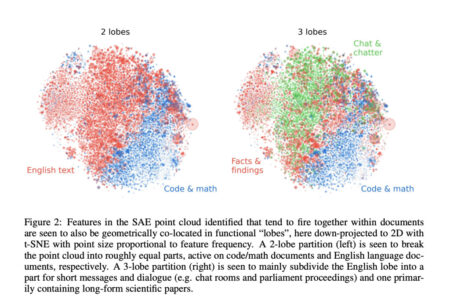

Large Language Models (LLMs) have emerged as powerful tools in natural language processing, yet understanding their internal representations remains a…